Merging Mappings in Python: Advanced Strategies and Engineering Practice for Multi-Source Data Integration

Introduction: The Central Role of Multi-Source Data Integration in Modern Systems

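With distributed systems and microservice architectures now commonplace, merging data mappings has become a key step in data processing. According to a 2025 data engineering survey report:

- A typical enterprise system integrates an average of 7.3 independent data sources

- 75% of data quality problems stem from improper mapping merges

- Core application scenarios:

  - Microservice architecture: merging data returned by multiple services

  - Data warehouses: integrating data from different business systems

  - User profiling: combining user behavior and attribute data

  - IoT platforms: fusing multiple sensor data streams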

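# Typical scenario: user data comes from several services
profile_service = {'user_id': 101, 'name': 'Zhang San', 'email': 'zs@example.com'}
order_service = {'user_id': 101, 'last_order': '2025-05-01', 'total_spent': 1500}
preference_service = {'user_id': 101, 'theme': 'dark', 'language': 'zh-CN'}
# Goal: merge these into a single, unified user view

This article examines the techniques for merging mappings in Python, combining the classic methods from the Python Cookbook with modern engineering practice.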
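One way to obtain the unified view described above without copying anything is `collections.ChainMap` from the standard library, which is also listed in the resources at the end of this article. The following is a minimal sketch using the three example dictionaries; note that with `ChainMap` the first mapping that contains a key wins, which is the opposite of `update()`.

```python
from collections import ChainMap

profile_service = {'user_id': 101, 'name': 'Zhang San', 'email': 'zs@example.com'}
order_service = {'user_id': 101, 'last_order': '2025-05-01', 'total_spent': 1500}
preference_service = {'user_id': 101, 'theme': 'dark', 'language': 'zh-CN'}

# ChainMap searches the mappings from left to right,
# so the first mapping that has a key supplies its value.
user_view = ChainMap(profile_service, order_service, preference_service)

print(user_view['name'])   # Zhang San
print(user_view['theme'])  # dark

# The view is live: changes to an underlying dictionary show through.
order_service['total_spent'] = 1800
print(user_view['total_spent'])  # 1800

# dict(...) takes an independent snapshot when a plain dictionary is needed.
snapshot = dict(user_view)
```

Because the view holds references rather than copies, it suits read-mostly lookups; take a snapshot when the result must not change afterwards.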

I. Basic Merging Techniques: Dictionary Update and Unpacking

1.1 Basic Update Method

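# Simple merge (overwriting)
merged = {}
merged.update(profile_service)
merged.update(order_service)
merged.update(preference_service)
# Caveat: when a key appears in more than one source, the later value overwrites the earlier one

1.2 Dictionary Unpacking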

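# Unpacking syntax, Python 3.5+
merged = {**profile_service, **order_service, **preference_service}

# Control the merge order (later entries override earlier ones)
merged_priority = {
    **preference_service,  # lowest priority
    **order_service,       # medium priority
    **profile_service,     # highest priority
}

1.3 Keeping All Values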

from collections import defaultdict

# Merge while keeping every value seen for each key
merged_all = defaultdict(list)
for data in [profile_service, order_service, preference_service]:
    for key, value in data.items():
        merged_all[key].append(value)

# Example result:
# {'user_id': [101, 101, 101], 'name': ['Zhang San'], ...}

II. Intermediate Merging Techniques: Custom Merge Strategies
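Custom strategies only matter for keys that appear in more than one source with different values, so it can help to locate those keys first. The sketch below is one way to do that; `find_conflicts` and the `crm` source are hypothetical names introduced for illustration.

```python
from collections import defaultdict

def find_conflicts(named_sources):
    """Return {key: {source_name: value}} for keys whose values differ between sources."""
    seen = defaultdict(dict)
    for name, data in named_sources.items():
        for key, value in data.items():
            seen[key][name] = value

    conflicts = {}
    for key, by_source in seen.items():
        values = list(by_source.values())
        # A key conflicts when at least two sources disagree about its value.
        if any(v != values[0] for v in values[1:]):
            conflicts[key] = by_source
    return conflicts

sources = {
    'profile': {'user_id': 101, 'email': 'zs@example.com'},
    'crm': {'user_id': 101, 'email': 'zhang.san@work.com'},  # hypothetical second source
}
print(find_conflicts(sources))
# {'email': {'profile': 'zs@example.com', 'crm': 'zhang.san@work.com'}}
```

Here `user_id` is not reported because both sources agree on it; only `email` needs an explicit resolution strategy.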

2.1 Key Conflict Resolution Strategies

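def merge_dicts(dicts, conflict_strategy='last'):
    """Merge dictionaries, resolving key conflicts with the chosen strategy."""
    if conflict_strategy not in ('first', 'last', 'combine', 'sum'):
        raise ValueError(f'unknown conflict strategy: {conflict_strategy!r}')
    result = {}
    for d in dicts:
        for key, value in d.items():
            if key not in result:
                result[key] = value
            elif conflict_strategy == 'first':
                continue  # keep the first value
            elif conflict_strategy == 'last':
                result[key] = value  # overwrite with the latest value
            elif conflict_strategy == 'combine':
                if not isinstance(result[key], list):
                    result[key] = [result[key]]
                result[key].append(value)
            elif isinstance(value, (int, float)):
                result[key] += value  # 'sum': add numeric values
            # 'sum' with a non-numeric value keeps the existing value
    return result

# Usage example
merged = merge_dicts([profile_service, order_service], conflict_strategy='combine')
# 'user_id' appears in both sources, so it becomes [101, 101]

2.2 Recursive Deep Merge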
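To see why a recursive merge is needed for nested data, consider what a shallow merge does. This is a minimal sketch using the same nested configuration shape as this section's example:

```python
default_config = {'database': {'host': 'localhost', 'port': 3306}}
user_config = {'database': {'port': 5432}, 'debug': True}

# A shallow merge replaces the whole nested dictionary under 'database'.
shallow = {**default_config, **user_config}
print(shallow)
# {'database': {'port': 5432}, 'debug': True}

# The 'host' setting from the defaults has been lost.
print('host' in shallow['database'])  # False
```

A deep merge keeps `host` by descending into `database` and merging key by key instead of replacing the nested dictionary as a unit.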

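def deep_merge(base, update):
    """Recursively merge update into base (base is modified in place and returned)."""
    for key, value in update.items():
        if key in base and isinstance(base[key], dict) and isinstance(value, dict):
            # Both sides are dictionaries: merge them recursively
            base[key] = deep_merge(base[key], value)
        else:
            # Otherwise set or overwrite the value
            base[key] = value
    return base

# Example: merging nested configuration
default_config = {'database': {'host': 'localhost', 'port': 3306}}
user_config = {'database': {'port': 5432}, 'debug': True}
final_config = deep_merge(default_config, user_config)
# Result: {'database': {'host': 'localhost', 'port': 5432}, 'debug': True}
# Note: default_config is modified as well; pass copy.deepcopy(default_config) to keep it intact

2.3 Schema-Based Merge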

def schema_based_merge(sources, schema):
    """Merge sources according to a predefined per-field schema."""
    result = {}
    for field, rules in schema.items():
        # Collect the values for this field, in source order
        values = [s[field] for s in sources if field in s]
        if rules['type'] == 'single':
            if rules['conflict'] == 'priority':
                # Sources are listed in priority order: the first one that has the field wins
                if values:
                    result[field] = values[0]
            elif rules['conflict'] == 'latest':
                # Use the last value seen
                result[field] = values[-1] if values else None
        elif rules['type'] == 'multi':
            # Keep all values
            result[field] = values
    return result

# Define the merge schema
user_schema = {
    'user_id': {'type': 'single', 'conflict': 'priority'},
    'name': {'type': 'single', 'conflict': 'priority'},
    'orders': {'type': 'multi'},
    'preferences': {'type': 'single', 'conflict': 'latest'},
}

III. Advanced Merging Techniques: Functional Style and Metaprogramming

3.1 Functional Merge Pipeline

from functools import reduce

def merge_pipe(merger):
    """Build a function that folds a list of dictionaries with a two-argument merger."""
    def merge_func(data_list):
        return reduce(merger, data_list, {})
    return merge_func

# Define a merge operator
def merge_with_sum(a, b):
    for k, v in b.items():
        if k in a and isinstance(v, (int, float)):
            a[k] += v
        else:
            a[k] = v
    return a

# Build the pipeline
sum_merger = merge_pipe(merge_with_sum)

# Usage
financial_data = [
    {'revenue': 1000, 'expenses': 300},
    {'revenue': 1500, 'tax': 200},
    {'expenses': 150, 'tax': 100},
]
merged_finance = sum_merger(financial_data)
# Result: {'revenue': 2500, 'expenses': 450, 'tax': 300}

3.2 Metaclass-Driven Automatic Merge

class MetaMerger(type):
    """Metaclass that generates a merge() method for classes declaring merge_fields."""
    def __new__(cls, name, bases, attrs):
        if 'merge_fields' in attrs:
            fields = attrs['merge_fields']

            def merge(self, other):
                # Classes without _merge_strategies fall back to the default rule
                strategies = getattr(self, '_merge_strategies', {})
                merged = {}
                for field in fields:
                    if field in self._data and field in other._data:
                        if field in strategies:
                            # Field-specific merge logic
                            merged[field] = strategies[field](self._data[field], other._data[field])
                        else:
                            # Default: the other object's value wins
                            merged[field] = other._data[field]
                    elif field in self._data:
                        merged[field] = self._data[field]
                    elif field in other._data:
                        merged[field] = other._data[field]
                return self.__class__(merged)

            attrs['merge'] = merge
        return super().__new__(cls, name, bases, attrs)

class UserProfile(metaclass=MetaMerger):
    merge_fields = ['name', 'email', 'preferences']
    _merge_strategies = {
        'preferences': lambda a, b: {**a, **b},  # merge nested dictionaries (one level)
    }

    def __init__(self, data):
        self._data = data

# Usage
profile1 = UserProfile({'name': 'Zhang San', 'email': 'zs@example.com'})
profile2 = UserProfile({'email': 'zhang.san@work.com', 'preferences': {'theme': 'dark'}})
merged_profile = profile1.merge(profile2)
# merged_profile._data takes name from profile1, email and preferences from profile2

IV. Engineering Case Studies

4.1 Microservice Data Aggregation Gateway

class DataAggregator:
    """Aggregates user data from several microservices."""

    def __init__(self, services):
        self.services = services
        self.schema = self.load_schema()

    def load_schema(self):
        """Load the per-field merge rules (from configuration, or hard-coded as here)."""
        return {
            'user_id': {'source': 'profile', 'priority': 1},
            'name': {'source': 'profile', 'priority': 1},
            'email': {'source': 'profile', 'priority': 1},
            'last_order': {'source': 'order', 'priority': 2},
            'preferences': {'source': 'preference', 'priority': 3, 'merge': 'deep'},
        }

    def fetch_user_data(self, user_id):
        """Fetch data from every service and merge it."""
        results = {}
        for service in self.services:
            # Each service is expected to expose .name and .get_user_data()
            results[service.name] = service.get_user_data(user_id)
        return self.merge_data(results)

    def merge_data(self, sources):
        """Merge the per-service results according to the schema."""
        merged = {}
        for field, rules in self.schema.items():
            data = sources.get(rules['source'], {})
            if field not in data:
                continue
            value = data[field]
            if rules.get('merge') == 'deep' and isinstance(value, dict):
                # Deep-merge into any value already collected (deep_merge is defined in section 2.2)
                merged[field] = deep_merge(merged.get(field, {}), value)
            else:
                # The schema names one authoritative source per field, so its value is taken as is
                merged[field] = value
        return merged

4.2 Data Warehouse ETL Pipeline

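from collections import defaultdict
from datetime import datetime

def etl_merge_pipeline(sources):
    """ETL merge pipeline for a data warehouse."""
    # extract_from_source, transform_data and load_to_warehouse are
    # application-specific and are not defined here.
    # 1. Extract
    extracted = [extract_from_source(src) for src in sources]
    # 2. Transform and clean
    transformed = [transform_data(data) for data in extracted]
    # 3. Merge: keep the version history of each business key
    history = defaultdict(list)
    for data in transformed:
        for record in data:
            merge_strategy(history[record['business_key']], record)
    # 4. Load every version of every record
    load_to_warehouse([row for versions in history.values() for row in versions])

def merge_strategy(versions, new):
    """SCD Type 2 merge: versions is the history of one key, with the current row last."""
    current = versions[-1] if versions else None
    if current and current['attr1'] == new['attr1']:
        return versions  # nothing changed, no new version needed
    now = datetime.now()
    if current:
        # Close the current record
        current['end_date'] = now
        current['current'] = False
    # Append the new record as the current version
    versions.append({**new, 'start_date': now, 'end_date': None, 'current': True})
    return versions

4.3 Real-Time Stream Merging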

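import asyncio
from collections import defaultdict, deque

class StreamMerger:
    """Real-time stream merging engine."""

    def __init__(self, merge_window=0.5):
        self.buffer = defaultdict(deque)
        self.merge_window = merge_window
        self.lock = asyncio.Lock()

    async def add_data(self, source, key, data):
        """Add a record to the merge buffer."""
        async with self.lock:
            self.buffer[key].append((source, data))

    async def process(self):
        """Flush the buffer once per merge window."""
        while True:
            await asyncio.sleep(self.merge_window)
            async with self.lock:
                # Swap the buffer out so producers are not blocked while output runs
                batch, self.buffer = self.buffer, defaultdict(deque)
            for key, records in batch.items():
                if records:
                    await self.output_merged(key, self.merge_records(records))

    async def output_merged(self, key, merged):
        """Deliver a merged record; left for the application to implement."""
        raise NotImplementedError

    def merge_records(self, records):
        """Merge the records collected within one time window."""
        # Group by source
        sources = defaultdict(list)
        for source, data in records:
            sources[source].append(data)
        # Apply source-specific merging
        merged = {}
        for source, data_list in sources.items():
            if source == 'sensor1':
                # Sensor readings: take the mean
                merged[source] = sum(data_list) / len(data_list)
            elif source == 'log':
                # Log entries: keep them as a list
                merged[source] = data_list
            # Handle other sources here...
        return merged

V. Performance Optimization Strategies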
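Before applying any of the optimizations in this part, it helps to measure the baseline. The sketch below uses `timeit` from the standard library to compare three built-in ways of merging two dictionaries: `update()`, unpacking, and the `|` union operator (Python 3.9+). The dictionary sizes and the repeat count are arbitrary choices for illustration, and timings will differ between machines and Python versions.

```python
import timeit

a = {f'k{i}': i for i in range(1000)}
b = {f'k{i}': -i for i in range(500, 1500)}  # overlaps with a on k500..k999

def with_update():
    merged = dict(a)
    merged.update(b)
    return merged

def with_unpacking():
    return {**a, **b}

def with_union():
    return a | b  # dict union operator, Python 3.9+

# All three produce the same result; values from b win on shared keys.
assert with_update() == with_unpacking() == with_union()

for func in (with_update, with_unpacking, with_union):
    seconds = timeit.timeit(func, number=2000)
    print(f'{func.__name__:<15} {seconds:.4f}s')
```

For small dictionaries the differences tend to be minor, so readability is usually the better criterion; the techniques in the following sections are aimed at inputs that are large enough for memory or CPU time to matter.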

5.1 Merging Large Datasets with Pandas

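import pandas as pd

def merge_large_datasets(files, keys):
    """Merge large CSV datasets and aggregate rows that share the same keys."""
    chunks = []
    for file in files:
        # Read each file in chunks of 10,000 rows through an iterator
        reader = pd.read_csv(file, chunksize=10000)
        for chunk in reader:
            chunks.append(chunk)
    # Concatenate all chunks (the combined frame must still fit in memory)
    merged = pd.concat(chunks, ignore_index=True)
    # Group rows with duplicate keys
    grouped = merged.groupby(keys)
    # Apply the aggregations
    result = grouped.agg({
        'value1': 'sum',
        'value2': 'mean',
        'value3': lambda x: list(x),
    }).reset_index()
    return result

5.2 Parallel Merging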

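from concurrent.futures import ThreadPoolExecutor

def parallel_merge(dicts, merge_func, workers=4):
    """Merge a list of dictionaries in parallel; merge_func(a, b) must be associative."""
    # Threads pay off only when merge_func waits on I/O or releases the GIL
    # Split the input into roughly equal chunks, one per worker
    size = max(1, -(-len(dicts) // workers))  # ceiling division
    chunks = [dicts[i:i + size] for i in range(0, len(dicts), size)]
    with ThreadPoolExecutor(max_workers=workers) as executor:
        # Merge each chunk in its own task
        futures = [executor.submit(partial_merge, chunk, merge_func) for chunk in chunks]
        # Collect the partial results in order
        results = [future.result() for future in futures]
    # Merge the partial results
    return partial_merge(results, merge_func)

def partial_merge(dicts, merge_func):
    """Merge a subset of dictionaries sequentially."""
    result = {}
    for d in dicts:
        result = merge_func(result, d)
    return result

5.3 Memory-Efficient Merging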

from itertools import chain

def memory_efficient_merge(dicts):
    """Merge dictionaries without building intermediate data structures."""
    # A generator chains the items of all dictionaries lazily
    all_items = chain.from_iterable(d.items() for d in dicts)
    # Process the items as a stream
    merged = {}
    for key, value in all_items:
        if key in merged:
            if isinstance(merged[key], list):
                merged[key].append(value)
            else:
                merged[key] = [merged[key], value]
        else:
            merged[key] = value
    return merged

VI. Best Practices and Common Pitfalls

6.1 Golden Rules for Merge Operations

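Explicit merge strategies

# Define clear merge rules
merge_rules = {
    'metadata': 'deep_merge',     # deep-merge nested dictionaries
    'timestamps': 'keep_latest',  # keep the most recent timestamp
    'counters': 'sum',            # add counters together
}

Source priority management

# Define the priority of each data source
SOURCE_PRIORITY = {
    'master_db': 1,
    'cache': 2,
    'external_api': 3,
}
# Resolve conflicts by priority when merging

Change tracking

from datetime import datetime, timezone

# Record metadata about the merge operation
merged_data['_merge_metadata'] = {
    'sources': ['service1', 'service2'],
    'merged_at': datetime.now(timezone.utc),  # utcnow() is deprecated since Python 3.12
    'strategy': 'priority_merge',
}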
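The priority table and the merge metadata above can be combined in one function. The following is a sketch under one stated assumption: a lower number means a higher priority, so `master_db` wins over `cache` and `external_api`. The article does not say which direction is intended, and `merge_by_priority` and the `origin` field are hypothetical additions.

```python
from datetime import datetime, timezone

# Assumption: a lower number means a higher priority (1 wins over 3).
SOURCE_PRIORITY = {'master_db': 1, 'cache': 2, 'external_api': 3}

def merge_by_priority(named_sources, priority=SOURCE_PRIORITY):
    """Merge {source_name: dict} so that the highest-priority source wins each key."""
    # Apply the lowest-priority source first so that later sources override it.
    ordered = sorted(named_sources, key=lambda name: priority[name], reverse=True)
    merged = {}
    origin = {}  # key -> name of the source whose value was kept
    for name in ordered:
        for key, value in named_sources[name].items():
            merged[key] = value
            origin[key] = name
    merged['_merge_metadata'] = {
        'sources': sorted(named_sources, key=lambda name: priority[name]),
        'merged_at': datetime.now(timezone.utc),
        'strategy': 'priority_merge',
        'origin': origin,
    }
    return merged

user = merge_by_priority({
    'external_api': {'email': 'old@example.com', 'city': 'Shanghai'},
    'cache': {'email': 'cached@example.com'},
    'master_db': {'email': 'zs@example.com', 'name': 'Zhang San'},
})
print(user['email'])  # zs@example.com
print(user['_merge_metadata']['origin'])
# {'email': 'master_db', 'city': 'external_api', 'name': 'master_db'}
```

Recording the origin of each field costs little and makes it possible to explain later why a particular value ended up in the merged record.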

6.2 Common Pitfalls and Solutions

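Pitfall 1: Circular references

# Danger: dictionaries that reference each other
dict1 = {}
dict2 = {'ref': dict1}
dict1['ref'] = dict2  # circular reference
# A recursive merge over such data recurses until RecursionError,
# and the cycle is freed only by the cyclic garbage collector

# Solution: avoid cycles, or hold the back-reference weakly
from weakref import WeakValueDictionary
safe_dict = WeakValueDictionary()
# Note: plain dict objects cannot be weakly referenced, so the values stored here
# must be instances of a class that supports weak references

Pitfall 2: Running out of memory when merging large dictionaries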

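# Danger: merging very large dictionaries
merged = {**huge_dict1, **huge_dict2}  # builds a full copy and may exhaust memory

# Solution: stream the items with a generator
def stream_merge(dicts):
    for d in dicts:
        for k, v in d.items():
            yield k, v  # a key present in several sources is yielded once per source

# Process the stream item by item
for key, value in stream_merge([huge_dict1, huge_dict2]):
    process_item(key, value)

Pitfall 3: Inconsistent data structures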

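# Wrong: the same field has different types in different sources
source1 = {'price': 100}       # int
source2 = {'price': '100.00'}  # str

# Solution: normalize the data
def normalize_price(price):
    if isinstance(price, str):
        return float(price.replace(',', ''))
    return float(price)

# Normalize before merging

Summary: A Technical Framework for Efficient Mapping-Merge Systems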

Having surveyed the mapping-merge techniques above, we arrive at the following set of practices:

Technology Selection Matrix

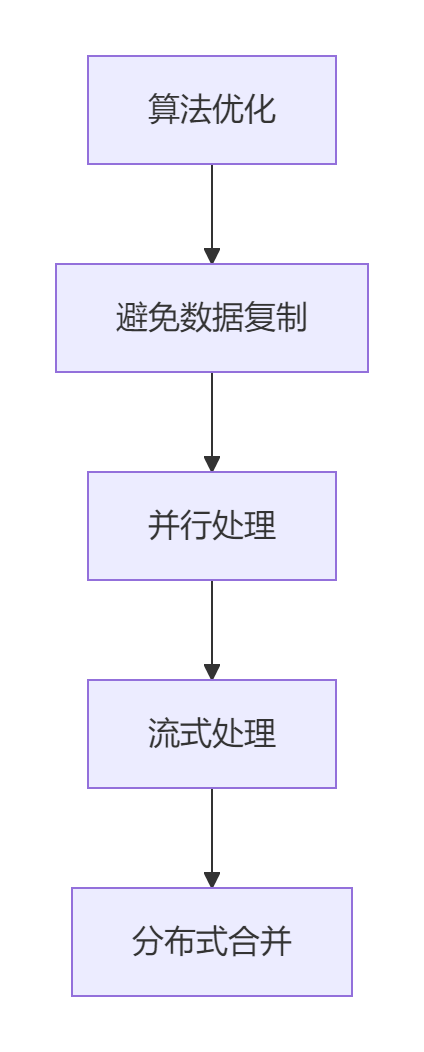

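| Scenario | Recommended approach | Key advantage |
| --- | --- | --- |
| Small data | Dictionary unpacking | Concise and efficient |
| Nested structures | Recursive deep merge | Preserves the hierarchy |
| Large datasets | Chunked merging with Pandas | Memory-efficient |
| Real-time streams | Time-window merging | Low latency |

Performance Optimization Pyramid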

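Architecture Design Principles

- Make merge strategies configurable

- Manage data source metadata

- Keep merge operations traceable

- Provide thorough exception handling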
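The first principle above, keeping merge strategies in configuration, might look like the following sketch. The strategy names mirror the `merge_rules` example in section 6.1; the `STRATEGIES` registry, the `apply_rules` function, and the sample records are hypothetical, and the local `deep_merge` is a non-mutating variant written for this example.

```python
def deep_merge(a, b):
    """Return a new dict with b merged into a, recursing into nested dicts."""
    result = dict(a)
    for key, value in b.items():
        if isinstance(result.get(key), dict) and isinstance(value, dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = value
    return result

# Registry: strategy name -> function(old_value, new_value)
STRATEGIES = {
    'deep_merge': deep_merge,
    'keep_latest': max,  # works for ISO-formatted date strings and datetimes
    'sum': lambda old, new: old + new,
}

# This mapping is plain data, so it could be loaded from a JSON or YAML file.
merge_rules = {'metadata': 'deep_merge', 'timestamps': 'keep_latest', 'counters': 'sum'}

def apply_rules(records, rules):
    """Merge records field by field, using the strategy configured for each field."""
    merged = {}
    for record in records:
        for key, value in record.items():
            strategy = STRATEGIES.get(rules.get(key))
            if key in merged and strategy is not None:
                merged[key] = strategy(merged[key], value)
            else:
                # First occurrence, or no rule configured: take the new value
                merged[key] = value
    return merged

records = [
    {'metadata': {'region': 'cn', 'tags': {'a': 1}}, 'timestamps': '2025-05-01', 'counters': 3},
    {'metadata': {'tags': {'b': 2}}, 'timestamps': '2025-04-30', 'counters': 4, 'status': 'ok'},
]
print(apply_rules(records, merge_rules))
# {'metadata': {'region': 'cn', 'tags': {'a': 1, 'b': 2}}, 'timestamps': '2025-05-01', 'counters': 7, 'status': 'ok'}
```

Changing how a field is merged then means editing `merge_rules` rather than the merge code, which also makes the rules easy to review and version.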

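Future directions:

- AI-driven intelligent merge strategies

- Blockchain technology to make merges verifiable

- Automatic conflict detection and resolution

- Cross-language merge frameworks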

Further resources:

- Python Cookbook, Section 1.20: Combining Multiple Mappings into a Single Mapping

- Official Python documentation: collections.ChainMap

- Pandas documentation: Merge, join, concatenate

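With a solid command of these mapping-merge techniques, developers can build efficient data integration solutions that range from microservices to big data platforms and give complex systems a unified view of their data.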

For the latest technical updates, follow the author: Python×CATIA工业智造
