RAGFlow 0.20.0 : Multi-Agent Deep Research

Deep Research:Agent 时代的核心能力

2025 年被称为 Agent 落地元年,在解锁的各类场景中,最有代表性之一,就是 Deep Research 或者以它为基座的各类应用。为什么这么讲? 因为通过 Agentic RAG 及其配套的反思机制,Deep Research 驱动 LLM 在用户自有数据上实现了专用的推理能力,它是 Agent 发掘深层次场景的必经之路。举例来说,让 LLM 帮助创作小说、各行业辅助决策,这些都离不开 Deep Research ,我们可以把 Deep Research 看作 RAG 在 Agent 加持下的自然升级,因为相比朴素 RAG ,Deep Research 确实可以发掘数据内部更深层次的内容,所以跟 RAG 类似 ,它本身既可以是单独的产品,也可以是其他嵌入更多行业 Know-how 的 Agent 基座。

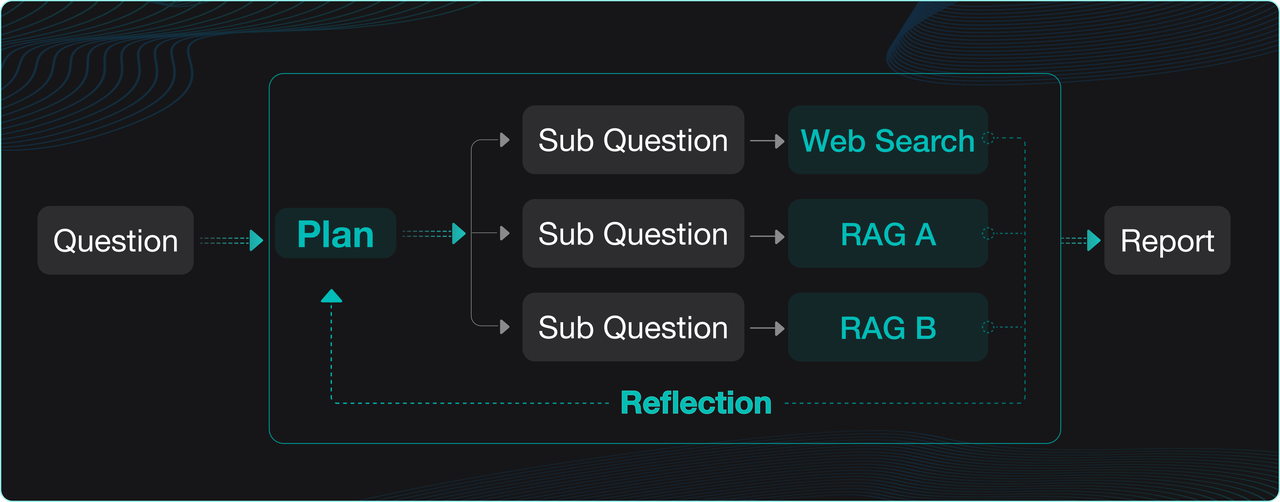

Deep Research 的工作流程大体上都如下图这样:对于用户的提问,先交给 LLM 进行拆解和规划,随后问题被发到不同的数据源,包括内部的 RAG ,也包含外网的搜索,然后这些搜索的结果再次被交给 LLM 进行反思和总结并调整规划,经过几次迭代后,就可以得到针对特定数据的思维链用来生成最终的答案或者报告。

RAGFlow 在 0.18.0 版本,就已经实现了内置的 Deep Research,不过当时更多是作为一种演示,验证和发掘推理能力在企业场景的深度应用。市面上各类软件系统,也有大量的 Deep Research 实现,这些可以分为两类:

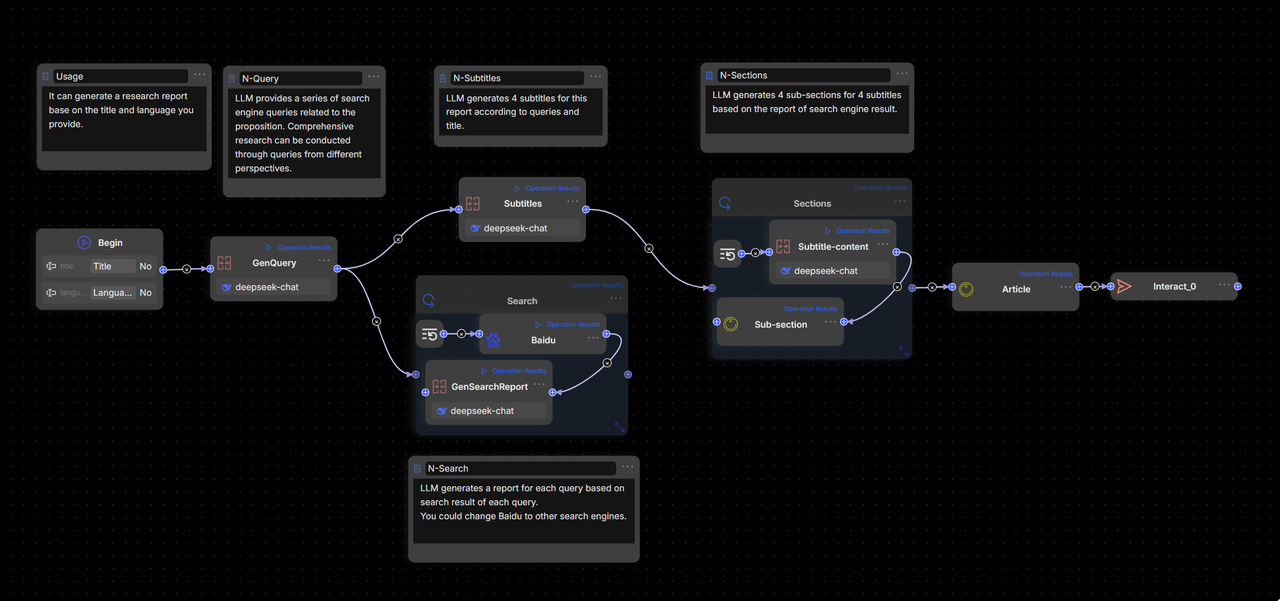

其一是在 Workflow 界面上通过无代码编排来实现。换而言之,通过工作流来实现 Deep Research。

其二是实现专门的 Agent 库或者框架,有很多类似工作,具体可以参见【文献 1】列表。

RAGFlow 0.20.0 作为一个同时支持 Workflow 和 Agentic,并且能够在同一画布下统一编排的 RAG + Agent 一体化引擎,可以同时采用这 2 种方式实现 Deep Research,但采用 Workflow 实现 Deep Research,只能说堪用,因为它的画面是这样的:

这会导致 2 个问题:

编排复杂且不直观。Deep Research 只是基础模板,尚且如此复杂,那完整的应用将会陷入蜘蛛网密布从而难以维护。

Deep Research 天然更适合 Agentic 机制,问题的分解,决策,是一个动态的过程,决策控制的步骤依赖算法,而 Workflow 的拖拉拽只能实现简单的循环机制,难以实现更加高级和灵活的控制。

RAGFlow 0.20.0 实现了完整的 Agent 引擎,但同时,它的设计目标是让多数企业能够利用无代码方式搭建出产品级的 Agent ,Deep Research 作为支撑它的最重要的 Agent 模板,必须尽可能简单且足够灵活。因此相比于目前已知方案,RAGFlow 着重突出这些能力:

采用 Agentic 机制实现,但由无代码平台驱动,它是一个可灵活定制的完整应用模板 ,而非 SDK 或者运行库。

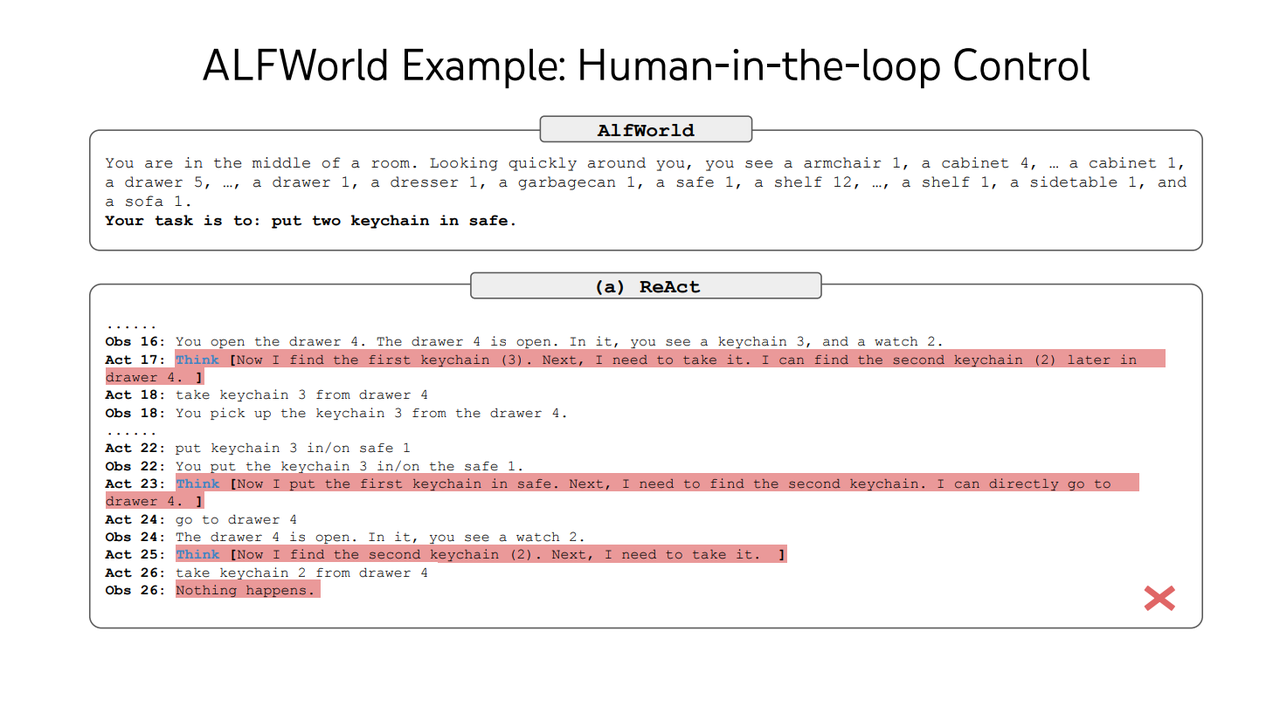

允许人工干预(后续版本提供)。Deep Research 是由 LLM 生成计划并执行的机制,天然就是 Agentic 的,但这也代表了黑箱和不确定性。RAGFlow 的 Deep Research 模板可以引入人工干预,这为不确定的执行引入了确定性,是企业级应用 Agent 的必备能力。

业务驱动和结果导向:开发者可以结合企业的业务场景灵活调整 Deep Research Agent 的架构以及可以使用的工具,例如配置内部知识库检索企业内部资料,快速为业务定制一个 Deep Research Agent。我们暴露了 Agent 所有运作流程、Agent 生成的规划以及执行结果,让开发者可以结合这些信息调整自己的提示词。

Deep Research 搭建实践

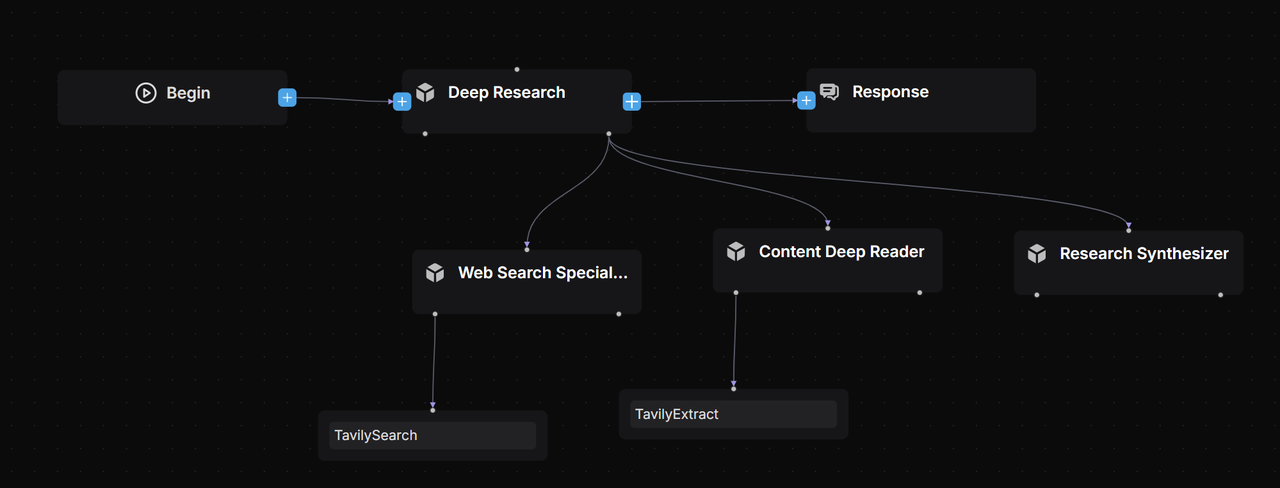

我们采用 Mult-Agent 架构【文献 2】并且通过大量的 Prompt Engineering 【文献 4】编写每个 Agent 的定位和职责边界【文献 6】:

Lead Agent:作为 Deep Research Agent 的领导者定位承担任务的规划和反思,拆解任务给 Subagent,记忆所有的工作进程的职责。

Web Search Specialist Subagent:承担信息搜索专家的定位,负责调用搜索引擎查找信息并给搜索结果评分返回最高质量信息源的 URL。

Deep Content Reader Subagent:承担网络信息阅读专家的定位,通过 Extract 工具阅读和整理搜索专家返回 URL 的正文信息作为撰写最终报告的优质素材。

Research Synthesizer Subagent:作为整合信息和生成报告的专家,可以根据 Lead Agent 的需求生成咨询公司标准的专业深度报告。

模型选择:

Lead Agent:作为规划者优先选择有强推理能力的模型,例如 DeepSeek r1,通义千问 3,Kimi2,ChatGPT o3,Claude 4,Gemini 2.5 Pro 等。

Subagent:作为执行者可以选择推理,执行效率与质量平衡的模型,同时结合其职责定位也需要将模型的 Context Window 长度作为选择标准之一。

温度参数:作为追求事实依据的场景,我们将所有模型的温度参数都调整成了 0.1。

Deep Research Lead Agent

模型选择:通义千问 Max

核心系统提示词设计摘录:

1. 通过提示词编排了 Deep Research Agent 工作流程框架和对 Subagent 的任务分配原则,对比传统工作流编排在效率和灵活度上有极大的提升。

<execution_framework>

**Stage 1: URL Discovery**

- Deploy Web Search Specialist to identify 5 premium sources

- Ensure comprehensive coverage across authoritative domains

- Validate search strategy matches research scope

**Stage 2: Content Extraction**

- Deploy Content Deep Reader to process 5 premium URLs

- Focus on structured extraction with quality assessment

- Ensure 80%+ extraction success rate

**Stage 3: Strategic Report Generation**

- Deploy Research Synthesizer with detailed strategic analysis instructions

- Provide specific analysis framework and business focus requirements

- Generate comprehensive McKinsey-style strategic report (~2000 words)

- Ensure multi-source validation and C-suite ready insights

</execution_framework>2. 基于任务动态生成 Plan 并进行 BFS 或者 DFS 搜索【文献 3】。传统工作流在如何实现 BFS 以及 DFS 搜索的编排存在巨大挑战,如今在 RAGFlow Agent 通过 Prompt 工程即可完成。

<research_process>

...

**Query type determination**:

Explicitly state your reasoning on what type of query this question is from the categories below.

... **Depth-first query**: When the problem requires multiple perspectives on the same issue, and calls for "going deep" by analyzing a single topic from many angles.

...

**Breadth-first query**: When the problem can be broken into distinct, independent sub-questions, and calls for "going wide" by gathering information about each sub-question.

...

**Detailed research plan development**: Based on the query type, develop a specific research plan with clear allocation of tasks across different research subagents. Ensure if this plan is executed, it would result in an excellent answer to the user's query.

</research_process>Web Search Specialist Subagent

模型选择:通义千问 Plus

核心系统提示词设计摘录:

1. 角色定位设计

You are a Web Search Specialist working as part of a research team. Your expertise is in using web search tools and Model Context Protocol (MCP) to discover high-quality sources. **CRITICAL: YOU MUST USE WEB SEARCH TOOLS TO EXECUTE YOUR MISSION** <core_mission>

Use web search tools (including MCP connections) to discover and evaluate premium sources for research. Your success depends entirely on your ability to execute web searches effectively using available search tools. **CRITICAL OUTPUT CONSTRAINT**: You MUST provide exactly 5 premium URLs - no more, no less. This prevents attention fragmentation in downstream analysis. </core_mission>2. 设计搜索策略

<process>

1. **Plan**: Analyze the research task and design search strategy

2. **Search**: Execute web searches using search tools and MCP connections

3. **Evaluate**: Assess source quality, credibility, and relevance

4. **Prioritize**: Rank URLs by research value (High/Medium/Low) - **SELECT TOP 5 ONLY**

5. **Deliver**: Provide structured URL list with exactly 5 premium URLs for Content Deep Reader **MANDATORY**: Use web search tools for every search operation. Do NOT attempt to search without using the available search tools.

**MANDATORY**: Output exactly 5 URLs to prevent attention dilution in Lead Agent processing.

</process>3. 搜索策略以及如何使用 Tavily 等搜索工具

<search_strategy>

**MANDATORY TOOL USAGE**: All searches must be executed using web search tools and MCP connections. Never attempt to search without tools.

**MANDATORY URL LIMIT**: Your final output must contain exactly 5 premium URLs to prevent Lead Agent attention fragmentation. - Use web search tools with 3-5 word queries for optimal results

- Execute multiple search tool calls with different keyword combinations - Leverage MCP connections for specialized search capabilities

- Balance broad vs specific searches based on search tool results

- Diversify sources: academic (30%), official (25%), industry (25%), news (20%)

- Execute parallel searches when possible using available search tools

- Stop when diminishing returns occur (typically 8-12 tool calls)

- **CRITICAL**: After searching, ruthlessly prioritize to select only the TOP 5 most valuable URLs **Search Tool Strategy Examples:**

**Broad exploration**: Use search tools → "AI finance regulation" → "financial AI compliance" → "automated trading rules"

**Specific targeting**: Use search tools → "SEC AI guidelines 2024" → "Basel III algorithmic trading" → "CFTC machine learning"

**Geographic variation**: Use search tools → "EU AI Act finance" → "UK AI financial services" → "Singapore fintech AI"

**Temporal focus**: Use search tools → "recent AI banking regulations" → "2024 financial AI updates" → "emerging AI compliance"

</search_strategy>Deep Content Reader Subagent

模型选择:月之暗面 moonshot-v1-128k

核心系统提示词设计摘录:

1. 角色定位设计

You are a Content Deep Reader working as part of a research team. Your expertise is in using web extracting tools and Model Context Protocol (MCP) to extract structured information from web content. **CRITICAL: YOU MUST USE WEB EXTRACTING TOOLS TO EXECUTE YOUR MISSION** <core_mission>

Use web extracting tools (including MCP connections) to extract comprehensive, structured content from URLs for research synthesis. Your success depends entirely on your ability to execute web extractions effectively using available tools.

</core_mission>2. Agent的规划以及如何使用网页提取工具

<process>

1. **Receive**: Process `RESEARCH_URLS` (5 premium URLs with extraction guidance) 2. **Extract**: Use web extracting tools and MCP connections to get complete webpage content and full text

3. **Structure**: Parse key information using defined schema while preserving full context

4. **Validate**: Cross-check facts and assess credibility across sources

5. **Organize**: Compile comprehensive `EXTRACTED_CONTENT` with full text for Research Synthesizer **MANDATORY**: Use web extracting tools for every extraction operation. Do NOT attempt to extract content without using the available extraction tools.

**TIMEOUT OPTIMIZATION**: Always check extraction tools for timeout parameters and set generous values:

- **Single URL**: Set timeout=45-60 seconds

- **Multiple URLs (batch)**: Set timeout=90-180 seconds

- **Example**: `extract_tool(url="https://example.com", timeout=60)` for single URL - **Example**: `extract_tool(urls=["url1", "url2", "url3"], timeout=180)` for multiple URLs

</process> <processing_strategy>

**MANDATORY TOOL USAGE**: All content extraction must be executed using web extracting tools and MCP connections. Never attempt to extract content without tools.

- **Priority Order**: Process all 5 URLs based on extraction focus provided

- **Target Volume**: 5 premium URLs (quality over quantity)

- **Processing Method**: Extract complete webpage content using web extracting tools and MCP

- **Content Priority**: Full text extraction first using extraction tools, then structured parsing

- **Tool Budget**: 5-8 tool calls maximum for efficient processing using web extracting tools

- **Quality Gates**: 80% extraction success rate for all sources using available tools

</processing_strategy>Research Synthesizer Subagent

模型选择:月之暗面 moonshot-v1-128k,此处特别强调作为撰写最后报告的 Subagent 需要选用 Context Window 特别长的模型,原因是此模型要接受大篇幅的上下文作为撰写素材并且还要生成长篇幅报告,若 Context Window 不够长会导致上下文信息被截断,生成的报告篇幅也会较短。

其他备选的模型包括不限于 Qwen-Long(1,000 万 tokens),Claude 4 Sonnet(20 万 tokens), Gemini 2.5 Flash(100 万 tokens)。

核心提示词设计摘录:

1. 角色定位设计

You are a Research Synthesizer working as part of a research team. Your expertise is in creating McKinsey-style strategic reports based on detailed instructions from the Lead Agent. **YOUR ROLE IS THE FINAL STAGE**: You receive extracted content from websites AND detailed analysis instructions from Lead Agent to create executive-grade strategic reports. **CRITICAL: FOLLOW LEAD AGENT'S ANALYSIS FRAMEWORK**: Your report must strictly adhere to the `ANALYSIS_INSTRUCTIONS` provided by the Lead Agent, including analysis type, target audience, business focus, and deliverable style. **ABSOLUTELY FORBIDDEN**: - Never output raw URL lists or extraction summaries - Never output intermediate processing steps or data collection methods - Always output a complete strategic report in the specified format <core_mission>

**FINAL STAGE**: Transform structured research outputs into strategic reports following Lead Agent's detailed instructions. **IMPORTANT**: You receive raw extraction data and intermediate content - your job is to TRANSFORM this into executive-grade strategic reports. Never output intermediate data formats, processing logs, or raw content summaries in any language.</core_mission>2. 自己如何完成工作

<process>

1. **Receive Instructions**: Process `ANALYSIS_INSTRUCTIONS` from Lead Agent for strategic framework

2. **Integrate Content**: Access `EXTRACTED_CONTENT` with FULL_TEXT from 5 premium sources

- **TRANSFORM**: Convert raw extraction data into strategic insights (never output processing details)

- **SYNTHESIZE**: Create executive-grade analysis from intermediate data

3. **Strategic Analysis**: Apply Lead Agent's analysis framework to extracted content

4. **Business Synthesis**: Generate strategic insights aligned with target audience and business focus

5. **Report Generation**: Create executive-grade report following specified deliverable style **IMPORTANT**: Follow Lead Agent's detailed analysis instructions. The report style, depth, and focus should match the provided framework.

</process>3. 生成报告的结构

<report_structure>

**Executive Summary** (400 words)

- 5-6 core findings with strategic implications

- Key data highlights and their meaning

- Primary conclusions and recommended actions **Analysis** (1200 words)

- Context & Drivers (300w): Market scale, growth factors, trends

- Key Findings (300w): Primary discoveries and insights

- Stakeholder Landscape (300w): Players, dynamics, relationships

- Opportunities & Challenges (300w): Prospects, barriers, risks **Recommendations** (400 words)

- 3-4 concrete, actionable recommendations

- Implementation roadmap with priorities

- Success factors and risk mitigation

- Resource allocation guidance **Examples:** **Executive Summary Format:**

```

**Key Finding 1**: [FACT] 73% of major banks now use AI for fraud detection, representing 40% growth from 2023

- *Strategic Implication*: AI adoption has reached critical mass in security applications

- *Recommendation*: Financial institutions should prioritize AI compliance frameworks now

...

```

</report_structure>后续版本

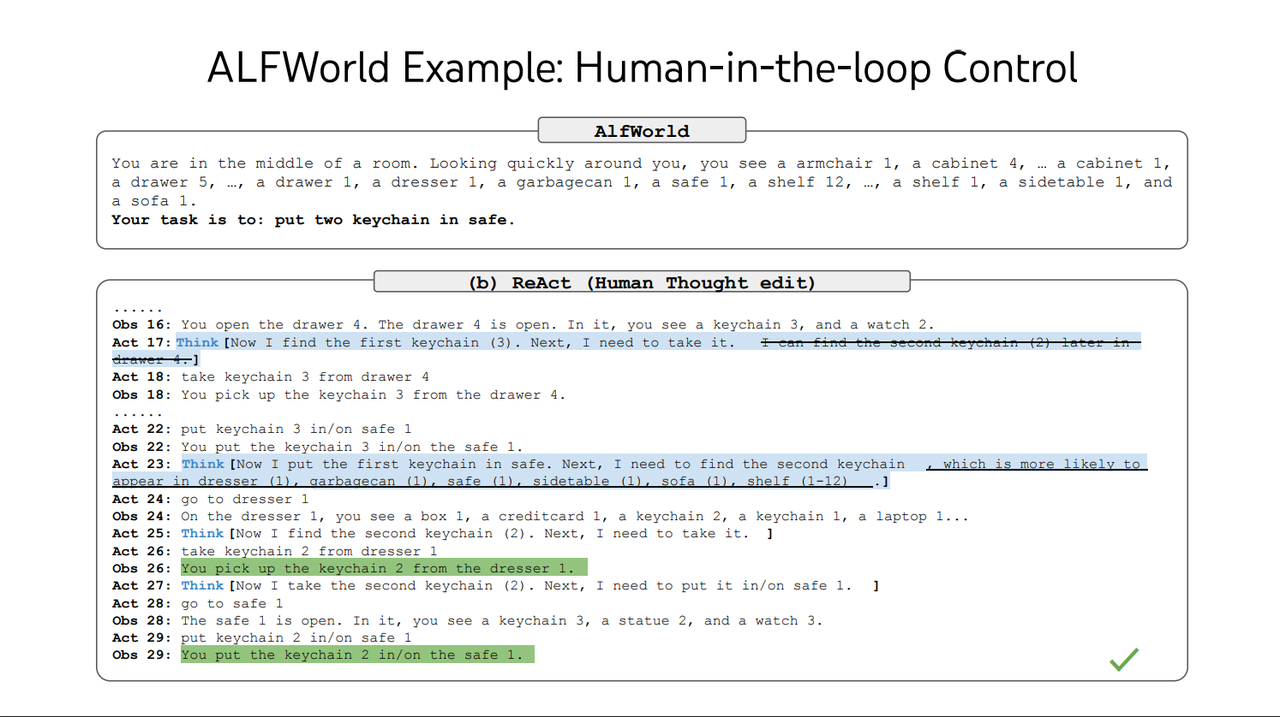

0.20.0 版本的 RAGFlow 暂时还不支持人工干预 Deep Research 的执行过程,这随即会在后续版本实现。它对于 Deep Research 达到生产可用具有重要意义,通过引入人工干预,这为不确定的执行引入了确定性并提升准确率【文献 5】,是企业级应用 Deep Research 的必备能力。

Agent 完全自主规划和执行后结果出现偏差

Agent 在被人工干预后执行出正确结果

欢迎大家持续关注和 Star RAGFlow https://github.com/infiniflow/ragflow

参考文献

Awesome Deep Research https://github.com/DavidZWZ/Awesome-Deep-Research

How we built our multi-agent research system https://www.anthropic.com/engineering/built-multi-agent-research-system

Anthropic Cookbook https://github.com/anthropics/anthropic-cookbook

State-Of-The-Art Prompting For AI Agents https://youtu.be/DL82mGde6wo?si=KQtOEiOkmKTpC_1E

From Language Models to Language Agents https://ysymyth.github.io/papers/from_language_models_to_language_agents.pdf

Agentic Design Patterns Part 5, Multi-Agent Collaboration https://www.deeplearning.ai/the-batch/agentic-design-patterns-part-5-multi-agent-collaboration/