Elasticsearch: Integrating Prometheus Monitoring (k8s)

1. Introduction:

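For an introduction to Elasticsearch and how to deploy it, see the separate article "Elasticsearch Basic Concepts and Cluster Deployment" (CSDN blog); it is not repeated here. This article only covers how to deploy the exporter in k8s and collect ES metrics with Prometheus.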

2. Implementation:

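First, deploy the exporter, which exposes the metrics that Prometheus scrapes. We deploy it in k8s because Kubernetes health checks (the liveness and readiness probes below) automatically restart a failed exporter, so its availability does not have to be maintained separately. It runs as a Deployment: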

apiVersion: apps/v1
kind: Deployment
metadata:
  name: elastic-exporter
  namespace: monitoring
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  selector:
    matchLabels:
      app: elastic-exporter
  template:
    metadata:
      labels:
        app: elastic-exporter
    spec:
      containers:
        - command:
            - /bin/elasticsearch_exporter
            - --es.uri=http://elastic:xxxx@10.0.36.1:9200  # basic auth is used here; without auth, configure the plain URL, i.e. http://10.0.36.1:9200
            - --es.all  # collect metrics from all nodes in the cluster
          image: quay.io/prometheuscommunity/elasticsearch-exporter:latest
          securityContext:
            capabilities:
              drop:
                - SETPCAP
                - MKNOD
                - AUDIT_WRITE
                - CHOWN
                - NET_RAW
                - DAC_OVERRIDE
                - FOWNER
                - FSETID
                - KILL
                - SETGID
                - SETUID
                - NET_BIND_SERVICE
                - SYS_CHROOT
                - SETFCAP
            readOnlyRootFilesystem: true
          livenessProbe:
            httpGet:
              path: /healthz
              port: 9114
            initialDelaySeconds: 30
            timeoutSeconds: 10
          name: elastic-exporter
          ports:
            - containerPort: 9114
              name: http
          readinessProbe:
            httpGet:
              path: /healthz
              port: 9114
            initialDelaySeconds: 10
            timeoutSeconds: 10
          resources:
            limits:
              cpu: 100m
              memory: 128Mi
            requests:
              cpu: 25m
              memory: 64Mi
      restartPolicy: Always
      securityContext:
        runAsNonRoot: true
        runAsGroup: 10000
        runAsUser: 10000
        fsGroup: 10000

Deploy the Service:

apiVersion: v1

kind: Service

metadata:name: elastic-exporternamespace: monitoringlabels:app: elastic-exporter

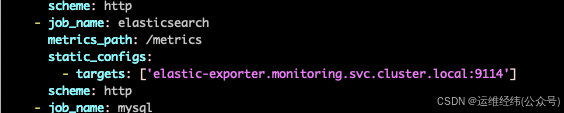

spec:type: ClusterIPports:- port: 9114targetPort: 9114protocol: TCPname: elastic-exporterselector:app: elastic-exporter配置prometheus的指标采集:

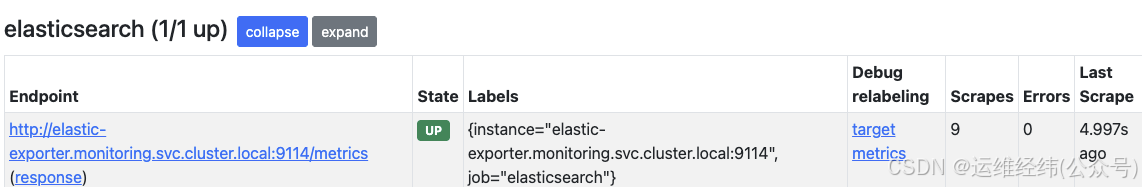
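The screenshot above shows the scrape configuration. The following is a minimal text sketch of an equivalent job, not a transcription of the screenshot. It assumes that Prometheus runs inside the same cluster and is configured through a plain prometheus.yml rather than the Prometheus Operator. The job name `elasticsearch` and the 30s interval are illustrative assumptions. Because the Service above is of type ClusterIP, the target address only resolves from inside the cluster.

```yaml
scrape_configs:
  # Hypothetical job name; it becomes the "job" label on every scraped series.
  - job_name: elasticsearch
    # Assumed scrape interval; adjust to your needs.
    scrape_interval: 30s
    # The exporter serves metrics on /metrics (also Prometheus's default path).
    metrics_path: /metrics
    static_configs:
      # In-cluster DNS name of the elastic-exporter Service (namespace monitoring), port 9114.
      - targets:
          - elastic-exporter.monitoring.svc:9114
```

After Prometheus reloads its configuration, the target should appear as UP under Status > Targets. A query such as `elasticsearch_cluster_health_status` should then return data.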

Next, verify that the metrics are being collected:

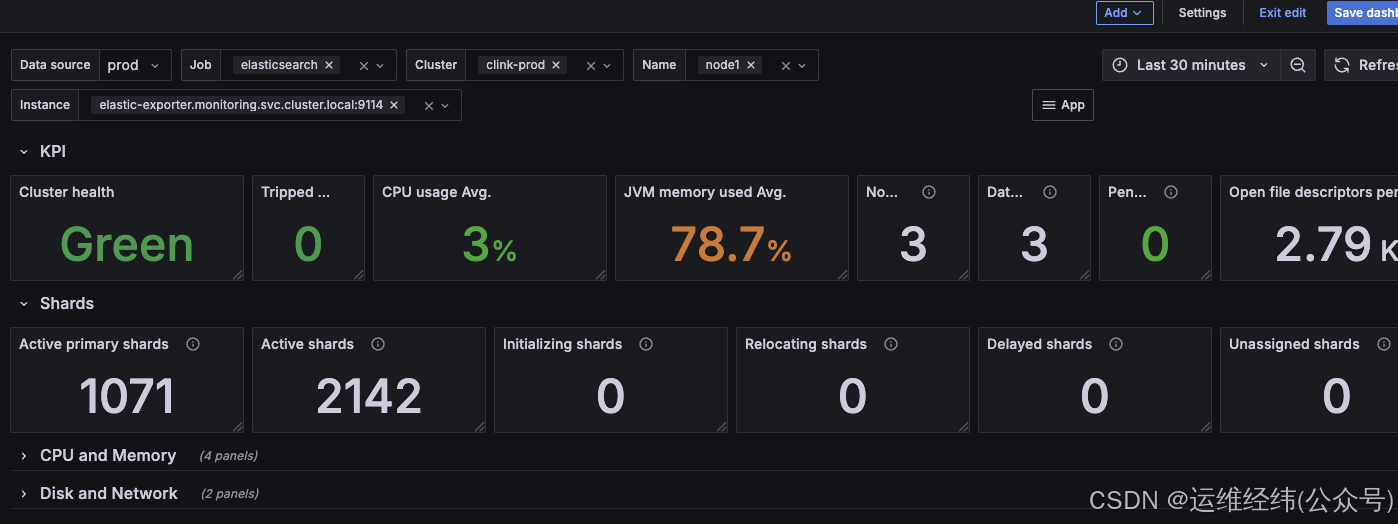

3. Building dashboards in Grafana:
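Whether a dashboard is drawn by hand or imported, Grafana first needs a Prometheus data source. This is usually added in the Grafana UI. As an alternative, here is a minimal provisioning sketch. It assumes the file is placed in Grafana's provisioning/datasources directory (for example /etc/grafana/provisioning/datasources in the official packages and Docker image). The data source name and the Prometheus URL `http://prometheus.monitoring.svc:9090` are hypothetical; replace them with your own.

```yaml
apiVersion: 1

datasources:
  # Hypothetical data source name; imported dashboards will ask for a Prometheus data source.
  - name: Prometheus
    type: prometheus
    # Grafana's backend forwards queries to Prometheus.
    access: proxy
    # Assumed in-cluster Prometheus address; replace with your own.
    url: http://prometheus.monitoring.svc:9090
    isDefault: true
```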

Besides drawing panels by hand, you can also import an open-source dashboard template directly:

Configuration complete!

---------------------------------------------------------------------------------------

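Many years of experience in operations, specializing in building operations systems and putting solutions into practice. Feel free to get in touch!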

V: ywjw996

《 运维经纬 》