God of Orchestration - Kubernetes Microservices Series: ingress-nginx and Canary Release Walkthrough

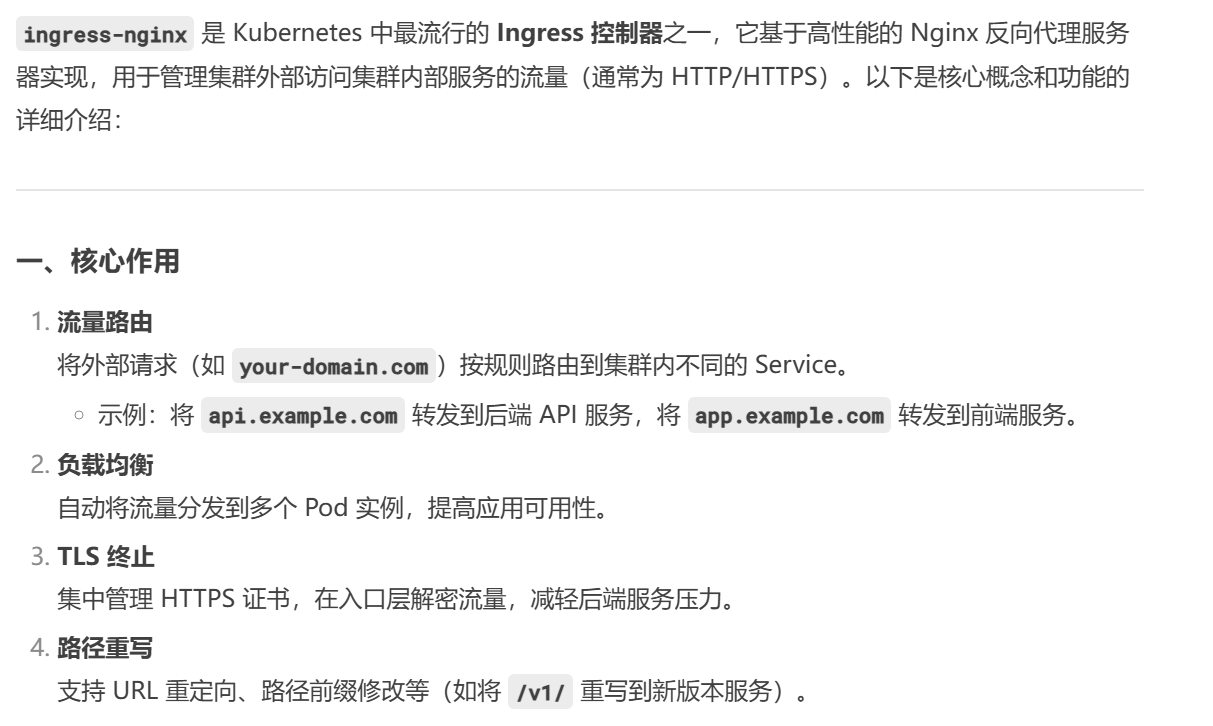

7.5 Ingress-nginx

Official documentation:

https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal-clusters

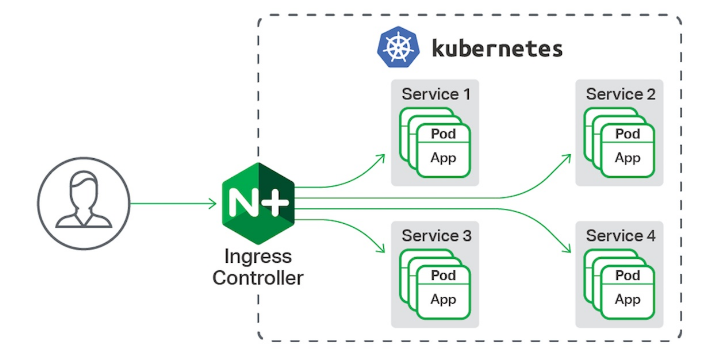

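7.5.1 What ingress-nginx does

- A global load-balancing service that proxies traffic to different backend Services. It works at Layer 7 (HTTP/HTTPS).

- Ingress consists of two parts: the Ingress controller and the Ingress resources (the routing rules).

- The Ingress controller provides the proxying behavior described by the Ingress objects you define.

- Widely used reverse-proxy projects such as Nginx, HAProxy, Envoy and Traefik all maintain dedicated Ingress controllers for Kubernetes.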

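7.5.2 Deploying ingress-nginx

7.5.2.1 Download the deployment manifest

# Using the files from the course resource bundle is recommended
# The command below downloads the manifest directly from the internet instead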

# [root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.10.6.2/deploy/static/provider/baremetal/deploy.yaml

Push the images required by ingress-nginx to Harbor

# Upload the files from the resource bundle, and create a new project named ingress-nginx in the Harbor registry

[root@k8s-master mnt]# unzip ingress-1.13.1.zip
[root@k8s-master mnt]# docker load -i ingress-nginx-1.13.1.tar
Loaded image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.6.1
Loaded image: registry.k8s.io/ingress-nginx/controller:v1.13.1
[root@k8s-master mnt]# docker tag registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.6.1 reg.dhj.org/ingress-nginx/kube-webhook-certgen:v1.6.1
[root@k8s-master mnt]# docker tag registry.k8s.io/ingress-nginx/controller:v1.13.1 reg.dhj.org/ingress-nginx/controller:v1.13.1
[root@k8s-master mnt]# docker push reg.dhj.org/ingress-nginx/kube-webhook-certgen:v1.6.1
[root@k8s-master mnt]# docker push reg.dhj.org/ingress-nginx/controller:v1.13.1
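The next step edits the image lines of deploy.yaml by hand in vim. A sed one-liner can make the same change instead. The sketch below assumes the upstream manifest references images as `registry.k8s.io/ingress-nginx/<name>:<tag>`, optionally pinned with an `@sha256:` digest. The function name `retag_images` and its optional registry-prefix argument are hypothetical. The sketch drops the digest because the upstream value usually identifies the multi-architecture image index, and it is unlikely to match what Harbor stores after a `docker push`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite upstream ingress-nginx image references.
# Assumes lines like:  image: registry.k8s.io/ingress-nginx/<name>:<tag>[@sha256:<digest>]
#   $1  manifest to read (e.g. deploy.yaml); the result is written to stdout
#   $2  optional registry prefix such as "reg.dhj.org/" (default: none, which
#       yields the same form as the edited image lines in this section)
retag_images() {
    local manifest="$1"
    local prefix="${2:-}"
    # Keep "image: ", drop "registry.k8s.io/" and any "@sha256:..." digest
    sed -E "s#(image: )registry\.k8s\.io/(ingress-nginx/[^@[:space:]]+)(@sha256:[0-9a-f]+)?#\1${prefix}\2#" "$manifest"
}

# Usage (review the output before applying it):
#   retag_images deploy.yaml > deploy.local.yaml
#   retag_images deploy.yaml reg.dhj.org/ > deploy.local.yaml
```

Writing to stdout rather than editing in place makes it easy to diff the result against the original before you run `kubectl apply`.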

7.5.2.2 Install ingress-nginx

[root@k8s-master ~]# vim deploy.yaml

# Edit the image references so they use the images pushed to Harbor (the numbers are line numbers in deploy.yaml)
444  image: ingress-nginx/controller:v1.13.1
547  image: ingress-nginx/kube-webhook-certgen:v1.6.1
603  image: ingress-nginx/kube-webhook-certgen:v1.6.1

[root@k8s-master mnt]# kubectl apply -f deploy.yaml
[root@k8s-master mnt]# kubectl -n ingress-nginx get pods
NAME                                        READY   STATUS              RESTARTS   AGE
ingress-nginx-admission-create-2k8vn        0/1     ContainerCreating   0          3s    # one-shot Job pod that sets up the admission webhook; gone once it completes
ingress-nginx-admission-patch-9gqlt         0/1     ContainerCreating   0          3s    # one-shot Job pod that sets up the admission webhook; gone once it completes
ingress-nginx-controller-7bf698f798-9tqv7   0/1     ContainerCreating   0          3s
[root@k8s-master mnt]# kubectl -n ingress-nginx get pods
NAME                                        READY   STATUS    RESTARTS   AGE
ingress-nginx-controller-7bf698f798-9tqv7   0/1     Running   0          8s
[root@k8s-master mnt]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.98.27.12     <none>        80:32244/TCP,443:34533/TCP   3m23s
ingress-nginx-controller-admission   ClusterIP   10.96.154.255   <none>        443/TCP                      3m23s

# Change the controller Service type to LoadBalancer

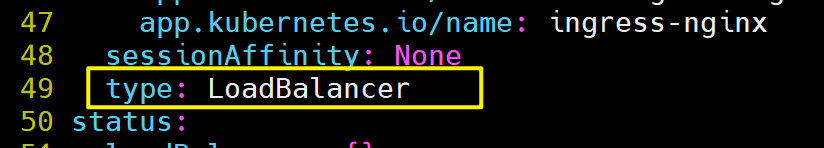

[root@k8s-master ~]# kubectl -n ingress-nginx edit svc ingress-nginx-controller

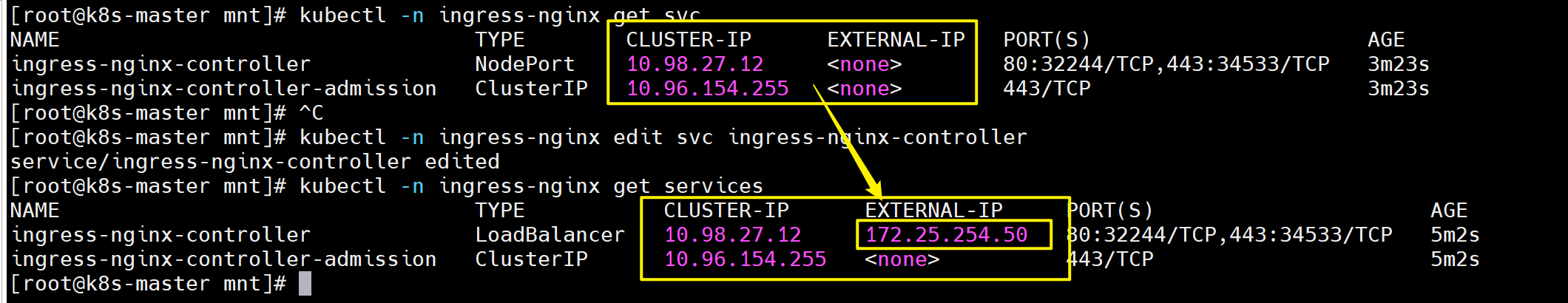

49  type: LoadBalancer    # line 49 of the Service opened by kubectl edit: change NodePort to LoadBalancer
[root@k8s-master mnt]# kubectl -n ingress-nginx get services
NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.98.27.12     172.25.254.50   80:32244/TCP,443:34533/TCP   5m2s
ingress-nginx-controller-admission   ClusterIP      10.96.154.255   <none>          443/TCP                      5m2s

[!NOTE]

The EXTERNAL-IP shown for ingress-nginx-controller is the address through which the ingress is ultimately exposed to clients.
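Before the Service was switched to LoadBalancer, the controller could only be reached through its NodePorts. In the PORT(S) column, an entry such as `80:32244/TCP` means service port 80 is mapped to node port 32244, so HTTP traffic could be sent to `http://<node-IP>:32244`. The small parser below is an illustrative sketch that extracts the node port from that column; `nodeport_for` is a hypothetical name. On a live cluster, `kubectl -n ingress-nginx get svc ingress-nginx-controller -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'` should return the same value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port for a given service port from a
# PORT(S) column such as "80:32244/TCP,443:34533/TCP".
#   $1  service port to look up (e.g. 80)
#   $2  contents of the PORT(S) column
# Prints the node port; returns 1 when that port has no node port.
nodeport_for() {
    local want="$1" entry svc_port rest
    local IFS=','
    for entry in $2; do
        svc_port="${entry%%:*}"    # text before the first ":" is the service port
        rest="${entry#*:}"         # text after it, e.g. "32244/TCP"
        if [[ "$svc_port" == "$want" && "$rest" != "$entry" ]]; then
            echo "${rest%%/*}"     # drop the "/TCP" protocol suffix
            return 0
        fi
    done
    return 1
}

nodeport_for 80 "80:32244/TCP,443:34533/TCP"    # prints 32244
```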

7.5.2.3 Test the ingress

# Generate the YAML file

[root@k8s-master ~]# kubectl create ingress webcluster --rule '*/=dhj-svc:80' --dry-run=client -o yaml > dhj-ingress.yml

# Routing rule '*/=dhj-svc:80':
# *: matches any host name (e.g. example.com, test.com)
# /: matches all paths (the root path and everything below it)
# dhj-svc:80: sends the traffic to port 80 of the Kubernetes Service named dhj-svc

[root@k8s-master ~]# vim dhj-ingress.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webcluster
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: dhj-svc
            port:
              number: 80
        path: /
        # pathType: Exact (exact match), Prefix (prefix match) or ImplementationSpecific
        # (controller-specific; ingress-nginx uses it for regular-expression paths)
        pathType: Prefix

# Create the Ingress resource

[root@k8s-master ~]# kubectl apply -f dhj-ingress.yml

ingress.networking.k8s.io/webcluster created
[root@k8s-master mnt]# kubectl get ingress
NAME         CLASS   HOSTS   ADDRESS         PORTS   AGE
webcluster   nginx   *       172.25.254.20   80      4m57s

[!NOTE]

An Ingress must be in the same namespace as the Services it routes traffic to.
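The pathType comment in dhj-ingress.yml names the three values Kubernetes accepts. According to the Kubernetes Ingress documentation, Exact requires the whole path to match (case-sensitively). Prefix compares the path element by element, split on "/", so `/v2` matches `/v2` and `/v2/aaaa` but not `/v2abc`, and a trailing slash is ignored. The function below is an illustrative model of those two rules only; `path_matches` is a hypothetical name. ImplementationSpecific is left to the controller, and ingress-nginx uses it for regex paths such as the `/admin` rule later in this section.

```shell
#!/usr/bin/env bash
# Hypothetical model of Kubernetes Ingress path matching.
#   $1  pathType: Exact or Prefix
#   $2  path from the Ingress rule
#   $3  request path
# Returns 0 when the request path matches the rule.
path_matches() {
    local type="$1" rule="$2" req="$3"
    case "$type" in
        Exact)
            [[ "$req" == "$rule" ]]
            ;;
        Prefix)
            # Prefix ignores a trailing slash, so /v2 and /v2/ are equivalent
            rule="${rule%/}"
            req="${req%/}"
            # A rule of "/" matches every request path
            [[ -z "$rule" ]] && return 0
            # Match whole path elements: /v2 or /v2/..., never /v2abc
            [[ "$req" == "$rule" || "$req" == "$rule"/* ]]
            ;;
        *)
            echo "unsupported pathType: $type" >&2
            return 2
            ;;
    esac
}

for p in /v2 /v2/ /v2/aaaa /v2abc; do
    if path_matches Prefix /v2 "$p"; then
        echo "Prefix /v2 matches $p"
    else
        echo "Prefix /v2 does not match $p"
    fi
done
```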

7.5.3 ingress的高级用法

7.5.3.1 基于路径的访问

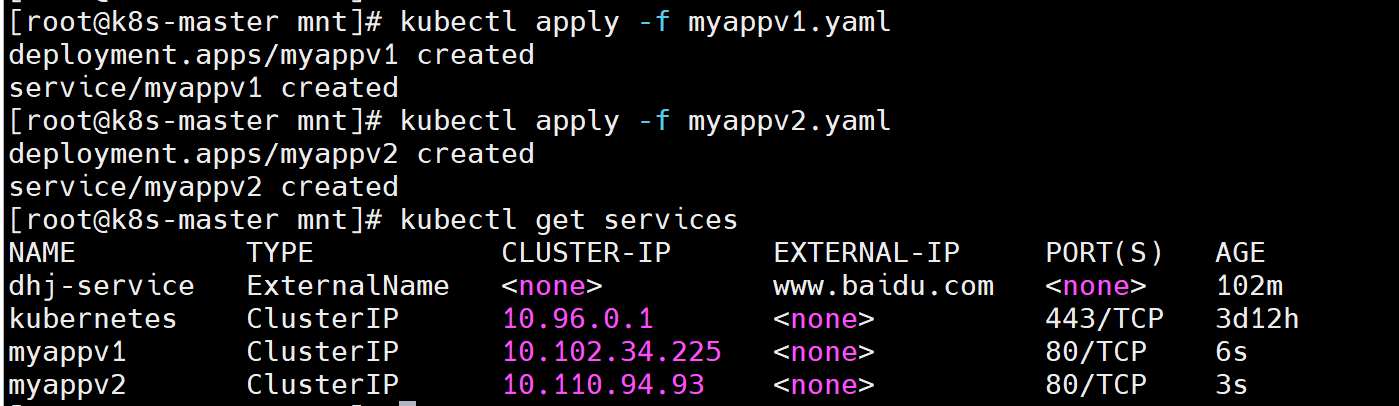

1.建立用于测试的控制器myapp

[root@k8s-master app]# kubectl create deployment myappv1 --image myapp:v1 --dry-run=client -o yaml > myappv1.yaml[root@k8s-master app]# kubectl create deployment myappv2 --image myapp:v2 --dry-run=client -o yaml > myappv2.yaml[root@k8s-master app]# kubectl expose deployment myappv1 --port 80 --target-port 80 --dry-run=client -o yaml >> myappv1.yaml[root@k8s-master app]# kubectl expose deployment myappv2 --port 80 --target-port 80 --dry-run=client -o yaml >> myappv1.yaml[root@k8s-master app]# vim myappv1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myappv1
  name: myappv1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myappv1
  template:
    metadata:
      labels:
        app: myappv1
    spec:
      containers:
      - image: myapp:v1
        name: myappv1
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myappv1
  name: myappv1
spec:
  ports:
  - name: myappv1
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myappv1
  type: ClusterIP

[root@k8s-master app]# vim myappv2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myappv2
  name: myappv2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myappv2
  template:
    metadata:
      labels:
        app: myappv2
    spec:
      containers:
      - image: myapp:v2
        name: myappv2
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myappv2
  name: myappv2
spec:
  ports:
  - name: myappv2
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: myappv2
  type: ClusterIP

[root@k8s-master app]# kubectl apply -f myappv1.yaml
[root@k8s-master app]# kubectl apply -f myappv2.yaml
[root@k8s-master mnt]# kubectl get services
NAME      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
myappv1   ClusterIP   10.102.34.225   <none>        80/TCP    6s
myappv2   ClusterIP   10.110.94.93    <none>        80/TCP    3s

2. Create the Ingress YAML

[root@k8s-master app]# vim ingress1.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /   # anything after the matched path is dropped: the request is rewritten to /
  name: ingress1
spec:
  ingressClassName: nginx
  rules:
  - host: www.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /v1
        pathType: Prefix
      - backend:
          service:
            name: myappv2
            port:
              number: 80
        path: /v2
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress1.yml
[root@k8s-master mnt]# kubectl describe ingress ingress1
Rules:
  Host         Path  Backends
  www.dhj.org
               /v1   myappv1:80 (10.244.2.6:80)
               /v2   myappv2:80 (10.244.1.6:80)
Annotations:   nginx.ingress.kubernetes.io/rewrite-target: /

# The EXTERNAL-IP below was assigned by MetalLB, set up in an earlier lab; it hands out addresses to LoadBalancer Services, much like DHCP does for hosts

[root@k8s-master mnt]# kubectl -n ingress-nginx get svc

NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.98.27.12     172.25.254.50   80:32244/TCP,443:34533/TCP   3h26m
ingress-nginx-controller-admission   ClusterIP      10.96.154.255   <none>          443/TCP                      3h26m

# Test:

[root@reg ~]# echo 172.25.254.50 www.dhj.org >> /etc/hosts
[root@k8s-master mnt]# echo 172.25.254.50 www.dhj.org >> /etc/hosts

[root@k8s-master mnt]# curl www.dhj.org/v1

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master mnt]# curl www.dhj.org/v2

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

# Effect of nginx.ingress.kubernetes.io/rewrite-target: / (anything after /v2 is dropped)

[root@reg ~]# curl www.dhj.org/v2/aaaa

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
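Why does `/v2/aaaa` still return the v2 index page? A likely explanation, assuming ingress-nginx's usual translation of `rewrite-target` into an nginx `rewrite` directive, is that nginx's `rewrite` replaces the entire request URI with the replacement whenever the regex matches. It does not substitute only the matched text, as sed does. The sketch below contrasts the two behaviors. The function name `nginx_style_rewrite` is hypothetical, and the sketch ignores capture groups.

```shell
#!/usr/bin/env bash
# Hypothetical model of nginx "rewrite REGEX REPLACEMENT" without capture groups:
# when REGEX matches, the whole URI becomes REPLACEMENT.
nginx_style_rewrite() {
    local regex="$1" replacement="$2" uri="$3"
    if [[ "$uri" =~ $regex ]]; then
        echo "$replacement"
    else
        echo "$uri"
    fi
}

uri=/v2/aaaa
echo "nginx-style rewrite: $(nginx_style_rewrite '^/v2' / "$uri")"       # the backend sees /
echo "sed-style substitution: $(printf '%s\n' "$uri" | sed 's#^/v2#/#')"  # would give //aaaa
```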

7.5.3.2 Host-based (domain-based) routing

# Configure name resolution on the test host

[root@reg ~]# vim /etc/hosts

172.25.254.50 www.dhj.org myappv1.dhj.org myappv2.dhj.org
[root@k8s-master mnt]# cp ingress1.yml ingress2.yml

[root@k8s-master mnt]# vim ingress2.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  name: ingress2
spec:
  ingressClassName: nginx
  rules:
  - host: myappv1.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /
        pathType: Prefix
  - host: myappv2.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv2
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master mnt]# kubectl apply -f ingress2.yml
[root@k8s-master mnt]# kubectl describe ingress ingress2
[root@k8s-master mnt]# kubectl -n ingress-nginx get svc
NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.98.27.12     172.25.254.50   80:32244/TCP,443:34533/TCP   3h35m
ingress-nginx-controller-admission   ClusterIP      10.96.154.255   <none>          443/TCP                      3h35m
[root@k8s-master mnt]# curl myappv1.dhj.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master mnt]# curl myappv2.dhj.org

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
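ingress2 serves two sites from one IP address. The controller picks the rule by the HTTP Host header, which is why the test host needs the /etc/hosts entries. Sending the header explicitly, e.g. `curl -H 'Host: myappv1.dhj.org' http://172.25.254.50/`, should exercise the same rule without editing /etc/hosts. The function below is a hypothetical, simplified model of that selection for ingress2's two rules; `backend_for_host` is a hypothetical name. It assumes that a request with an unknown host falls through to the controller's default backend, which normally answers 404.

```shell
#!/usr/bin/env bash
# Hypothetical model of host-based rule selection for ingress2.
#   $1  value of the HTTP Host header (an optional :port is ignored)
# Prints the backend Service:port, or "404" when no rule matches.
backend_for_host() {
    local host="${1%%:*}"    # strip an optional :port
    host="${host,,}"         # host names are case-insensitive (bash 4+)
    case "$host" in
        myappv1.dhj.org) echo "myappv1:80" ;;
        myappv2.dhj.org) echo "myappv2:80" ;;
        *)               echo "404"; return 1 ;;   # assumed: default backend
    esac
}

for h in myappv1.dhj.org MyAppV2.dhj.org:80 other.dhj.org; do
    echo "$h -> $(backend_for_host "$h")"
done
```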

7.5.3.3 TLS encryption (HTTPS)

# Create a self-signed certificate

[root@k8s-master app]# openssl req -newkey rsa:2048 -nodes -keyout tls.key -x509 -days 365 -subj "/CN=nginxsvc/O=nginxsvc" -out tls.crt

# Create a Secret of type tls to hold the certificate and key

[root@k8s-master app]# kubectl create secret tls web-tls-secret --key tls.key --cert tls.crt

secret/web-tls-secret created

[root@k8s-master app]# kubectl get secrets

NAME             TYPE                DATA   AGE
web-tls-secret   kubernetes.io/tls   2      6s

[!NOTE]

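A Secret is normally used to store sensitive data in Kubernetes, but it is not an encryption mechanism (its data is only base64-encoded). Secrets are covered in detail in a later lesson.

# Create ingress3.yml, which serves the host over TLS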

[root@k8s-master app]# vim ingress3.yml

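apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  name: ingress3
spec:
  tls:
  - hosts:
    - myapp-tls.dhj.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress3.yml
[root@k8s-master mnt]# vim /etc/hosts
172.25.254.50 myapp-tls.dhj.org

# Test (-k skips certificate verification: the certificate is self-signed and was issued for CN=nginxsvc, not for this host name)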

[root@k8s-master mnt]# curl -k https://myapp-tls.dhj.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

7.5.3.4 Basic authentication

# Create the credentials file

[root@k8s-master app]# dnf install httpd-tools -y

[root@k8s-master app]# htpasswd -cm auth admin

New password:admin

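Re-type new password:admin
[root@k8s-master mnt]# cat auth
admin:$apr1$Ny8jCT8n$Hiuu0FRvZBN60p1tA3g0Y.

# Create the credentials resource (a generic Secret named auth-web)
# generic: create a generic Secret
# --from-file auth: load data from the local file system into the Secret; auth is a file name in the current directory (a directory path also works)
# The file name becomes the Secret key, and ingress-nginx expects that key to be called auth
[root@k8s-master mnt]# kubectl create secret generic auth-web --from-file auth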

[root@k8s-master mnt]# kubectl describe secrets auth-web

# Create ingress4.yml, which adds user authentication

[root@k8s-master app]# vim ingress4.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
  name: ingress4
spec:
  tls:
  - hosts:
    - myapp-tls.dhj.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /
        pathType: Prefix

# Create ingress4
[root@k8s-master app]# kubectl apply -f ingress4.yml
[root@k8s-master app]# kubectl describe ingress ingress4
TLS:
  web-tls-secret terminates myapp-tls.dhj.org
Rules:
  Host               Path  Backends
  myapp-tls.dhj.org
                     /     myappv1:80 (10.244.2.6:80)
Annotations:         nginx.ingress.kubernetes.io/auth-realm: Please input username and password
                     nginx.ingress.kubernetes.io/auth-secret: auth-web
                     nginx.ingress.kubernetes.io/auth-type: basic

# Test:

[root@k8s-master mnt]# curl -k https://myapp-tls.dhj.org

<html>

<head><title>401 Authorization Required</title></head>

<body>

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx</center>

</body>

</html>

[root@k8s-master mnt]# curl -k https://myapp-tls.dhj.org -uadmin:admin

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
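The `-uadmin:admin` option makes curl send an Authorization header built from the user name and password. For HTTP Basic authentication (RFC 7617), the header value is the word `Basic` followed by the base64 encoding of `user:password`. The sketch below builds that header; `basic_auth_header` is a hypothetical name, and the sketch relies on the coreutils `base64` tool. Because base64 is an encoding rather than encryption, anyone who can see the request can recover the password. This is why ingress4 combines the auth annotations with TLS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the HTTP Basic Authorization header that
# `curl -u USER:PASSWORD` would send.
basic_auth_header() {
    local user="$1" password="$2"
    # printf avoids the trailing newline that echo would add before encoding
    printf 'Authorization: Basic %s\n' "$(printf '%s:%s' "$user" "$password" | base64)"
}

basic_auth_header admin admin
# prints: Authorization: Basic YWRtaW46YWRtaW4=
# Sending the header by hand should be equivalent to -uadmin:admin:
#   curl -k -H "$(basic_auth_header admin admin)" https://myapp-tls.dhj.org
```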

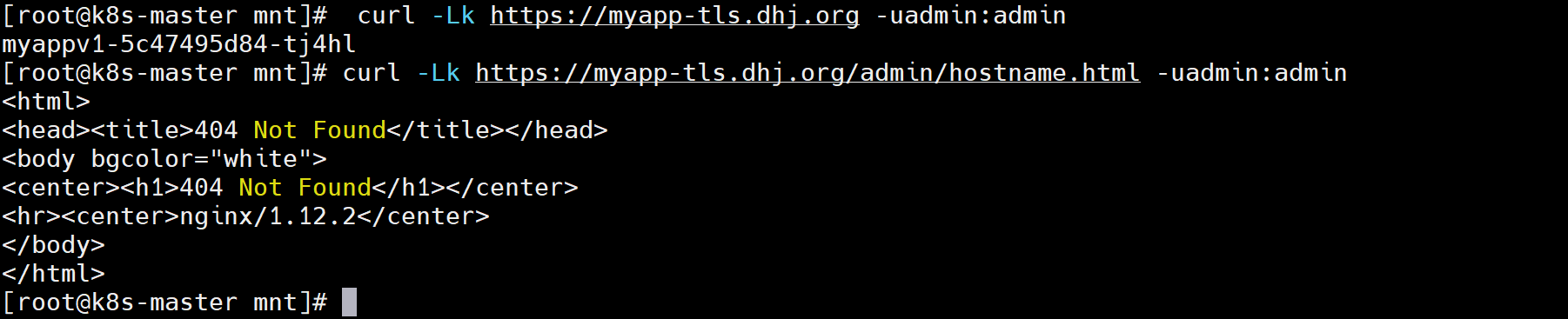

7.5.3.5 Rewrites and redirects

# Redirect the default path to /hostname.html, with basic authentication enabled

[root@k8s-master app]# vim ingress5.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/app-root: /hostname.html    # redirect requests for / to /hostname.html
    nginx.ingress.kubernetes.io/auth-type: basic            # enable basic authentication
    nginx.ingress.kubernetes.io/auth-secret: auth-web       # Secret holding the credentials
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"   # prompt shown to the user
  name: ingress5
spec:
  tls:                                  # TLS configuration
  - hosts:
    - myapp-tls.dhj.org
    secretName: web-tls-secret          # Secret holding the TLS certificate
  ingressClassName: nginx
  rules:
  - host: myapp-tls.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1               # backend Service
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f ingress5.yml
[root@k8s-master app]# kubectl describe ingress ingress5

# Test:
[root@reg ~]# curl -Lk https://myapp-tls.dhj.org -uadmin:admin
# The backend has no /admin/hostname.html, so it answers 404:
[root@reg ~]# curl -Lk https://myapp-tls.dhj.org/admin/hostname.html -uadmin:admin

<html>

<head><title>404 Not Found</title></head>

<body bgcolor="white">

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.10.7.2</center>

</body>

</html>

[root@k8s-master app]# vim ingress5.yml

# Fix the path problem: strip the /admin prefix with a regex rewrite

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2        # rewrite the path to capture group $2
    nginx.ingress.kubernetes.io/use-regex: "true"          # enable regex path matching
    # keep the basic-auth settings
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: auth-web
    nginx.ingress.kubernetes.io/auth-realm: "Please input username and password"
  name: ingress6
spec:
  tls:
  - hosts:
    - myapp-tls.dhj.org
    secretName: web-tls-secret
  ingressClassName: nginx
  rules:
  - host: myapp-tls.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /                         # rule 1: root path
        pathType: Prefix
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /admin(/|$)(.*)           # rule 2: regex matching /admin and anything under /admin/
        pathType: ImplementationSpecific

# Test

[root@reg ~]# curl -Lk https://myapp-tls.dhj.org/admin/hostname.html -uadmin:admin

myappv1-5c47495d84-tj4hl
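With use-regex enabled, the path `/admin(/|$)(.*)` captures two groups. `$1` is the slash after admin (or nothing at the end of the path), and `$2` is everything after it. So `rewrite-target: /$2` removes the /admin prefix before the request reaches myappv1. The function below reproduces that mapping with bash's `=~` operator and `BASH_REMATCH`. It is an illustrative sketch (`admin_rewrite` is a hypothetical name), not the controller's own code.

```shell
#!/usr/bin/env bash
# Hypothetical model of: path /admin(/|$)(.*)  +  rewrite-target: /$2
admin_rewrite() {
    local uri="$1"
    local re='^/admin(/|$)(.*)'
    if [[ "$uri" =~ $re ]]; then
        # BASH_REMATCH[1] corresponds to $1, BASH_REMATCH[2] to $2
        echo "/${BASH_REMATCH[2]}"
    else
        # No match: this rule does not apply and the URI is left unchanged
        echo "$uri"
    fi
}

for u in /admin /admin/ /admin/hostname.html /administrator; do
    echo "$u -> $(admin_rewrite "$u")"
done
```

Note that `/administrator` does not match, because `(/|$)` requires a slash or the end of the path right after `admin`.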

7.6 Canary releases

Canary Deployments - Ingress-Nginx Controller

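7.6.1 What is a canary release

A canary release, also called a grayscale (gray) release, is a software release strategy.

Its main goal is to test and validate a new version on a small subset of users or servers before rolling it out to the whole production environment. This limits the impact on the entire system if the new version introduces a serious problem.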

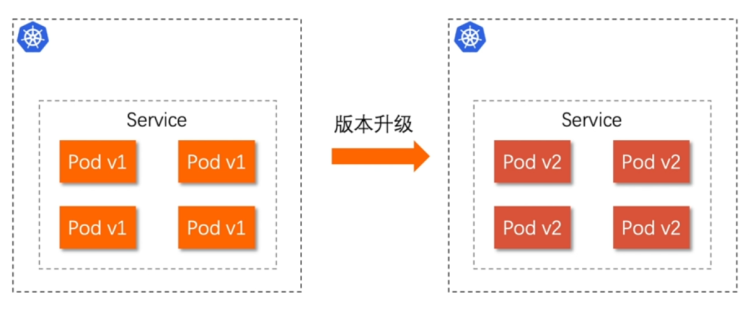

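Applied to Pods, a canary release adds new Pods first and removes old ones afterwards, so the total number of Pods never drops below the desired count. After some of the Pods have been updated, the rollout pauses. The remaining Pods are updated only once the new version has been confirmed to run correctly.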

7.6.2 Canary release methods

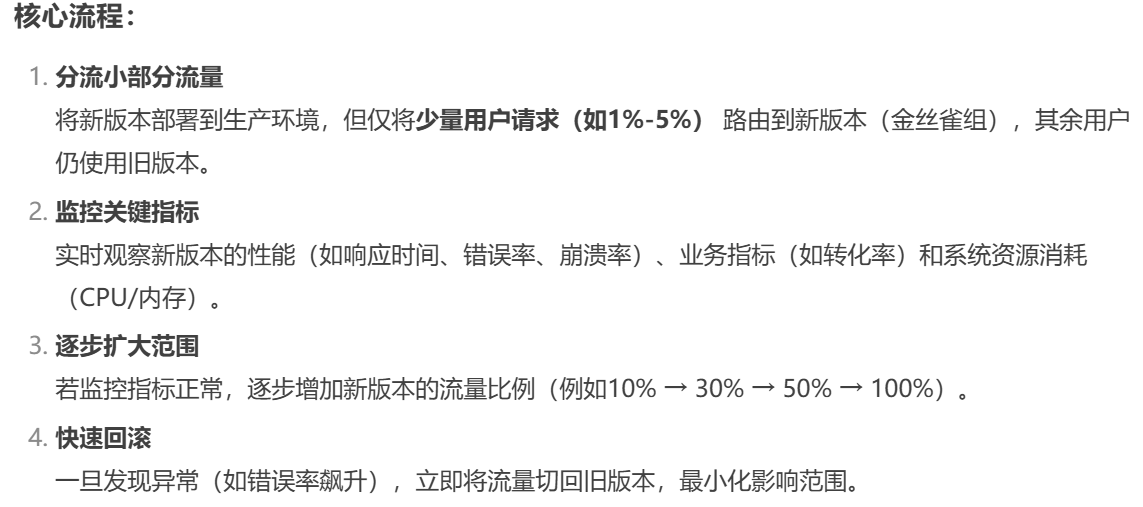

Of these, the header-based and weight-based methods are the most commonly used.

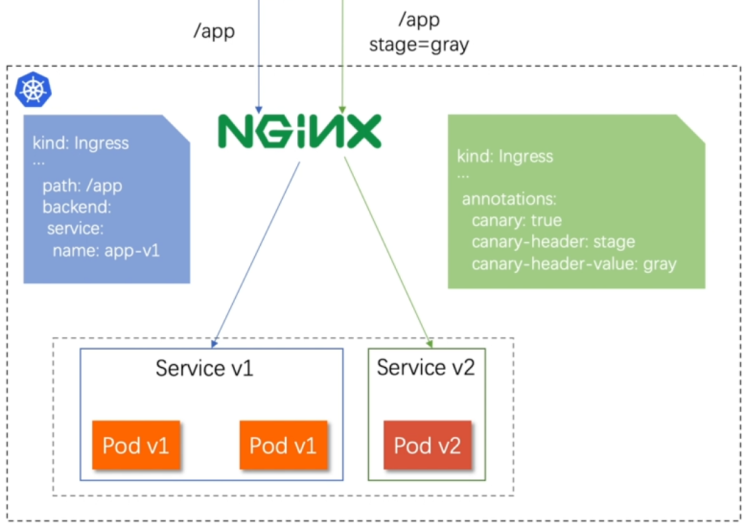

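7.6.2.1 Header-based (HTTP request header) canary

- Implemented through Annotation extensions

- Create a canary Ingress and configure the canary header key and value

- After the canary traffic has been verified, switch the main Ingress to the new version

- Previously, upgrades were done as rolling updates through the Deployment controller (25% at a time by default). A header-based canary makes the upgrade smoother: only requests that carry the chosen header key and value reach the new version, so you can test whether the new version has problems before regular traffic is moved to it.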
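The 25% default mentioned above refers to the Deployment RollingUpdate strategy, where maxSurge and maxUnavailable both default to 25%. According to the Kubernetes Deployment documentation, a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down. This rounding keeps the rollout "add first, then remove" even for very small Deployments. The arithmetic is sketched below; `rolling_bounds` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Pod bounds during a RollingUpdate with percentage settings.
#   $1  desired replicas
#   $2  percentage used for both maxSurge and maxUnavailable (default 25)
rolling_bounds() {
    local replicas="$1" pct="${2:-25}"
    local surge=$(( (replicas * pct + 99) / 100 ))    # maxSurge: rounded up
    local unavailable=$(( replicas * pct / 100 ))     # maxUnavailable: rounded down
    echo "maxSurge=$surge maxUnavailable=$unavailable max=$(( replicas + surge )) min=$(( replicas - unavailable ))"
}

rolling_bounds 10   # maxSurge=3 maxUnavailable=2 max=13 min=8
rolling_bounds 1    # maxSurge=1 maxUnavailable=0 max=2 min=1
```

With a single replica, the new Pod is added before the old one is removed, so the Deployment never drops below one running Pod.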

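1. Example

# Before this experiment, delete the Ingresses from the previous experiments so they do not interfere with the results

# Create the Ingress for version 1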

[root@k8s-master app]# vim canary-old.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
  name: myapp-v1-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv1
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f canary-old.yml
[root@k8s-master app]# kubectl describe ingress myapp-v1-ingress
  myapp.dhj.org
                 /   myappv1:80 (10.244.2.6:80)    # v1

# Create the header-based canary Ingress

[root@k8s-master app]# vim canary-new.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "version"
    nginx.ingress.kubernetes.io/canary-by-header-value: "2"
  name: myapp-v2-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: myapp.dhj.org
    http:
      paths:
      - backend:
          service:
            name: myappv2
            port:
              number: 80
        path: /
        pathType: Prefix

[root@k8s-master app]# kubectl apply -f canary-new.yml
[root@k8s-master mnt]# kubectl describe ingress myapp-v2-ingress
  myapp.dhj.org
                 /   myappv2:80 (10.244.1.6:80)    # v2
Annotations:     nginx.ingress.kubernetes.io/canary: true
                 nginx.ingress.kubernetes.io/canary-by-header: version
                 nginx.ingress.kubernetes.io/canary-by-header-value: 2
[root@k8s-master mnt]# vim /etc/hosts
172.25.254.50 myapp.dhj.org

# Test:

[root@reg ~]# curl myapp.dhj.org

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@reg ~]# curl -H "version: 2" myapp.dhj.org

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
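The two curl commands above show the header rule at work. According to the ingress-nginx canary documentation, canary rules are evaluated in the order canary-by-header, then canary-by-cookie, then canary-weight. When canary-by-header-value is set, any other header value is ignored and the next rule decides. The function below is a simplified model of that decision for canary-new.yml. It skips the cookie rule, and `pick_backend` and `CANARY_HEADER_VALUE` are hypothetical names. Its optional weight arguments model the weight rule and default to 0, which matches canary-new.yml as written here.

```shell
#!/usr/bin/env bash
# Hypothetical, simplified model of ingress-nginx canary precedence
# (header rule first, then weight; the cookie rule is omitted here).
CANARY_HEADER_VALUE="2"    # mirrors canary-by-header-value: "2"

#   $1  value of the "version" request header ("" when absent)
#   $2  canary weight out of 100 (default 0: no weight rule)
#   $3  random draw in [0, 100) standing in for the per-request dice roll
pick_backend() {
    local header="$1" weight="${2:-0}" roll="${3:-0}"
    if [[ -n "$header" && "$header" == "$CANARY_HEADER_VALUE" ]]; then
        echo "myappv2"    # header matches: always the canary
        return
    fi
    # Any other header value is ignored and the weight rule decides
    if (( roll < weight )); then
        echo "myappv2"
    else
        echo "myappv1"
    fi
}

pick_backend ""    # myappv1, like: curl myapp.dhj.org
pick_backend 2     # myappv2, like: curl -H "version: 2" myapp.dhj.org
```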

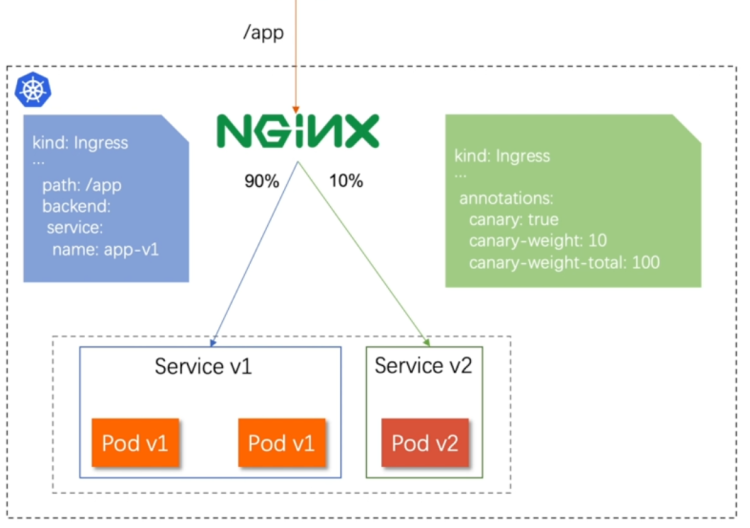

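7.6.2.2 Weight-based canary release

- Implemented through Annotation extensions

- Create a canary Ingress and configure the canary weight and the total weight

- After the canary traffic has been verified, switch the main Ingress to the new version

1. Example

# Weight-based canary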

[root@k8s-master app]# vim canary-new.yml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:annotations:nginx.ingress.kubernetes.io/canary: "true"nginx.ingress.kubernetes.io/canary-weight: "10" # 更改权重值nginx.ingress.kubernetes.io/canary-weight-total: "100"name: myapp-v2-ingress

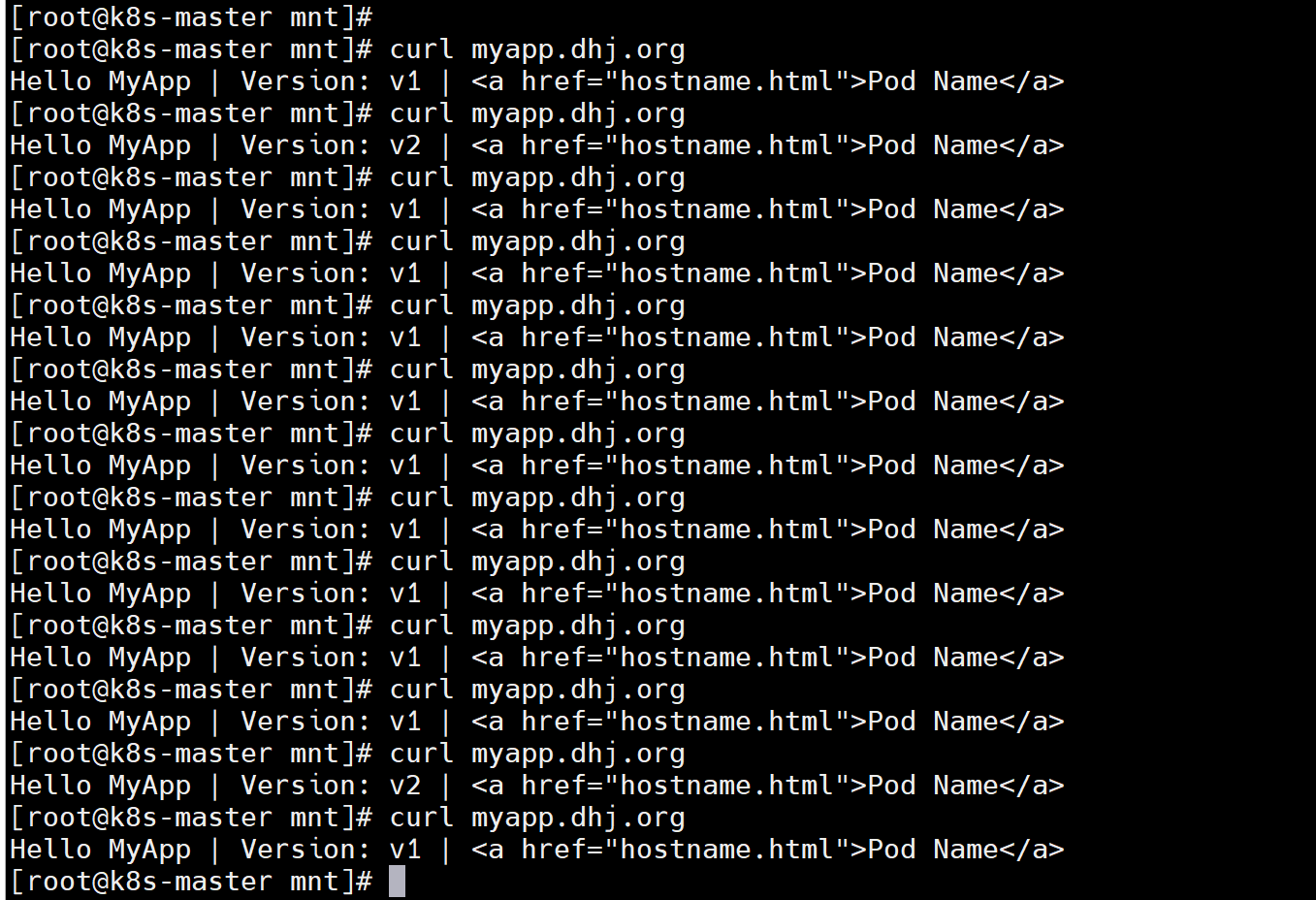

spec:ingressClassName: nginxrules:- host: myapp.dhj.orghttp:paths:- backend:service:name: myappv2port:number: 80path: /pathType: Prefix[root@k8s-master app]# kubectl apply -f canary-new.yml# 测试:

[root@reg ~]# vim check_ingress.sh

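#!/bin/bash
# Send 100 requests and count how many were answered by v1 and by v2
v1=0
v2=0
for (( i = 0; i < 100; i++ )); do
    # grep -c prints 1 if the page contains "v1", otherwise 0
    response=$(curl -s myapp.dhj.org | grep -c v1)
    v1=$(( v1 + response ))
    v2=$(( v2 + 1 - response ))
done
echo "v1:$v1, v2:$v2"

[root@k8s-master mnt]# sh check_ingress.sh
v1:88, v2:12

# After changing the weight, run the test again to see the difference (here the weight was changed to 50)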

[root@k8s-master mnt]# sh check_ingress.sh

v1:55, v2:45
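The measured splits (88/12 at weight 10, 55/45 at weight 50) are close to the configured ratio but do not match it exactly. Assume each request goes to the canary independently with probability p = weight / weight-total. The v2 count over n requests is then binomial, with standard deviation sqrt(n * p * (1 - p)). That is 3 for n = 100, p = 0.1, and 5 for p = 0.5, so both results fall within about one standard deviation of the expected 10 and 50. The offline simulation below reproduces that kind of spread without a cluster. `simulate_canary` is a hypothetical name; the simulation uses bash's `$RANDOM` and ignores its slight modulo bias.

```shell
#!/usr/bin/env bash
# Hypothetical offline model of weight-based canary routing.
#   $1  canary weight   $2  weight total (default 100)   $3  number of requests (default 100)
simulate_canary() {
    local weight="$1" total="${2:-100}" requests="${3:-100}"
    local v1=0 v2=0 i
    for (( i = 0; i < requests; i++ )); do
        # Each request independently goes to the canary with probability weight/total
        if (( RANDOM % total < weight )); then
            v2=$(( v2 + 1 ))
        else
            v1=$(( v1 + 1 ))
        fi
    done
    echo "v1:$v1, v2:$v2"
}

RANDOM=42             # seed bash's generator so a run can be repeated
simulate_canary 10    # roughly 90/10, varying by a few requests per run
simulate_canary 50    # roughly 50/50
```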