PyTorch + TensorBoard: Visualizing a CNN

Data preprocessing:

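```python
from torchvision import transforms

# Resize every image to 224x224, convert it to a [0, 1] tensor,
# then normalize each RGB channel with mean 0.5 and std 0.5
transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5]),
])
```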

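This resizes the images and normalizes them. With mean=0.5 and std=0.5, `Normalize` maps the [0, 1] values produced by `ToTensor()` to [-1, 1].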
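The arithmetic behind `Normalize` can be checked directly on a few sample pixel values. This is a minimal sketch that uses plain tensor operations rather than `transforms.Normalize` itself, and the sample values are made up.

```python
import torch

# ToTensor() yields pixel values in [0, 1];
# Normalize(mean=0.5, std=0.5) then computes (x - mean) / std for each channel.
mean, std = 0.5, 0.5
x = torch.tensor([0.0, 0.25, 0.5, 1.0])  # sample pixel values after ToTensor()
normalized = (x - mean) / std
print(normalized)  # values: -1.0, -0.5, 0.0, 1.0

# Undoing the normalization (x * std + mean) brings the values back to [0, 1]
restored = normalized * std + mean
print(torch.allclose(restored, x))  # True
```

As far as I know, TensorBoard's `add_images` treats float tensors as values in [0, 1] and clips anything outside that range. Un-normalizing with `x * std + mean` before logging is therefore one way to display the input with its original colors.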

Loading the dataset:

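```python
from torch.utils.data import DataLoader
from torchvision.datasets import ImageFolder

fold_path = '../images'  # ImageFolder expects one subfolder per class under this path

dataset = ImageFolder(fold_path, transform=transform)

dataloader = DataLoader(dataset, batch_size=1)  # one image per batch
```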
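`ImageFolder` expects a root folder that contains one subfolder per class (for example `../images/<class_name>/<image>`). It derives the integer labels from the sorted subfolder names, so `fold_path` needs at least one subfolder even when the labels are never used, as in this post. The stdlib sketch below reproduces only that class-to-index mapping. `folder_class_to_idx` and the folder names are hypothetical and are not part of torchvision.

```python
import os
import tempfile


# Hypothetical helper mimicking ImageFolder's class-to-index mapping:
# each immediate subdirectory of the root is a class, sorted by name,
# and each class gets its position in that sorted order as its label.
def folder_class_to_idx(root):
    with os.scandir(root) as entries:
        classes = sorted(e.name for e in entries if e.is_dir())
    return {name: i for i, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    # Made-up layout: <root>/songs/ and <root>/covers/, plus a stray file that is ignored
    for cls in ["songs", "covers"]:
        os.makedirs(os.path.join(root, cls))
    open(os.path.join(root, "readme.txt"), "w").close()
    mapping = folder_class_to_idx(root)

print(mapping)  # {'covers': 0, 'songs': 1}
```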

Define the network and instantiate it:

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 3, 1, 0)  # 3 -> 6 channels, 3x3 kernel, stride 1, padding 0
        self.bn1 = nn.BatchNorm2d(6)
        self.relu1 = nn.ReLU()
        self.pool1 = nn.MaxPool2d(2, 2)
        # self.pool2 = nn.AvgPool2d(2, 2)
        self.flatten1 = nn.Flatten()
        self.linear = nn.Linear(111 * 111 * 6, 2)  # 224 -> 222 (conv) -> 111 (pool), 6 channels

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu1(x)
        x = self.pool1(x)
        # x = self.pool2(x)
        x = self.flatten1(x)
        x = self.linear(x)
        return x


# Instantiate the network
net = Net()
```
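The `111*111*6` passed to `nn.Linear` follows from the usual output-size formula for convolution and pooling layers (dilation ignored). The small sketch below reproduces those numbers. `out_size` is a hypothetical helper name.

```python
# Output size along one spatial dimension of a conv/pool layer:
# out = floor((in + 2 * padding - kernel) / stride) + 1
def out_size(size, kernel, stride=1, padding=0):
    return (size + 2 * padding - kernel) // stride + 1


h = 224                                           # after transforms.Resize((224, 224))
after_conv = out_size(h, kernel=3, stride=1, padding=0)  # conv1: 3x3, stride 1, padding 0
after_pool = out_size(after_conv, kernel=2, stride=2)    # pool1: MaxPool2d(2, 2)
channels = 6                                      # conv1 has 6 output channels
in_features = channels * after_pool * after_pool

print(after_conv, after_pool, in_features)  # 222 111 73926
```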

Results:

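```python
output = torch.reshape(output, (-1, 3, 111, 111))
```

This is the reshape for the output after pooling. The pooled feature map has shape (1, 6, 111, 111), and the reshape regroups its 6 channels into two 3-channel 111x111 images that `add_images` can display.

Before pooling (after the convolution, BN, or ReLU, where the map is still 222x222), it is:

```python
output = torch.reshape(output, (-1, 3, 222, 222))
```

Either reshape only works if `forward` returns the feature map instead of the 2-class output of `self.linear`. For this visualization, `forward` has to stop after the layer being inspected, for example by returning `x` at that point.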
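To see exactly how `torch.reshape` splits the channels, here is a sketch on a random stand-in for the pooled feature map. It also shows one alternative that this post does not use: logging each of the 6 channels as a separate grayscale image, min-max scaled to [0, 1] per channel.

```python
import torch

# Random stand-in for the pool1 output: batch of 1, 6 channels, 111x111
fmap = torch.randn(1, 6, 111, 111)

# Reshaping to (-1, 3, 111, 111) packs channels 0-2 into image 0
# and channels 3-5 into image 1, so TensorBoard sees two RGB images
as_rgb = torch.reshape(fmap, (-1, 3, 111, 111))
print(as_rgb.shape)  # torch.Size([2, 3, 111, 111])

# Alternative: one grayscale image per channel, shape (6, 1, 111, 111),
# min-max scaled to [0, 1] per channel so the float values display sensibly
per_channel = fmap.transpose(0, 1)
lo = per_channel.amin(dim=(1, 2, 3), keepdim=True)
hi = per_channel.amax(dim=(1, 2, 3), keepdim=True)
per_channel = (per_channel - lo) / (hi - lo + 1e-8)
print(per_channel.shape)  # torch.Size([6, 1, 111, 111])
```

With the RGB grouping, the colors in each image come from three unrelated feature channels, so they carry no meaning as colors. The per-channel version avoids this at the cost of logging more images.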

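```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter('../hcy_logs')
cnt = 0

for data in dataloader:
    img, label = data
    print(img.shape)
    output = net(img)
    print(output.shape)
    # writer.add_images('input', img, cnt)
    # Regroup the 6-channel feature map into 3-channel images (use 222 instead of 111 before pooling)
    output = torch.reshape(output, (-1, 3, 111, 111))
    writer.add_images('output', output, cnt)
    cnt += 1

writer.close()
```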
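Instead of editing `forward` for every layer, you can also capture intermediate outputs with forward hooks, using `register_forward_hook`, a standard `nn.Module` method. The sketch below is an alternative to the approach in this post, not what the post does. `capture_feature_maps` is a hypothetical helper, and the input is a random stand-in image.

```python
import torch
import torch.nn as nn


# Same layers as the Net above, copied so this block runs on its own
class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 3, 1, 0)
        self.bn1 = nn.BatchNorm2d(6)
        self.relu1 = nn.ReLU()
        self.pool1 = nn.MaxPool2d(2, 2)
        self.flatten1 = nn.Flatten()
        self.linear = nn.Linear(111 * 111 * 6, 2)

    def forward(self, x):
        x = self.pool1(self.relu1(self.bn1(self.conv1(x))))
        return self.linear(self.flatten1(x))


def capture_feature_maps(net, layer_names, x):
    """Hypothetical helper: run x through net and return {name: output}
    for the named submodules, without changing forward()."""
    captured = {}
    handles = []
    for name in layer_names:
        module = getattr(net, name)

        # The default argument binds the current layer name to each hook
        def hook(mod, inputs, output, name=name):
            captured[name] = output.detach()

        handles.append(module.register_forward_hook(hook))
    try:
        with torch.no_grad():
            net(x)
    finally:
        for handle in handles:
            handle.remove()  # remove hooks so later forward passes are unaffected
    return captured


net = Net()
img = torch.randn(1, 3, 224, 224)  # stand-in for one batch from the DataLoader
maps = capture_feature_maps(net, ["conv1", "bn1", "relu1", "pool1"], img)
for name, fmap in maps.items():
    print(name, tuple(fmap.shape))
# conv1/bn1/relu1 -> (1, 6, 222, 222), pool1 -> (1, 6, 111, 111)
```

Inside the DataLoader loop, each captured map could then be passed through the same `torch.reshape(..., (-1, 3, H, W))` trick and logged with `writer.add_images(name, ..., cnt)`. That gives one TensorBoard tag per layer from a single forward pass.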

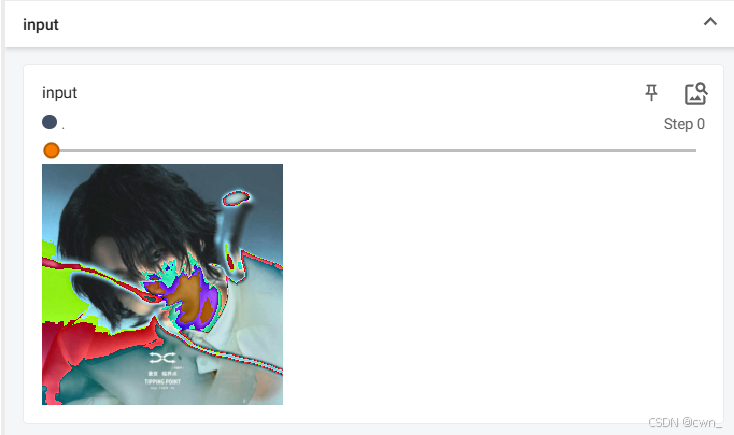

Original image (量变临界点, highly recommended, available on NetEase Cloud Music):

The original image after normalization:

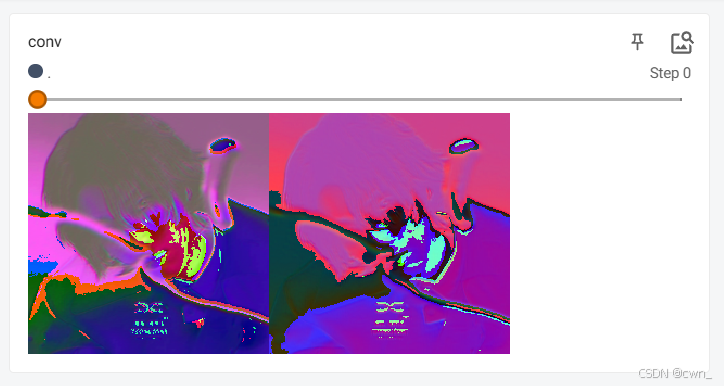

After the convolution (3x3 kernel, stride=1, padding=0):

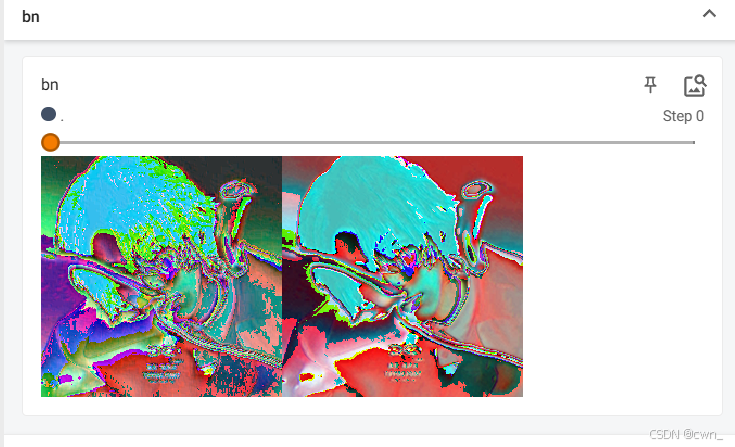

After batch normalization (BN):

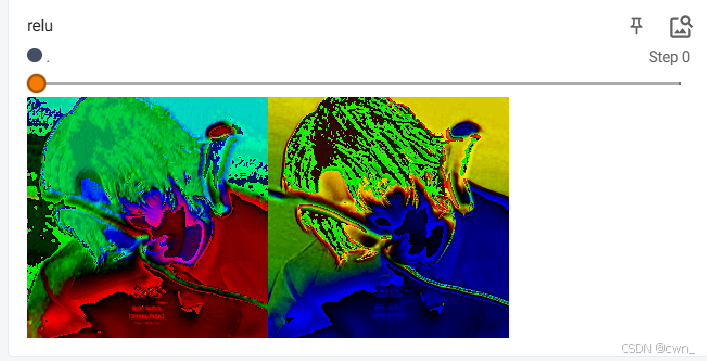
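You can check numerically what BN does to each channel. In training mode, `nn.BatchNorm2d` normalizes every channel with the mean and variance computed over the batch and spatial dimensions. It then applies a learnable scale (gamma) and shift (beta), initialized to 1 and 0. Here is a minimal sketch on a random stand-in for the conv1 output; the shift of 5 and scale of 3 are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm2d(6)                    # fresh layer: gamma = 1, beta = 0, training mode
x = torch.randn(1, 6, 222, 222) * 3 + 5   # stand-in for conv1 output, mean ~5, std ~3

# Per channel: y = (x - mean) / sqrt(var + eps) * gamma + beta,
# with mean and var taken over the (N, H, W) dimensions of this batch
y = bn(x)

per_channel_mean = y.mean(dim=(0, 2, 3))
per_channel_std = y.std(dim=(0, 2, 3))
print(per_channel_mean)  # all close to 0
print(per_channel_std)   # all close to 1
```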

After the ReLU nonlinearity:

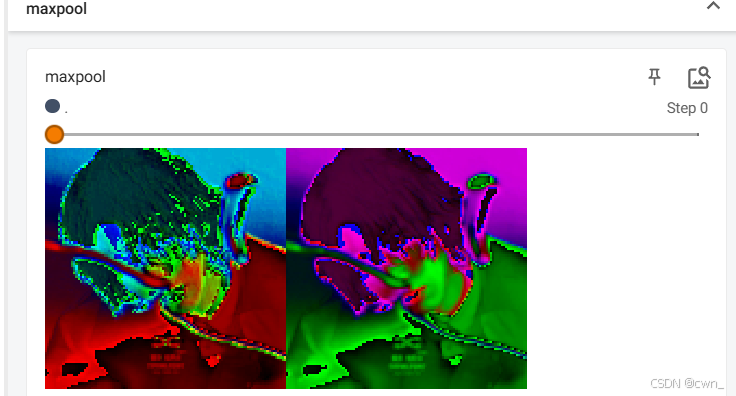

After max pooling:

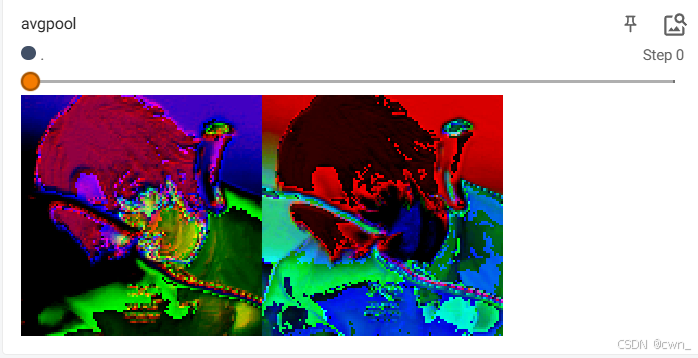
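The network also has an `nn.AvgPool2d(2, 2)` commented out as `pool2`. The difference between the two pooling layers is easiest to see on a tiny hand-written 4x4 input with arbitrarily chosen values.

```python
import torch
import torch.nn as nn

x = torch.tensor([[[[1., 2., 5., 6.],
                    [3., 4., 7., 8.],
                    [0., 0., 1., 1.],
                    [0., 8., 1., 3.]]]])  # shape (1, 1, 4, 4)

max_out = nn.MaxPool2d(2, 2)(x)  # largest value in each non-overlapping 2x2 window
avg_out = nn.AvgPool2d(2, 2)(x)  # mean of each non-overlapping 2x2 window

print(max_out)  # window maxima: 4, 8 / 8, 3
print(avg_out)  # window means: 2.5, 6.5 / 2.0, 1.5
```

In the bottom-left window, a single bright value (8) dominates the max-pooled output but is diluted to 2.0 by averaging. Max pooling keeps the strongest activations, while average pooling smooths the map.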

After average pooling: