用TensorFlow进行逻辑回归(五)

Softmax分类

#List3-50

%matplotlib inline

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

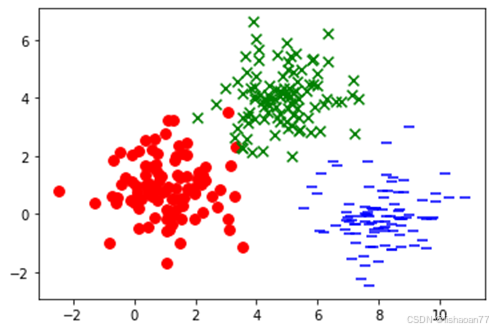

x1_label0 = np.random.normal(1, 1, (100, 1))

x2_label0 = np.random.normal(1, 1, (100, 1))

x1_label1 = np.random.normal(5, 1, (100, 1))

x2_label1 = np.random.normal(4, 1, (100, 1))

x1_label2 = np.random.normal(8, 1, (100, 1))

x2_label2 = np.random.normal(0, 1, (100, 1))

plt.scatter(x1_label0, x2_label0, c='r', marker='o', s=60)

plt.scatter(x1_label1, x2_label1, c='g', marker='x', s=60)

plt.scatter(x1_label2, x2_label2, c='b', marker='_', s=60)

plt.show()

xs_label0 = np.hstack((x1_label0, x2_label0))

xs_label1 = np.hstack((x1_label1, x2_label1))

xs_label2 = np.hstack((x1_label2, x2_label2))

xs = np.vstack((xs_label0, xs_label1, xs_label2))

labels = np.matrix([[1., 0., 0.]] * len(x1_label0) + [[0., 1., 0.]] * len(x1_label1) + [[0., 0., 1.]] * len(x1_label2))

arr = np.arange(xs.shape[0])

np.random.shuffle(arr)

xs = xs[arr, :]

labels = labels[arr, :]

test_x1_label0 = np.random.normal(1, 1, (10, 1))

test_x2_label0 = np.random.normal(1, 1, (10, 1))

test_x1_label1 = np.random.normal(5, 1, (10, 1))

test_x2_label1 = np.random.normal(4, 1, (10, 1))

test_x1_label2 = np.random.normal(8, 1, (10, 1))

test_x2_label2 = np.random.normal(0, 1, (10, 1))

test_xs_label0 = np.hstack((test_x1_label0, test_x2_label0))

test_xs_label1 = np.hstack((test_x1_label1, test_x2_label1))

test_xs_label2 = np.hstack((test_x1_label2, test_x2_label2))

test_xs = np.vstack((test_xs_label0, test_xs_label1, test_xs_label2))

test_labels = np.matrix([[1., 0., 0.]] * 10 + [[0., 1., 0.]] * 10 + [[0., 0., 1.]] * 10)

num_labels = 3

train_size, num_features = xs.shape

W = tf.Variable(tf.zeros([num_features, num_labels]))

b = tf.Variable(tf.zeros([num_labels]))

learning_rate = 0.01

training_epochs = 1000

batch_size = 100

optimizer = tf.optimizers.SGD(learning_rate)

for step in range(training_epochs * train_size // batch_size):

offset = (step * batch_size) % train_size

batch_xs = xs[offset:(offset + batch_size), :]

batch_labels = labels[offset:(offset + batch_size)]

X=tf.cast(batch_xs,tf.float32)

Y=tf.cast(batch_labels,tf.float32)

with tf.GradientTape() as g:

y_model = tf.nn.softmax(tf.matmul(X, W) + b)

cost = -tf.reduce_sum(Y * tf.math.log(y_model))

gradients = g.gradient(cost, [W, b])

# 更新梯度

optimizer.apply_gradients(zip(gradients, [W, b]))

if step % 100 == 0:

print (step, cost.numpy())

W_val = W

print('w', W_val.numpy())

b_val = b

print('b', b_val.numpy())

#train_op = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)

correct_prediction = tf.equal(tf.argmax(y_model, 1), tf.argmax(Y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

print("accuracy", accuracy.numpy())