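Introduction to Neural Networks

The Basic Skeleton of a Neural Network

1. Using nn.Module

- Every model must inherit from the Module class

- A subclass needs to override the initialization function (__init__) and the computation function (forward)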

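Example:

import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):  # inherit from the parent class Module
    def __init__(self):  # override the initialization function
        super().__init__()  # call the parent class initializer
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):  # the network's computation steps: forward propagation
        x = F.relu(self.conv1(x))  # x -> convolution -> nonlinearity
        return F.relu(self.conv2(x))  # x -> convolution -> nonlinearity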
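One way to check that this skeleton runs is to pass a dummy tensor through it and look at the output shape. The sketch below repeats the Model class so that it is self-contained. The 28x28 input size is an assumption chosen only for illustration; the original does not specify one.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)   # 1 input channel -> 20 output channels, 5x5 kernel
        self.conv2 = nn.Conv2d(20, 20, 5)  # 20 -> 20 channels, 5x5 kernel

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))


model = Model()

# Assumed input: a batch of one single-channel 28x28 image (illustrative size only).
x = torch.randn(1, 1, 28, 28)
y = model(x)

# Each 5x5 convolution without padding shrinks height and width by 4: 28 -> 24 -> 20.
print(y.shape)  # torch.Size([1, 20, 20, 20])
```

Because the last step is a ReLU, every value in y is non-negative.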

Code example:

import torch
from torch import nn

class Kun(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        output = input + 1  # add 1 to the input
        return output

kun = Kun()

x = torch.tensor(1.0)

output = kun(x)

print(output) # tensor(2.)
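Note that the example calls kun(x) rather than kun.forward(x). Calling the module object goes through nn.Module's __call__, which in turn runs forward. A minimal sketch of this, reusing the Kun class from the example (the variable names via_call and via_forward are made up for illustration):

```python
import torch
from torch import nn


class Kun(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        return input + 1


kun = Kun()
x = torch.tensor(1.0)

via_call = kun(x)             # goes through nn.Module.__call__, which runs forward
via_forward = kun.forward(x)  # calls forward directly, skipping any registered hooks

print(via_call, via_forward)
print(torch.equal(via_call, via_forward))
```

Both calls give the same result here. Calling the module object is the usual convention, since it also runs any hooks registered on the module.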

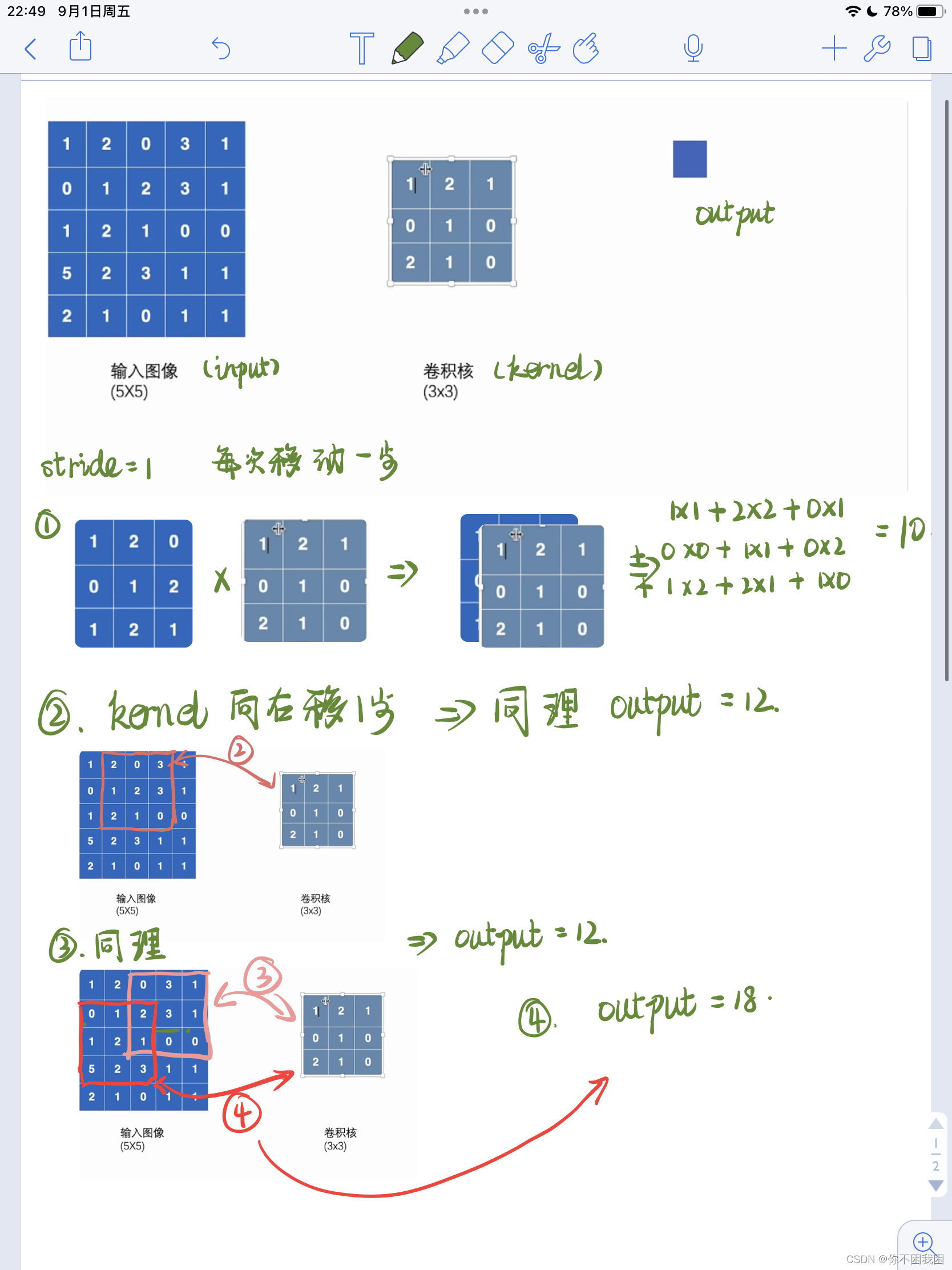

2. Convolution

Optional parameters of conv2d

Illustration of the convolution computation:

import torch
import torch.nn.functional as F

# Input image (5x5)
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])  # a 2D matrix stored as a tensor

# Convolution kernel
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

print(input.shape)
print(kernel.shape)

torch.Size([5, 5])

torch.Size([3, 3])

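Without reshaping, conv2d raises an error: Expected 3D(unbatched) or 4D(batched) input to conv2d, but got input of size: [5, 5]

F.conv2d expects the input as (minibatch, in_channels, height, width) and, with the default groups=1, the kernel as (out_channels, in_channels, kernel_height, kernel_width), so both tensors need to be reshaped: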

input = torch.reshape(input, (1, 1, 5, 5))

kernel = torch.reshape(kernel, (1, 1, 3, 3))

output = F.conv2d(input, kernel, stride=1)

print(output)

--------------------------------------------------------------------------

tensor([[[[10, 12, 12],[18, 16, 16],[13, 9, 3]]]])
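To make the computation concrete, the result can be reproduced by hand: slide the 3x3 kernel over the 5x5 input, multiply element by element at each position, and sum. The helper conv2d_naive below is a hypothetical function written for this illustration and is not part of PyTorch. The tensors are created as float32 here, which is a choice made for this sketch.

```python
import torch
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)


def conv2d_naive(image, kernel, stride=1):
    """Slide the kernel over a 2D image; multiply elementwise and sum at each position."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = torch.zeros(out_h, out_w, dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            window = image[top:top + kh, left:left + kw]
            out[i, j] = (window * kernel).sum()
    return out


manual = conv2d_naive(input, kernel)
reference = F.conv2d(input.reshape(1, 1, 5, 5), kernel.reshape(1, 1, 3, 3), stride=1)

print(manual)
print(torch.allclose(manual, reference[0, 0]))  # True
```

For example, the top-left output value is 1*1 + 2*2 + 0*1 + 0*0 + 1*1 + 2*0 + 1*2 + 2*1 + 1*0 = 10, which matches the first entry of the tensor printed above.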

stride sets the step size by which the kernel moves:

output2 = F.conv2d(input, kernel, stride=2)

print(output2)

----------------------------------------------------------------------------

tensor([[[[10, 12],[13, 3]]]])

padding pads the border of the input (with zeros by default):

output3 = F.conv2d(input, kernel, stride=1, padding=1)

print(output3)

-----------------------------------------------------------------------------

tensor([[[[ 1, 3, 4, 10, 8],[ 5, 10, 12, 12, 6],[ 7, 18, 16, 16, 8],[11, 13, 9, 3, 4],[14, 13, 9, 7, 4]]]])
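The output sizes seen so far (3x3, 2x2 and 5x5) follow the usual formula for one spatial dimension: floor((n + 2 * padding - k) / stride) + 1, where n is the input size and k the kernel size. The sketch below assumes the default dilation of 1. conv_output_size is a hypothetical helper written for this illustration, and the all-ones tensors are placeholders, since only the shapes matter here.

```python
import torch
import torch.nn.functional as F


def conv_output_size(n, k, stride=1, padding=0):
    """Output length along one dimension for input size n and kernel size k (dilation 1)."""
    return (n + 2 * padding - k) // stride + 1


# Placeholder tensors with the same shapes as the example: 5x5 input, 3x3 kernel.
input = torch.ones(1, 1, 5, 5)
kernel = torch.ones(1, 1, 3, 3)

results = []
for stride, padding in [(1, 0), (2, 0), (1, 1)]:
    expected = conv_output_size(5, 3, stride, padding)
    actual = F.conv2d(input, kernel, stride=stride, padding=padding).shape[-1]
    results.append((stride, padding, expected, actual))
    print(f"stride={stride}, padding={padding}: formula={expected}, conv2d={actual}")
```

With a 3x3 kernel and stride 1, padding=1 keeps the output the same size as the input, which is why output3 is 5x5.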

Complete example code:

import torch

import torch.nn.functional as F

# Input image (5x5)

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]])  # a 2D matrix stored as a tensor

# Convolution kernel

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]])

# Reshape the input and the kernel.
# Without reshaping, conv2d raises an error:
# Expected 3D(unbatched) or 4D(batched) input to conv2d, but got input of size: [5, 5]

input = torch.reshape(input, (1, 1, 5, 5))

kernel = torch.reshape(kernel, (1, 1, 3, 3))

# print(input.shape)   # torch.Size([1, 1, 5, 5])
# print(kernel.shape)  # torch.Size([1, 1, 3, 3])

output = F.conv2d(input, kernel, stride=1)
print(output)

output2 = F.conv2d(input, kernel, stride=2)
print(output2)

output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
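The functional F.conv2d used here and the nn.Conv2d layer from section 1 perform the same operation. The difference is that the layer stores its own kernel as a learnable weight. As a rough sketch, copying the kernel into a layer by hand should reproduce the functional result. Setting the weight manually, disabling the bias, and using float32 tensors are all choices made for this illustration, not something the original does.

```python
import torch
from torch import nn
import torch.nn.functional as F

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32).reshape(1, 1, 3, 3)

# A layer with one input channel, one output channel, and no bias term.
conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, stride=1, padding=0, bias=False)

# Overwrite the randomly initialized weight with the fixed kernel (illustration only).
with torch.no_grad():
    conv.weight.copy_(kernel)

layer_out = conv(input).detach()
functional_out = F.conv2d(input, kernel, stride=1)

print(layer_out)
print(torch.allclose(layer_out, functional_out))  # True
```

In a real model the weight is not set by hand; it starts from a random initialization and is updated during training.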