Enterprise MCP Deployment in Practice: A Complete DevOps Workflow from Development to Production


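🌟 Hello, I'm 摘星 (Zhaixing)!

🌈 Across a tech stack as vivid as a rainbow, I'm the collector of colors who never stops.

🦋 Every optimization is a flower I've cultivated; every feature is a butterfly I've set free.

🔬 Every code review is an observation under my microscope; every refactor is a chemistry experiment.

🎵 In the symphony of programming I am both conductor and performer. Let's play a splendid movement for programmers together in the concert hall of technology.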

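Table of Contents

Enterprise MCP Deployment in Practice: A Complete DevOps Workflow from Development to Production
Abstract
1. Enterprise MCP Deployment Architecture Design
1.1 Architecture Overview
1.2 Core Component Design
1.2.1 MCP Server Cluster Configuration
1.2.2 High-Availability Design Patterns
1.3 Security Architecture Design
2. Containerized Deployment and Kubernetes Integration
2.1 Docker Containerization
2.1.1 Multi-Stage Dockerfile
2.1.2 Container Optimization
2.2 Kubernetes Deployment Configuration
2.2.1 Namespace and Resource Configuration
2.2.2 Service and Ingress Configuration
2.3 Configuration and Secret Management
3. CI/CD Pipeline Configuration and Automated Testing
3.1 GitLab CI/CD Configuration
3.2 Automated Testing Strategy
3.2.1 Implementing the Test Pyramid
3.2.2 Unit Test Configuration
3.2.3 Integration Test Configuration
3.3 Performance and Load Testing
3.3.1 Performance Test Configuration
4. Production Monitoring and Operations
4.1 Monitoring Architecture
4.2 Prometheus Monitoring Configuration
4.2.1 Metric Definitions
4.2.2 Prometheus Configuration File
4.3 Alerting Rules
4.4 Grafana Dashboard Configuration
4.5 Log Management and Analysis
4.5.1 Structured Logging
4.5.2 Distributed Tracing
5. Operations Automation and Incident Handling
5.1 Operations Automation Scripts
5.2 Automatic Failure Recovery
5.3 Performance Tuning and Capacity Planning
5.3.1 Resource Usage Analysis
6. Security Hardening and Compliance
6.1 Security Scanning and Vulnerability Management
6.2 Network Security Policies
7. Cost Optimization and Resource Management
7.1 Resource Quota Management
7.2 Autoscaling Configuration
Summary
References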

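Abstract

I'm 摘星, a technical blogger who has worked on AI infrastructure for years, and I have seen both the potential of the Model Context Protocol (MCP) in enterprise applications and how hard it is to deploy. As AI Agent technology matures, more enterprises are integrating MCP into their core business systems, yet the path from development to production is often complex and unpredictable.

In past projects I have watched teams run into the same problems: performance bottlenecks caused by poor architecture, misconfigured resources in containerized deployments, and monitoring blind spots and operational difficulties in production. These problems hurt stability and performance and, more importantly, slow down how quickly an enterprise can iterate on its AI capabilities. A complete DevOps workflow for enterprise MCP deployment is therefore essential.

This article starts with deployment architecture design for enterprise environments, then covers practices for containerized deployment and Kubernetes integration, CI/CD pipeline configuration with automated testing, and monitoring and operations in production. The goal is to help engineering teams build a stable, efficient, and scalable MCP deployment that moves smoothly from development to production.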

1. 企业级MCP部署架构设计

1.1 整体架构概览

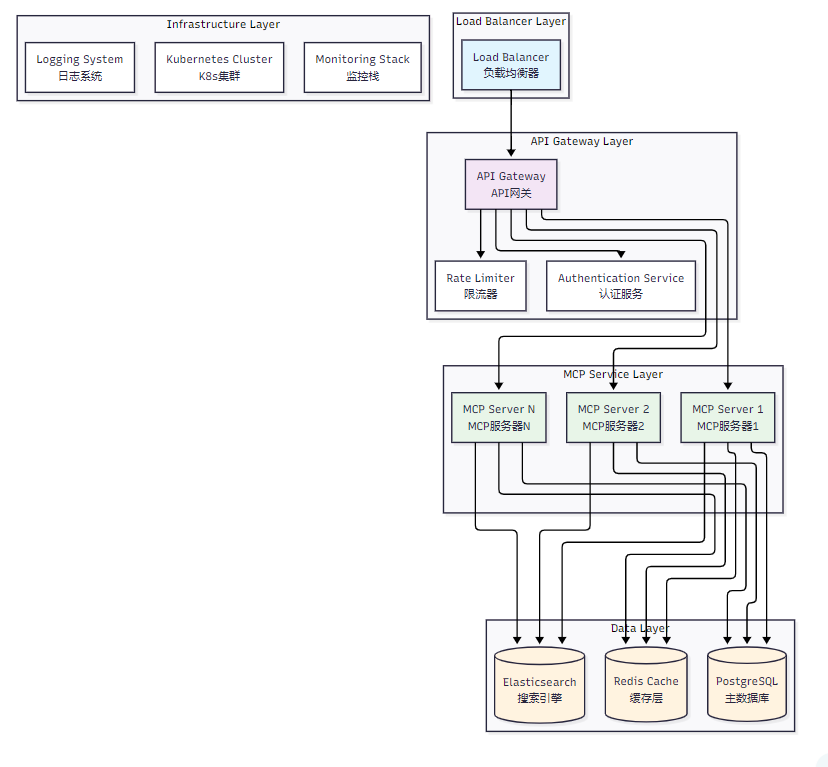

企业级MCP部署需要考虑高可用性、可扩展性、安全性和可维护性等多个维度。以下是推荐的整体架构设计:

图1:企业级MCP部署整体架构图

1.2 Core Component Design

1.2.1 MCP Server Cluster Configuration

    // mcp-server-config.ts

    interface MCPServerConfig {
      server: {
        port: number;
        host: string;
        maxConnections: number;
        timeout: number; // milliseconds
      };
      cluster: {
        instances: number;
        loadBalancing: 'round-robin' | 'least-connections' | 'ip-hash';
        healthCheck: {
          interval: number; // milliseconds
          timeout: number;  // milliseconds
          retries: number;
        };
      };
      resources: {
        memory: string;
        cpu: string;
        storage: string;
      };
    }

    const productionConfig: MCPServerConfig = {
      server: {
        port: 8080,
        host: '0.0.0.0',
        maxConnections: 1000,
        timeout: 30000
      },
      cluster: {
        instances: 3,
        loadBalancing: 'least-connections',
        healthCheck: {
          interval: 10000,
          timeout: 5000,
          retries: 3
        }
      },
      resources: {
        memory: '2Gi',
        cpu: '1000m',
        storage: '10Gi'
      }
    };

1.2.2 High-Availability Design Patterns

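| Component | High-availability strategy | Failover time | Data consistency |
| --- | --- | --- | --- |
| MCP server | Multiple instances + health checks | < 5 s | Eventual consistency |
| Database | Primary-replica replication + automatic failover | < 30 s | Strong consistency |
| Cache layer | Redis Cluster | < 2 s | Eventual consistency |
| Load balancer | Active-standby pair (hot standby) | < 1 s | Stateless |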
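The failover targets in the table depend partly on how quickly an unhealthy instance is detected. As a rough sketch, assuming a checker that probes every `interval` milliseconds and marks an instance down after `retries` consecutive failed probes (the function name and this detection model are illustrative assumptions, not part of the original configuration), the worst-case detection delay for the `healthCheck` values in section 1.2.1 can be estimated like this:

```python
def worst_case_detection_ms(interval_ms: int, timeout_ms: int, retries: int) -> int:
    """Upper bound on the time from a failure to 'marked unhealthy'.

    Assumed model: the instance fails right after a successful probe, so up to
    one full interval passes before the first failing probe starts. Each later
    probe starts one interval after the previous one, and the final probe can
    take up to `timeout_ms` before it is declared failed.
    """
    return retries * interval_ms + timeout_ms


# Values from productionConfig.cluster.healthCheck in section 1.2.1
print(worst_case_detection_ms(10_000, 5_000, 3))  # 35000
# A tighter, hypothetical setting
print(worst_case_detection_ms(1_000, 500, 3))     # 3500
```

Under this model the settings from section 1.2.1 can take up to 35 seconds to detect a failure, so a failover target below 5 seconds would need a much shorter interval and timeout, such as the second call above.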

1.3 Security Architecture Design

    # security-config.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mcp-security-config
    data:
      security.yaml: |
        authentication:
          type: "jwt"
          secret: "${JWT_SECRET}"
          expiration: "24h"
        authorization:
          rbac:
            enabled: true
            policies:
              - role: "admin"
                permissions: ["read", "write", "delete"]
              - role: "user"
                permissions: ["read"]
        encryption:
          tls:
            enabled: true
            cert: "/etc/ssl/certs/mcp.crt"
            key: "/etc/ssl/private/mcp.key"
        network:
          allowedOrigins:
            - "https://app.company.com"
            - "https://admin.company.com"
          rateLimiting:
            requests: 1000
            window: "1h"

2. Containerized Deployment and Kubernetes Integration

2.1 Docker Containerization

2.1.1 Multi-Stage Dockerfile

    # Dockerfile

    # Stage 1: build
    FROM node:18-alpine AS builder
    WORKDIR /app

    # Copy dependency manifests
    COPY package*.json ./
    COPY tsconfig.json ./

    # Install all dependencies; the build step needs devDependencies
    RUN npm ci

    # Copy source code
    COPY src/ ./src/

    # Build, then drop devDependencies so only production modules are copied
    RUN npm run build && npm prune --omit=dev && npm cache clean --force

    # Stage 2: runtime
    FROM node:18-alpine AS runtime

    # Create a non-root user
    RUN addgroup -g 1001 -S nodejs && \
        adduser -S mcp -u 1001 -G nodejs
    WORKDIR /app

    # Copy build output
    COPY --from=builder --chown=mcp:nodejs /app/dist ./dist
    COPY --from=builder --chown=mcp:nodejs /app/node_modules ./node_modules
    COPY --from=builder --chown=mcp:nodejs /app/package.json ./

    # Health check (BusyBox wget ships with Alpine; curl does not)
    HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
        CMD wget -q --spider http://localhost:8080/health || exit 1

    # Switch to the non-root user
    USER mcp

    # Expose the service port
    EXPOSE 8080

    # Start command
    CMD ["node", "dist/server.js"]

2.1.2 Container Optimization

    # docker-compose.yml
    version: '3.8'

    services:
      mcp-server:
        build:
          context: .
          dockerfile: Dockerfile
          target: runtime
        image: mcp-server:latest
        container_name: mcp-server
        restart: unless-stopped
        # Resource limits
        deploy:
          resources:
            limits:
              memory: 2G
              cpus: '1.0'
            reservations:
              memory: 1G
              cpus: '0.5'
        # Environment variables
        environment:
          - NODE_ENV=production
          - LOG_LEVEL=info
          - DB_HOST=postgres
          - REDIS_HOST=redis
        # Port mapping
        ports:
          - "8080:8080"
        # Health check (wget, because the Alpine base image has no curl)
        healthcheck:
          test: ["CMD", "wget", "-q", "--spider", "http://localhost:8080/health"]
          interval: 30s
          timeout: 10s
          retries: 3
          start_period: 40s
        # Dependencies
        depends_on:
          postgres:
            condition: service_healthy
          redis:
            condition: service_healthy
        # Network
        networks:
          - mcp-network

      postgres:
        image: postgres:15-alpine
        container_name: mcp-postgres
        restart: unless-stopped
        environment:
          - POSTGRES_DB=mcp
          - POSTGRES_USER=mcp_user
          - POSTGRES_PASSWORD=${DB_PASSWORD}
        volumes:
          - postgres_data:/var/lib/postgresql/data
        healthcheck:
          test: ["CMD-SHELL", "pg_isready -U mcp_user -d mcp"]
          interval: 10s
          timeout: 5s
          retries: 5
        networks:
          - mcp-network

      redis:
        image: redis:7-alpine
        container_name: mcp-redis
        restart: unless-stopped
        command: redis-server --appendonly yes --requirepass ${REDIS_PASSWORD}
        volumes:
          - redis_data:/data
        healthcheck:
          # Authenticate, since requirepass is set above
          test: ["CMD-SHELL", "redis-cli -a \"${REDIS_PASSWORD}\" ping | grep -q PONG"]
          interval: 10s
          timeout: 3s
          retries: 3
        networks:
          - mcp-network

    volumes:
      postgres_data:
      redis_data:

    networks:
      mcp-network:
        driver: bridge

2.2 Kubernetes Deployment Configuration
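The Deployment in section 2.2.1 uses a rolling update with `maxSurge: 1` and `maxUnavailable: 0`. As a small sketch of what those two settings mean for pod counts during a rollout (the helper names are hypothetical; the rounding follows the Kubernetes convention that percentage values round up for `maxSurge` and down for `maxUnavailable`):

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Resolve an absolute number or a percentage string such as '25%'."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        scaled = percent * replicas
        # Integer arithmetic avoids floating-point rounding surprises
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge, max_unavailable) -> dict:
    """Pod-count limits that hold while a rolling update is in progress."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


# Settings used by the mcp-server Deployment
print(rollout_bounds(3, 1, 0))
# {'max_total_pods': 4, 'min_available_pods': 3}
```

With these values the cluster briefly runs a fourth pod during each step and never drops below three available pods, which is why the namespace needs headroom for one extra replica's resource requests.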

2.2.1 Namespace and Resource Configuration

    # k8s/namespace.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: mcp-production
      labels:
        name: mcp-production
        environment: production
    ---
    # k8s/deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mcp-server
      namespace: mcp-production
      labels:
        app: mcp-server
        version: v1.0.0
    spec:
      replicas: 3
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 0
      selector:
        matchLabels:
          app: mcp-server
      template:
        metadata:
          labels:
            app: mcp-server
            version: v1.0.0
        spec:
          # Security context
          securityContext:
            runAsNonRoot: true
            runAsUser: 1001
            fsGroup: 1001
          # Containers
          containers:
            - name: mcp-server
              image: mcp-server:v1.0.0
              imagePullPolicy: Always
              # Ports
              ports:
                - containerPort: 8080
                  name: http
                  protocol: TCP
              # Environment variables
              env:
                - name: NODE_ENV
                  value: "production"
                - name: DB_HOST
                  valueFrom:
                    secretKeyRef:
                      name: mcp-secrets
                      key: db-host
                - name: DB_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mcp-secrets
                      key: db-password
              # Resource requests and limits
              resources:
                requests:
                  memory: "1Gi"
                  cpu: "500m"
                limits:
                  memory: "2Gi"
                  cpu: "1000m"
              # Health checks
              livenessProbe:
                httpGet:
                  path: /health
                  port: 8080
                initialDelaySeconds: 30
                periodSeconds: 10
                timeoutSeconds: 5
                failureThreshold: 3
              readinessProbe:
                httpGet:
                  path: /ready
                  port: 8080
                initialDelaySeconds: 5
                periodSeconds: 5
                timeoutSeconds: 3
                failureThreshold: 3
              # Volume mounts
              volumeMounts:
                - name: config-volume
                  mountPath: /app/config
                  readOnly: true
                - name: logs-volume
                  mountPath: /app/logs
          # Volumes
          volumes:
            - name: config-volume
              configMap:
                name: mcp-config
            - name: logs-volume
              emptyDir: {}
          # Node selection
          nodeSelector:
            kubernetes.io/os: linux
          # Tolerations
          tolerations:
            - key: "node-role.kubernetes.io/master"
              operator: "Exists"
              effect: "NoSchedule"

2.2.2 Service and Ingress Configuration

    # k8s/service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: mcp-server-service
      namespace: mcp-production
      labels:
        app: mcp-server
    spec:
      type: ClusterIP
      ports:
        - port: 80
          targetPort: 8080
          protocol: TCP
          name: http
      selector:
        app: mcp-server
    ---
    # k8s/ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: mcp-server-ingress
      namespace: mcp-production
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.ingress.kubernetes.io/use-regex: "true"
        # ingress-nginx rate limit: 100 requests per minute per client IP
        nginx.ingress.kubernetes.io/limit-rpm: "100"
        cert-manager.io/cluster-issuer: "letsencrypt-prod"
    spec:
      # Replaces the deprecated kubernetes.io/ingress.class annotation
      ingressClassName: nginx
      tls:
        - hosts:
            - mcp-api.company.com
          secretName: mcp-tls-secret
      rules:
        - host: mcp-api.company.com
          http:
            paths:
              - path: /api/v1/mcp
                pathType: Prefix
                backend:
                  service:
                    name: mcp-server-service
                    port:
                      number: 80

2.3 Configuration and Secret Management
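The `data` values in the Secret manifest in this section are base64 encoded. Base64 is an encoding, not encryption, so anyone who can read the manifest can recover the plaintext. A minimal sketch of the round trip, using Python's standard library (the helper names are illustrative):

```python
import base64


def encode_secret(value: str) -> str:
    """Encode a plaintext value the way a Secret's `data` field expects."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret(encoded: str) -> str:
    """Recover the plaintext from a Secret's `data` value."""
    return base64.b64decode(encoded).decode("utf-8")


print(encode_secret("postgresql-service"))        # cG9zdGdyZXNxbC1zZXJ2aWNl
print(decode_secret("cG9zdGdyZXNxbC1zZXJ2aWNl"))  # postgresql-service
```

Because the values decode this easily, the Secret manifest should not be committed to version control with real credentials; treat the values shown in this article as placeholders.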

    # k8s/configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mcp-config
      namespace: mcp-production
    data:
      app.yaml: |
        server:
          port: 8080
          timeout: 30000
        logging:
          level: info
          format: json
        features:
          rateLimiting: true
          caching: true
          metrics: true
    ---
    # k8s/secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: mcp-secrets
      namespace: mcp-production
    type: Opaque
    data:
      db-host: cG9zdGdyZXNxbC1zZXJ2aWNl              # base64 encoded
      db-password: c3VwZXJfc2VjcmV0X3Bhc3N3b3Jk      # base64 encoded
      jwt-secret: and0X3NlY3JldF9rZXlfZm9yX2F1dGg=   # base64 encoded

3. CI/CD Pipeline Configuration and Automated Testing

3.1 GitLab CI/CD Configuration

    # .gitlab-ci.yml
    stages:
      - test
      - build
      - security-scan
      - deploy-staging
      - integration-test
      - deploy-production

    variables:
      DOCKER_REGISTRY: registry.company.com
      IMAGE_NAME: mcp-server
      KUBERNETES_NAMESPACE_STAGING: mcp-staging
      KUBERNETES_NAMESPACE_PRODUCTION: mcp-production

    # Unit tests
    unit-test:
      stage: test
      image: node:18-alpine
      cache:
        paths:
          - node_modules/
      script:
        - npm ci
        - npm run test:unit
        - npm run test:coverage
      coverage: '/Lines\s*:\s*(\d+\.\d+)%/'
      artifacts:
        reports:
          coverage_report:
            coverage_format: cobertura
            path: coverage/cobertura-coverage.xml
        paths:
          - coverage/
        expire_in: 1 week
      only:
        - merge_requests
        - main
        - develop

    # Code quality checks
    code-quality:
      stage: test
      image: node:18-alpine
      script:
        - npm ci
        - npm run lint
        - npm run type-check
        - npm audit --audit-level moderate
      artifacts:
        reports:
          codequality: gl-code-quality-report.json
      only:
        - merge_requests
        - main

    # Build the Docker image
    build-image:
      stage: build
      image: docker:20.10.16
      services:
        - docker:20.10.16-dind
      variables:
        DOCKER_TLS_CERTDIR: "/certs"
      before_script:
        - echo $CI_REGISTRY_PASSWORD | docker login -u $CI_REGISTRY_USER --password-stdin $CI_REGISTRY
      script:
        # Build once and apply both tags
        - docker build -t $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA -t $CI_REGISTRY_IMAGE:latest .
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
        - docker push $CI_REGISTRY_IMAGE:latest
      only:
        - main
        - develop

    # Security scan
    security-scan:
      stage: security-scan
      image:
        name: aquasec/trivy:latest
        entrypoint: [""]
      script:
        # Full report in GitLab's container-scanning format (never fails the job)
        - trivy image --exit-code 0 --format template --template "@/contrib/gitlab.tpl" -o gl-container-scanning-report.json $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
        # Fail the job on HIGH or CRITICAL findings
        - trivy image --exit-code 1 --severity HIGH,CRITICAL $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA
      artifacts:
        reports:
          container_scanning: gl-container-scanning-report.json
      only:
        - main
        - develop

    # Deploy to staging
    deploy-staging:
      stage: deploy-staging
      image: bitnami/kubectl:latest
      environment:
        name: staging
        url: https://mcp-staging.company.com
      script:
        - kubectl config use-context $KUBE_CONTEXT_STAGING
        - kubectl set image deployment/mcp-server mcp-server=$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA -n $KUBERNETES_NAMESPACE_STAGING
        - kubectl rollout status deployment/mcp-server -n $KUBERNETES_NAMESPACE_STAGING --timeout=300s
      only:
        - develop

    # Integration tests
    integration-test:
      stage: integration-test
      image: node:18-alpine
      services:
        - postgres:13-alpine
        - redis:6-alpine
      variables:
        POSTGRES_DB: mcp_test
        POSTGRES_USER: test_user
        POSTGRES_PASSWORD: test_password
        REDIS_URL: redis://redis:6379
      script:
        - npm ci
        - npm run test:integration
        - npm run test:e2e
      artifacts:
        reports:
          junit: test-results.xml
      only:
        - develop
        - main

    # Deploy to production (manual approval)
    deploy-production:
      stage: deploy-production
      image: bitnami/kubectl:latest
      environment:
        name: production
        url: https://mcp-api.company.com
      script:
        - kubectl config use-context $KUBE_CONTEXT_PRODUCTION
        - kubectl set image deployment/mcp-server mcp-server=$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA -n $KUBERNETES_NAMESPACE_PRODUCTION
        - kubectl rollout status deployment/mcp-server -n $KUBERNETES_NAMESPACE_PRODUCTION --timeout=600s
      when: manual
      only:
        - main

3.2 Automated Testing Strategy

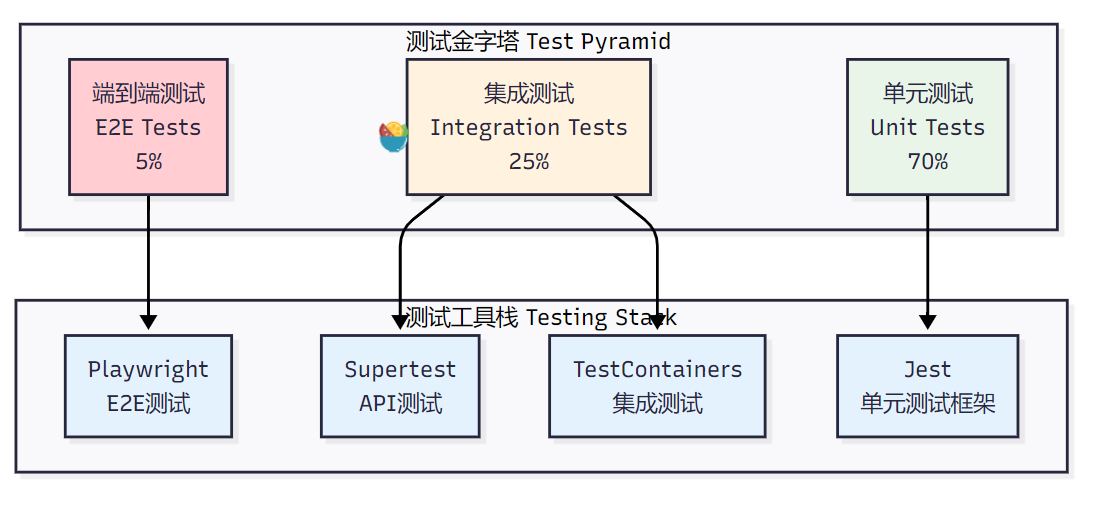

3.2.1 测试金字塔实现

图2:自动化测试金字塔架构图

3.2.2 Unit Test Configuration

    // tests/unit/mcp-server.test.ts
    import { MCPServer } from '../../src/server/mcp-server';
    import { MockToolProvider } from '../mocks/tool-provider.mock';

    describe('MCPServer', () => {
      let server: MCPServer;
      let mockToolProvider: MockToolProvider;

      beforeEach(() => {
        mockToolProvider = new MockToolProvider();
        server = new MCPServer({
          port: 8080,
          toolProviders: [mockToolProvider]
        });
      });

      afterEach(async () => {
        await server.close();
      });

      describe('Tool Execution', () => {
        it('should execute tool successfully', async () => {
          // Arrange
          const toolName = 'test-tool';
          const toolArgs = { input: 'test-input' };
          const expectedResult = { output: 'test-output' };
          mockToolProvider.mockTool(toolName, expectedResult);

          // Act
          const result = await server.executeTool(toolName, toolArgs);

          // Assert
          expect(result).toEqual(expectedResult);
          expect(mockToolProvider.getCallCount(toolName)).toBe(1);
        });

        it('should handle tool execution errors', async () => {
          // Arrange
          const toolName = 'failing-tool';
          const error = new Error('Tool execution failed');
          mockToolProvider.mockToolError(toolName, error);

          // Act & Assert
          await expect(server.executeTool(toolName, {}))
            .rejects.toThrow('Tool execution failed');
        });
      });

      describe('Resource Management', () => {
        it('should list available resources', async () => {
          // Arrange
          const expectedResources = [
            { uri: 'file://test.txt', name: 'Test File' },
            { uri: 'db://users', name: 'Users Database' }
          ];
          mockToolProvider.mockResources(expectedResources);

          // Act
          const resources = await server.listResources();

          // Assert
          expect(resources).toEqual(expectedResources);
        });
      });
    });

3.2.3 Integration Test Configuration

    // tests/integration/api.integration.test.ts
    import request from 'supertest';
    import { PostgreSqlContainer, StartedPostgreSqlContainer } from '@testcontainers/postgresql';
    import { RedisContainer, StartedRedisContainer } from '@testcontainers/redis';
    import { createApp } from '../../src/app';

    describe('MCP API Integration Tests', () => {
      let app: any;
      let postgresContainer: StartedPostgreSqlContainer;
      let redisContainer: StartedRedisContainer;

      beforeAll(async () => {
        // Start the test containers
        postgresContainer = await new PostgreSqlContainer()
          .withDatabase('mcp_test')
          .withUsername('test_user')
          .withPassword('test_password')
          .start();
        redisContainer = await new RedisContainer().start();

        // Create the application instance
        app = createApp({
          database: {
            host: postgresContainer.getHost(),
            port: postgresContainer.getPort(),
            database: 'mcp_test',
            username: 'test_user',
            password: 'test_password'
          },
          redis: {
            host: redisContainer.getHost(),
            port: redisContainer.getPort()
          }
        });
      }, 60000);

      afterAll(async () => {
        await postgresContainer.stop();
        await redisContainer.stop();
      });

      describe('POST /api/v1/mcp/tools/execute', () => {
        it('should execute tool successfully', async () => {
          const response = await request(app)
            .post('/api/v1/mcp/tools/execute')
            .send({
              name: 'file-reader',
              arguments: { path: '/test/file.txt' }
            })
            .expect(200);

          expect(response.body).toHaveProperty('result');
          expect(response.body.success).toBe(true);
        });

        it('should return error for invalid tool', async () => {
          const response = await request(app)
            .post('/api/v1/mcp/tools/execute')
            .send({
              name: 'non-existent-tool',
              arguments: {}
            })
            .expect(404);

          expect(response.body.error).toContain('Tool not found');
        });
      });

      describe('GET /api/v1/mcp/resources', () => {
        it('should list available resources', async () => {
          const response = await request(app)
            .get('/api/v1/mcp/resources')
            .expect(200);

          expect(response.body).toHaveProperty('resources');
          expect(Array.isArray(response.body.resources)).toBe(true);
        });
      });
    });

3.3 Performance and Load Testing
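The k6 script in section 3.3.1 describes its load profile as a list of stages, each with a duration and a target number of virtual users (VUs). As a rough sketch of how such a profile unfolds over time, assuming linear ramping between targets and a starting value of 0 VUs (the real starting value depends on k6 options; the helper names are illustrative):

```python
# (duration in seconds, target VUs), mirroring the stages in the k6 script
STAGES = [(120, 100), (300, 100), (120, 200), (300, 200), (120, 0)]


def total_duration(stages) -> int:
    """Total test length in seconds."""
    return sum(duration for duration, _ in stages)


def vus_at(stages, t: float, start_vus: float = 0) -> float:
    """Planned VU count at second `t`, interpolating linearly within a stage."""
    previous_target = start_vus
    elapsed = 0
    for duration, target in stages:
        if t <= elapsed + duration:
            progress = (t - elapsed) / duration
            return previous_target + (target - previous_target) * progress
        elapsed += duration
        previous_target = target
    return previous_target  # after the last stage


print(total_duration(STAGES))  # 960 seconds, i.e. 16 minutes
print(vus_at(STAGES, 60))      # 50.0, halfway through the warm-up ramp
print(vus_at(STAGES, 480))     # 150.0, halfway through the ramp to 200
```

The whole run takes 16 minutes, of which 10 minutes are spent at a steady 100 or 200 VUs; those plateaus are where the `p(95)<500` threshold is most meaningful.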

3.3.1 Performance Test Configuration

    // tests/performance/load-test.js
    import http from 'k6/http';
    import { check, sleep } from 'k6';
    import { Rate } from 'k6/metrics';

    // Custom metric
    const errorRate = new Rate('errors');

    export const options = {
      stages: [
        { duration: '2m', target: 100 }, // warm-up
        { duration: '5m', target: 100 }, // steady load
        { duration: '2m', target: 200 }, // ramp up
        { duration: '5m', target: 200 }, // steady high load
        { duration: '2m', target: 0 },   // ramp down
      ],
      thresholds: {
        http_req_duration: ['p(95)<500'], // 95% of requests finish within 500 ms
        http_req_failed: ['rate<0.1'],    // fewer than 10% failed requests
        errors: ['rate<0.1'],             // custom error rate below 10%
      },
    };

    export default function () {
      const payload = JSON.stringify({
        name: 'test-tool',
        arguments: { input: 'performance test data' }
      });

      const params = {
        headers: {
          'Content-Type': 'application/json',
          'Authorization': 'Bearer test-token'
        },
      };

      const response = http.post(
        'http://mcp-staging.company.com/api/v1/mcp/tools/execute',
        payload,
        params
      );

      const result = check(response, {
        'status is 200': (r) => r.status === 200,
        'response time < 500ms': (r) => r.timings.duration < 500,
        'response has result': (r) => r.json('result') !== undefined,
      });

      errorRate.add(!result);
      sleep(1);
    }

4. Production Monitoring and Operations

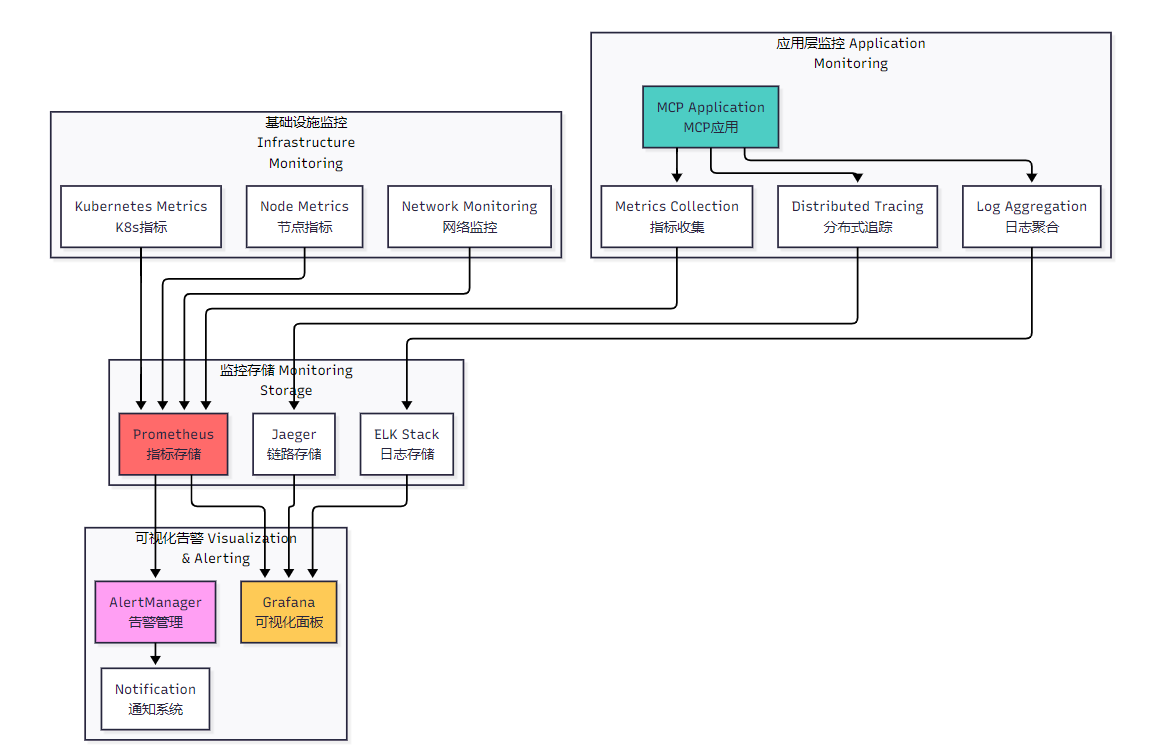

4.1 监控体系架构

图3:生产环境监控体系架构图

4.2 Prometheus Monitoring Configuration

4.2.1 Metric Definitions

    // src/monitoring/metrics.ts
    import { register, Counter, Histogram, Gauge } from 'prom-client';

    // prom-client adds each metric to the default registry when it is
    // constructed, so no explicit register.registerMetric() call is needed
    // (registering the same name a second time throws an error).
    export class MCPMetrics {
      // Request counter
      private requestCounter = new Counter({
        name: 'mcp_requests_total',
        help: 'Total number of MCP requests',
        labelNames: ['method', 'status', 'endpoint']
      });

      // Request duration histogram
      private requestDuration = new Histogram({
        name: 'mcp_request_duration_seconds',
        help: 'Duration of MCP requests in seconds',
        labelNames: ['method', 'endpoint'],
        buckets: [0.1, 0.5, 1, 2, 5, 10]
      });

      // Active connections
      private activeConnections = new Gauge({
        name: 'mcp_active_connections',
        help: 'Number of active MCP connections'
      });

      // Tool executions
      private toolExecutions = new Counter({
        name: 'mcp_tool_executions_total',
        help: 'Total number of tool executions',
        labelNames: ['tool_name', 'status']
      });

      // Resource accesses
      private resourceAccess = new Counter({
        name: 'mcp_resource_access_total',
        help: 'Total number of resource accesses',
        labelNames: ['resource_type', 'operation']
      });

      // Record a request (duration in seconds)
      recordRequest(method: string, endpoint: string, status: string, duration: number) {
        this.requestCounter.inc({ method, endpoint, status });
        this.requestDuration.observe({ method, endpoint }, duration);
      }

      // Record a tool execution
      recordToolExecution(toolName: string, status: string) {
        this.toolExecutions.inc({ tool_name: toolName, status });
      }

      // Record a resource access
      recordResourceAccess(resourceType: string, operation: string) {
        this.resourceAccess.inc({ resource_type: resourceType, operation });
      }

      // Update the active connection count
      setActiveConnections(count: number) {
        this.activeConnections.set(count);
      }

      // Render all metrics for the /metrics endpoint
      async getMetrics(): Promise<string> {
        return register.metrics();
      }
    }

4.2.2 Prometheus Configuration File

    # prometheus.yml
    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    rule_files:
      - "mcp_rules.yml"

    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - alertmanager:9093

    scrape_configs:
      # MCP server
      - job_name: 'mcp-server'
        static_configs:
          - targets: ['mcp-server:8080']
        metrics_path: '/metrics'
        scrape_interval: 10s
        scrape_timeout: 5s

      # Kubernetes API servers
      - job_name: 'kubernetes-apiservers'
        kubernetes_sd_configs:
          - role: endpoints
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https

      # Nodes
      - job_name: 'kubernetes-nodes'
        kubernetes_sd_configs:
          - role: node
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)

      # Pods
      - job_name: 'kubernetes-pods'
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)

4.3 Alerting Rules
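The latency alert in this section relies on `histogram_quantile(0.95, ...)`, which estimates a percentile from cumulative bucket counts instead of from raw samples. The following is a simplified sketch of that estimate, assuming linear interpolation inside the bucket that contains the target rank. The bucket bounds match `mcp_request_duration_seconds` from section 4.2.1; the counts are made-up illustration data, and the function is a stand-in for the idea, not Prometheus's actual implementation:

```python
import math


def histogram_quantile(q: float, buckets) -> float:
    """Estimate the q-quantile from (upper_bound, cumulative_count) pairs.

    `buckets` must be sorted by upper bound and end with the +Inf bucket.
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                return lower_bound  # cannot interpolate into +Inf
            if count == lower_count:
                return upper_bound  # empty bucket, nothing to interpolate
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound


# Hypothetical cumulative counts for 100 observed requests
observed = [(0.1, 50), (0.5, 80), (1, 90), (2, 96), (5, 99), (10, 100), (math.inf, 100)]
p95 = histogram_quantile(0.95, observed)
print(round(p95, 3))  # 1.833
```

With this sample the estimated p95 is about 1.83 seconds, above the 1-second alert threshold. The estimate can only be as precise as the bucket layout: everything between 1 s and 2 s is treated as evenly spread, so adding a bucket near the alert threshold improves accuracy.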

    # mcp_rules.yml
    groups:
      - name: mcp_alerts
        rules:
          # High error rate
          # sum() aggregates across label sets so the 5xx series can be
          # divided by the total; without it the two sides do not match.
          - alert: MCPHighErrorRate
            expr: |
              sum(rate(mcp_requests_total{status=~"5.."}[5m]))
                / sum(rate(mcp_requests_total[5m])) > 0.05
            for: 2m
            labels:
              severity: critical
            annotations:
              summary: "MCP server error rate is too high"
              description: "The MCP server error rate exceeded 5% over the last 5 minutes. Current value: {{ $value | humanizePercentage }}"

          # High latency
          - alert: MCPHighLatency
            expr: histogram_quantile(0.95, sum by (le) (rate(mcp_request_duration_seconds_bucket[5m]))) > 1
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: "MCP server response time is too high"
              description: "The MCP server p95 response time exceeded 1 second. Current value: {{ $value }}s"

          # Service down
          - alert: MCPServiceDown
            expr: up{job="mcp-server"} == 0
            for: 1m
            labels:
              severity: critical
            annotations:
              summary: "MCP server is unavailable"
              description: "MCP server {{ $labels.instance }} has not responded for more than 1 minute"

          # High memory usage
          - alert: MCPHighMemoryUsage
            expr: (container_memory_usage_bytes{pod=~"mcp-server-.*"} / container_spec_memory_limit_bytes) > 0.85
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: "MCP server memory usage is too high"
              description: "Pod {{ $labels.pod }} memory usage exceeded 85%. Current value: {{ $value | humanizePercentage }}"

          # High CPU usage
          - alert: MCPHighCPUUsage
            expr: rate(container_cpu_usage_seconds_total{pod=~"mcp-server-.*"}[5m]) > 0.8
            for: 5m
            labels:
              severity: warning
            annotations:
              summary: "MCP server CPU usage is too high"
              description: "Pod {{ $labels.pod }} CPU usage exceeded 80%. Current value: {{ $value | humanizePercentage }}"

4.4 Grafana Dashboard Configuration
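The error-rate panel in this dashboard shows the share of 5xx responses as a percentage and colors it using the thresholds `1,5`. A short sketch of that arithmetic, assuming per-status request rates have already been computed (the sample rates and the level names are made up for illustration):

```python
def error_rate_percent(rates_by_status: dict) -> float:
    """Share of 5xx requests, as a percentage of all requests."""
    total = sum(rates_by_status.values())
    if total == 0:
        return 0.0
    errors = sum(rate for status, rate in rates_by_status.items()
                 if status.startswith("5"))
    return errors / total * 100


def threshold_level(value: float, warn: float = 1.0, critical: float = 5.0) -> str:
    """Map a percentage onto the panel's two thresholds."""
    if value >= critical:
        return "critical"
    if value >= warn:
        return "warning"
    return "ok"


# Hypothetical requests per second, keyed by HTTP status code
rates = {"200": 95.0, "404": 3.0, "500": 1.5, "503": 0.5}
rate = error_rate_percent(rates)
print(rate, threshold_level(rate))  # 2.0 warning
```

The numerator and the denominator are each summed over all series before dividing. Dividing per series instead would compare each 5xx series only with itself.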

    {
      "dashboard": {
        "id": null,
        "title": "MCP Server Monitoring Dashboard",
        "tags": ["mcp", "monitoring"],
        "timezone": "browser",
        "panels": [
          {
            "id": 1,
            "title": "Request rate",
            "type": "graph",
            "targets": [
              {
                "expr": "rate(mcp_requests_total[5m])",
                "legendFormat": "Total request rate"
              },
              {
                "expr": "rate(mcp_requests_total{status=~\"2..\"}[5m])",
                "legendFormat": "Successful request rate"
              },
              {
                "expr": "rate(mcp_requests_total{status=~\"5..\"}[5m])",
                "legendFormat": "Error request rate"
              }
            ],
            "yAxes": [{"label": "requests/s", "min": 0}],
            "gridPos": {"h": 8, "w": 12, "x": 0, "y": 0}
          },
          {
            "id": 2,
            "title": "Response time distribution",
            "type": "graph",
            "targets": [
              {
                "expr": "histogram_quantile(0.50, rate(mcp_request_duration_seconds_bucket[5m]))",
                "legendFormat": "50th percentile"
              },
              {
                "expr": "histogram_quantile(0.95, rate(mcp_request_duration_seconds_bucket[5m]))",
                "legendFormat": "95th percentile"
              },
              {
                "expr": "histogram_quantile(0.99, rate(mcp_request_duration_seconds_bucket[5m]))",
                "legendFormat": "99th percentile"
              }
            ],
            "yAxes": [{"label": "seconds", "min": 0}],
            "gridPos": {"h": 8, "w": 12, "x": 12, "y": 0}
          },
          {
            "id": 3,
            "title": "Error rate",
            "type": "singlestat",
            "targets": [
              {
                "expr": "sum(rate(mcp_requests_total{status=~\"5..\"}[5m])) / sum(rate(mcp_requests_total[5m])) * 100",
                "legendFormat": "Error rate"
              }
            ],
            "valueName": "current",
            "format": "percent",
            "thresholds": "1,5",
            "colorBackground": true,
            "gridPos": {"h": 4, "w": 6, "x": 0, "y": 8}
          },
          {
            "id": 4,
            "title": "Active connections",
            "type": "singlestat",
            "targets": [
              {
                "expr": "mcp_active_connections",
                "legendFormat": "Active connections"
              }
            ],
            "valueName": "current",
            "format": "short",
            "gridPos": {"h": 4, "w": 6, "x": 6, "y": 8}
          }
        ],
        "time": {"from": "now-1h", "to": "now"},
        "refresh": "5s"
      }
    }

4.5 Log Management and Analysis
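Structured logging means emitting each log event as a machine-parseable record (typically JSON) with stable field names, so that a backend such as Elasticsearch can index and filter on them. Before the winston-based logger in section 4.5.1, here is a minimal standard-library sketch of the same idea; the logger name `mcp-sketch`, the `fields` key, and the sample values are assumptions made for illustration:

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname.lower(),
            "message": record.getMessage(),
            "service": "mcp-server",
        }
        # Extra structured fields are passed via `extra={"fields": {...}}`
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


def build_logger(stream) -> logging.Logger:
    logger = logging.getLogger("mcp-sketch")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()
log = build_logger(buffer)
log.info(
    "Tool execution completed",
    extra={"fields": {"toolName": "file-reader", "duration": 0.12, "traceId": "abc123"}},
)
print(buffer.getvalue().strip())
```

Each line is a complete JSON object, so fields such as `toolName` or `traceId` can be queried directly instead of being parsed out of free text.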

4.5.1 Structured Logging

    // src/logging/logger.ts
    import winston from 'winston';
    import { ElasticsearchTransport } from 'winston-elasticsearch';

    export class MCPLogger {
      private logger: winston.Logger;

      constructor() {
        const esTransport = new ElasticsearchTransport({
          level: 'info',
          clientOpts: {
            node: process.env.ELASTICSEARCH_URL || 'http://elasticsearch:9200'
          },
          index: 'mcp-logs',
          indexTemplate: {
            name: 'mcp-logs-template',
            pattern: 'mcp-logs-*',
            settings: {
              number_of_shards: 1,
              number_of_replicas: 1
            },
            mappings: {
              properties: {
                '@timestamp': { type: 'date' },
                level: { type: 'keyword' },
                message: { type: 'text' },
                service: { type: 'keyword' },
                traceId: { type: 'keyword' },
                userId: { type: 'keyword' },
                toolName: { type: 'keyword' },
                duration: { type: 'float' }
              }
            }
          }
        });

        this.logger = winston.createLogger({
          level: process.env.LOG_LEVEL || 'info',
          format: winston.format.combine(
            winston.format.timestamp(),
            winston.format.errors({ stack: true }),
            winston.format.json()
          ),
          defaultMeta: {
            service: 'mcp-server',
            version: process.env.APP_VERSION || '1.0.0'
          },
          transports: [
            new winston.transports.Console({
              format: winston.format.combine(
                winston.format.colorize(),
                winston.format.simple()
              )
            }),
            esTransport
          ]
        });
      }

      info(message: string, meta?: any) {
        this.logger.info(message, meta);
      }

      error(message: string, error?: Error, meta?: any) {
        this.logger.error(message, { error: error?.stack, ...meta });
      }

      warn(message: string, meta?: any) {
        this.logger.warn(message, meta);
      }

      debug(message: string, meta?: any) {
        this.logger.debug(message, meta);
      }

      // Log a tool execution
      logToolExecution(toolName: string, userId: string, duration: number, success: boolean, traceId?: string) {
        this.info('Tool execution completed', {
          toolName,
          userId,
          duration,
          success,
          traceId,
          type: 'tool_execution'
        });
      }

      // Log a resource access
      logResourceAccess(resourceUri: string, operation: string, userId: string, traceId?: string) {
        this.info('Resource accessed', {
          resourceUri,
          operation,
          userId,
          traceId,
          type: 'resource_access'
        });
      }
    }

4.5.2 Distributed Tracing

    // src/tracing/tracer.ts
    import { NodeSDK } from '@opentelemetry/sdk-node';
    import { JaegerExporter } from '@opentelemetry/exporter-jaeger';
    import { Resource } from '@opentelemetry/resources';
    import { SemanticResourceAttributes } from '@opentelemetry/semantic-conventions';
    import { trace, context, SpanStatusCode } from '@opentelemetry/api';

    export class MCPTracer {
      private sdk: NodeSDK;
      private tracer: any;

      constructor() {
        const jaegerExporter = new JaegerExporter({
          endpoint: process.env.JAEGER_ENDPOINT || 'http://jaeger:14268/api/traces',
        });

        this.sdk = new NodeSDK({
          resource: new Resource({
            [SemanticResourceAttributes.SERVICE_NAME]: 'mcp-server',
            [SemanticResourceAttributes.SERVICE_VERSION]: process.env.APP_VERSION || '1.0.0',
          }),
          traceExporter: jaegerExporter,
        });

        this.sdk.start();
        this.tracer = trace.getTracer('mcp-server');
      }

      // Wrap a tool execution in a span
      async traceToolExecution<T>(
        toolName: string,
        operation: () => Promise<T>,
        attributes?: Record<string, string | number>
      ): Promise<T> {
        return this.tracer.startActiveSpan(`tool.${toolName}`, async (span: any) => {
          try {
            span.setAttributes({
              'tool.name': toolName,
              'operation.type': 'tool_execution',
              ...attributes
            });
            const result = await operation();
            span.setStatus({ code: SpanStatusCode.OK });
            return result;
          } catch (error) {
            span.setStatus({
              code: SpanStatusCode.ERROR,
              message: error instanceof Error ? error.message : 'Unknown error'
            });
            span.recordException(error as Error);
            throw error;
          } finally {
            span.end();
          }
        });
      }

      // Wrap a resource access in a span
      async traceResourceAccess<T>(
        resourceUri: string,
        operation: string,
        handler: () => Promise<T>
      ): Promise<T> {
        return this.tracer.startActiveSpan(`resource.${operation}`, async (span: any) => {
          try {
            span.setAttributes({
              'resource.uri': resourceUri,
              'resource.operation': operation,
              'operation.type': 'resource_access'
            });
            const result = await handler();
            span.setStatus({ code: SpanStatusCode.OK });
            return result;
          } catch (error) {
            span.setStatus({
              code: SpanStatusCode.ERROR,
              message: error instanceof Error ? error.message : 'Unknown error'
            });
            span.recordException(error as Error);
            throw error;
          } finally {
            span.end();
          }
        });
      }

      // Return the current trace ID, if a span is active
      getCurrentTraceId(): string | undefined {
        const activeSpan = trace.getActiveSpan();
        return activeSpan?.spanContext().traceId;
      }
    }

5. Operations Automation and Incident Handling

5.1 Operations Automation Scripts

    #!/bin/bash
    # scripts/deploy.sh - automated deployment script
    set -e

    # Configuration
    NAMESPACE=${NAMESPACE:-"mcp-production"}
    IMAGE_TAG=${IMAGE_TAG:-"latest"}
    KUBECTL_TIMEOUT=${KUBECTL_TIMEOUT:-"300s"}

    # Colored output
    RED='\033[0;31m'
    GREEN='\033[0;32m'
    YELLOW='\033[1;33m'
    NC='\033[0m' # No Color

    log_info() {
        echo -e "${GREEN}[INFO]${NC} $1"
    }

    log_warn() {
        echo -e "${YELLOW}[WARN]${NC} $1"
    }

    log_error() {
        echo -e "${RED}[ERROR]${NC} $1"
    }

    # Check prerequisites
    check_prerequisites() {
        log_info "Checking deployment prerequisites..."

        # kubectl must be installed
        if ! command -v kubectl &> /dev/null; then
            log_error "kubectl is not installed"
            exit 1
        fi

        # The cluster must be reachable
        if ! kubectl cluster-info &> /dev/null; then
            log_error "Cannot connect to the Kubernetes cluster"
            exit 1
        fi

        # Create the namespace if it is missing
        if ! kubectl get namespace "$NAMESPACE" &> /dev/null; then
            log_warn "Namespace $NAMESPACE does not exist, creating it..."
            kubectl create namespace "$NAMESPACE"
        fi

        log_info "Prerequisite check complete"
    }

    # Deploy configuration
    deploy_configs() {
        log_info "Deploying configuration..."
        kubectl apply -f k8s/configmap.yaml -n "$NAMESPACE"
        kubectl apply -f k8s/secret.yaml -n "$NAMESPACE"
        log_info "Configuration deployed"
    }

    # Deploy the application
    deploy_application() {
        log_info "Deploying the MCP server..."

        # Update the image tag
        sed -i.bak "s|image: mcp-server:.*|image: mcp-server:$IMAGE_TAG|g" k8s/deployment.yaml

        # Apply the manifests
        kubectl apply -f k8s/deployment.yaml -n "$NAMESPACE"
        kubectl apply -f k8s/service.yaml -n "$NAMESPACE"
        kubectl apply -f k8s/ingress.yaml -n "$NAMESPACE"

        # Wait for the rollout to finish
        log_info "Waiting for the rollout to finish..."
        kubectl rollout status deployment/mcp-server -n "$NAMESPACE" --timeout="$KUBECTL_TIMEOUT"

        # Restore the original manifest
        mv k8s/deployment.yaml.bak k8s/deployment.yaml
        log_info "Application deployed"
    }

    # Health check
    health_check() {
        log_info "Running health checks..."

        # Check pod status
        READY_PODS=$(kubectl get pods -n "$NAMESPACE" -l app=mcp-server -o jsonpath='{.items[*].status.conditions[?(@.type=="Ready")].status}' | grep -o True | wc -l)
        TOTAL_PODS=$(kubectl get pods -n "$NAMESPACE" -l app=mcp-server --no-headers | wc -l)

        if [ "$READY_PODS" -eq "$TOTAL_PODS" ] && [ "$TOTAL_PODS" -gt 0 ]; then
            log_info "Health check passed: $READY_PODS/$TOTAL_PODS pods ready"
        else
            log_error "Health check failed: $READY_PODS/$TOTAL_PODS pods ready"
            # Return instead of exit so that main() can run the rollback
            return 1
        fi

        # Check the service endpoint
        SERVICE_IP=$(kubectl get service mcp-server-service -n "$NAMESPACE" -o jsonpath='{.spec.clusterIP}')
        if curl -f "http://$SERVICE_IP/health" &> /dev/null; then
            log_info "Service endpoint health check passed"
        else
            log_warn "Service endpoint health check failed, continuing anyway"
        fi
    }

    # Rollback
    rollback() {
        log_warn "Rolling back..."
        kubectl rollout undo deployment/mcp-server -n "$NAMESPACE"
        kubectl rollout status deployment/mcp-server -n "$NAMESPACE" --timeout="$KUBECTL_TIMEOUT"
        log_info "Rollback complete"
    }

    # Main
    main() {
        log_info "Starting MCP server deployment..."

        check_prerequisites
        deploy_configs
        deploy_application

        # Roll back automatically when the health check fails
        if ! health_check; then
            log_error "Deployment failed, rolling back..."
            rollback
            exit 1
        fi

        log_info "MCP server deployed successfully!"

        # Show deployment information
        echo ""
        echo "Deployment info:"
        echo "- Namespace: $NAMESPACE"
        echo "- Image tag: $IMAGE_TAG"
        echo "- Pod status:"
        kubectl get pods -n "$NAMESPACE" -l app=mcp-server
        echo ""
        echo "- Service status:"
        kubectl get services -n "$NAMESPACE" -l app=mcp-server
    }

    # Error handling
    trap 'log_error "An error occurred during deployment, exit code: $?"' ERR

    # Run
    main "$@"

5.2 Automatic Failure Recovery
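Two small rules drive the recovery script in this section: retries are spaced with exponential backoff (`2 ** attempt` seconds), and the overall status is derived from how many checks failed. The sketch below isolates both rules so their behavior is easy to see; the function names are illustrative, and the logic mirrors `check_health` and `get_system_health`:

```python
def backoff_delays(retries: int) -> list:
    """Sleep times in seconds between attempts; no sleep after the last one."""
    return [2 ** attempt for attempt in range(retries - 1)]


def classify(failed: int, total: int) -> str:
    """Overall status: healthy, degraded (under half failed), or unhealthy."""
    if failed == 0:
        return "healthy"
    if failed < total / 2:
        return "degraded"
    return "unhealthy"


print(backoff_delays(3))  # [1, 2]
print(classify(0, 2))     # healthy
print(classify(1, 2))     # unhealthy
print(classify(1, 3))     # degraded
```

With only two health checks configured, a single failure already counts as `unhealthy`, because 1 is not less than half of 2. The `degraded` branch only becomes reachable with three or more checks.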

    # scripts/auto_recovery.py - automatic failure recovery script
    import time
    import logging
    import requests
    import subprocess
    from typing import Dict, List
    from dataclasses import dataclass
    from enum import Enum


    class HealthStatus(Enum):
        HEALTHY = "healthy"
        DEGRADED = "degraded"
        UNHEALTHY = "unhealthy"


    @dataclass
    class HealthCheck:
        name: str
        url: str
        timeout: int = 5
        retries: int = 3
        expected_status: int = 200


    class AutoRecoveryManager:
        def __init__(self, config: Dict):
            self.config = config
            self.logger = self._setup_logging()
            self.health_checks = self._load_health_checks()
            self.recovery_actions = self._load_recovery_actions()

        def _setup_logging(self) -> logging.Logger:
            logging.basicConfig(
                level=logging.INFO,
                format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'
            )
            return logging.getLogger('auto_recovery')

        def _load_health_checks(self) -> List[HealthCheck]:
            checks = []
            for check_config in self.config.get('health_checks', []):
                checks.append(HealthCheck(**check_config))
            return checks

        def _load_recovery_actions(self) -> Dict:
            return self.config.get('recovery_actions', {})

        def check_health(self, check: HealthCheck) -> bool:
            """Run a single health check with retries."""
            for attempt in range(check.retries):
                try:
                    response = requests.get(check.url, timeout=check.timeout)
                    if response.status_code == check.expected_status:
                        return True
                except requests.RequestException as e:
                    self.logger.warning(
                        f"Health check failed {check.name} "
                        f"(attempt {attempt + 1}/{check.retries}): {e}"
                    )
                if attempt < check.retries - 1:
                    time.sleep(2 ** attempt)  # exponential backoff
            return False

        def get_system_health(self) -> HealthStatus:
            """Return the overall system health."""
            failed_checks = 0
            total_checks = len(self.health_checks)
            for check in self.health_checks:
                if not self.check_health(check):
                    failed_checks += 1
                    self.logger.error(f"Health check failed: {check.name}")

            if failed_checks == 0:
                return HealthStatus.HEALTHY
            elif failed_checks < total_checks / 2:
                return HealthStatus.DEGRADED
            else:
                return HealthStatus.UNHEALTHY

        def execute_recovery_action(self, action_name: str) -> bool:
            """Run one recovery action."""
            action = self.recovery_actions.get(action_name)
            if not action:
                self.logger.error(f"Recovery action not found: {action_name}")
                return False

            try:
                self.logger.info(f"Running recovery action: {action_name}")
                if action['type'] == 'kubectl':
                    result = subprocess.run(
                        action['command'].split(),
                        capture_output=True,
                        text=True,
                        timeout=action.get('timeout', 60)
                    )
                    if result.returncode == 0:
                        self.logger.info(f"Recovery action succeeded: {action_name}")
                        return True
                    else:
                        self.logger.error(f"Recovery action failed: {result.stderr}")
                        return False
                elif action['type'] == 'http':
                    response = requests.post(
                        action['url'],
                        json=action.get('payload', {}),
                        timeout=action.get('timeout', 30)
                    )
                    if response.status_code in [200, 201, 202]:
                        self.logger.info(f"Recovery action succeeded: {action_name}")
                        return True
                    else:
                        self.logger.error(f"Recovery action failed: HTTP {response.status_code}")
                        return False
            except Exception as e:
                self.logger.error(f"Exception while running recovery action: {e}")
            return False

        def run_recovery_cycle(self):
            """Run one recovery cycle."""
            health_status = self.get_system_health()
            self.logger.info(f"System health: {health_status.value}")
            if health_status == HealthStatus.HEALTHY:
                return

            # Choose recovery actions based on the health status
            if health_status == HealthStatus.DEGRADED:
                recovery_actions = ['restart_unhealthy_pods', 'clear_cache']
            else:  # UNHEALTHY
                recovery_actions = ['restart_deployment', 'scale_up', 'notify_oncall']

            for action in recovery_actions:
                if self.execute_recovery_action(action):
                    # Give the action time to take effect
                    time.sleep(30)
                    # Re-check health
                    if self.get_system_health() == HealthStatus.HEALTHY:
                        self.logger.info("System is healthy again")
                        return

            self.logger.warning("Automatic recovery finished, but the system has not fully recovered")

        def start_monitoring(self, interval: int = 60):
            """Monitor continuously."""
            self.logger.info(f"Starting auto-recovery monitoring, interval: {interval}s")
            while True:
                try:
                    self.run_recovery_cycle()
                    time.sleep(interval)
                except KeyboardInterrupt:
                    self.logger.info("Monitoring stopped")
                    break
                except Exception as e:
                    self.logger.error(f"Exception during monitoring: {e}")
                    time.sleep(interval)


    # Example configuration
    config = {
        "health_checks": [
            {
                "name": "mcp_server_health",
                "url": "http://mcp-server-service/health",
                "timeout": 5,
                "retries": 3
            },
            {
                "name": "mcp_server_ready",
                "url": "http://mcp-server-service/ready",
                "timeout": 5,
                "retries": 2
            }
        ],
        "recovery_actions": {
            "restart_unhealthy_pods": {
                "type": "kubectl",
                # Pods carry no "status=unhealthy" label by default, so select
                # failed pods by phase instead
                "command": "kubectl delete pods -l app=mcp-server --field-selector=status.phase=Failed -n mcp-production",
                "timeout": 60
            },
            "restart_deployment": {
                "type": "kubectl",
                "command": "kubectl rollout restart deployment/mcp-server -n mcp-production",
                "timeout": 120
            },
            "scale_up": {
                "type": "kubectl",
                "command": "kubectl scale deployment/mcp-server --replicas=5 -n mcp-production",
                "timeout": 60
            },
            "clear_cache": {
                "type": "http",
                "url": "http://mcp-server-service/admin/cache/clear",
                "timeout": 30
            },
            "notify_oncall": {
                "type": "http",
                "url": "https://alerts.company.com/webhook",
                "payload": {
                    "severity": "critical",
                    "message": "MCP server automatic recovery failed, manual intervention required"
                },
                "timeout": 10
            }
        }
    }

    if __name__ == "__main__":
        manager = AutoRecoveryManager(config)
        manager.start_monitoring()

5.3 Performance Tuning and Capacity Planning

5.3.1 Resource Usage Analysis
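The capacity-planning script in this section fills `fetch_metrics` with simulated data and notes that a real implementation should call the Prometheus API. As a rough sketch of what that call could look like, the snippet below builds a request to Prometheus' `/api/v1/query_range` HTTP endpoint and flattens the first returned series. The helper names (`build_query_range_url`, `parse_range_response`, `fetch_series`) and the 5-minute step are assumptions for illustration, not part of the original script.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timezone
from typing import List, Tuple


def build_query_range_url(base_url: str, query: str, start: datetime,
                          end: datetime, step: str = "5m") -> str:
    """Build the URL for a Prometheus range query (hypothetical helper)."""
    params = urllib.parse.urlencode({
        "query": query,
        "start": start.timestamp(),  # Unix timestamps are accepted by the API
        "end": end.timestamp(),
        "step": step,
    })
    return f"{base_url.rstrip('/')}/api/v1/query_range?{params}"


def parse_range_response(payload: dict) -> List[Tuple[datetime, float]]:
    """Flatten the first series of a matrix result into (timestamp, value) pairs."""
    if payload.get("status") != "success":
        raise ValueError(f"Prometheus query failed: {payload.get('error')}")
    result = payload["data"]["result"]
    if not result:
        return []
    # Each sample is [unix_timestamp, "value-as-string"]
    return [
        (datetime.fromtimestamp(float(ts), tz=timezone.utc), float(value))
        for ts, value in result[0]["values"]
    ]


def fetch_series(base_url: str, query: str, start: datetime,
                 end: datetime, step: str = "5m") -> List[Tuple[datetime, float]]:
    """Run the range query over HTTP and return the parsed samples."""
    url = build_query_range_url(base_url, query, start, end, step)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return parse_range_response(json.load(resp))
```

Inside `fetch_metrics`, the returned pairs could be wrapped as `pd.DataFrame(points, columns=['timestamp', 'value'])` so the rest of the analysis keeps working unchanged. Only the first series is used here; a query that returns one series per pod would need to be aggregated first, for example with `avg(...)` in the PromQL expression.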

# scripts/capacity_planning.py - capacity planning analysis
from datetime import datetime, timedelta

import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression


class CapacityPlanner:
    def __init__(self, prometheus_url: str):
        self.prometheus_url = prometheus_url
        self.metrics_data = {}

    def fetch_metrics(self, query: str, start_time: datetime, end_time: datetime) -> pd.DataFrame:
        """Fetch metric data from Prometheus."""
        # Simplified here: a real implementation should call the Prometheus API.
        # Generate simulated data instead.
        time_range = pd.date_range(start_time, end_time, freq='5min')
        data = {
            'timestamp': time_range,
            'value': np.random.normal(50, 10, len(time_range))  # simulated CPU utilization
        }
        return pd.DataFrame(data)

    def analyze_resource_trends(self, days: int = 30) -> dict:
        """Analyze resource usage trends."""
        end_time = datetime.now()
        start_time = end_time - timedelta(days=days)

        # Fetch each metric
        cpu_data = self.fetch_metrics('mcp_cpu_usage', start_time, end_time)
        memory_data = self.fetch_metrics('mcp_memory_usage', start_time, end_time)
        request_data = self.fetch_metrics('mcp_requests_rate', start_time, end_time)

        # Trend analysis
        trends = {}
        for name, data in [('cpu', cpu_data), ('memory', memory_data), ('requests', request_data)]:
            X = np.arange(len(data)).reshape(-1, 1)
            y = data['value'].values

            # Linear regression over the sample index
            model = LinearRegression()
            model.fit(X, y)

            # Predict the next 30 days: 30 days * 288 five-minute samples per day = 8640
            future_X = np.arange(len(data), len(data) + 8640).reshape(-1, 1)
            future_y = model.predict(future_X)

            recent_avg = np.mean(y[-288:])  # average over the last 24 hours
            trends[name] = {
                'current_avg': recent_avg,
                'trend_slope': model.coef_[0],
                'predicted_30d': future_y[-1],
                'growth_rate': (future_y[-1] - recent_avg) / recent_avg * 100
            }
        return trends

    def calculate_capacity_requirements(self, target_growth: float = 50) -> dict:
        """Calculate capacity requirements for a target growth percentage."""
        trends = self.analyze_resource_trends()
        recommendations = {}

        # CPU capacity planning
        current_cpu = trends['cpu']['current_avg']
        predicted_cpu = current_cpu * (1 + target_growth / 100)
        if predicted_cpu > 70:  # CPU utilization threshold
            cpu_scale_factor = predicted_cpu / 70
            recommendations['cpu'] = {
                'action': 'scale_up',
                'current_usage': f"{current_cpu:.1f}%",
                'predicted_usage': f"{predicted_cpu:.1f}%",
                'recommended_scale': f"{cpu_scale_factor:.1f}x",
                'new_replicas': int(np.ceil(3 * cpu_scale_factor))  # currently 3 replicas
            }
        else:
            recommendations['cpu'] = {
                'action': 'maintain',
                'current_usage': f"{current_cpu:.1f}%",
                'predicted_usage': f"{predicted_cpu:.1f}%"
            }

        # Memory capacity planning
        current_memory = trends['memory']['current_avg']
        predicted_memory = current_memory * (1 + target_growth / 100)
        if predicted_memory > 80:  # memory utilization threshold
            memory_scale_factor = predicted_memory / 80
            recommendations['memory'] = {
                'action': 'increase_limits',
                'current_usage': f"{current_memory:.1f}%",
                'predicted_usage': f"{predicted_memory:.1f}%",
                # Currently 2Gi; round up so the new limit is never below the requirement
                'recommended_memory': f"{int(np.ceil(2 * memory_scale_factor))}Gi"
            }
        else:
            recommendations['memory'] = {
                'action': 'maintain',
                'current_usage': f"{current_memory:.1f}%",
                'predicted_usage': f"{predicted_memory:.1f}%"
            }

        # Request throughput capacity planning
        current_rps = trends['requests']['current_avg']
        predicted_rps = current_rps * (1 + target_growth / 100)
        if predicted_rps > 1000:  # RPS threshold
            rps_scale_factor = predicted_rps / 1000
            recommendations['throughput'] = {
                'action': 'scale_out',
                'current_rps': f"{current_rps:.0f}",
                'predicted_rps': f"{predicted_rps:.0f}",
                'recommended_replicas': int(np.ceil(3 * rps_scale_factor))
            }
        else:
            recommendations['throughput'] = {
                'action': 'maintain',
                'current_rps': f"{current_rps:.0f}",
                'predicted_rps': f"{predicted_rps:.0f}"
            }
        return recommendations

    def generate_capacity_report(self) -> str:
        """Generate the capacity planning report (Markdown)."""
        recommendations = self.calculate_capacity_requirements()
        report = f"""
# MCP Server Capacity Planning Report

Generated at: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}

## Current Resource Usage

### CPU Utilization
- Current average utilization: {recommendations['cpu']['current_usage']}
- Predicted utilization: {recommendations['cpu']['predicted_usage']}
- Recommended action: {recommendations['cpu']['action']}

### Memory Utilization
- Current average utilization: {recommendations['memory']['current_usage']}
- Predicted utilization: {recommendations['memory']['predicted_usage']}
- Recommended action: {recommendations['memory']['action']}

### Request Throughput
- Current average RPS: {recommendations['throughput']['current_rps']}
- Predicted RPS: {recommendations['throughput']['predicted_rps']}
- Recommended action: {recommendations['throughput']['action']}

## Scaling Recommendations

"""
        if recommendations['cpu']['action'] == 'scale_up':
            report += f"- **CPU scaling**: scale out to {recommendations['cpu']['new_replicas']} replicas\n"
        if recommendations['memory']['action'] == 'increase_limits':
            report += f"- **Memory scaling**: raise the memory limit to {recommendations['memory']['recommended_memory']}\n"
        if recommendations['throughput']['action'] == 'scale_out':
            report += f"- **Throughput scaling**: scale out to {recommendations['throughput']['recommended_replicas']} replicas\n"

        report += """
## Implementation Recommendations

1. **Monitoring and alerting**: set alert thresholds on resource utilization
2. **Autoscaling**: configure an HPA (Horizontal Pod Autoscaler)
3. **Regular review**: run a capacity planning review once a month
4. **Cost optimization**: scale down during off-peak hours to save cost

## Risk Assessment

- **High risk**: CPU/memory utilization above 80%
- **Medium risk**: request response time above 500ms
- **Low risk**: resource utilization within the normal range
"""
        return report


# Usage example
if __name__ == "__main__":
    planner = CapacityPlanner("http://prometheus:9090")
    report = planner.generate_capacity_report()
    print(report)

    # Save the report
    with open(f"capacity_report_{datetime.now().strftime('%Y%m%d')}.md", "w", encoding="utf-8") as f:
        f.write(report)

6. Security Hardening and Compliance

6.1 Security Scanning and Vulnerability Management

# .github/workflows/security-scan.yml
name: Security Scan

on:
  push:
    branches: [ main, develop ]
  pull_request:
    branches: [ main ]
  schedule:
    - cron: '0 2 * * 1'  # every Monday at 02:00 (GitHub Actions schedules run in UTC)

# Uploading SARIF results to code scanning requires this permission
permissions:
  contents: read
  security-events: write

jobs:
  dependency-scan:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Run Snyk to check for vulnerabilities
        uses: snyk/actions/node@master
        continue-on-error: true  # let the SARIF upload run even when issues are found
        env:
          SNYK_TOKEN: ${{ secrets.SNYK_TOKEN }}
        with:
          # Write the SARIF file that the next step uploads
          args: --severity-threshold=high --sarif-file-output=snyk.sarif
      - name: Upload result to GitHub Code Scanning
        uses: github/codeql-action/upload-sarif@v3
        with:
          sarif_file: snyk.sarif

  container-scan:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build Docker image
        run: docker build -t mcp-server:scan .
      - name: Run Trivy vulnerability scanner
        uses: aquasecurity/trivy-action@master
        with:
          image-ref: 'mcp-server:scan'
          format: 'sarif'
          output: 'trivy-results.sarif'
      - name: Upload Trivy scan results
        uses: github/codeql-action/upload-sarif@v3
        with:
          sarif_file: 'trivy-results.sarif'

  code-scan:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Initialize CodeQL
        uses: github/codeql-action/init@v3
        with:
          languages: javascript
      - name: Autobuild
        uses: github/codeql-action/autobuild@v3
      - name: Perform CodeQL Analysis
        uses: github/codeql-action/analyze@v3

6.2 Network Security Policies

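# k8s/network-policy.yaml
# Note: the namespaceSelector rules below match on a `name` label,
# so each referenced namespace must actually carry that label.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: mcp-server-network-policy
  namespace: mcp-production
spec:
  podSelector:
    matchLabels:
      app: mcp-server
  policyTypes:
    - Ingress
    - Egress
  ingress:
    # Allow traffic from the API gateway
    - from:
        - namespaceSelector:
            matchLabels:
              name: api-gateway
      ports:
        - protocol: TCP
          port: 8080
    # Allow traffic from the monitoring system
    - from:
        - namespaceSelector:
            matchLabels:
              name: monitoring
      ports:
        - protocol: TCP
          port: 8080
  egress:
    # Allow access to the database
    - to:
        - namespaceSelector:
            matchLabels:
              name: database
      ports:
        - protocol: TCP
          port: 5432
    # Allow access to Redis
    - to:
        - namespaceSelector:
            matchLabels:
              name: cache
      ports:
        - protocol: TCP
          port: 6379
    # Allow DNS lookups (DNS can fall back to TCP for large responses)
    - to: []
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
    # Allow outbound HTTPS traffic
    - to: []
      ports:
        - protocol: TCP
          port: 443
---
# Pod security policy
# Note: PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25.
# This manifest only applies to older clusters; on newer ones, use Pod Security
# Admission or a policy engine to enforce equivalent restrictions.
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: mcp-server-psp
spec:
  privileged: false
  allowPrivilegeEscalation: false
  requiredDropCapabilities:
    - ALL
  volumes:
    - 'configMap'
    - 'emptyDir'
    - 'projected'
    - 'secret'
    - 'downwardAPI'
    - 'persistentVolumeClaim'
  runAsUser:
    rule: 'MustRunAsNonRoot'
  seLinux:
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'RunAsAny'
  fsGroup:
    rule: 'RunAsAny'

7. Cost Optimization and Resource Management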

7.1 Resource Quota Management
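Before applying the quota, it helps to check how many default-sized pods it actually admits. The sketch below is a back-of-the-envelope calculation using the quota and LimitRange values from the manifest in this section. The helper names (`parse_cpu`, `parse_memory`, `pod_capacity`) are hypothetical, only the binary memory suffixes are handled, and every pod is assumed to run a single container with the LimitRange defaults.

```python
from decimal import Decimal

_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity such as "500m" or "2" to cores."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as "1Gi" to bytes (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-len(suffix)]) * factor)
    return int(quantity)  # plain bytes


def pod_capacity(quota: dict, per_pod: dict) -> dict:
    """Return how many pods each quota dimension admits."""
    return {
        "requests.cpu": int(parse_cpu(quota["requests.cpu"]) // parse_cpu(per_pod["request_cpu"])),
        "requests.memory": parse_memory(quota["requests.memory"]) // parse_memory(per_pod["request_memory"]),
        "limits.cpu": int(parse_cpu(quota["limits.cpu"]) // parse_cpu(per_pod["limit_cpu"])),
        "limits.memory": parse_memory(quota["limits.memory"]) // parse_memory(per_pod["limit_memory"]),
        "pods": int(quota["pods"]),
    }


# Values copied from the ResourceQuota and the LimitRange defaults in this section
QUOTA = {"requests.cpu": "10", "requests.memory": "20Gi",
         "limits.cpu": "20", "limits.memory": "40Gi", "pods": "20"}
DEFAULT_POD = {"request_cpu": "500m", "request_memory": "1Gi",
               "limit_cpu": "1000m", "limit_memory": "2Gi"}

capacity = pod_capacity(QUOTA, DEFAULT_POD)
max_pods = min(capacity.values())
print(capacity, "->", max_pods, "pods")
```

With these numbers every dimension admits the same 20 pods, so no single resource is the bottleneck and the HPA's `maxReplicas: 10` fits comfortably. Pods sized at the LimitRange maximum (2000m CPU, 4Gi memory) would hit the limits quota much sooner.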

# k8s/resource-quota.yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mcp-production-quota
  namespace: mcp-production
spec:
  hard:
    requests.cpu: "10"
    requests.memory: 20Gi
    limits.cpu: "20"
    limits.memory: 40Gi
    persistentvolumeclaims: "10"
    pods: "20"
    services: "10"
    secrets: "20"
    configmaps: "20"
---
apiVersion: v1
kind: LimitRange
metadata:
  name: mcp-production-limits
  namespace: mcp-production
spec:
  limits:
    - default:
        cpu: "1000m"
        memory: "2Gi"
      defaultRequest:
        cpu: "500m"
        memory: "1Gi"
      type: Container
    - max:
        cpu: "2000m"
        memory: "4Gi"
      min:
        cpu: "100m"
        memory: "128Mi"
      type: Container

7.2 Autoscaling Configuration
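The HPA manifest in this section targets 70% CPU, 80% memory and 100 requests per second per pod. As a rough sketch of how such targets turn into a replica count, the snippet below applies the scaling formula documented for the Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetricValue / targetMetricValue)`, takes the largest proposal across metrics, and clamps it to the min/max bounds. The function names and sample readings are assumptions for illustration, and the sketch deliberately ignores `behavior` policies, stabilization windows, and unready pods.

```python
import math
from typing import Dict, Tuple


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """Replica count proposed by a single metric."""
    ratio = current_value / target_value
    # Within the tolerance band (10% by default) the HPA leaves the count alone
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def recommend(current_replicas: int, metrics: Dict[str, Tuple[float, float]],
              min_replicas: int = 2, max_replicas: int = 10) -> int:
    """Take the largest per-metric proposal and clamp it to the HPA bounds."""
    proposals = [
        desired_replicas(current_replicas, current, target)
        for current, target in metrics.values()
    ]
    return max(min_replicas, min(max_replicas, max(proposals)))


# Hypothetical readings: (current average, target) for each metric
readings = {
    "cpu": (90.0, 70.0),                        # proposes ceil(3 * 90/70)   = 4
    "memory": (60.0, 80.0),                     # proposes ceil(3 * 60/80)   = 3
    "mcp_requests_per_second": (250.0, 100.0),  # proposes ceil(3 * 250/100) = 8
}
print(recommend(current_replicas=3, metrics=readings))  # prints 8
```

Because the largest proposal wins, a single hot metric (here the request rate) drives the scale-out even while memory would allow the replica count to stay put.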

# k8s/hpa.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: mcp-server-hpa
  namespace: mcp-production
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: mcp-server
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
    # Pods metrics require a custom metrics API adapter in the cluster
    - type: Pods
      pods:
        metric:
          name: mcp_requests_per_second
        target:
          type: AverageValue
          averageValue: "100"
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 300
      policies:
        - type: Percent
          value: 50
          periodSeconds: 60
    scaleUp:
      stabilizationWindowSeconds: 60
      policies:
        - type: Percent
          value: 100
          periodSeconds: 60
        - type: Pods
          value: 2
          periodSeconds: 60
---
# Vertical autoscaling configuration
# Note: VerticalPodAutoscaler is a custom resource and must be installed separately.
# Running it in "Auto" mode next to an HPA that scales the same workload on CPU or
# memory can make the two controllers work against each other; consider
# updateMode "Off" (recommendations only) when both are deployed.
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: mcp-server-vpa
  namespace: mcp-production
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: mcp-server
  updatePolicy:
    updateMode: "Auto"
  resourcePolicy:
    containerPolicies:
      - containerName: mcp-server
        maxAllowed:
          cpu: "2"
          memory: "4Gi"
        minAllowed:
          cpu: "100m"
          memory: "128Mi"

Summary

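As the blogger 摘星, after studying and practicing the full DevOps workflow for enterprise MCP deployment, I have come to see it as more than a technical rollout: it is a systematic exercise in engineering management. MCP is becoming core infrastructure for AI applications, and the quality of its deployment directly bounds how far an enterprise's AI capabilities can go and how quickly the business can innovate.

In my project experience, a successful enterprise MCP deployment depends on careful planning and execution across architecture design, containerization, CI/CD pipelines, and monitoring and operations. The development-to-production workflow described in this article covers each of those stages and shows how modern DevOps practice applies to AI infrastructure. With standardized container deployments, automated CI/CD pipelines, comprehensive monitoring, and automated operations, we can build an MCP service platform that is both stable and flexible.

Security and compliance deserve particular emphasis in enterprise deployments: network isolation, data encryption, access control, and audit logging all need strict control. Cost optimization and resource management are equally practical concerns. Sensible resource quotas, autoscaling, and capacity planning let us maximize resource utilization without sacrificing service quality.

Looking ahead, as AI technology evolves and enterprises digitize further, MCP deployments will grow in both complexity and importance, which brings more challenges and more opportunities for engineers. I believe that continued technical innovation, process improvement, and accumulated experience will let us build smarter, more secure, and more efficient enterprise AI infrastructure to support digital transformation.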


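This article is an original work by the blogger 摘星, focusing on in-depth analysis of AI infrastructure and DevOps practice. For technical questions or collaboration, feel free to reach out in the comments or by private message.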
