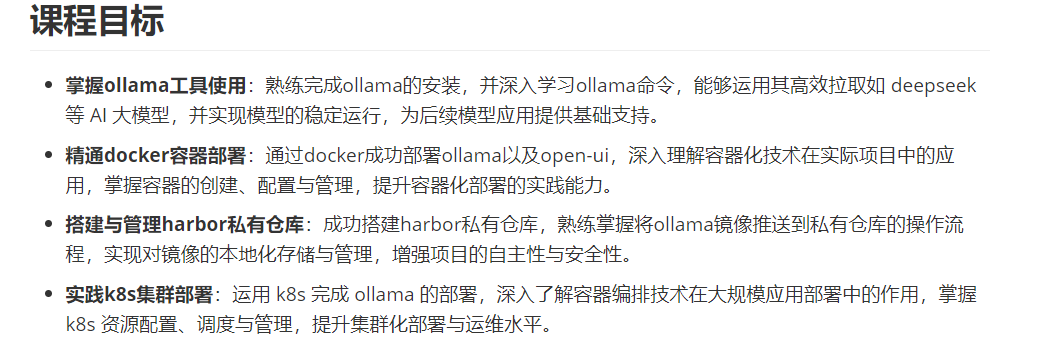

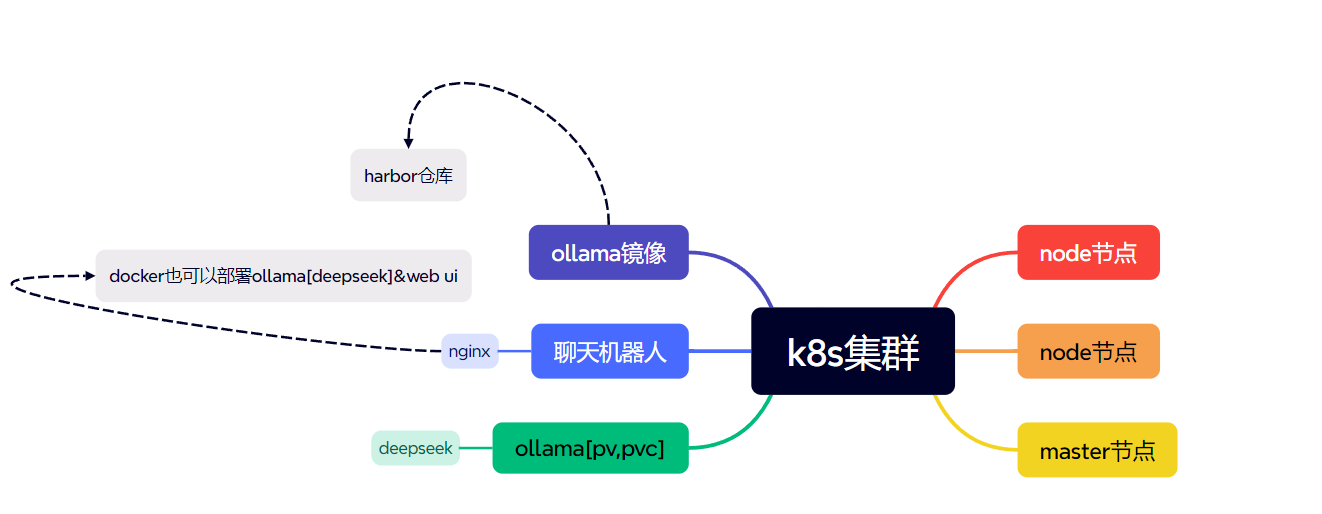

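Project 7. AI Large Model Deployment

Environment Preparation

- This host runs the Rocky Linux 8.9 operating system

- The k8s cluster consists of three machines running CentOS 7.9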

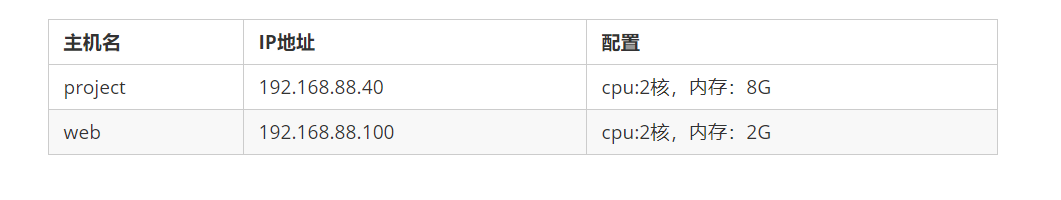

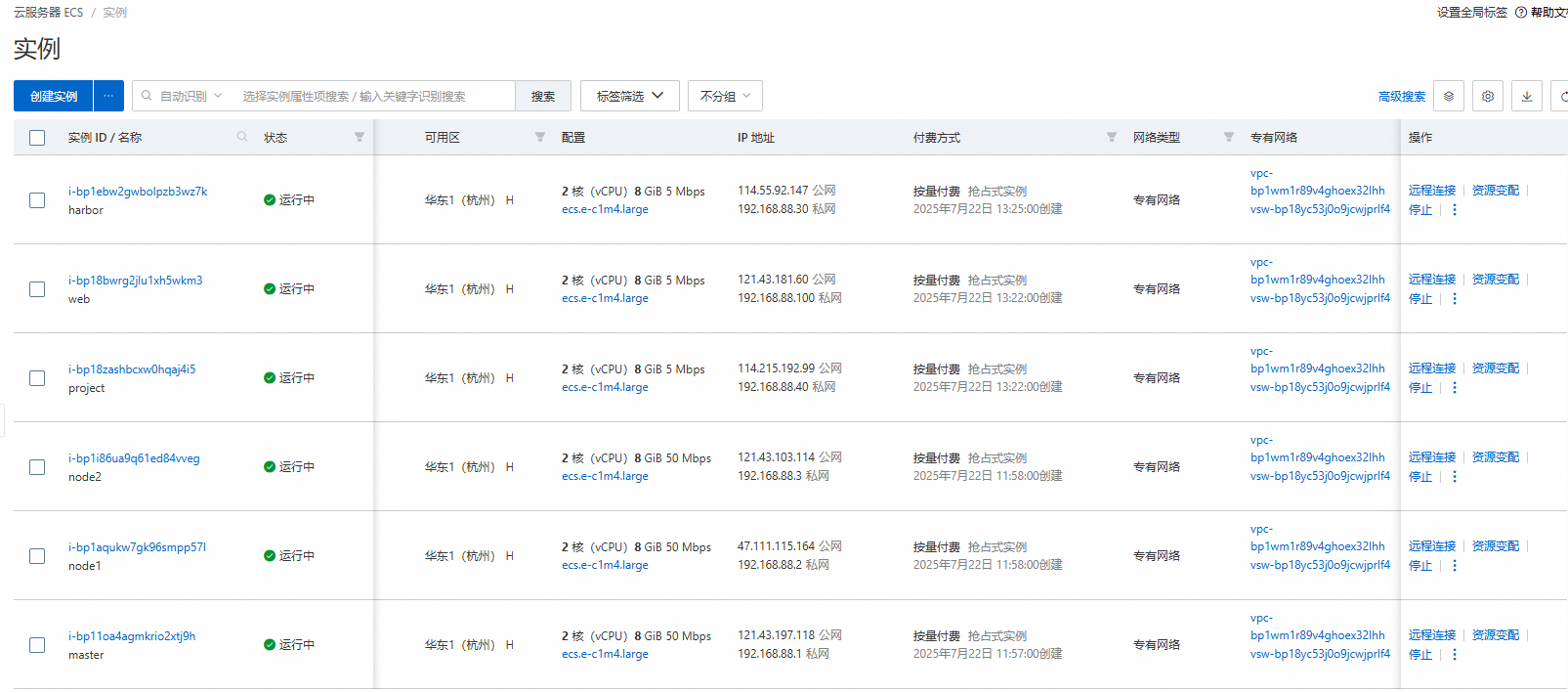

Device Configuration

##Upload the ollama tool archive

[root@project ~]# ll

total 1497732

-rw-r--r-- 1 root root 1533674176 Jul 21 11:27 ollama-linux-amd64.tgz

[root@project ~]# tar -C /usr -xzf ollama-linux-amd64.tgz

##Run ollama in the foreground

[root@project ~]# ollama start

[GIN] 2025/07/21 - 11:32:09 | 200 | 36.969µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/07/21 - 11:32:09 | 200 | 119.106µs | 127.0.0.1 | GET "/api/ps"

[GIN] 2025/07/21 - 11:32:14 | 200 | 33.533µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/07/21 - 11:32:14 | 200 | 181.97µs | 127.0.0.1 | GET "/api/tags"

^C

##Run in the background

[root@project ~]# ollama start &

[1] 1976

##Check the listening port

[root@project ~]# ss -antlup | grep ollama

tcp LISTEN 0 2048 127.0.0.1:11434 0.0.0.0:* users:(("ollama",pid=1800,fd=3))
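The `ss` output shows ollama bound to `127.0.0.1:11434`, so by default only the local host can reach it; other machines can connect only after it is restarted with `OLLAMA_HOST=0.0.0.0`. A quick reachability check can also be done from code. This is a minimal sketch using only the standard library, and `is_listening` is a hypothetical helper name.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Calling `is_listening("127.0.0.1", 11434)` on the ollama host should succeed while the service runs; the same call from another machine against the host's LAN address is expected to fail until ollama listens on all interfaces.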

##When ollama runs in the foreground, open another terminal window for the checks below

#List the downloaded model files

[root@project ~]# ollama ls

NAME ID SIZE MODIFIED

#List the models that are currently running

[root@project ~]# ollama ps

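NAME ID SIZE PROCESSOR UNTIL

##Ways to start ollama (`ollama start` and `ollama serve` are interchangeable)

[root@project ~]# ollama serve #run in the foreground

[root@project ~]# ollama serve & #run in the background

[root@project ~]# OLLAMA_HOST=0.0.0.0 ollama serve #run with a parameter: listen on all interfaces

##Deploy the DeepSeek model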

#Pull the model

[root@project ollama]# ollama pull deepseek-r1:1.5b

[GIN] 2025/07/21 - 13:18:53 | 200 | 26.127µs | 127.0.0.1 | HEAD "/"

pulling manifest

time=2025-07-21T13:18:56.050+08:00 level=INFO source=download.go:176 msg="downloading aabd4debf0c8 in 12 100 MB part(s)"

pulling manifest

pulling aabd4debf0c8... 100%

...

pulling manifest

pulling aabd4debf0c8... 100%

verifying sha256 digest

writing manifest

success

#Run the model

[root@project ~]# ollama run deepseek-r1:1.5b

...

[GIN] 2025/07/21 - 13:55:57 | 200 | 1.316049658s | 127.0.0.1 | POST "/api/generate"

>>> 你是谁? ##Test: chat with the model interactively (the prompt means "Who are you?")

<think></think>您好!我是由中国的深度求索(DeepSeek)公司开发的智能助手DeepSeek-R1。如您有任何任何问题,我会尽我所能为您提供帮助。

[GIN] 2025/07/21 - 13:56:21 | 200 | 6.750316892s | 127.0.0.1 | POST "/api/chat"

>>> Send a message (/? for help)

##Press Ctrl+D to exit the model prompt

##Check memory usage

[root@project ~]# free -h

total used free shared buff/cache available

Mem: 7.2Gi 260Mi 1.4Gi 1.0Mi 5.6Gi 6.7Gi

Swap: 0B 0B 0B

##List all models

[root@project ~]# ollama ls

NAME ID SIZE MODIFIED

deepseek-r1:1.5b e0979632db5a 1.1 GB 4 minutes ago

##List the running models

[root@project ~]# ollama ps

NAME ID SIZE PROCESSOR UNTIL

deepseek-r1:1.5b e0979632db5a 1.6 GB 100% CPU 4 minutes from now

##Show model information

[root@project ~]# ollama show deepseek-r1:1.5b

Model
  architecture qwen2
  parameters 1.8B
  context length 131072
  embedding length 1536
  quantization Q4_K_M

Parameters
  stop "<|begin▁of▁sentence|>"
  stop "<|end▁of▁sentence|>"
  stop "<|User|>"
  stop "<|Assistant|>"

License
  MIT License
  Copyright (c) 2023 DeepSeek

##Stop the ollama tool

[root@project ~]# killall ollama

##Check for and stop a running model

[root@project ~]# ollama ps

NAME ID SIZE PROCESSOR UNTIL

deepseek-r1:1.5b e0979632db5a 1.6 GB 100% CPU 4 minutes from now

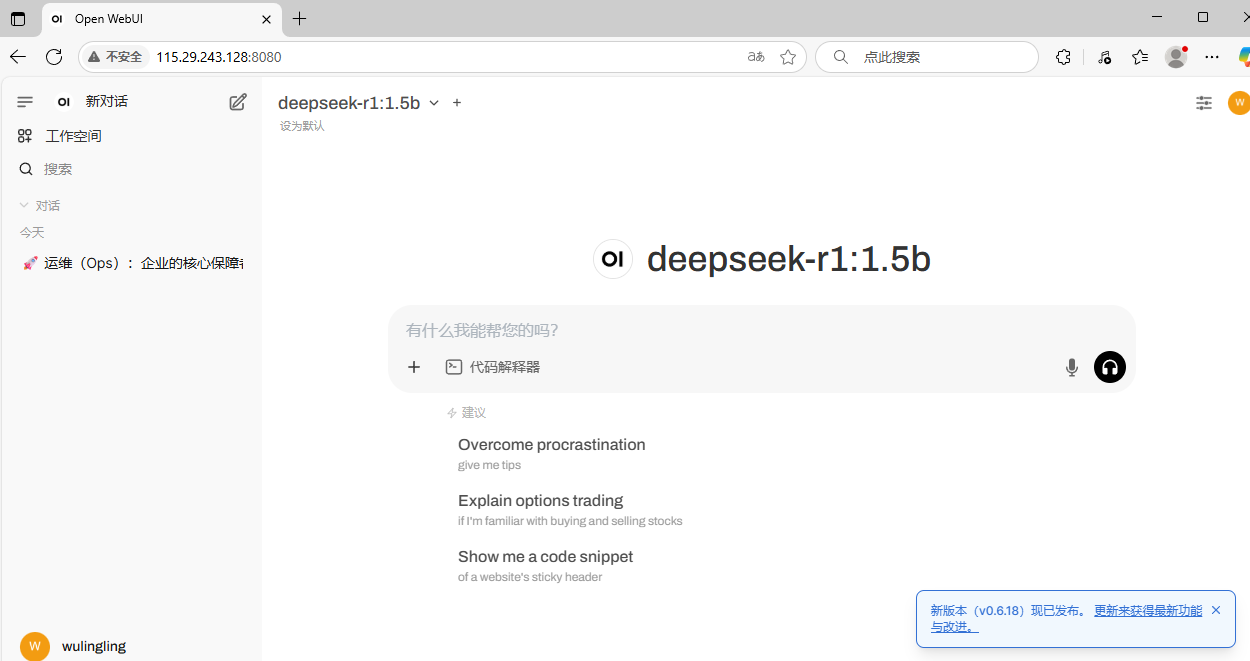

[root@project ~]# ollama stop deepseek-r1:1.5b

Open WebUI Deployment

- Publish a web front end for users

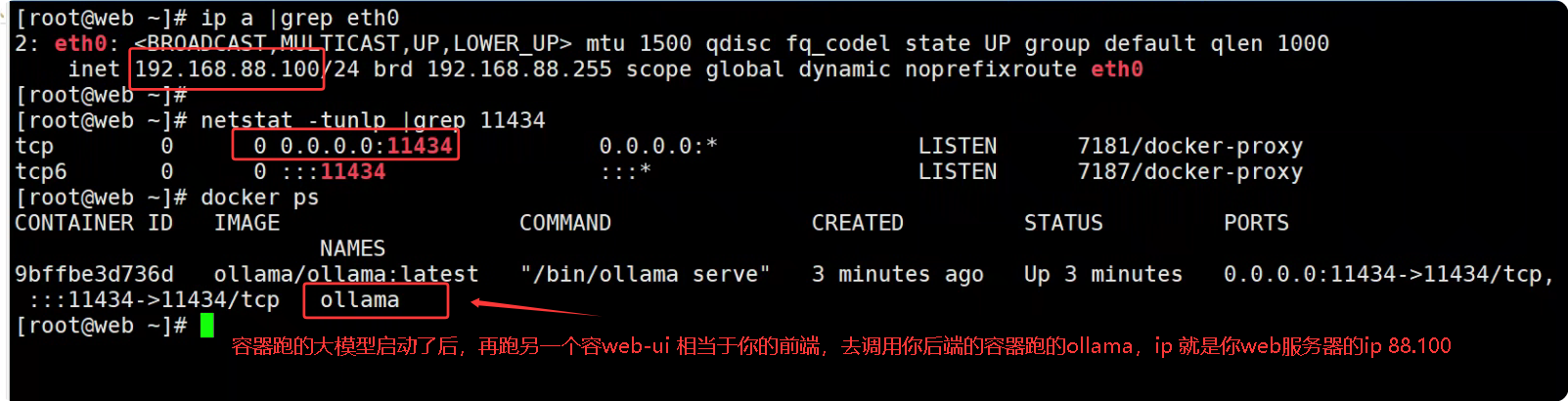

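Open WebUI calls the ollama model running in a container on the same machine (the IP is the web server's IP). Wait until DeepSeek is running in the container, then have Open WebUI call the ollama instance in that container. The project server could also act as the back end, in which case the Open WebUI container would call the model on the project server. Here ollama on the project host has been removed, so ollama runs in Docker instead and Open WebUI calls that instance.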

##Install docker-ce

[root@web ~]# yum install -y yum-utils

[root@web ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@web ~]# yum install docker-ce -y

[root@web ~]# systemctl enable docker --now

##Upload the image archives

[root@web ~]# ll

total 7817064

-rw-r--r-- 1 root root 3484484096 Jul 21 16:26 ollama.tar.gz

-rw-r--r-- 1 root root 4520183296 Jul 21 16:30 open-webui.tar.gz

##Load the image archives

[root@web ~]# docker load -i ollama.tar.gz

[root@web ~]# docker load -i open-webui.tar.gz

[root@web ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ollama/ollama latest 676364f65510 4 months ago 3.48GB

ghcr.io/open-webui/open-webui main ab78641aa87a 5 months ago 4.46GB

##Run the ollama container

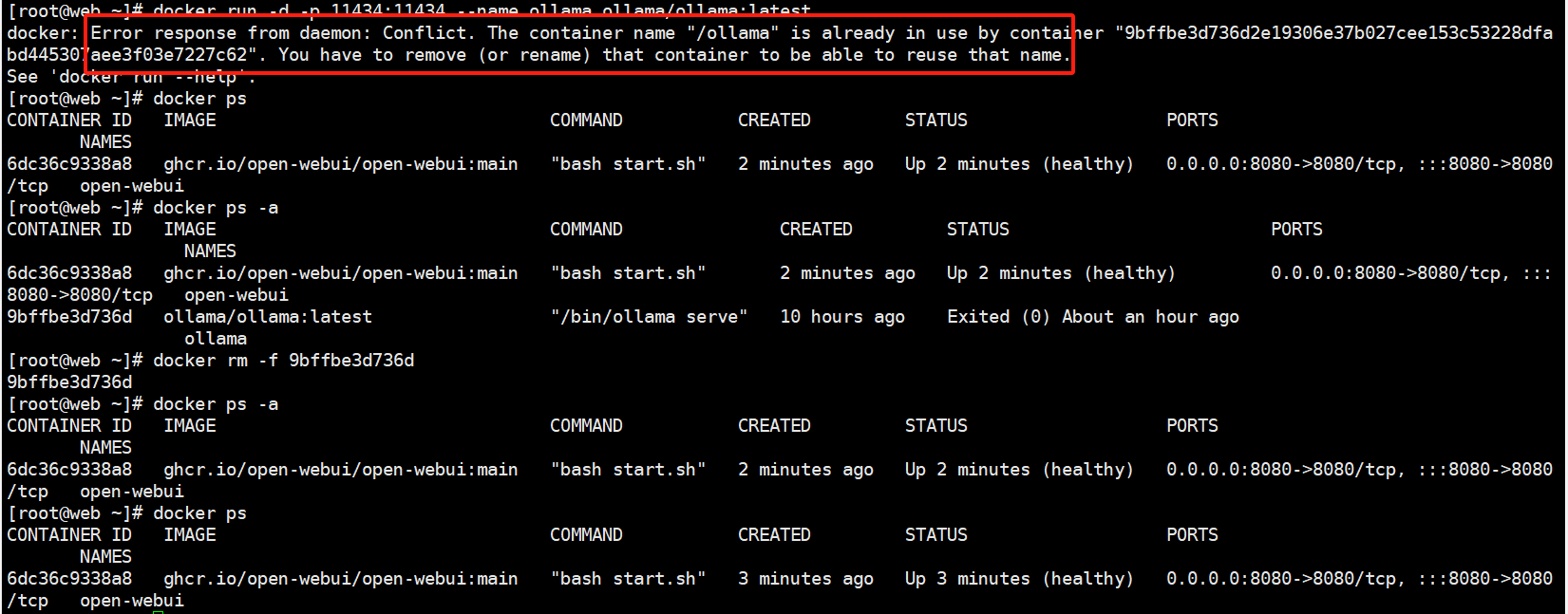

[root@web ~]# docker run -d -p 11434:11434 --name ollama ollama/ollama:latest

##First run the DeepSeek model inside the Ollama container

[root@web ~]# docker exec -it ollama ollama run deepseek-r1:1.5b

pulling manifest

pulling aabd4debf0c8... 100% ▕████████████████████████████████████████████████████▏ 1.1 GB

pulling c5ad996bda6e... 100% ▕████████████████████████████████████████████████████▏ 556 B

pulling 6e4c38e1172f... 100% ▕████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd... 100% ▕████████████████████████████████████████████████████▏ 148 B

pulling a85fe2a2e58e... 100% ▕████████████████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success

>>> 完全没事 ##test prompt, meaning "I'm totally fine"

<think></think>嗯,听起来你有点放松的心态哦!如果有什么需要帮助的,尽管告诉我哦~ 😊

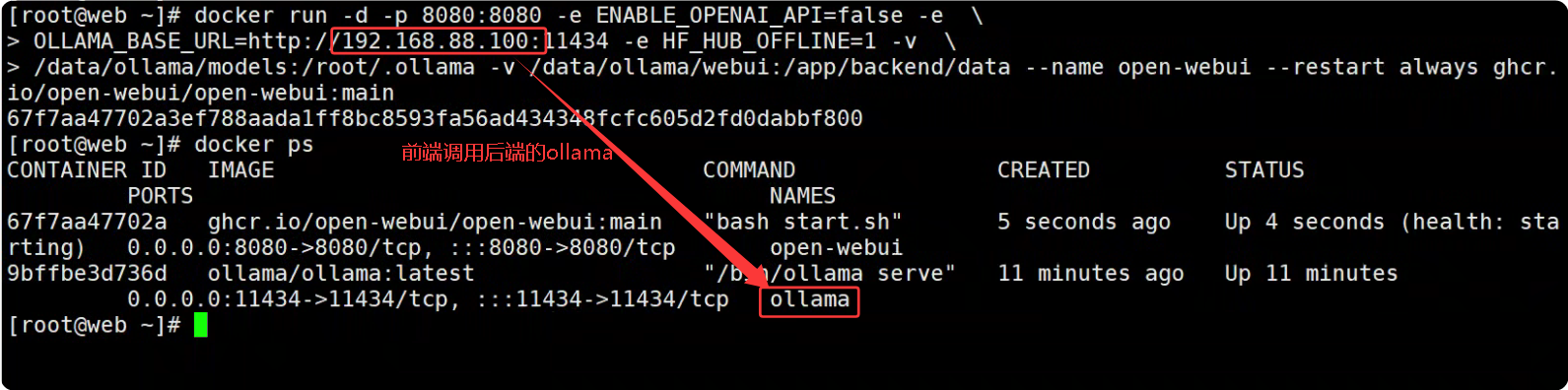

##Then run Open WebUI

[root@web ~]# ls /data/ollama/webui/

cache uploads vector_db webui.db

[root@web ~]# rm -rf /data/ollama/webui/*

[root@web ~]# ls /data/ollama/models/

[root@web ~]#

[root@web ~]# ip a |grep eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

inet 192.168.88.100/24 brd 192.168.88.255 scope global dynamic noprefixroute eth0

[root@web ~]#

[root@web ~]# docker run -d -p 8080:8080 -e ENABLE_OPENAI_API=false -e \

> OLLAMA_BASE_URL=http://192.168.88.100:11434 -e HF_HUB_OFFLINE=1 -v \

> /data/ollama/models:/root/.ollama -v /data/ollama/webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

67f7aa47702a3ef788aada1ff8bc8593fa56ad434348fcfc605d2fd0dabbf800

[root@web ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

67f7aa47702a ghcr.io/open-webui/open-webui:main "bash start.sh" 5 seconds ago Up 4 seconds (health: starting) 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp open-webui

9bffbe3d736d ollama/ollama:latest "/bin/ollama serve" 11 minutes ago Up 11 minutes 0.0.0.0:11434->11434/tcp, :::11434->11434/tcp ollama

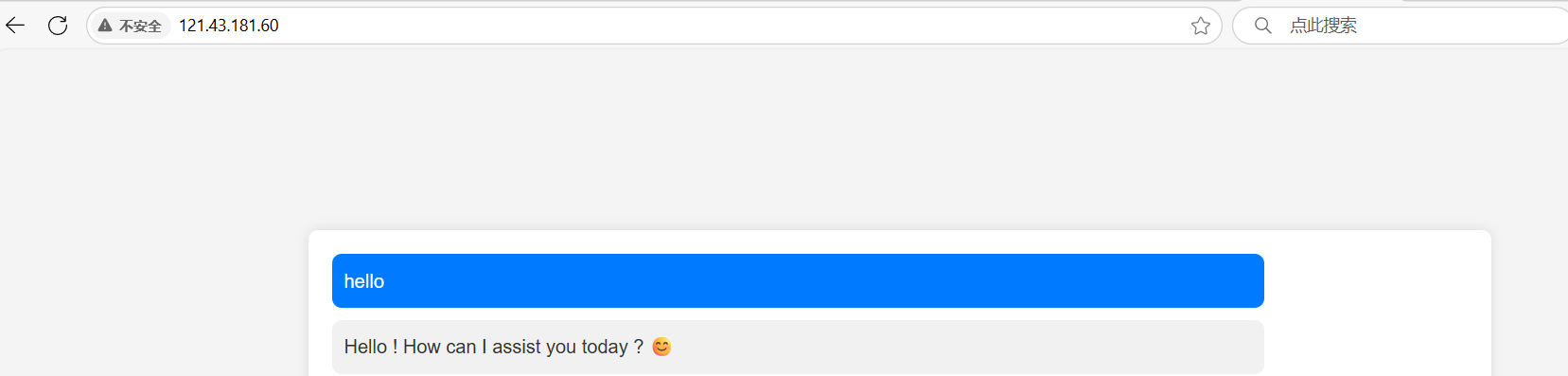

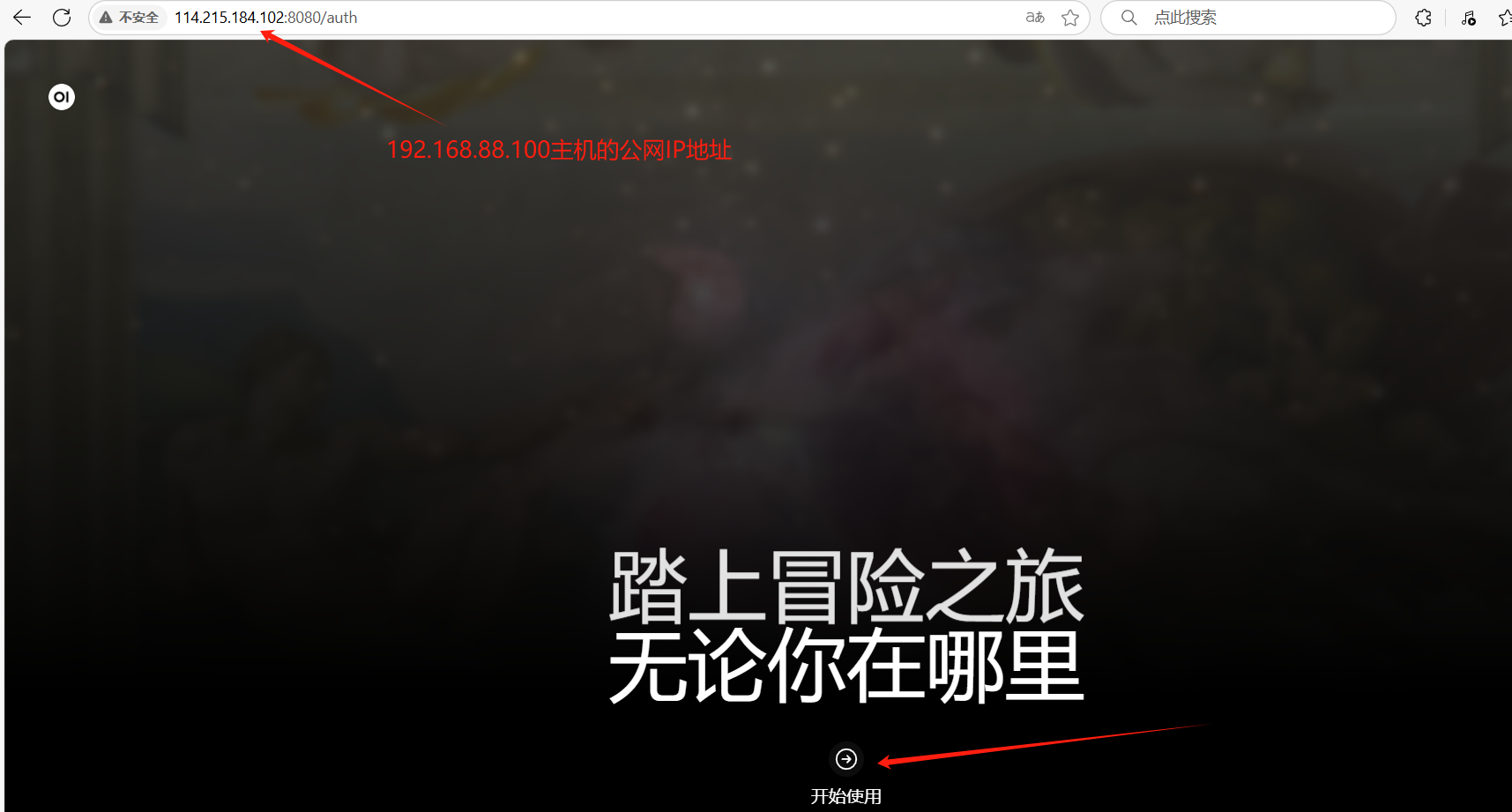

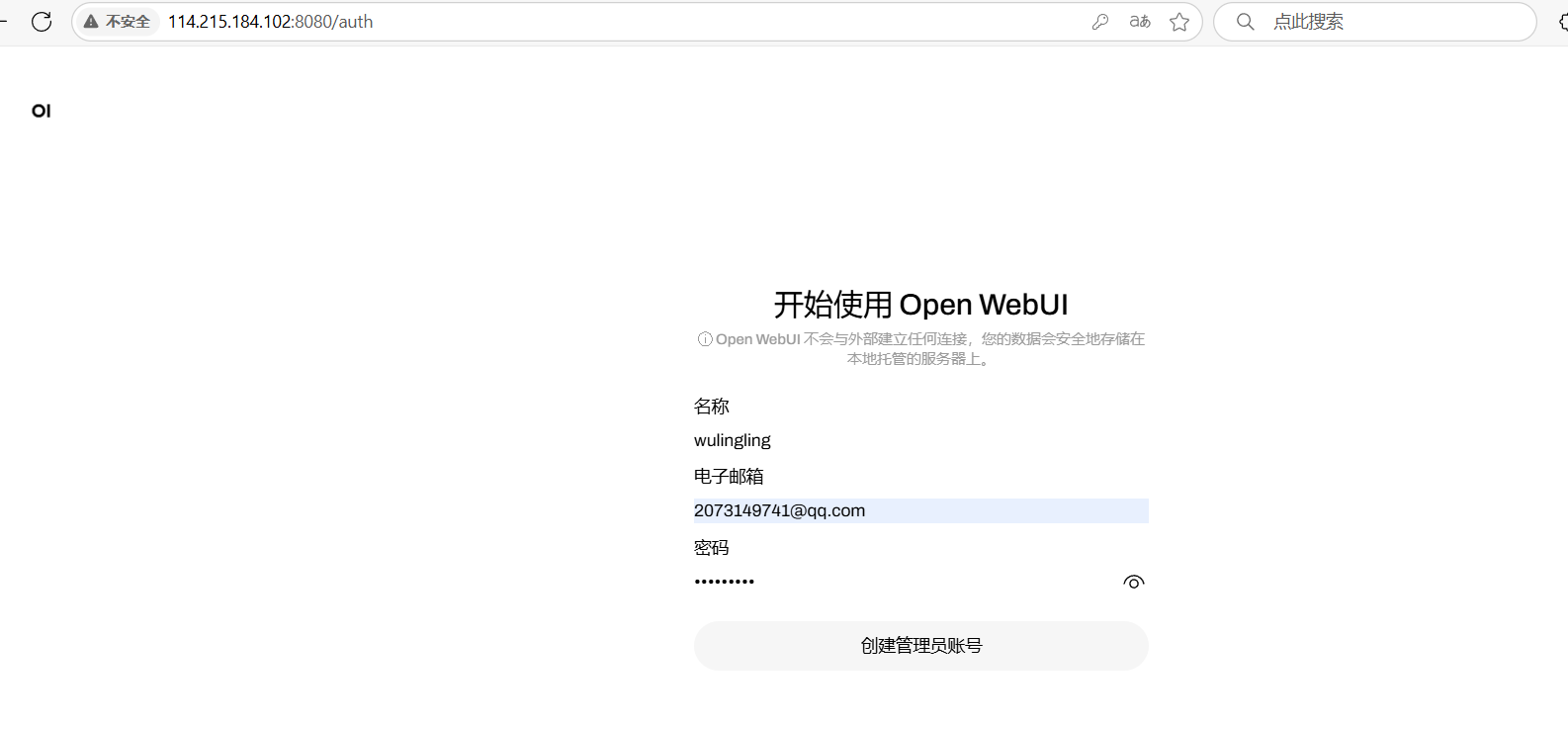

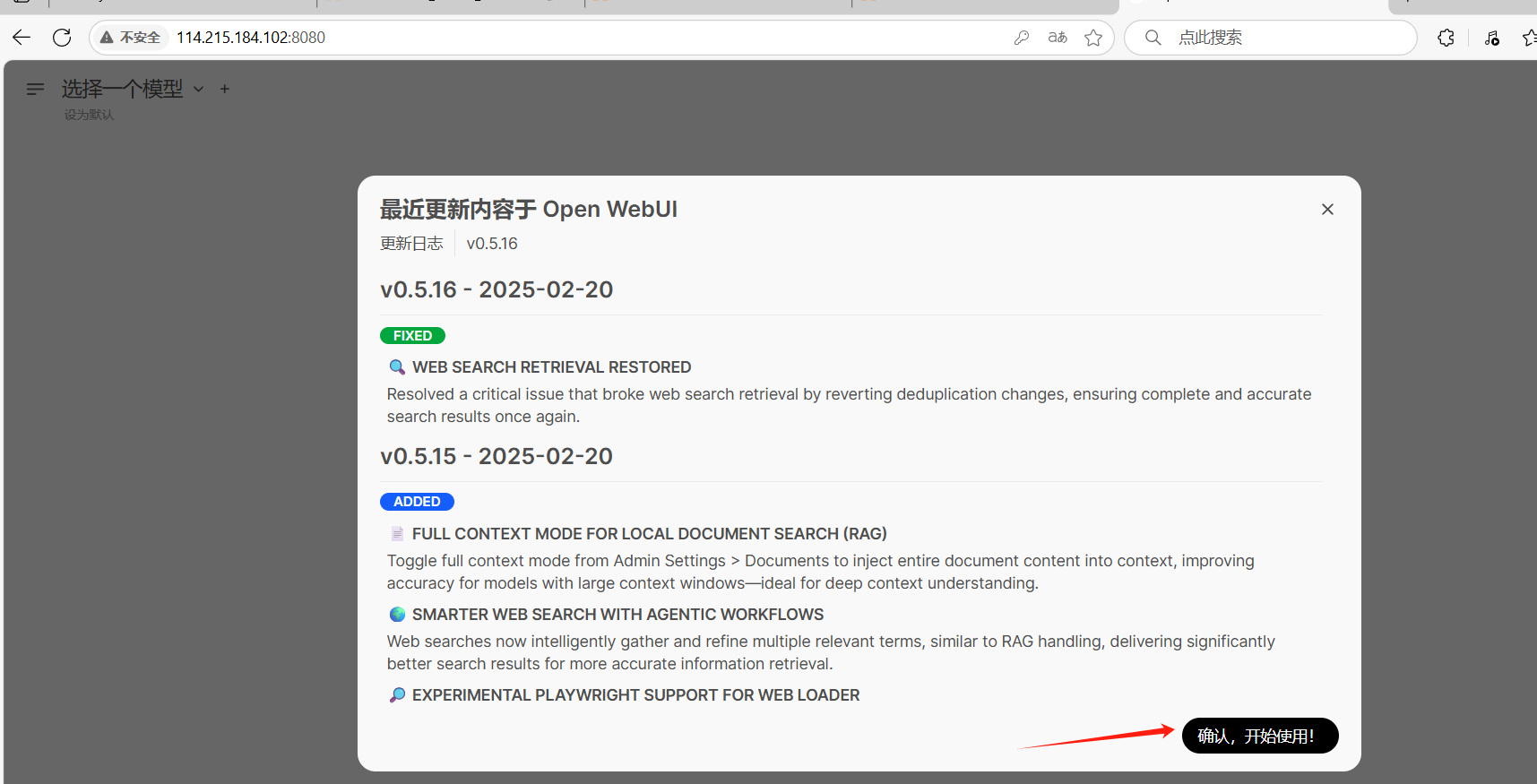

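Access Test

- http://192.168.88.100:8080 (the IP addresses shown below are all the public IP address of the 192.168.88.100 host)

Start ollama and allow connections from all devices

##Restart ollama with a parameter that allows all hosts to connect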

[root@project ~]# killall ollama

[root@project ~]# OLLAMA_HOST=0.0.0.0 ollama start &

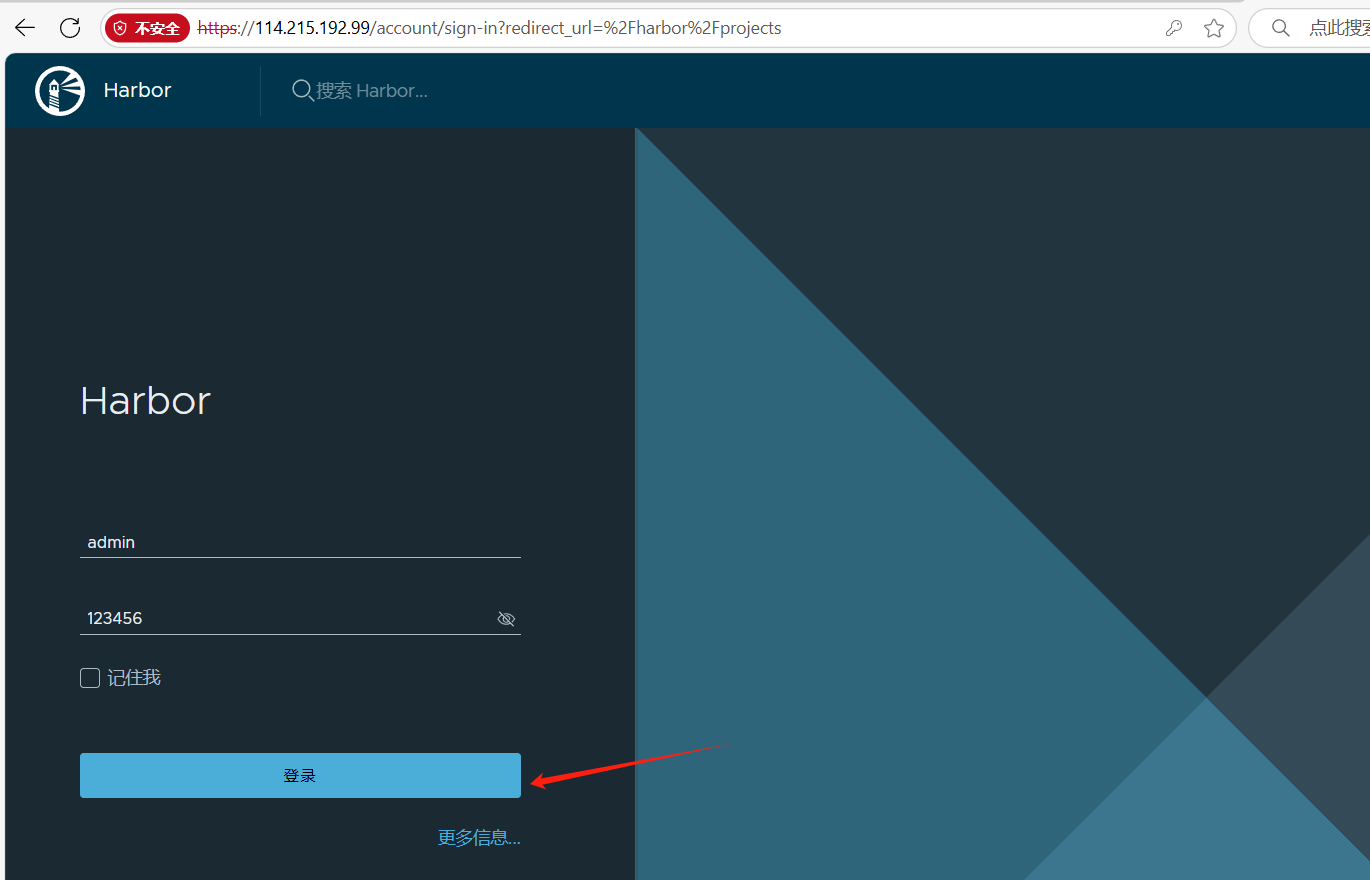

[1] 33739

Install Harbor

##Install harbor

[root@harbor ~]# yum install -y yum-utils

[root@harbor ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@harbor ~]# yum install docker-ce

[root@harbor ~]# systemctl enable docker --now

[root@harbor ~]# wget https://github.com/docker/compose/releases/download/1.25.0/docker-compose-Linux-x86_64

##Upload the file

[root@harbor ~]# ll

total 16636

-rw-r--r-- 1 root root 17031320 Jul 21 19:08 docker-compose-Linux-x86_64

[root@harbor ~]# mv docker-compose-Linux-x86_64 /usr/bin/docker-compose

[root@harbor ~]# chmod +x /usr/bin/docker-compose

[root@harbor ~]# docker-compose version

docker-compose version 1.25.0, build 0a186604

docker-py version: 4.1.0

CPython version: 3.7.4

OpenSSL version: OpenSSL 1.1.0l 10 Sep 2019

##Upload the file; install the Harbor server

##[root@harbor ~]# wget https://github.com/goharbor/harbor/releases/download/v2.5.3/harbor-offline-installer-v2.5.3.tgz

[root@harbor ~]# ll

total 644548

-rw-r--r-- 1 root root 660014621 Jul 21 19:11 harbor-offline-installer-v2.5.3.tgz

[root@harbor ~]# tar -xf harbor-offline-installer-v2.5.3.tgz

[root@harbor ~]# cd harbor/

[root@harbor harbor]# ll

total 647840

-rw-r--r-- 1 root root 3361 Jul 7 2022 common.sh

-rw-r--r-- 1 root root 663348871 Jul 7 2022 harbor.v2.5.3.tar.gz

-rw-r--r-- 1 root root 9917 Jul 7 2022 harbor.yml.tmpl

-rwxr-xr-x 1 root root 2500 Jul 7 2022 install.sh

-rw-r--r-- 1 root root 11347 Jul 7 2022 LICENSE

-rwxr-xr-x 1 root root 1881 Jul 7 2022 prepare

[root@harbor harbor]# docker load -i harbor.v2.5.3.tar.gz

# Create the HTTPS certificate

[root@harbor harbor]# mkdir tls

[root@harbor harbor]# openssl genrsa -out tls/cert.key 2048

[root@harbor harbor]# openssl req -new -x509 -days 3650 \
-key tls/cert.key -out tls/cert.crt \
-subj "/C=CN/ST=BJ/L=BJ/O=Tedu/OU=NSD/CN=harbor"

[root@harbor harbor]# mv harbor.yml.tmpl harbor.yml

[root@harbor harbor]# vim harbor.yml

hostname: harbor
https:
  # https port for harbor, default is 443
  port: 443
  # The path of cert and key files for nginx
  certificate: /root/harbor/tls/cert.crt
  private_key: /root/harbor/tls/cert.key
harbor_admin_password: 123456

[root@harbor harbor]# ./prepare #check that the configuration is valid

Successfully called func: create_root_cert

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

# Create and start the project

[root@harbor harbor]# docker compose -f docker-compose.yml up -d

[root@harbor harbor]# docker ps

[root@harbor harbor]# chmod 0755 /etc/rc.d/rc.local

[root@harbor harbor]# echo "/usr/bin/docker compose -p harbor start" >>/etc/rc.d/rc.local

Create a New Project

Push the ollama Image to the Harbor Registry

[root@web ~]# vim /etc/hosts

[root@web ~]# tail -1 /etc/hosts

192.168.88.30 harbor

[root@web ~]# vim /etc/docker/daemon.json

[root@web ~]# cat /etc/docker/daemon.json

{
  "registry-mirrors": ["https://harbor:443"],
  "insecure-registries": ["harbor:443"]
}

[root@web ~]# systemctl restart docker
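Editing `/etc/docker/daemon.json` by hand can clobber settings that are already there. The following is a minimal sketch, not part of the original procedure, of merging the two keys shown above into whatever JSON the file already holds. The function name `merge_daemon_config` is hypothetical, and writing the result back to disk is left to the caller.

```python
import json


def merge_daemon_config(existing_text: str, registry: str) -> str:
    """Return daemon.json text with `registry` added as mirror and insecure registry.

    `existing_text` is the current file content (may be empty).
    Existing keys are preserved and entries are not duplicated.
    """
    config = json.loads(existing_text) if existing_text.strip() else {}

    mirrors = config.setdefault("registry-mirrors", [])
    mirror_url = f"https://{registry}"
    if mirror_url not in mirrors:
        mirrors.append(mirror_url)

    insecure = config.setdefault("insecure-registries", [])
    if registry not in insecure:
        insecure.append(registry)

    return json.dumps(config, indent=2)
```

For example, `merge_daemon_config("", "harbor:443")` produces the same two keys as the file shown above; Docker still has to be restarted afterwards.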

[root@web ~]# docker login harbor:443

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@web ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ollama/ollama latest 676364f65510 4 months ago 3.48GB

ghcr.io/open-webui/open-webui main ab78641aa87a 5 months ago 4.46GB
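The tag applied in the next step follows the pattern `registry/project/repository:tag`; in Harbor the first path component is the project, which is why a project has to exist before the push. As a rough sketch (the helper names `split_reference` and `retarget` are illustrative, and the registry-detection rule is a simplified version of Docker's), the mapping can be written as:

```python
def split_reference(ref: str):
    """Split an image reference into (registry, name, tag).

    Simplified rule: the first component is treated as a registry only
    if it contains '.' or ':' or equals 'localhost'.
    """
    first, _, rest = ref.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "", ref

    name, sep, tag = path.rpartition(":")
    if not sep or "/" in tag:
        # No tag present; Docker defaults to "latest".
        name, tag = path, "latest"
    return registry, name, tag


def retarget(ref: str, registry: str) -> str:
    """Build the reference for the same image under another registry."""
    _, name, tag = split_reference(ref)
    return f"{registry}/{name}:{tag}"
```

`retarget("ollama/ollama:latest", "harbor:443")` yields the same target name that `docker tag` uses in this section.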

[root@web ~]# docker tag ollama/ollama:latest harbor:443/ollama/ollama:latest

[root@web ~]# docker push harbor:443/ollama/ollama:latest

The push refers to repository [harbor:443/ollama/ollama]

d4300076d0eb: Pushed

1fdc8c80e260: Pushed

ae8280b0d22b: Pushed

fffe76c64ef2: Pushed

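latest: digest: sha256:2831b76e76d2dff975e98ff02d0b2bcca50d62605585e048fba3aaa5cdf88d84 size: 1167

View the pushed image

Power on the three hosts of the k8s cluster (for cluster installation, see the k8s documentation)

-----------------------Deploy ollama on k8s

##Check node status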

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane 5m46s v1.28.15

node1 Ready <none> 4m32s v1.28.15

node2 Ready <none> 4m17s v1.28.15

##On all nodes, edit the hosts file

[root@node1 ~]# tail -1 /etc/hosts

192.168.88.30 harbor

##On all nodes, configure containerd to trust the harbor registry

[root@master ~]# vim /etc/containerd/config.toml

Insert at line 154:

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor:443"]
  endpoint = ["https://harbor:443"]
[plugins."io.containerd.grpc.v1.cri".registry.configs."harbor:443".tls]
  insecure_skip_verify = true

[root@master ~]# systemctl restart containerd

##Create the ollama pod

[root@master ~]# mkdir ollama

[root@master ~]# cd ollama

[root@master ollama]# vim ollama-pv.yaml

[root@master ollama]# vim ollama-pvc.yaml

[root@master ollama]# vim ollama.yaml

[root@master ollama]# cat ollama-pv.yaml

---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: ollama-pv
spec:
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 30Gi
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: /data/ollama
    type: DirectoryOrCreate

[root@master ollama]# cat ollama-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ollama-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20G

[root@master ollama]# cat ollama.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ollama
  labels:
    app: ollama
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ollama
  template:
    metadata:
      labels:
        app: ollama
    spec:
      volumes:
      - name: model-storage
        persistentVolumeClaim:
          claimName: ollama-pvc
      containers:
      - name: ollama
        image: harbor:443/ollama/ollama:latest
        ports:
        - containerPort: 11434
        volumeMounts:
        - mountPath: /models
          name: model-storage

[root@master ollama]# kubectl apply -f ollama-pv.yaml

persistentvolume/ollama-pv created

[root@master ollama]# kubectl apply -f ollama-pvc.yaml

persistentvolumeclaim/ollama-pvc created

[root@master ollama]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ollama-pvc Bound ollama-pv 30Gi RWO 99s

[root@master ollama]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ollama-pv 30Gi RWO Retain Bound default/ollama-pvc 8m8s
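The claim requests `20G` while the volume offers `30Gi`, and the claim still binds: a statically provisioned volume can satisfy a claim when its capacity is at least the requested size and the access modes match. The two suffixes are different units (decimal vs. binary), which the following sketch makes explicit. The helper `to_bytes` is illustrative and handles only the common suffixes, not the full Kubernetes quantity grammar.

```python
# Decimal (SI) and binary (IEC) suffixes used in Kubernetes quantities.
_UNITS = {
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '20G' or '30Gi' to a number of bytes."""
    # Try two-letter suffixes first so 'Gi' is not mistaken for an unknown unit.
    for suffix in sorted(_UNITS, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * _UNITS[suffix])
    return int(quantity)


def can_satisfy(pv_capacity: str, pvc_request: str) -> bool:
    """Capacity check only; access modes and storage class are not modeled."""
    return to_bytes(pv_capacity) >= to_bytes(pvc_request)
```

Here `to_bytes("20G")` is 20,000,000,000 bytes and `to_bytes("30Gi")` is 32,212,254,720 bytes, so `can_satisfy("30Gi", "20G")` holds.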

[root@master ollama]# kubectl apply -f ollama.yaml

deployment.apps/ollama created

[root@master ollama]# kubectl get pod

NAME READY STATUS RESTARTS AGE

ollama-5dfc6997f7-lz7ms 1/1 Running 0 68s

##Create the ollama service

[root@master ~]# vim ollama_svc.yaml

[root@master ~]# cat ollama_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: ollama
  labels:
    app: ollama
spec:
  type: NodePort
  ports:
  - port: 11434
    targetPort: 11434
    protocol: TCP
    name: http
  selector:
    app: ollama

[root@master ~]# kubectl apply -f ollama_svc.yaml

service/ollama created

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.0.0.1 <none> 443/TCP 7h51m

ollama NodePort 172.8.89.171 <none> 11434:30948/TCP 6s

[root@master ollama]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ollama-5dfc6997f7-lz7ms 1/1 Running 0 4m11s 172.10.104.4 node2 <none> <none>

[root@master ollama]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 172.0.0.1 <none> 443/TCP 8h <none>

ollama NodePort 172.8.89.171 <none> 11434:30948/TCP 23m app=ollama
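In the `PORT(S)` column, `11434:30948/TCP` reads as service port, then node port, then protocol. Clients outside the cluster use a node's IP with the node port (30948 here), not 11434. A small sketch of that reading, with hypothetical helper names:

```python
def parse_port_mapping(ports: str):
    """Parse a NodePort entry like '11434:30948/TCP' into (service_port, node_port, protocol)."""
    mapping, _, protocol = ports.partition("/")
    service_port, _, node_port = mapping.partition(":")
    return int(service_port), int(node_port), protocol


def node_url(node_ip: str, ports: str) -> str:
    """Build the URL an external client would use to reach the service."""
    _, node_port, _ = parse_port_mapping(ports)
    return f"http://{node_ip}:{node_port}"
```

With the values from this section, `node_url("192.168.88.1", "11434:30948/TCP")` gives `http://192.168.88.1:30948`, the address used in the `curl` commands that follow.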

##192.168.88.1 is the master's IP address

[root@master ollama]# curl 192.168.88.1:30948

Ollama is running

##List the available models

[root@master ollama]# curl http://192.168.88.1:30948/api/tags

{"models":[]}

##Pull the model

[root@master ~]# curl -X POST http://192.168.88.1:30948/api/pull -H 'Content-Type: application/json' -d '{ "name": "deepseek-r1:1.5b"}'

{"status":"verifying sha256 digest"}

{"status":"writing manifest"}

{"status":"success"}
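The pull endpoint streams one JSON object per line, and the final `{"status":"success"}` line is what signals completion. A minimal sketch of checking such a stream follows. It works on lines that have already been read, so it makes no network call; the function name is hypothetical, and treating an `error` key as failure is an assumption about how the server reports problems in the stream.

```python
import json


def pull_succeeded(lines) -> bool:
    """Return True if a stream of JSON status lines ends with status 'success'.

    Raises RuntimeError if any line carries an 'error' field (assumed error shape).
    """
    last_status = None
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank separators between JSON objects
        event = json.loads(line)
        if "error" in event:
            raise RuntimeError(event["error"])
        last_status = event.get("status", last_status)
    return last_status == "success"
```

Feeding it the three status lines shown above returns `True`; a stream cut off before the last line returns `False`, which is a reasonable cue to retry the pull.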

##List the available models

[root@master ollama]# curl http://192.168.88.1:30948/api/tags

...

{"models":[{"name":"deepseek-r1:1.5b","model":"deepseek-r1:1.5b","modified_at":"2025-07-22T16:53:58.774122157Z","size":1117322768,"digest":"e0979632db5a88d1a53884cb2a941772d10ff5d055aabaa6801c4e36f3a6c2d7","details":{"parent_model":"","format":"gguf","family":"qwen2","families":["qwen2"],"parameter_size":"1.8B","quantization_level":"Q4_K_M"}}]}

...
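The `/api/tags` response is easier to read once reduced to the fields that `ollama ls` prints. The sketch below parses a response body that has already been fetched; `summarize_models` is an illustrative name, and sizes are shown in decimal gigabytes to match the CLI output earlier in this document.

```python
import json


def summarize_models(tags_json: str):
    """Return (name, size, quantization) tuples from an /api/tags response body."""
    models = json.loads(tags_json)["models"]
    summary = []
    for model in models:
        size_gb = model["size"] / 1e9  # bytes to decimal GB
        quant = model.get("details", {}).get("quantization_level", "")
        summary.append((model["name"], f"{size_gb:.1f} GB", quant))
    return summary
```

An empty cluster returns an empty list, as in the first `/api/tags` call above.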

##Run the model

[root@master ollama]# curl -X POST http://192.168.88.1:30948/api/generate \

> -H 'Content-Type: application/json' \

> -d '{

> "model": "deepseek-r1:1.5b",

> "prompt": "你是谁",

> "stream": false

> }'

...

{"model":"deepseek-r1:1.5b","created_at":"2025-07-22T16:55:59.791737844Z","response":"\u003cthink\u003e\n\n\u003c/think\u003e\n\n您好!我是由中国的深度求索(DeepSeek)公司开发的智能助手DeepSeek-R1。如您有任何任何问题,我会尽我所能为您提供帮助。","done":true,"done_reason":"stop","context":[151644,105043,100165,151645,151648,271,151649,271,111308,6313,104198,67071,105538,102217,30918,50984,9909,33464,39350,7552,73218,100013,9370,100168,110498,33464,39350,10911,16,1773,29524,87026,110117,99885,86119,3837,105351,99739,35946,111079,113445,100364,1773],"total_duration":9225927714,"load_duration":1599434223,"prompt_eval_count":5,"prompt_eval_duration":579000000,"eval_count":40,"eval_duration":7035000000}

...
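Two things in the `/api/generate` response are worth post-processing: the answer arrives wrapped with a `<think>...</think>` block, and the `*_duration` fields are in nanoseconds. The following sketch, with hypothetical helper names, strips the reasoning block and derives generation speed from `eval_count` and `eval_duration`.

```python
import re


def strip_think(text: str) -> str:
    """Remove <think>...</think> blocks and surrounding whitespace from a response."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def tokens_per_second(response: dict) -> float:
    """Generated tokens per second; eval_duration is reported in nanoseconds."""
    return response["eval_count"] / (response["eval_duration"] / 1e9)
```

For the response above, 40 tokens over 7.035 s works out to roughly 5.7 tokens per second on this CPU-only node.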

##Run Open WebUI in docker against the ollama service on k8s

[root@master ollama]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 172.0.0.1 <none> 443/TCP 9h

ollama NodePort 172.8.89.171 <none> 11434:30948/TCP 94m

[root@web html]# rm -rf /data/ollama/models

[root@web html]# rm -rf /data/ollama/webui

##The IP and port here are the master's IP address and the service's NodePort

[root@web html]# docker run -d -p 8080:8080 -e ENABLE_OPENAI_API=false -e \

> OLLAMA_BASE_URL=http://192.168.88.1:30948 -e HF_HUB_OFFLINE=1 -v \

> /data/ollama/models:/root/.ollama -v /data/ollama/webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

6dc36c9338a891230cc20588b495c8a61f4fec7bc836515dad6e4ebd8eb91e29
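`OLLAMA_BASE_URL` has to be a plain `scheme://host:port` value. When combining a node IP, a NodePort, and the container port, it is easy to end up with a stray second `:port`, and the container still starts but cannot reach the back end. A quick sanity check before running the container might look like the sketch below; `check_base_url` is a hypothetical helper, not something Open WebUI provides.

```python
from urllib.parse import urlsplit


def check_base_url(url: str) -> str:
    """Validate an http(s) base URL and return it in normalized form.

    Raises ValueError for a missing host, an unsupported scheme,
    a malformed port, or an unexpected path.
    """
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"unsupported scheme in {url!r}")
    if not parts.hostname:
        raise ValueError(f"missing host in {url!r}")
    port = parts.port  # raises ValueError when the port is not a single integer
    if parts.path not in ("", "/"):
        raise ValueError(f"unexpected path in {url!r}")
    if port is None:
        return f"{parts.scheme}://{parts.hostname}"
    return f"{parts.scheme}://{parts.hostname}:{port}"
```

`check_base_url("http://192.168.88.1:30948")` passes, while a value with two port suffixes raises `ValueError`.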

[root@web html]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6dc36c9338a8 ghcr.io/open-webui/open-webui:main "bash start.sh" 6 seconds ago Up 5 seconds (health: starting) 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp open-webui

--------------------Chatbot

[root@web ~]# cd /etc/nginx/

[root@web nginx]# \cp nginx.conf.default nginx.conf

[root@web nginx]# cd /usr/share/nginx/html/

[root@web html]# ll

total 20

-rw-r--r-- 1 root root 3332 Jun 10 2021 404.html

-rw-r--r-- 1 root root 3404 Jun 10 2021 50x.html

-rw-r--r-- 1 root root 3429 Jun 10 2021 index.html

-rw-r--r-- 1 root root 368 Jun 10 2021 nginx-logo.png

-rw-r--r-- 1 root root 1800 Jun 10 2021 poweredby.png

##Upload the index.html, styles.css, and script.js files

[root@web html]# ll

total 28

-rw-r--r-- 1 root root 3332 Jun 10 2021 404.html

-rw-r--r-- 1 root root 3404 Jun 10 2021 50x.html

-rw-r--r-- 1 root root 860 Jul 23 00:59 index.html

-rw-r--r-- 1 root root 368 Jun 10 2021 nginx-logo.png

-rw-r--r-- 1 root root 1800 Jun 10 2021 poweredby.png

-rw-r--r-- 1 root root 3474 Jul 23 00:59 script.js

-rw-r--r-- 1 root root 2718 Jul 23 00:59 styles.css

[root@web html]# vim /etc/nginx/nginx.conf

[root@web html]# egrep -v "^#|^$|^\s+#" /etc/nginx/nginx.conf

worker_processes 1;

events {
    worker_connections 1024;
}
http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;
    server {
        listen 80;
        server_name localhost;
        location /api/ {
            proxy_pass http://192.168.88.1:30948/;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }

}
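Because `proxy_pass` here ends with a URI part (`/`), nginx replaces the matched `location` prefix with that URI before forwarding. The sketch below models only that substitution rule; `upstream_uri` is an illustrative name, and regex locations, rewrites, and query strings are ignored.

```python
def upstream_uri(request_uri: str, location_prefix: str, proxy_pass_uri: str) -> str:
    """Model how nginx rewrites the URI when proxy_pass includes a URI part.

    The part of the request URI that matches the location prefix is
    replaced by the URI given in the proxy_pass directive.
    """
    if not request_uri.startswith(location_prefix):
        raise ValueError("request does not match this location")
    return proxy_pass_uri + request_uri[len(location_prefix):]
```

With this configuration, a browser request for `/api/tags` reaches the upstream as `/tags`. Whether that is the intended path depends on what `script.js` requests, which is not shown here; if the front end calls `/api/generate` directly and the back end should see the same path, `proxy_pass http://192.168.88.1:30948/api/;` would be one way to keep the prefix.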

[root@web html]# systemctl restart nginx.service

Test

Browse to the web host's IP address

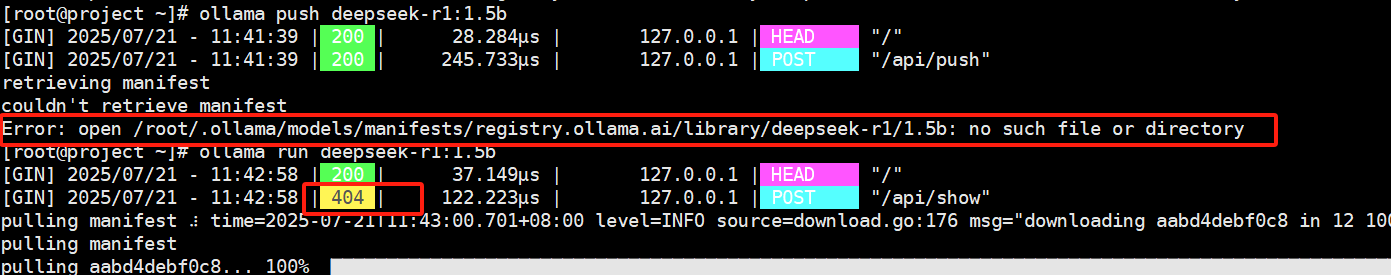

Troubleshooting

Errors when pulling or running a model

- Network problem; retry later

- The pod already exists