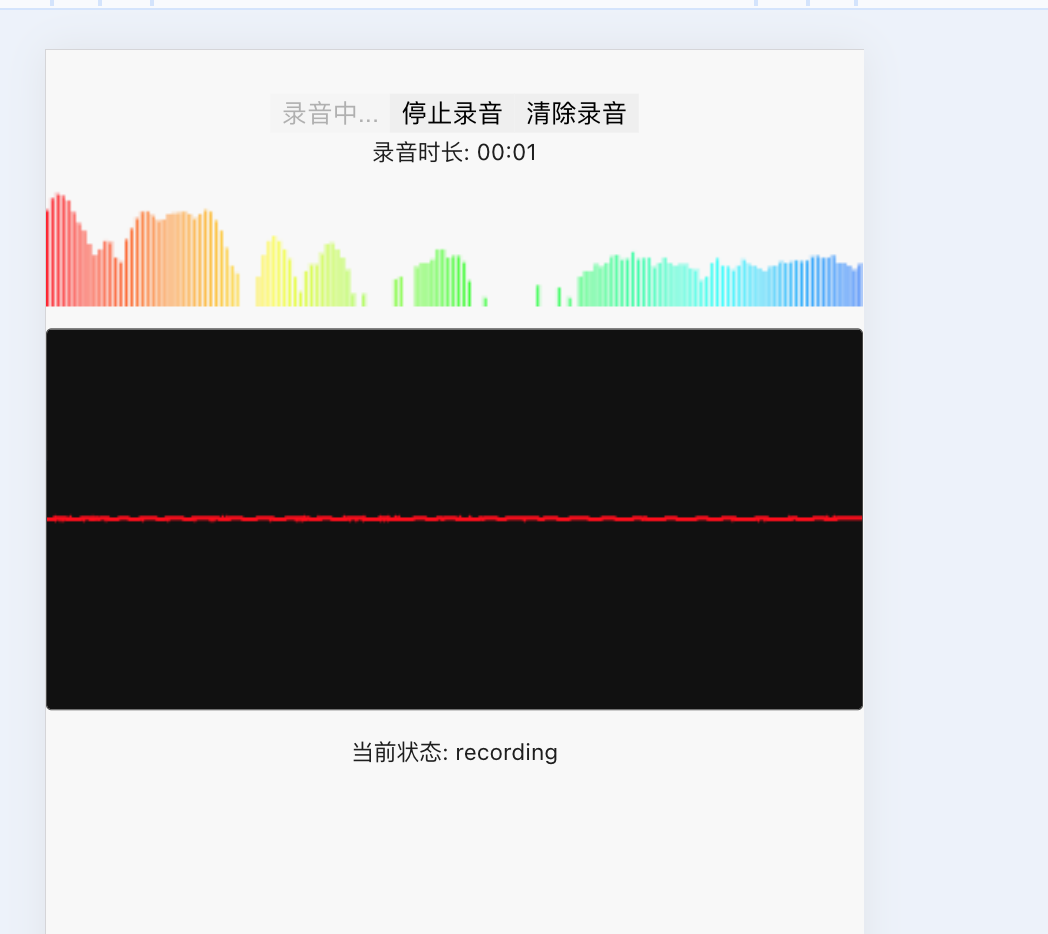

Audio recording in React

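This post shows two animated visualizations that run while a recording is in progress. The implementation covers the following: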

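1. Implement basic recording with the react-media-recorder library
2. Add a timer that shows how long the recording has been running
3. Visualize the audio by drawing a frequency spectrum on a Canvas
4. Detect the microphone permission state
5. Provide buttons to start and stop recording and to clear the recording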
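The timer in item 2 can count interval ticks, but ticks may be delayed when the tab is busy or in the background. A minimal sketch of an alternative, assuming you store the start timestamp and derive the label from the clock on each tick. The helper names `elapsedSeconds` and `formatClock` are hypothetical and are not part of react-media-recorder.

```typescript
// Hypothetical helpers for the recording timer (not a library API).

/** Whole seconds between two millisecond timestamps, never negative. */
function elapsedSeconds(startMs: number, nowMs: number): number {
  return Math.max(0, Math.floor((nowMs - startMs) / 1000));
}

/** Formats a second count as mm:ss. */
function formatClock(totalSeconds: number): string {
  const pad = (n: number): string => (n < 10 ? "0" + n : String(n));
  const mins = Math.floor(totalSeconds / 60);
  const secs = totalSeconds % 60;
  return pad(mins) + ":" + pad(secs);
}

// Usage: 75.5 seconds after the start timestamp.
console.log(formatClock(elapsedSeconds(0, 75500))); // prints "01:15"
```

In a component, the start timestamp would be taken with `Date.now()` when recording begins, and each interval tick would recompute the label instead of incrementing a counter.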

First, add the dependency:

    npm install react-media-recorder

    import React, { useState, useEffect, useRef } from 'react';
    import { useReactMediaRecorder } from 'react-media-recorder';
    import ECGVisualizer from './component/ECGV';
    import './index.less';

    // The Permissions API can also report 'prompt', so the state type includes it.
    type MicPermission = 'unknown' | PermissionState;

    const EnhancedAudioRecorder = () => {
      const [recordingTime, setRecordingTime] = useState(0);
      const [permissionStatus, setPermissionStatus] = useState<MicPermission>('unknown');
      const timerRef = useRef<ReturnType<typeof setInterval> | null>(null);
      const canvasRef = useRef<HTMLCanvasElement>(null);
      const audioContextRef = useRef<AudioContext | null>(null);
      const animationRef = useRef<number | null>(null);

      // Stop the timer and the animation loop, and release the audio context.
      const stopVisuals = () => {
        if (timerRef.current !== null) clearInterval(timerRef.current);
        if (animationRef.current !== null) cancelAnimationFrame(animationRef.current);
        if (audioContextRef.current && audioContextRef.current.state !== 'closed') {
          audioContextRef.current.close();
        }
        timerRef.current = null;
        animationRef.current = null;
        audioContextRef.current = null;
      };

      const { status, startRecording, stopRecording, mediaBlobUrl, previewAudioStream, clearBlobUrl } =
        useReactMediaRecorder({
          audio: true,
          onStart: () => setPermissionStatus('granted'),
          onStop: () => stopVisuals(),
        });

      // Check the initial permission state.
      useEffect(() => {
        const checkPermission = async () => {
          try {
            if (navigator.permissions) {
              // Some TypeScript DOM typings omit 'microphone', hence the cast.
              const result = await navigator.permissions.query({ name: 'microphone' as PermissionName });
              setPermissionStatus(result.state);
              result.onchange = () => setPermissionStatus(result.state);
            }
          } catch (e) {
            console.log('Permissions API not supported');
          }
        };
        checkPermission();
      }, []);

      // Draw the frequency spectrum of the live stream on the canvas.
      const setupAudioVisualizer = (stream: MediaStream) => {
        const AudioContextClass = window.AudioContext || (window as any).webkitAudioContext;
        const audioContext: AudioContext = new AudioContextClass();
        const analyser = audioContext.createAnalyser();
        analyser.fftSize = 512;
        audioContext.createMediaStreamSource(stream).connect(analyser);
        audioContextRef.current = audioContext;

        const bufferLength = analyser.frequencyBinCount; // fftSize / 2 = 256 bins
        const dataArray = new Uint8Array(bufferLength);

        const draw = () => {
          animationRef.current = requestAnimationFrame(draw);
          const canvas = canvasRef.current;
          const ctx = canvas ? canvas.getContext('2d') : null;
          if (!canvas || !ctx) return;
          analyser.getByteFrequencyData(dataArray);
          const { width, height } = canvas;
          ctx.clearRect(0, 0, width, height);
          // Each bin gets an equal slot; the bar fills 80% of it so all bars fit the canvas.
          const slotWidth = width / bufferLength;
          const barWidth = slotWidth * 0.8;
          for (let i = 0; i < bufferLength; i++) {
            // Square-root scaling makes quiet frequencies easier to see.
            const barHeight = Math.sqrt(dataArray[i] / 255) * height;
            ctx.fillStyle = `hsl(${(i / bufferLength) * 360}, 100%, 50%)`;
            ctx.fillRect(i * slotWidth, height - barHeight, barWidth, barHeight);
          }
        };
        draw();
      };

      // Start the timer and the visualizer while recording; clean up afterwards.
      useEffect(() => {
        if (previewAudioStream && status === 'recording') {
          timerRef.current = setInterval(() => setRecordingTime(prev => prev + 1), 1000);
          setupAudioVisualizer(previewAudioStream);
        }
        return stopVisuals;
      }, [previewAudioStream, status]);

      const formatTime = (seconds: number) => {
        const mins = Math.floor(seconds / 60).toString().padStart(2, '0');
        const secs = (seconds % 60).toString().padStart(2, '0');
        return `${mins}:${secs}`;
      };

      // Start recording unless microphone access has been denied.
      const handleStartRecording = async () => {
        if (permissionStatus === 'denied') {
          alert('Please allow microphone access in your browser settings, then refresh the page.');
          return;
        }
        try {
          setRecordingTime(0); // each recording starts from 00:00
          await startRecording();
        } catch (err) {
          stopVisuals();
          console.error('Recording error:', err);
        }
      };

      const handleClear = () => {
        clearBlobUrl();
        setRecordingTime(0);
        // Wipe the last frame left on the spectrum canvas.
        const canvas = canvasRef.current;
        const ctx = canvas ? canvas.getContext('2d') : null;
        if (canvas && ctx) ctx.clearRect(0, 0, canvas.width, canvas.height);
      };

      return (
        <div className="enhanced-recorder">
          <div className="recorder-controls">
            <button onClick={handleStartRecording} disabled={status === 'recording'}>
              {status === 'recording' ? 'Recording...' : 'Start recording'}
            </button>
            <button onClick={stopRecording} disabled={status !== 'recording'}>Stop recording</button>
            <button onClick={handleClear}>Clear recording</button>
            <div className="recording-time">Recording time: {formatTime(recordingTime)}</div>
          </div>
          <div className="visualizer-container">
            <canvas ref={canvasRef} height={50} style={{ width: '100%' }} />
          </div>
          <ECGVisualizer previewAudioStream={previewAudioStream} status={status} />
          {mediaBlobUrl && (
            <div className="playback-section">
              <h3>Playback {mediaBlobUrl}</h3>
              <audio src={mediaBlobUrl} controls />
              {/* The actual audio encoding depends on the browser's MediaRecorder. */}
              <a href={mediaBlobUrl} download={`recording-${new Date().toISOString()}.wav`} className="download-btn">
                Download recording
              </a>
            </div>
          )}
          <div className="status-info">
            <p>Current status: <span className={status}>{status}</span></p>
          </div>
        </div>
      );
    };

    export default EnhancedAudioRecorder;
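The spectrum drawing loop mixes Canvas calls with the bar geometry, which makes the math hard to check outside a browser. The sketch below pulls the geometry into a pure function, assuming bars are laid out in equal-width slots and use the same square-root height scaling and hue sweep as the component. `SpectrumBar` and `layoutSpectrumBars` are hypothetical names introduced only for illustration.

```typescript
// Hypothetical helper: computes bar geometry from analyser byte data.
interface SpectrumBar {
  x: number;      // left edge in canvas pixels
  width: number;  // bar width in canvas pixels
  height: number; // bar height in canvas pixels
  hue: number;    // HSL hue in degrees, 0 to 360
}

function layoutSpectrumBars(
  data: Uint8Array,
  canvasWidth: number,
  canvasHeight: number
): SpectrumBar[] {
  const bars: SpectrumBar[] = [];
  const slot = canvasWidth / data.length; // horizontal space per frequency bin
  for (let i = 0; i < data.length; i++) {
    const level = data[i] / 255; // normalise the byte to 0..1
    bars.push({
      x: i * slot,
      width: slot * 0.8, // leave 20% of each slot as a gap
      // The square root lifts quiet bins: a level of 0.25 draws at half height.
      height: Math.sqrt(level) * canvasHeight,
      hue: (i / data.length) * 360,
    });
  }
  return bars;
}

// Usage: two bins on a 100 x 50 canvas, one silent and one at full level.
const demoBars = layoutSpectrumBars(new Uint8Array([0, 255]), 100, 50);
console.log(demoBars);
```

A draw loop could then iterate over the returned bars and call `fillRect(bar.x, canvasHeight - bar.height, bar.width, bar.height)` for each one.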

The second visualization is implemented as a separate component:

    import React, { useRef, useEffect } from 'react';
    import { StatusMessages } from 'react-media-recorder';
    import './ECGV.less';

    interface ECGVisualizerProps {
      previewAudioStream: MediaStream | null;
      status: StatusMessages;
    }

    const ECGVisualizer: React.FC<ECGVisualizerProps> = ({ previewAudioStream, status }) => {
      const canvasRef = useRef<HTMLCanvasElement>(null);
      const animationRef = useRef<number | null>(null);
      const audioContextRef = useRef<AudioContext | null>(null);
      const analyserRef = useRef<AnalyserNode | null>(null);
      const dataArrayRef = useRef<Uint8Array | null>(null);

      // Set up the audio context and analyser, then start drawing.
      const setupAudioContext = () => {
        if (!previewAudioStream || !canvasRef.current) return;
        const AudioContextClass = window.AudioContext || (window as any).webkitAudioContext;
        const audioContext: AudioContext = new AudioContextClass();
        const analyser = audioContext.createAnalyser();
        analyser.fftSize = 2048;
        audioContext.createMediaStreamSource(previewAudioStream).connect(analyser);
        audioContextRef.current = audioContext;
        analyserRef.current = analyser;
        dataArrayRef.current = new Uint8Array(analyser.frequencyBinCount);
        drawECG();
      };

      // Draw the ECG-style waveform.
      const drawECG = () => {
        const analyser = analyserRef.current;
        const canvas = canvasRef.current;
        const data = dataArrayRef.current;
        if (!analyser || !canvas || !data) return;
        const ctx = canvas.getContext('2d');
        if (!ctx) return;
        const { width, height } = canvas;
        analyser.getByteTimeDomainData(data);
        ctx.clearRect(0, 0, width, height);
        ctx.lineWidth = 2;

        // Faint baseline through the vertical centre.
        ctx.beginPath();
        ctx.strokeStyle = 'rgba(255, 0, 0, 0.2)';
        ctx.moveTo(0, height / 2);
        ctx.lineTo(width, height / 2);
        ctx.stroke();

        // Waveform: samples are bytes centred on 128 (silence).
        ctx.beginPath();
        ctx.strokeStyle = '#ff0000';
        const sliceWidth = width / data.length;
        let x = 0;
        let lastY = height / 2;
        for (let i = 0; i < data.length; i++) {
          const y = (data[i] / 128.0) * (height / 2);
          if (i === 0) {
            ctx.moveTo(x, y);
          } else {
            ctx.lineTo(x, y);
            // On a large jump, flush the current path and start a new one here.
            if (Math.abs(y - lastY) > height / 4) {
              ctx.stroke();
              ctx.beginPath();
              ctx.moveTo(x, y);
            }
          }
          lastY = y;
          x += sliceWidth;
        }
        ctx.stroke();
        animationRef.current = requestAnimationFrame(drawECG);
      };

      useEffect(() => {
        if (status === 'recording' && previewAudioStream) {
          setupAudioContext();
        }
        return () => {
          if (animationRef.current !== null) {
            cancelAnimationFrame(animationRef.current);
          }
          if (audioContextRef.current && audioContextRef.current.state !== 'closed') {
            audioContextRef.current.close();
          }
        };
      }, [status, previewAudioStream]);

      return (
        <div className="ecg-container">
          <canvas
            ref={canvasRef}
            width={600}
            height={200}
            style={{ background: '#111', display: status === 'recording' ? 'block' : 'none' }}
          />
        </div>
      );
    };

    export default ECGVisualizer;
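The waveform relies on how `getByteTimeDomainData` encodes samples: each byte is centred on 128, so silence maps to the vertical middle of the canvas. The sketch below isolates that mapping and the jump test from the drawing loop, assuming the same scaling as the component. `WavePoint`, `sampleToY`, `isJump`, and `waveformPoints` are hypothetical names used only for illustration.

```typescript
// Hypothetical helpers: map time-domain bytes to canvas coordinates.
interface WavePoint {
  x: number;
  y: number;
}

/** Maps a byte sample (0..255, silence at 128) to a y coordinate. */
function sampleToY(sample: number, canvasHeight: number): number {
  return (sample / 128) * (canvasHeight / 2);
}

/** True when two consecutive points differ by more than a quarter of the height. */
function isJump(prevY: number, y: number, canvasHeight: number): boolean {
  return Math.abs(y - prevY) > canvasHeight / 4;
}

/** Spreads the samples evenly across the canvas width. */
function waveformPoints(
  samples: Uint8Array,
  canvasWidth: number,
  canvasHeight: number
): WavePoint[] {
  const sliceWidth = canvasWidth / samples.length;
  const points: WavePoint[] = [];
  for (let i = 0; i < samples.length; i++) {
    points.push({ x: i * sliceWidth, y: sampleToY(samples[i], canvasHeight) });
  }
  return points;
}

// Usage: four silent samples on a 400 x 200 canvas form a flat line at y = 100.
console.log(waveformPoints(new Uint8Array([128, 128, 128, 128]), 400, 200));
```

Because the canvas y axis points down, byte values above 128 are drawn below the centre line. This matches the component's behaviour and does not affect the ECG look.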

    .ecg-recorder {
      max-width: 800px;
      margin: 0 auto;
      padding: 20px;
      background: #222;
      color: white;
      border-radius: 10px;
      font-family: 'Arial', sans-serif;
    }

    .controls {
      display: flex;
      gap: 15px;
      align-items: center;
      margin-bottom: 20px;
    }

    .record-btn {
      background: #e53935;
      padding: 10px 20px;
      border: none;
      border-radius: 5px;
      color: white;
      font-weight: bold;
      cursor: pointer;
    }

    .record-btn:hover {
      background: #c62828;
    }

    .record-btn:disabled {
      background: #555;
      cursor: not-allowed;
    }

    .stop-btn {
      background: #1e88e5;
      padding: 10px 20px;
      border: none;
      border-radius: 5px;
      color: white;
      font-weight: bold;
      cursor: pointer;
    }

    .stop-btn:hover {
      background: #1565c0;
    }

    .stop-btn:disabled {
      background: #555;
      cursor: not-allowed;
    }

    .timer {
      margin-left: auto;
      font-family: monospace;
      font-size: 1.2em;
    }

    .ecg-container {
      margin: 20px 0;
      border: 1px solid #444;
      border-radius: 5px;
      overflow: hidden;
    }

    .playback {
      margin-top: 20px;
      padding: 15px;
      background: #333;
      border-radius: 5px;
    }

    .download-btn {
      display: inline-block;
      margin-top: 10px;
      padding: 8px 16px;
      background: #43a047;
      color: white;
      text-decoration: none;
      border-radius: 4px;
    }

    .download-btn:hover {
      background: #2e7d32;
    }

    audio {
      width: 100%;
      margin-top: 10px;
    }