Installing Hadoop 3.3.6 on a Mac M3 Pro

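1. Download the Hadoop archive

You can download it from the official website or from a netdisk mirror.

Official download: the Hadoop official download page

Netdisk link: https://pan.baidu.com/s/1p4BXq2mvby2B76lmpiEjnA?pwd=r62r (extraction code: r62r)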
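Whichever source you use, it may be worth comparing the archive against the SHA-512 digest that Apache publishes alongside each release before unpacking it. The following is a minimal sketch: the helper name `verify_sha512` is hypothetical, and it assumes that either `shasum` (shipped with macOS) or `sha512sum` is available.

```shell
# Hypothetical helper: compare a file's SHA-512 digest with an expected hex string.
verify_sha512() {
    file=$1
    expected=$2
    if command -v shasum >/dev/null 2>&1; then
        actual=$(shasum -a 512 "$file" | awk '{print $1}')
    elif command -v sha512sum >/dev/null 2>&1; then
        actual=$(sha512sum "$file" | awk '{print $1}')
    else
        echo "no SHA-512 tool found" >&2
        return 2
    fi
    # Digests are hexadecimal, so normalize the expected value to lower case.
    expected=$(printf '%s' "$expected" | tr 'A-F' 'a-f')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Usage (paste the digest from the published .sha512 file):
# verify_sha512 ~/Downloads/hadoop-3.3.6.tar.gz "<expected digest>"
```

A mismatch usually means an incomplete or altered download, in which case fetching the archive again is the safer option.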

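2. Extract the archive and add environment variables

# Move the archive to the target directory

mv ~/Downloads/hadoop-3.3.6.tar.gz /opt/module

# Enter the directory

cd /opt/module

# Extract the archive

tar -zxvf hadoop-3.3.6.tar.gz

# Rename the directory

mv hadoop-3.3.6 hadoop

# Create directories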

mkdir -p /opt/module/hadoop/data/dfs/name

mkdir -p /opt/module/hadoop/data/dfs/data

mkdir -p /opt/module/hadoop/data/hadoop/edits

mkdir -p /opt/module/hadoop/data/hadoop/snn/checkpoint

mkdir -p /opt/module/hadoop/data/hadoop/snn/edits

mkdir -p /opt/module/hadoop/data/hadoop/tmp

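mkdir -p /opt/module/hadoop/logs

# Add environment variables

sudo vim /etc/profile

export JAVA8_HOME="/Library/Java/JavaVirtualMachines/jdk8/Contents/Home"

# JAVA_HOME is used by the lines below; if it is not already set elsewhere, point it at the JDK above

export JAVA_HOME="$JAVA8_HOME"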

export MAVEN_HOME="/Library/Java/apache-maven-3.8.8"

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export HADOOP_HOME=/opt/module/hadoop

export JAVA_LIBRARY_PATH="$HADOOP_HOME/lib/native"

export HADOOP_COMMON_LIB_NATIVE_DIR="$HADOOP_HOME/lib/native"

export HADOOP_LOG_DIR=$HADOOP_HOME/logs

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_USER_NAME=hdfs

export PATH="$JAVA_HOME/bin:$MYSQL_HOME/bin:$MAVEN_HOME:/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$MAVEN_HOME/bin:$PATH:."

3. Edit the configuration

# The paths below are relative to the Hadoop installation directory

cd /opt/module/hadoop

vim etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://0.0.0.0:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/module/hadoop/data</value>
  </property>
  <property>
    <name>hadoop.http.staticuser.user</name>
    <value>hdfs</value>
  </property>
  <!-- Buffer size; tune according to server performance -->
  <property>
    <name>io.file.buffer.size</name>
    <value>4096</value>
  </property>
  <!-- Enable the trash mechanism so deleted data can be recovered from the trash; unit: minutes -->
  <property>
    <name>fs.trash.interval</name>
    <value>10080</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.groups</name>
    <value>*</value>
  </property>
</configuration>

vim etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>0.0.0.0:9870</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>0.0.0.0:9868</value>
  </property>
  <!-- Data storage locations; separate multiple directories with commas
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/opt/module/hadoop/data/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/opt/module/hadoop/data/dfs/data</value>
  </property>
  -->
  <!-- Directories for metadata edit logs and checkpoint logs
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>/opt/module/hadoop/data/hadoop/edits</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.dir</name>
    <value>/opt/module/hadoop/data/hadoop/snn/checkpoint</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.edits.dir</name>
    <value>/opt/module/hadoop/data/hadoop/snn/edits</value>
  </property>
  -->
  <!-- Temporary file directory
  <property>
    <name>dfs.tmp.dir</name>
    <value>/opt/module/hadoop/data/hadoop/tmp</value>
  </property>
  -->
  <!-- Number of replicas per block -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- HDFS file permission checking -->
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
  <!-- Block size: 128 MB, expressed in bytes -->
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
</configuration>
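Several values in the two files above are plain numbers whose units are easy to misread. As a quick sanity check, the shell arithmetic below reproduces two of them: dfs.blocksize is a byte count, and fs.trash.interval is a number of minutes. The variable names are only illustrative.

```shell
# dfs.blocksize: 128 MB expressed in bytes
block_size_bytes=$((128 * 1024 * 1024))
echo "dfs.blocksize     = $block_size_bytes"   # 134217728

# fs.trash.interval: 7 days expressed in minutes
trash_minutes=$((7 * 24 * 60))
echo "fs.trash.interval = $trash_minutes"      # 10080
```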

vim etc/hadoop/mapred-site.xml

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- Host and port of the job history server; 0.0.0.0 allows access from outside the server -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>0.0.0.0:10020</value>
  </property>
  <!-- Host and port of the job history web UI -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>0.0.0.0:19888</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>/opt/module/hadoop/etc/hadoop:/opt/module/hadoop/share/hadoop/common/lib/*:/opt/module/hadoop/share/hadoop/common/*:/opt/module/hadoop/share/hadoop/hdfs:/opt/module/hadoop/share/hadoop/hdfs/lib/*:/opt/module/hadoop/share/hadoop/hdfs/*:/opt/module/hadoop/share/hadoop/mapreduce/*:/opt/module/hadoop/share/hadoop/yarn:/opt/module/hadoop/share/hadoop/yarn/lib/*:/opt/module/hadoop/share/hadoop/yarn/*</value>
  </property>
</configuration>
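The long mapreduce.application.classpath value above is the same set of sub-directories repeated under one installation root. As a sketch, the hypothetical helper below rebuilds that string from a single root path, which may make it easier to adapt if Hadoop lives somewhere other than /opt/module/hadoop.

```shell
# Hypothetical helper: print the classpath used above for a given install root.
build_hadoop_classpath() {
    root=$1
    share="$root/share/hadoop"
    printf '%s' "$root/etc/hadoop"
    # printf repeats the format once per argument; quoting keeps the * literal.
    printf ':%s' \
        "$share/common/lib/*" "$share/common/*" \
        "$share/hdfs" "$share/hdfs/lib/*" "$share/hdfs/*" \
        "$share/mapreduce/*" \
        "$share/yarn" "$share/yarn/lib/*" "$share/yarn/*"
    printf '\n'
}

build_hadoop_classpath /opt/module/hadoop
```

On a machine where Hadoop is already on the PATH, the `hadoop classpath` command prints the classpath that Hadoop itself computes, which can serve as a cross-check.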

vim etc/hadoop/yarn-site.xml

<?xml version="1.0"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Allow access from outside the server -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>0.0.0.0</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>0.0.0.0:8088</value>
  </property>
  <property>
    <name>yarn.log.server.url</name>
    <value>http://0.0.0.0:19888/jobhistory/logs</value>
  </property>
  <property>
    <name>yarn.application.classpath</name>
    <value>/opt/module/hadoop/etc/hadoop:/opt/module/hadoop/share/hadoop/common/lib/*:/opt/module/hadoop/share/hadoop/common/*:/opt/module/hadoop/share/hadoop/hdfs:/opt/module/hadoop/share/hadoop/hdfs/lib/*:/opt/module/hadoop/share/hadoop/hdfs/*:/opt/module/hadoop/share/hadoop/mapreduce/*:/opt/module/hadoop/share/hadoop/yarn:/opt/module/hadoop/share/hadoop/yarn/lib/*:/opt/module/hadoop/share/hadoop/yarn/*</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>512</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>8192</value>
  </property>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>2.1</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
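The three memory settings above are related: container requests are bounded by yarn.scheduler.minimum-allocation-mb and yarn.scheduler.maximum-allocation-mb, and on a single-node setup a maximum larger than yarn.nodemanager.resource.memory-mb could never be satisfied. A minimal sketch of that consistency check follows; the function name is hypothetical.

```shell
# Hypothetical check: min allocation <= max allocation <= NodeManager memory (all in MB).
check_yarn_memory() {
    nm_mb=$1
    min_mb=$2
    max_mb=$3
    if [ "$min_mb" -gt "$max_mb" ]; then
        echo "minimum-allocation-mb ($min_mb) exceeds maximum-allocation-mb ($max_mb)" >&2
        return 1
    fi
    if [ "$max_mb" -gt "$nm_mb" ]; then
        echo "maximum-allocation-mb ($max_mb) exceeds nodemanager memory ($nm_mb)" >&2
        return 1
    fi
    echo "ok: up to $((nm_mb / min_mb)) minimum-size containers fit"
}

# Values taken from the yarn-site.xml above
check_yarn_memory 8192 512 8192
```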

4. Format the NameNode

bin/hdfs namenode -format

5. Start the services

./sbin/start-dfs.sh

./sbin/start-yarn.sh
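Before running the two scripts above, a quick check that a usable JDK and the Hadoop scripts are where the environment variables say they are can save a round of log reading. The function below is a sketch with a hypothetical name; it only tests that the expected executables exist.

```shell
# Hypothetical pre-flight check: confirm the executables the start scripts need.
preflight_check() {
    java_home=$1
    hadoop_home=$2
    status=0
    for f in "$java_home/bin/java" "$hadoop_home/bin/hdfs" \
             "$hadoop_home/sbin/start-dfs.sh" "$hadoop_home/sbin/start-yarn.sh"; do
        if [ ! -x "$f" ]; then
            echo "missing or not executable: $f" >&2
            status=1
        fi
    done
    return $status
}

# Usage: preflight_check "$JAVA_HOME" "$HADOOP_HOME" && echo "ready to start"
```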

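6. Check that startup succeeded

# Check that the expected processes are running

jps

19494 DataNode

66060 ResourceManager

19631 SecondaryNameNode

19390 NameNode

If startup failed, open the log directory, sort the files by modification time (newest first), and look through the most recent logs for exceptions.

Log directory: /opt/module/hadoop/logs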
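The same check can be scripted. The sketch below reads jps output and reports which of the expected daemon names are absent; the function name and the daemon list passed to it are assumptions, so adjust the list to the services you actually started.

```shell
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
missing_daemons() {
    jps_output=$(cat)
    missing=""
    for name in "$@"; do
        # Compare the second column exactly, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "not running:$missing"
        return 1
    fi
    echo "all running"
}

# Usage:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager
# List the newest log files first:
#   ls -lt /opt/module/hadoop/logs | head
```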

7. Once startup succeeds, open the web UI

http://127.0.0.1:9870/dfshealth.html#tab-overview

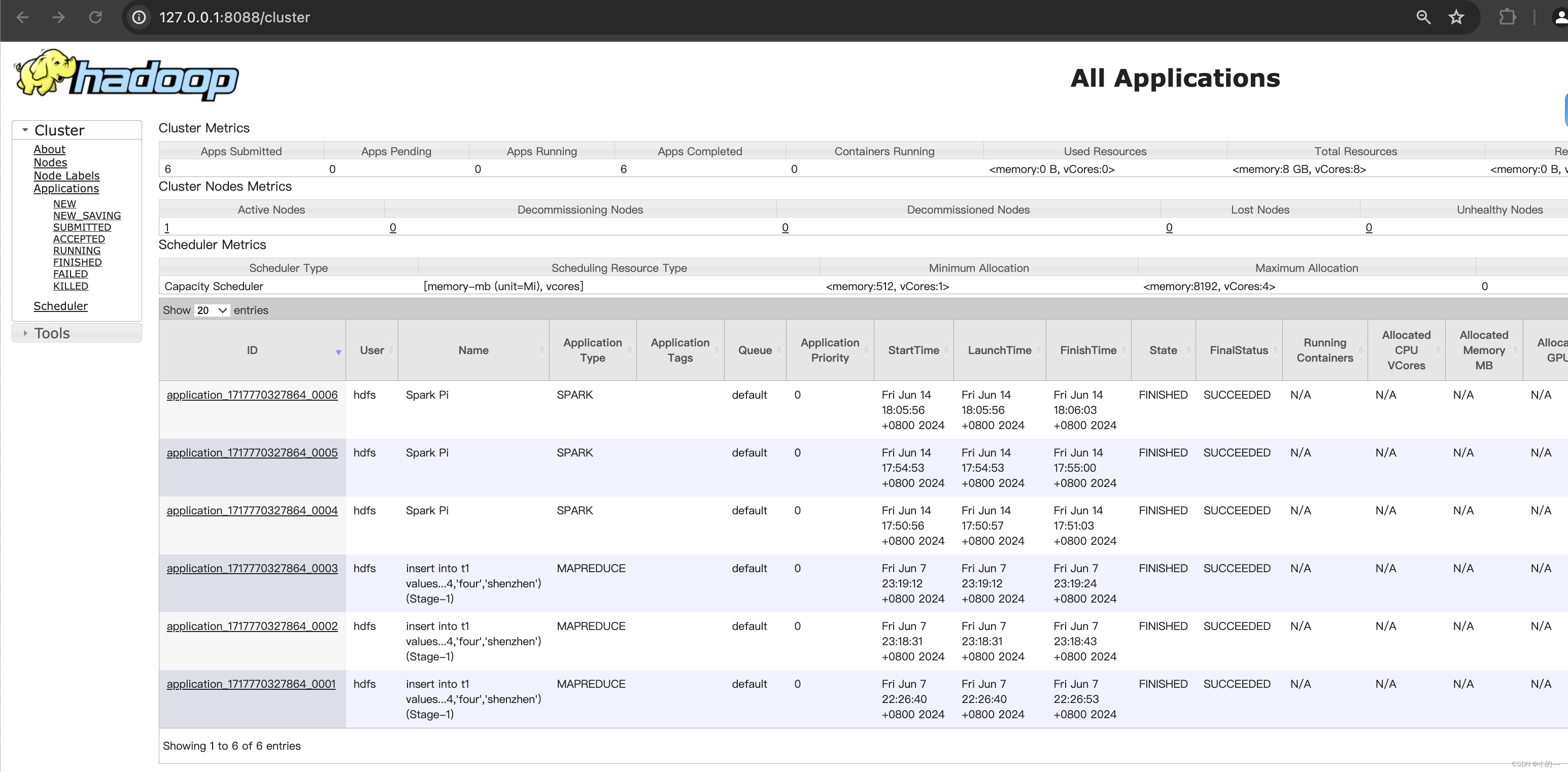

YARN console