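MapReduce | Secondary Sort

1. Requirement

Streamer data: sort by viewer count in descending order; if viewer counts are equal, sort by stream duration in descending order.

# Sample data

Columns: user ID, viewer count, stream duration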

团团 300 1000

小黑 200 2000

哦吼 400 7000

卢本伟 100 6000

八戒 250 5000

悟空 100 4000

唐僧 100 3000

# Expected result

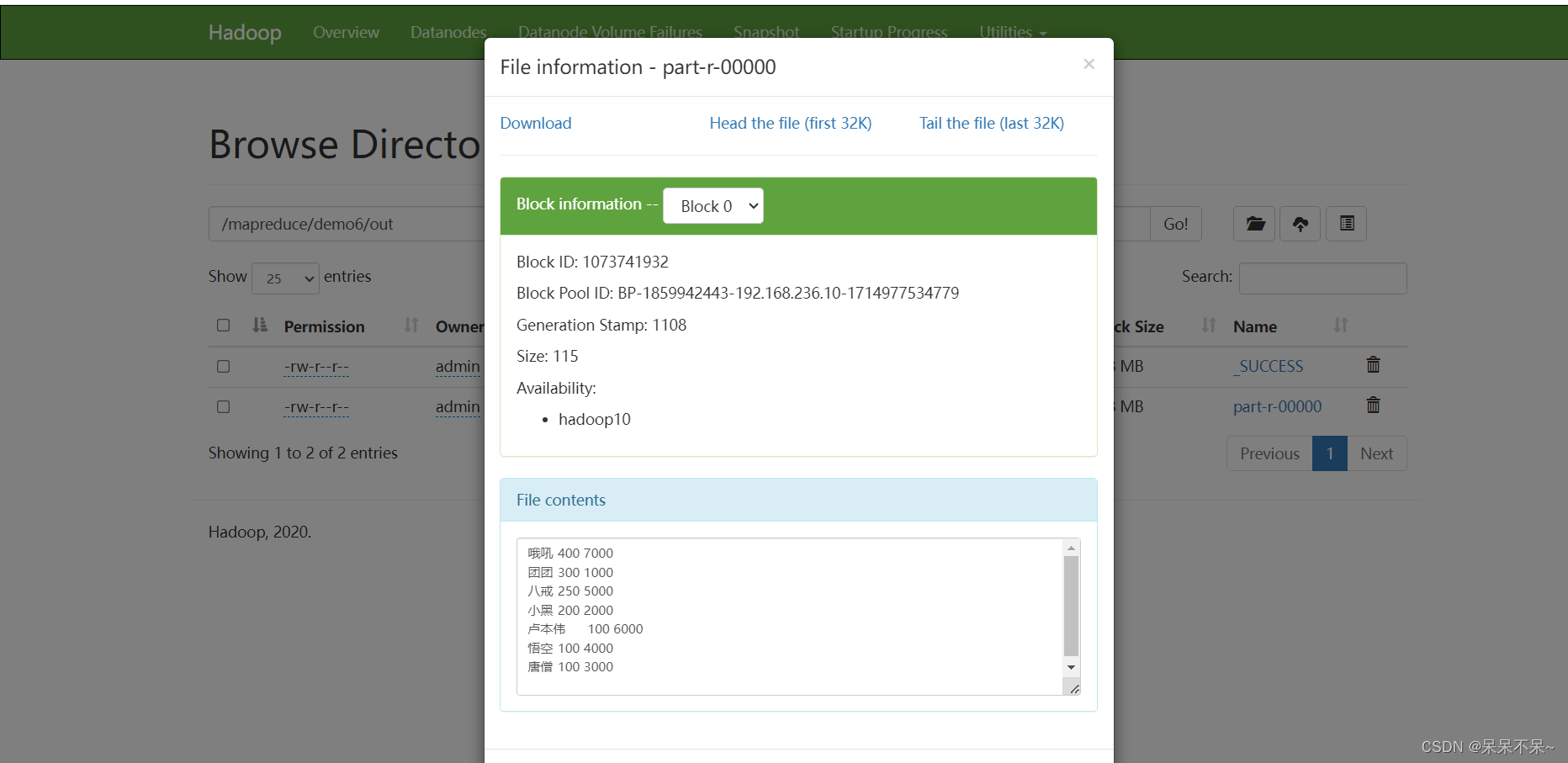

哦吼 400 7000

团团 300 1000

八戒 250 5000

小黑 200 2000

卢本伟 100 6000

悟空 100 4000

唐僧 100 3000
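
The ordering can be checked locally before involving Hadoop. The following is a minimal sketch in plain Java, not part of the job itself; `SecondarySortCheck` and `Streamer` are hypothetical names introduced only for illustration. It sorts the sample records by viewer count descending, then by stream duration descending.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Local check of the required ordering; no Hadoop involved.
// All names here are hypothetical and exist only for this sketch.
class SecondarySortCheck {

    // One input record: name, viewer count, stream duration.
    static final class Streamer {
        final String name;
        final int viewers;
        final int duration;

        Streamer(String name, int viewers, int duration) {
            this.name = name;
            this.viewers = viewers;
            this.duration = duration;
        }

        @Override
        public String toString() {
            return name + " " + viewers + " " + duration;
        }
    }

    // Primary key: viewers, descending. Secondary key: duration, descending.
    static final Comparator<Streamer> ORDER = (a, b) -> {
        int byViewers = Integer.compare(b.viewers, a.viewers);
        return byViewers != 0 ? byViewers : Integer.compare(b.duration, a.duration);
    };

    // Sorts the sample data and returns one formatted line per record.
    static List<String> sortedLines() {
        List<Streamer> data = new ArrayList<>(Arrays.asList(
                new Streamer("团团", 300, 1000),
                new Streamer("小黑", 200, 2000),
                new Streamer("哦吼", 400, 7000),
                new Streamer("卢本伟", 100, 6000),
                new Streamer("八戒", 250, 5000),
                new Streamer("悟空", 100, 4000),
                new Streamer("唐僧", 100, 3000)));
        data.sort(ORDER);
        List<String> lines = new ArrayList<>();
        for (Streamer s : data) {
            lines.add(s.toString());
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : sortedLines()) {
            System.out.println(line);
        }
    }
}
```

Running `main` prints the seven records in the same order as the expected result listed above.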

2. Upload the data to HDFS

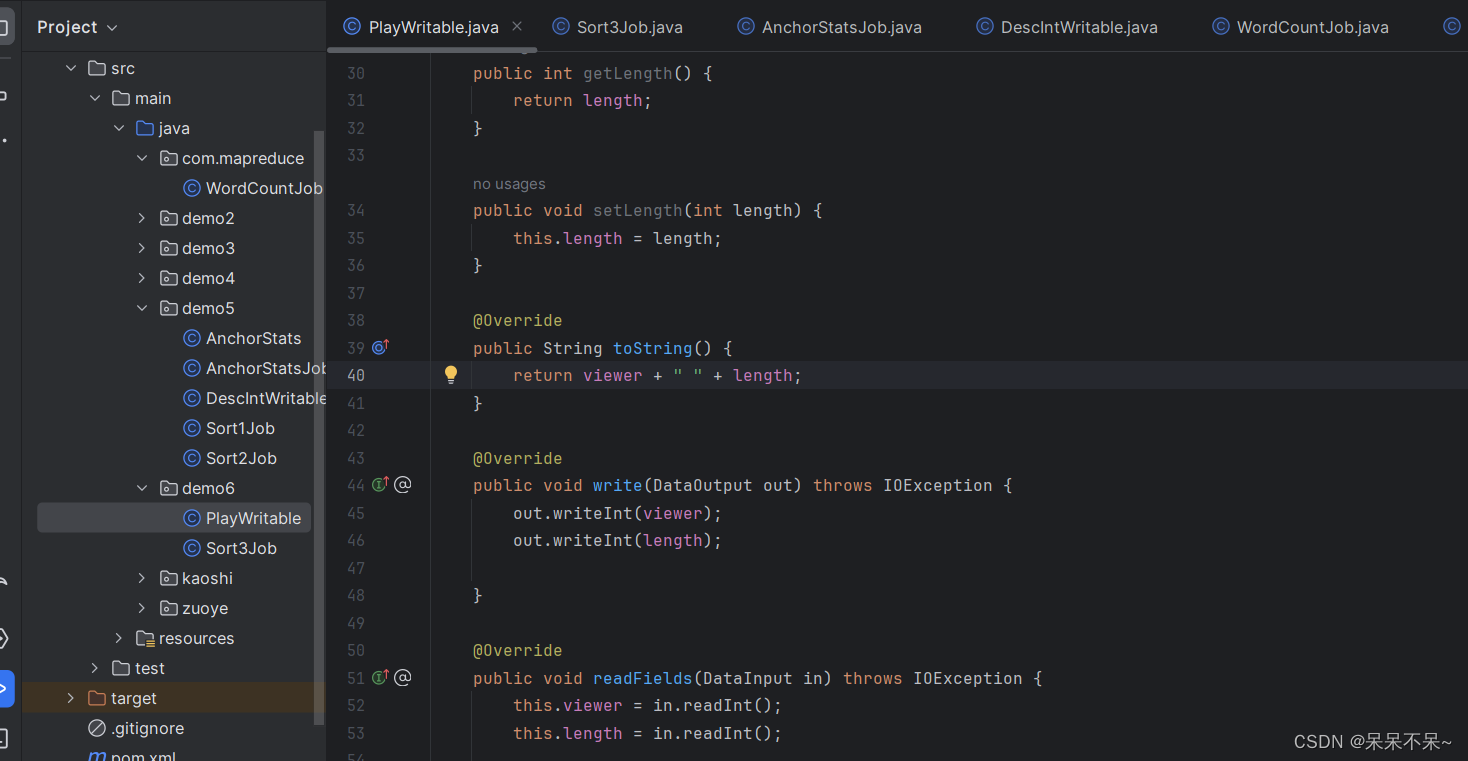

3. Code (IntelliJ IDEA)

    package demo6;

    import org.apache.hadoop.io.WritableComparable;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;

    // Composite map-output key: viewer count plus stream duration.
    public class PlayWritable implements WritableComparable<PlayWritable> {
        private int viewer;
        private int length;

        // Hadoop instantiates the key by reflection, so a no-arg constructor is required.
        public PlayWritable() {
        }

        public PlayWritable(int viewer, int length) {
            this.viewer = viewer;
            this.length = length;
        }

        public int getViewer() {
            return viewer;
        }

        public void setViewer(int viewer) {
            this.viewer = viewer;
        }

        public int getLength() {
            return length;
        }

        public void setLength(int length) {
            this.length = length;
        }

        @Override
        public String toString() {
            return viewer + " " + length;
        }

        // Serialize the fields; readFields must read them back in the same order.
        @Override
        public void write(DataOutput out) throws IOException {
            out.writeInt(viewer);
            out.writeInt(length);
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            this.viewer = in.readInt();
            this.length = in.readInt();
        }

        // Sort by viewer count descending, then by stream duration descending.
        @Override
        public int compareTo(PlayWritable o) {
            if (this.viewer != o.viewer) {
                return this.viewer > o.viewer ? -1 : 1;
            }
            return this.length > o.length ? -1 : (this.length == o.length ? 0 : 1);
        }
    }
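
The `write`/`readFields` pair only works if both methods handle the fields in the same order. Below is a minimal sketch of that round trip on a plain JDK; `PlayRecordRoundTrip` is a hypothetical stand-in that copies the two methods but does not implement `WritableComparable`, so it needs no Hadoop dependency. `DataOutput` and `DataInput` are the same `java.io` interfaces that `PlayWritable` uses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for PlayWritable with the same two int fields.
class PlayRecordRoundTrip {
    int viewer;
    int length;

    // Same field order as PlayWritable.write: viewer, then length.
    void write(DataOutput out) throws IOException {
        out.writeInt(viewer);
        out.writeInt(length);
    }

    // Reads the fields in exactly the order they were written.
    void readFields(DataInput in) throws IOException {
        viewer = in.readInt();
        length = in.readInt();
    }

    // Serializes one record to bytes and reads it back into a fresh object.
    static PlayRecordRoundTrip roundTrip(int viewer, int length) {
        PlayRecordRoundTrip original = new PlayRecordRoundTrip();
        original.viewer = viewer;
        original.length = length;
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(bytes)) {
                original.write(out);
            }
            PlayRecordRoundTrip copy = new PlayRecordRoundTrip();
            try (DataInputStream in = new DataInputStream(
                    new ByteArrayInputStream(bytes.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        PlayRecordRoundTrip copy = roundTrip(400, 7000);
        System.out.println(copy.viewer + " " + copy.length); // prints "400 7000"
    }
}
```

If `readFields` read `length` before `viewer`, the two values would come back swapped, which is why the order must match `write`.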

    package demo6;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    import java.io.IOException;

    public class Sort3Job {
        public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://hadoop10:8020");
            Job job = Job.getInstance(conf);
            job.setJarByClass(Sort3Job.class);

            job.setInputFormatClass(TextInputFormat.class);
            job.setOutputFormatClass(TextOutputFormat.class);
            TextInputFormat.addInputPath(job, new Path("/mapreduce/demo6/sort3.txt"));
            TextOutputFormat.setOutputPath(job, new Path("/mapreduce/demo6/out"));

            job.setMapperClass(Sort3Mapper.class);
            job.setReducerClass(Sort3Reducer.class);

            // Map output key and value types
            job.setMapOutputKeyClass(PlayWritable.class);
            job.setMapOutputValueClass(Text.class);

            // Reducer output key and value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(PlayWritable.class);

            boolean b = job.waitForCompletion(true);
            System.out.println(b);
        }

        // Emits (viewer, length) as the key so the shuffle sorts records with PlayWritable.compareTo.
        static class Sort3Mapper extends Mapper<LongWritable, Text, PlayWritable, Text> {
            @Override
            protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                // Split on any whitespace so both tab- and space-separated input lines parse
                // (the original split on "\t" only).
                String[] arr = value.toString().trim().split("\\s+");
                context.write(new PlayWritable(Integer.parseInt(arr[1]), Integer.parseInt(arr[2])), new Text(arr[0]));
            }
        }

        // Keys arrive already sorted; write one output line per name sharing the key.
        static class Sort3Reducer extends Reducer<PlayWritable, Text, Text, PlayWritable> {
            @Override
            protected void reduce(PlayWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                for (Text name : values) {
                    context.write(name, key);
                }
            }
        }
    }
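
The reducer loops over `values` because records whose keys compare as equal are grouped into a single `reduce` call. The following is a rough local simulation of that sort-and-group step, assuming a `TreeMap` as a stand-in for the shuffle; `ShuffleSketch`, `Key`, and the sample names `a` to `d` are hypothetical and not part of the job.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simulates map -> sort/group -> reduce in memory. Not Hadoop code.
class ShuffleSketch {

    // Hypothetical stand-in for PlayWritable: same fields, same ordering.
    static final class Key implements Comparable<Key> {
        final int viewer;
        final int length;

        Key(int viewer, int length) {
            this.viewer = viewer;
            this.length = length;
        }

        @Override
        public int compareTo(Key o) {
            int byViewer = Integer.compare(o.viewer, viewer);
            return byViewer != 0 ? byViewer : Integer.compare(o.length, length);
        }

        @Override
        public String toString() {
            return viewer + " " + length;
        }
    }

    static List<String> run(String[] lines) {
        // TreeMap orders keys with compareTo and merges keys that compare as 0.
        TreeMap<Key, List<String>> grouped = new TreeMap<>();
        for (String line : lines) { // "map" phase: emit (key, name)
            String[] arr = line.trim().split("\\s+");
            Key key = new Key(Integer.parseInt(arr[1]), Integer.parseInt(arr[2]));
            grouped.computeIfAbsent(key, k -> new ArrayList<>()).add(arr[0]);
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<Key, List<String>> e : grouped.entrySet()) { // one "reduce" per key
            for (String name : e.getValue()) {
                out.add(name + "\t" + e.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String[] lines = {"a 100 4000", "b 400 7000", "c 100 4000", "d 100 6000"};
        for (String line : run(lines)) {
            System.out.println(line);
        }
    }
}
```

Here `a` and `c` share the key `100 4000`, so they land in the same group and come out after `b` and `d`. In this sketch the names within a group keep their input order; a real job makes no such promise about the order of values under one key.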

4. View the result in HDFS
