[pytest, playwright] Generating videos and screenshots in the Allure report

Contents

1. Modify the pytest_playwright plugin

2. conftest.py configuration

3. Modify the pytest.ini file

4. Run the cases

5. Notes

1. Modify the pytest_playwright plugin

The content of pytest_playwright.py is as follows:

# Copyright (c) Microsoft Corporation.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

import shutil
import os
import sys
import warnings
from typing import Any, Callable, Dict, Generator, List, Optional

import pytest
from playwright.sync_api import (
    Browser,
    BrowserContext,
    BrowserType,
    Error,
    Page,
    Playwright,
    sync_playwright,
)
from slugify import slugify
import tempfile
import allure


artifacts_folder = tempfile.TemporaryDirectory(prefix="playwright-pytest-")


@pytest.fixture(scope="session", autouse=True)
def delete_output_dir(pytestconfig: Any) -> None:
    output_dir = pytestconfig.getoption("--output")
    if os.path.exists(output_dir):
        try:
            shutil.rmtree(output_dir)
        except FileNotFoundError:
            # When running in parallel, another thread may have already deleted the files
            pass


def pytest_generate_tests(metafunc: Any) -> None:
    if "browser_name" in metafunc.fixturenames:
        browsers = metafunc.config.option.browser or ["chromium"]
        metafunc.parametrize("browser_name", browsers, scope="session")


def pytest_configure(config: Any) -> None:
    config.addinivalue_line(
        "markers", "skip_browser(name): mark test to be skipped a specific browser"
    )
    config.addinivalue_line(
        "markers", "only_browser(name): mark test to run only on a specific browser"
    )


# Making test result information available in plugins
# https://docs.pytest.org/en/latest/example/simple.html#making-test-result-information-available-in-fixtures
@pytest.hookimpl(tryfirst=True, hookwrapper=True)
def pytest_runtest_makereport(item: Any) -> Generator[None, Any, None]:
    # execute all other hooks to obtain the report object
    outcome = yield
    rep = outcome.get_result()

    # set a report attribute for each phase of a call, which can
    # be "setup", "call", "teardown"
    setattr(item, "rep_" + rep.when, rep)


def _get_skiplist(item: Any, values: List[str], value_name: str) -> List[str]:
    skipped_values: List[str] = []
    # Allowlist
    only_marker = item.get_closest_marker(f"only_{value_name}")
    if only_marker:
        skipped_values = values
        skipped_values.remove(only_marker.args[0])

    # Denylist
    skip_marker = item.get_closest_marker(f"skip_{value_name}")
    if skip_marker:
        skipped_values.append(skip_marker.args[0])

    return skipped_values


def pytest_runtest_setup(item: Any) -> None:
    if not hasattr(item, "callspec"):
        return
    browser_name = item.callspec.params.get("browser_name")
    if not browser_name:
        return

    skip_browsers_names = _get_skiplist(
        item, ["chromium", "firefox", "webkit"], "browser"
    )

    if browser_name in skip_browsers_names:
        pytest.skip("skipped for this browser: {}".format(browser_name))


VSCODE_PYTHON_EXTENSION_ID = "ms-python.python"


@pytest.fixture(scope="session")
def browser_type_launch_args(pytestconfig: Any) -> Dict:
    launch_options = {}
    headed_option = pytestconfig.getoption("--headed")
    if headed_option:
        launch_options["headless"] = False
    elif VSCODE_PYTHON_EXTENSION_ID in sys.argv[0] and _is_debugger_attached():
        # When the VSCode debugger is attached, then launch the browser headed by default
        launch_options["headless"] = False
    browser_channel_option = pytestconfig.getoption("--browser-channel")
    if browser_channel_option:
        launch_options["channel"] = browser_channel_option
    slowmo_option = pytestconfig.getoption("--slowmo")
    if slowmo_option:
        launch_options["slow_mo"] = slowmo_option
    return launch_options


def _is_debugger_attached() -> bool:
    pydevd = sys.modules.get("pydevd")
    if not pydevd or not hasattr(pydevd, "get_global_debugger"):
        return False
    debugger = pydevd.get_global_debugger()
    if not debugger or not hasattr(debugger, "is_attached"):
        return False
    return debugger.is_attached()


def _build_artifact_test_folder(
    pytestconfig: Any, request: pytest.FixtureRequest, folder_or_file_name: str
) -> str:
    output_dir = pytestconfig.getoption("--output")
    return os.path.join(output_dir, slugify(request.node.nodeid), folder_or_file_name)


@pytest.fixture(scope="session")
def browser_context_args(
    pytestconfig: Any,
    playwright: Playwright,
    device: Optional[str],
) -> Dict:
    context_args = {}
    if device:
        context_args.update(playwright.devices[device])
    base_url = pytestconfig.getoption("--base-url")
    if base_url:
        context_args["base_url"] = base_url

    video_option = pytestconfig.getoption("--video")
    capture_video = video_option in ["on", "retain-on-failure"]
    if capture_video:
        context_args["record_video_dir"] = artifacts_folder.name

    return context_args


@pytest.fixture(scope="session")
def playwright() -> Generator[Playwright, None, None]:
    pw = sync_playwright().start()
    yield pw
    pw.stop()


@pytest.fixture(scope="session")
def browser_type(playwright: Playwright, browser_name: str) -> BrowserType:
    return getattr(playwright, browser_name)


@pytest.fixture(scope="session")
def launch_browser(
    browser_type_launch_args: Dict,
    browser_type: BrowserType,
) -> Callable[..., Browser]:
    def launch(**kwargs: Dict) -> Browser:
        launch_options = {**browser_type_launch_args, **kwargs}
        browser = browser_type.launch(**launch_options)
        return browser

    return launch


@pytest.fixture(scope="session")
def browser(launch_browser: Callable[[], Browser]) -> Generator[Browser, None, None]:
    browser = launch_browser()
    yield browser
    browser.close()
    artifacts_folder.cleanup()


@pytest.fixture()
def context(
    browser: Browser,
    browser_context_args: Dict,
    pytestconfig: Any,
    request: pytest.FixtureRequest,
) -> Generator[BrowserContext, None, None]:
    pages: List[Page] = []
    context = browser.new_context(**browser_context_args)
    context.on("page", lambda page: pages.append(page))

    tracing_option = pytestconfig.getoption("--tracing")
    capture_trace = tracing_option in ["on", "retain-on-failure"]
    if capture_trace:
        context.tracing.start(
            name=slugify(request.node.nodeid),
            screenshots=True,
            snapshots=True,
            sources=True,
        )

    yield context

    # If request.node is missing rep_call, then some error happened during execution
    # that prevented teardown, but should still be counted as a failure
    failed = request.node.rep_call.failed if hasattr(request.node, "rep_call") else True

    if capture_trace:
        retain_trace = tracing_option == "on" or (
            failed and tracing_option == "retain-on-failure"
        )
        if retain_trace:
            trace_path = _build_artifact_test_folder(pytestconfig, request, "trace.zip")
            context.tracing.stop(path=trace_path)
        else:
            context.tracing.stop()

    # Computed once here so that both the screenshot and the video attachment
    # names can use it, even when no screenshot is captured
    human_readable_status = "failed" if failed else "finished"

    screenshot_option = pytestconfig.getoption("--screenshot")
    capture_screenshot = screenshot_option == "on" or (
        failed and screenshot_option == "only-on-failure"
    )
    if capture_screenshot:
        for index, page in enumerate(pages):
            screenshot_path = _build_artifact_test_folder(
                pytestconfig, request, f"test-{human_readable_status}-{index + 1}.png"
            )
            try:
                page.screenshot(timeout=5000, path=screenshot_path)
                # Attach the screenshot to the Allure report
                allure.attach.file(
                    screenshot_path,
                    name=f"{request.node.name}-{human_readable_status}-{index + 1}",
                    attachment_type=allure.attachment_type.PNG,
                )
            except Error:
                pass

    context.close()

    video_option = pytestconfig.getoption("--video")
    preserve_video = video_option == "on" or (
        failed and video_option == "retain-on-failure"
    )
    if preserve_video:
        for index, page in enumerate(pages):
            video = page.video
            if not video:
                continue
            try:
                video_path = video.path()
                file_name = os.path.basename(video_path)
                file_path = _build_artifact_test_folder(pytestconfig, request, file_name)
                video.save_as(path=file_path)
                # Attach the video to the Allure report
                allure.attach.file(
                    file_path,
                    name=f"{request.node.name}-{human_readable_status}-{index + 1}",
                    attachment_type=allure.attachment_type.WEBM,
                )
            except Error:
                # Silent catch empty videos.
                pass


@pytest.fixture
def page(context: BrowserContext) -> Generator[Page, None, None]:
    page = context.new_page()
    yield page


@pytest.fixture(scope="session")
def is_webkit(browser_name: str) -> bool:
    return browser_name == "webkit"


@pytest.fixture(scope="session")
def is_firefox(browser_name: str) -> bool:
    return browser_name == "firefox"


@pytest.fixture(scope="session")
def is_chromium(browser_name: str) -> bool:
    return browser_name == "chromium"


@pytest.fixture(scope="session")
def browser_name(pytestconfig: Any) -> Optional[str]:
    # When using unittest.TestCase it won't use pytest_generate_tests
    # For that we still try to give the user a slightly less feature-rich experience
    browser_names = pytestconfig.getoption("--browser")
    if len(browser_names) == 0:
        return "chromium"
    if len(browser_names) == 1:
        return browser_names[0]
    warnings.warn(
        "When using unittest.TestCase specifying multiple browsers is not supported"
    )
    return browser_names[0]


@pytest.fixture(scope="session")
def browser_channel(pytestconfig: Any) -> Optional[str]:
    return pytestconfig.getoption("--browser-channel")


@pytest.fixture(scope="session")
def device(pytestconfig: Any) -> Optional[str]:
    return pytestconfig.getoption("--device")


def pytest_addoption(parser: Any) -> None:
    group = parser.getgroup("playwright", "Playwright")
    group.addoption(
        "--browser",
        action="append",
        default=[],
        help="Browser engine which should be used",
        choices=["chromium", "firefox", "webkit"],
    )
    group.addoption(
        "--headed",
        action="store_true",
        default=False,
        help="Run tests in headed mode.",
    )
    group.addoption(
        "--browser-channel",
        action="store",
        default=None,
        help="Browser channel to be used.",
    )
    group.addoption(
        "--slowmo",
        default=0,
        type=int,
        help="Run tests with slow mo",
    )
    group.addoption(
        "--device", default=None, action="store", help="Device to be emulated."
    )
    group.addoption(
        "--output",
        default="test-results",
        help="Directory for artifacts produced by tests, defaults to test-results.",
    )
    group.addoption(
        "--tracing",
        default="off",
        choices=["on", "off", "retain-on-failure"],
        help="Whether to record a trace for each test.",
    )
    group.addoption(
        "--video",
        default="off",
        choices=["on", "off", "retain-on-failure"],
        help="Whether to record video for each test.",
    )
    group.addoption(
        "--screenshot",
        default="off",
        choices=["on", "off", "only-on-failure"],
        help="Whether to automatically capture a screenshot after each test.",
    )

What was changed compared with the original plugin:

Search the file for "allure": every allure-related line (the import and the two allure.attach.file calls) is something we added.

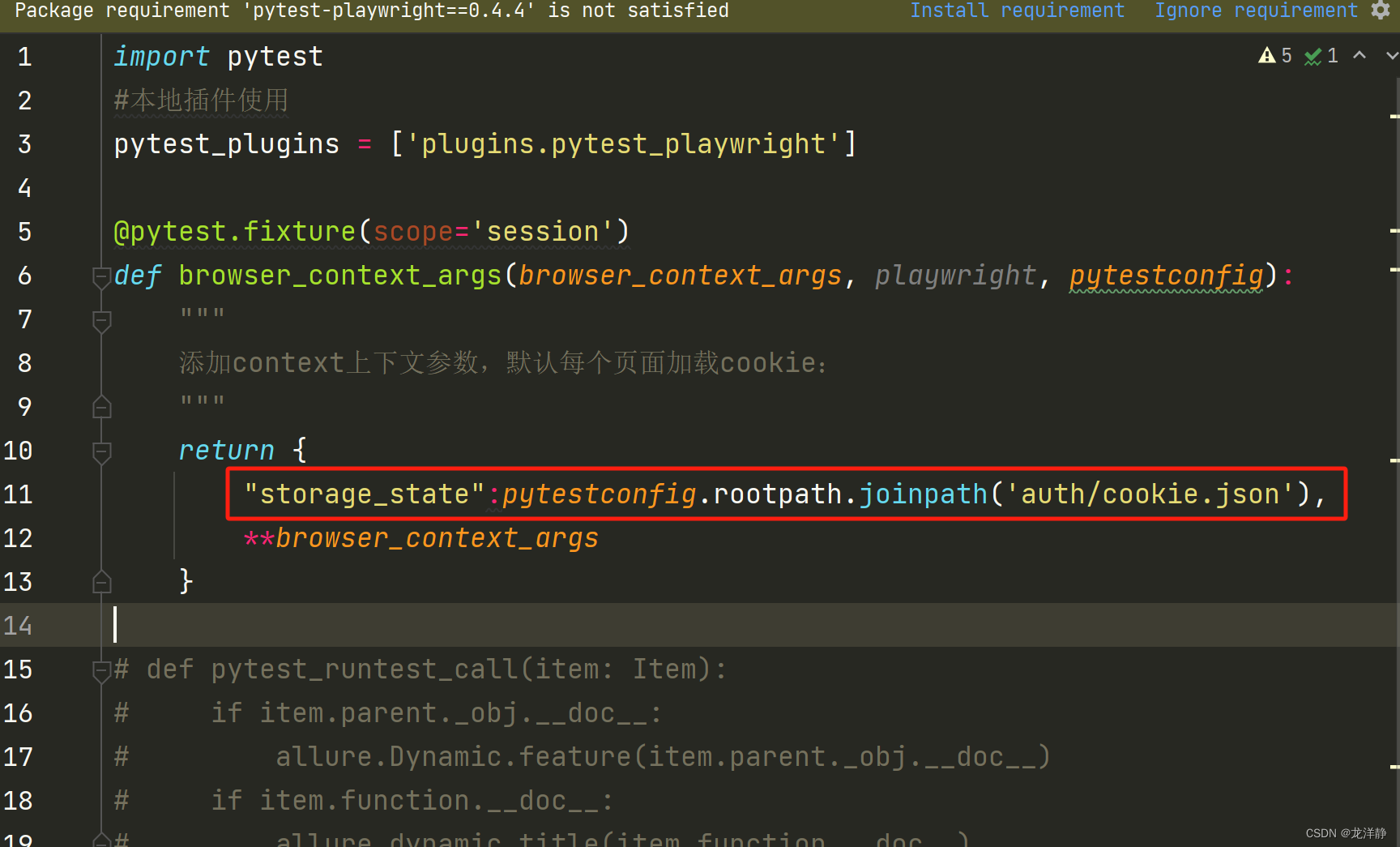
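The added attachment calls only run when the plugin decides to keep an artifact, and that decision depends on the option value and on whether the test failed. Below is a minimal sketch of that rule in isolation. The helper name `should_keep` is hypothetical and does not exist in the plugin; it only restates the boolean expressions used in the `context` fixture.

```python
def should_keep(option: str, failed: bool, on_failure_value: str) -> bool:
    """Restate the plugin's rule: keep the artifact when the option is "on",
    or when the test failed and the option equals the on-failure value."""
    return option == "on" or (failed and option == on_failure_value)


# Option values taken from the plugin's --screenshot and --video choices.
screenshot_on_failure = should_keep(
    "only-on-failure", failed=True, on_failure_value="only-on-failure"
)
video_on_success = should_keep(
    "retain-on-failure", failed=False, on_failure_value="retain-on-failure"
)
print(screenshot_on_failure, video_on_success)
```

With the failure-only values, a passing test therefore produces no screenshot or video attachment in the Allure report; only "on" attaches them for every test.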

2. conftest.py configuration

Remove the originally installed pytest_playwright plugin:

In Settings, find pytest_playwright and click the minus sign to remove it.
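To confirm that the installed plugin is really gone before relying on the modified copy, a small check like the following may help. It is only a sketch: the helper `is_installed` is hypothetical, and it assumes the plugin was installed under its PyPI distribution name `pytest-playwright`.

```python
from importlib import metadata


def is_installed(distribution_name: str) -> bool:
    """Return True if a distribution with this name is installed in the
    current Python environment."""
    try:
        metadata.version(distribution_name)
    except metadata.PackageNotFoundError:
        return False
    return True


if is_installed("pytest-playwright"):
    print("pytest-playwright is still installed; remove it first")
else:
    print("pytest-playwright is not installed")
```

Run it with the same interpreter that runs the tests, since each environment has its own set of installed packages.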

3. Modify the pytest.ini file

--tracing=retain-on-failure --screenshot=only-on-failure --video=retain-on-failure
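The post lists only the three flags, not where they go in pytest.ini. The sketch below assumes they are placed under `addopts` in the `[pytest]` section (an assumption, since the original file is not shown) and uses the standard library to show how such a file splits into the three separate options.

```python
import configparser
import shlex

# Assumed layout of pytest.ini; only the three flags come from the post.
PYTEST_INI = """\
[pytest]
addopts =
    --tracing=retain-on-failure
    --screenshot=only-on-failure
    --video=retain-on-failure
"""

parser = configparser.ConfigParser()
parser.read_string(PYTEST_INI)

# Split the multi-line value on whitespace, shell style.
addopts = shlex.split(parser["pytest"]["addopts"])
for option in addopts:
    print(option)
```

Each flag must be separated from the next by whitespace or a line break; written back to back without separators, they would be read as a single unknown option.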

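4. Run the cases

Now run the cases. When a case fails, the following directory appears:

It contains the screenshots, the video recordings, and the Playwright trace (trace.zip).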
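To check quickly what a run produced, the output directory can be scanned and grouped by file type. This is a sketch with a hypothetical helper `collect_artifacts`; it assumes the default `--output` value `test-results` from the plugin and the extensions the plugin writes (.png, .webm, .zip).

```python
import os
from collections import defaultdict
from typing import Dict, List


def collect_artifacts(output_dir: str) -> Dict[str, List[str]]:
    """Walk output_dir and group the files found by lowercase extension."""
    found: Dict[str, List[str]] = defaultdict(list)
    for folder, _subfolders, files in os.walk(output_dir):
        for file_name in sorted(files):
            extension = os.path.splitext(file_name)[1].lower()
            found[extension].append(os.path.join(folder, file_name))
    return dict(found)


if __name__ == "__main__":
    # "test-results" is the default of the plugin's --output option.
    for extension, paths in sorted(collect_artifacts("test-results").items()):
        print(extension, len(paths))
```

An empty result after a failing run usually means the three options from step 3 were not picked up.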

5. Notes

If conftest.py contains the following configuration:

make sure the JSON file actually contains data; otherwise an error is raised.
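The configuration itself is not reproduced in this post, so the following is only a generic sketch of how a conftest.py could guard against an empty JSON file before using it. The helper `load_json_or_none` and any file path passed to it are hypothetical.

```python
import json
import os
from typing import Any, Optional


def load_json_or_none(path: str) -> Optional[Any]:
    """Return the parsed JSON content, or None when the file is missing,
    empty, not valid JSON, or holds an empty value such as {} or []."""
    if not os.path.isfile(path) or os.path.getsize(path) == 0:
        return None
    try:
        with open(path, encoding="utf-8") as handle:
            data = json.load(handle)
    except json.JSONDecodeError:
        return None
    # Falsy content ({}, [], null, "") is treated as "no value" as well.
    return data or None
```

A fixture could call this helper first and only pass the file on when the result is not None, which turns the error into an explicit, easy-to-read check.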