Omni3D Object Detection

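Omni3D is a large-scale benchmark, with an accompanying model, for 3D object detection in real-world scenes. The project aims to advance the ability to recognize 3D scenes and objects from a single image. This has long been a research goal in computer vision. It is especially important for robotics, augmented reality (AR), virtual reality (VR), and other applications that must precisely localize and understand objects in a 3D environment.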

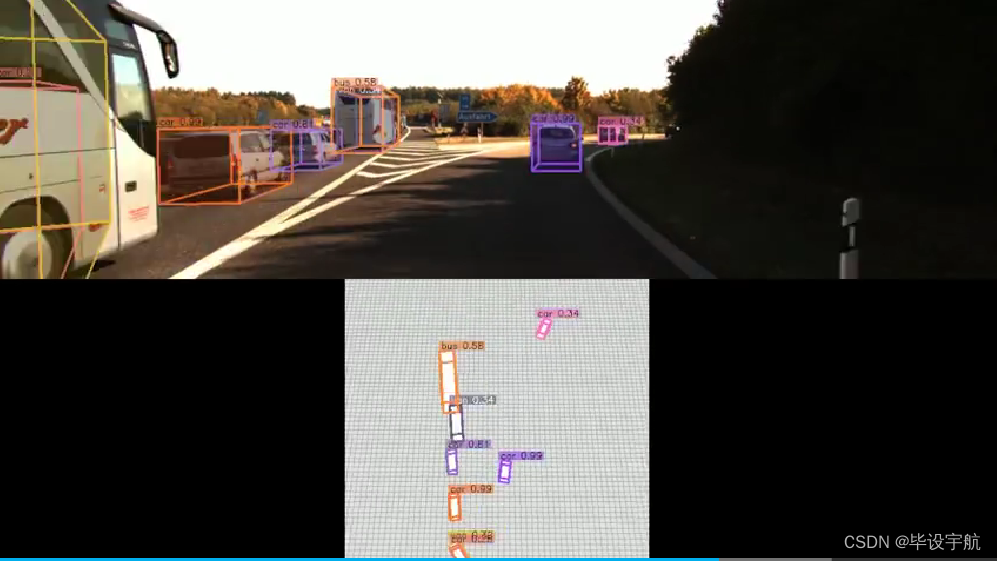

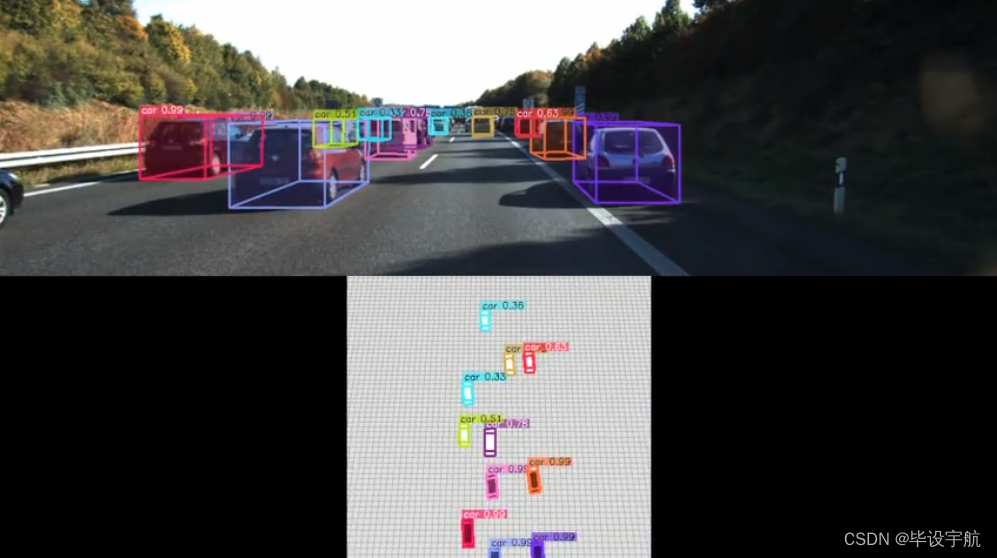

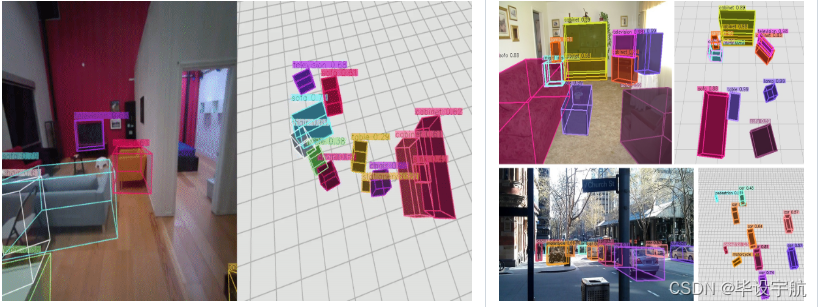

Models are divided by scene type into indoor models, outdoor models, and unified indoor-and-outdoor models.

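Key features:

- Comprehensive benchmark: Omni3D provides a broad benchmark covering a variety of environmental conditions and scene types, including but not limited to indoor, outdoor, urban, and rural scenes. This makes it possible to evaluate and compare the performance of different 3D object detection algorithms on common ground.

- Diverse data: The dataset includes rich annotations such as 3D bounding boxes, category labels, object dimensions, and poses. Researchers can therefore train and test their algorithms on real, complex scenes.

- Models and algorithms: Besides the dataset, Omni3D comes with Cube R-CNN, a deep-learning 3D object detection model trained and evaluated within the same framework. Later work also builds on the benchmark. For example, UniMODE, a model that aims to unify indoor and outdoor monocular 3D object detection, reports state-of-the-art performance on Omni3D.

- Research and applications: Omni3D offers a standardized set of tools and resources. This supports exchange within the 3D vision research community and helps researchers iterate on and optimize algorithms quickly. It also provides a practical technical reference for real-world applications.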
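A 3D bounding box annotation is often stored as a center, dimensions, and a rotation. Detection is then scored on the box's 8 corner vertices. The sketch below shows that conversion in NumPy under several assumptions. The helper name `box3d_corners`, the (w, h, l) dimension order, and the corner ordering are all hypothetical choices. They are not taken from Omni3D's annotation format.

```python
import numpy as np


def box3d_corners(center, dims, R):
    """Return the 8 corners (8x3 array) of a 3D box.

    center: (x, y, z) box center in camera coordinates
    dims:   (w, h, l) full extents along the box's local x, y, z axes
            (this order is an assumption for illustration)
    R:      3x3 rotation matrix from box-local to camera coordinates
    """
    w, h, l = dims
    # Every sign combination of the half-extents gives one corner; this
    # ordering is arbitrary and not Omni3D's vertex order.
    signs = np.array(
        [[sx, sy, sz] for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)],
        dtype=float,
    )
    local = signs * (np.array([w, h, l], dtype=float) / 2.0)
    # Rotate each local corner (row vector) into camera coordinates, then
    # translate it to the box center.
    return local @ np.asarray(R, dtype=float).T + np.asarray(center, dtype=float)


corners = box3d_corners((1.0, 2.0, 10.0), (2.0, 4.0, 6.0), np.eye(3))
print(corners.shape)  # (8, 3)
```

With the identity rotation, the corners average to the center (1, 2, 10), and their extent along each axis equals the given dimensions. A yaw rotation about the y axis swaps which axes the width and length lie along.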

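Application prospects:

- Autonomous driving: Accurately detecting and recognizing obstacles on the road is critical to the safety of self-driving cars.

- Drone navigation and monitoring: During search-and-rescue or environmental-monitoring missions, drones need to understand the 3D structure of their surroundings.

- AR/VR content creation: For a more immersive experience, AR/VR applications must perceive and understand the 3D space around the user in real time.

- Robotic manipulation and logistics: In warehouse automation or household service robots, 3D object detection can make grasping and moving items more precise and efficient.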

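In summary, Omni3D is a comprehensive 3D object detection platform. It advances the technology itself and also paves the way for practical applications across many fields.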

Installation:

# setup new environment

conda create -n cubercnn python=3.8

source activate cubercnn

# main dependencies

conda install -c fvcore -c iopath -c conda-forge -c pytorch3d -c pytorch fvcore iopath pytorch3d pytorch=1.8 torchvision=0.9.1 cudatoolkit=10.1

# OpenCV, COCO, detectron2

pip install cython opencv-python

pip install 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'

python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu101/torch1.8/index.html

# other dependencies

conda install -c conda-forge scipy seaborn

Run:

## for outdoor

python demo/demo.py \
--config-file ./configs/cubercnn_DLA34_FPN_out.yaml \
--input-folder "/home/spurs/dataset/2011_10_03/2011_10_03_drive_0047_sync/image_02/data" \
--threshold 0.25 --display \
MODEL.WEIGHTS ./cubercnn_DLA34_FPN_outdoor.pth \
OUTPUT_DIR output/demo

## for indoor

python demo/demo.py \
--config-file ./configs/cubercnn_DLA34_FPN_in.yaml \
--input-folder "/home/spurs/dataset/2011_10_03/2011_10_03_drive_0047_sync/image_02/data" \
--threshold 0.25 --display \
MODEL.WEIGHTS ./cubercnn_DLA34_FPN_indoor.pth \
OUTPUT_DIR output/demo
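The demo draws the predicted 3D boxes over the input images. Conceptually, drawing a box means projecting its 8 corners into pixel coordinates through the camera intrinsics K, using the pinhole model. The sketch below shows only that projection step. `project_points` is a hypothetical helper, not code from demo/demo.py, and the intrinsics values are made up for illustration.

```python
import numpy as np


def project_points(K, points_cam):
    """Project an Nx3 array of camera-frame points to Nx2 pixel coordinates.

    K is a 3x3 pinhole intrinsics matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]].
    """
    K = np.asarray(K, dtype=float)
    pts = np.asarray(points_cam, dtype=float).reshape(-1, 3)
    if np.any(pts[:, 2] <= 0):
        # Points at or behind the camera plane cannot be projected meaningfully.
        raise ValueError("all points must have positive depth (z > 0)")
    uvw = pts @ K.T                  # homogeneous pixel coordinates, one row per point
    return uvw[:, :2] / uvw[:, 2:3]  # perspective divide by depth


# Hypothetical intrinsics: 500 px focal length, principal point at the
# center of a 640x480 image.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
uv = project_points(K, [[0.0, 0.0, 5.0], [1.0, 0.0, 5.0]])
# The point on the optical axis lands on the principal point (320, 240);
# the point 1 unit to the right at depth 5 lands at (420, 240).
```

Applied to the 8 corners of a box, the projected points can be joined along the box's 12 edges to draw its wireframe.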

For reference, the Omni3D authors used cuda/10.1 and cudnn/v7.6.5.32 for their experiments. They expect slight variations in these versions to also be compatible.

Example: to run the Cube R-CNN demo on a folder of input images using the authors' DLA34 model trained on the full Omni3D dataset:

# Download example COCO images

sh demo/download_demo_COCO_images.sh

# Run an example demo

python demo/demo.py \
--config-file cubercnn://omni3d/cubercnn_DLA34_FPN.yaml \
--input-folder "datasets/coco_examples" \
--threshold 0.25 --display \
MODEL.WEIGHTS cubercnn://omni3d/cubercnn_DLA34_FPN.pth \
OUTPUT_DIR output/demo

Training: the authors train on 48 GPUs using submitit, which wraps the following training command:

python tools/train_net.py \
--config-file configs/Base_Omni3D.yaml \
OUTPUT_DIR output/omni3d_example_run

Note that the provided configs specify hyperparameters tuned for 48 GPUs. You can train on 1 GPU, though there is no guarantee of reaching the final performance, as follows:

python tools/train_net.py \
--config-file configs/Base_Omni3D.yaml --num-gpus 1 \
SOLVER.IMS_PER_BATCH 4 SOLVER.BASE_LR 0.0025 \
SOLVER.MAX_ITER 5568000 SOLVER.STEPS "(3340800, 4454400)" \
SOLVER.WARMUP_ITERS 174000 TEST.EVAL_PERIOD 1392000 \
VIS_PERIOD 111360 OUTPUT_DIR output/omni3d_example_run

Evaluation: the evaluator relies on the detectron2 MetadataCatalog to keep track of category names and contiguous IDs. It is therefore important to set these variables correctly.
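Contiguous IDs are positions 0..N-1 assigned to the categories a model predicts, whereas dataset category IDs may have gaps. The sketch below builds the two values the MetadataCatalog expects without needing detectron2. It assumes the categories are available as a dict from dataset ID to name. The helper name and the example IDs are hypothetical. Sorting by dataset ID is just one deterministic choice; the order used must match the class indices the model was trained with.

```python
from typing import Dict, List, Tuple


def build_contiguous_mapping(
    dataset_categories: Dict[int, str],
) -> Tuple[List[str], Dict[int, int]]:
    """Return (thing_classes, thing_dataset_id_to_contiguous_id).

    dataset_categories maps dataset category IDs (possibly with gaps)
    to category names.
    """
    # Sort by dataset ID so the contiguous order is deterministic.
    ordered_ids = sorted(dataset_categories)
    # thing_classes[i] is the name of the category with contiguous ID i.
    thing_classes = [dataset_categories[cat_id] for cat_id in ordered_ids]
    # Map each dataset ID to its position in that order.
    id_to_contiguous = {cat_id: idx for idx, cat_id in enumerate(ordered_ids)}
    return thing_classes, id_to_contiguous


# Hypothetical category IDs, chosen only to show a mapping with gaps.
classes, mapping = build_contiguous_mapping({0: "pedestrian", 5: "car", 9: "chair"})
print(classes)  # ['pedestrian', 'car', 'chair']
print(mapping)  # {0: 0, 5: 1, 9: 2}
```

With detectron2 installed, `classes` and `mapping` would be assigned to `thing_classes` and `thing_dataset_id_to_contiguous_id` on `MetadataCatalog.get('omni3d_model')`.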

from detectron2.data import MetadataCatalog

# (list[str]) the category names in their contiguous order
MetadataCatalog.get('omni3d_model').thing_classes = ...

# (dict[int, int]) the mapping from Omni3D category IDs to the contiguous order
MetadataCatalog.get('omni3d_model').thing_dataset_id_to_contiguous_id = ...

In summary, the evaluator expects a list of image-level predictions in the following format:

{
    "image_id": <int> the unique image identifier from Omni3D,
    "K": <np.array> 3x3 intrinsics matrix for the image,
    "width": <int> image width,
    "height": <int> image height,
    "instances": [
        {
            "image_id": <int> the unique image identifier from Omni3D,
            "category_id": <int> the contiguous category prediction ID, which can be mapped from Omni3D's category IDs using MetadataCatalog.get('omni3d_model').thing_dataset_id_to_contiguous_id,
            "bbox": [float] 2D box as [x1, y1, x2, y2] used for IoU2D,
            "score": <float> the confidence score for the object,
            "depth": <float> the depth of the center of the object,
            "bbox3D": list[list[float]] 8x3 corner vertices used for IoU3D,
        },
        ...
    ]
}

Please use the following BibTeX entry if you use Omni3D and/or Cube R-CNN in your research or refer to the authors' results.

@inproceedings{brazil2023omni3d,
  author = {Garrick Brazil and Abhinav Kumar and Julian Straub and Nikhila Ravi and Justin Johnson and Georgia Gkioxari},
  title = {{Omni3D}: A Large Benchmark and Model for {3D} Object Detection in the Wild},
  booktitle = {CVPR},
  address = {Vancouver, Canada},
  month = {June},
  year = {2023},
  organization = {IEEE},
}