【Machine Learning】Suitable Learning Rate in Machine Learning

1. Cases of different learning rates:

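In the gradient descent algorithm, the parameters are updated as follows:

$$
w := w - \alpha \frac{\partial}{\partial w} J(w, b), \qquad b := b - \alpha \frac{\partial}{\partial b} J(w, b)
$$

Here $\alpha$ is the learning rate we need to choose. How do we determine it, and what happens if it is too large or too small? We will analyze this through the following graph: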

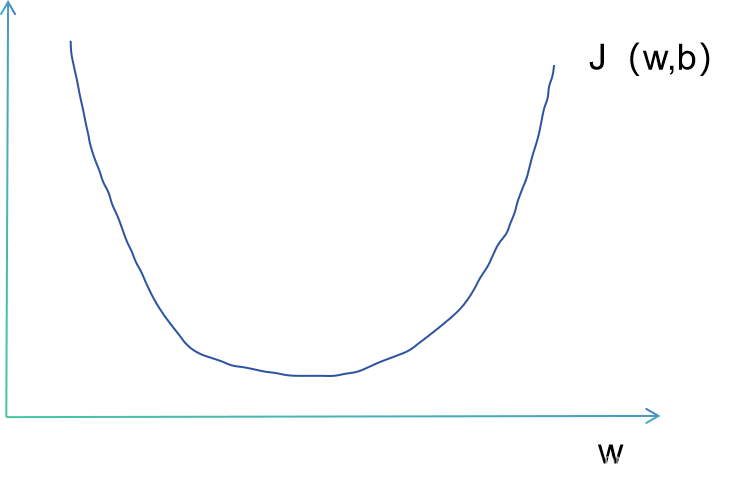

As before, we can simplify the problem by fixing b in J(w, b) to 0, so that the cost depends only on w and can be drawn as a two-dimensional graph:

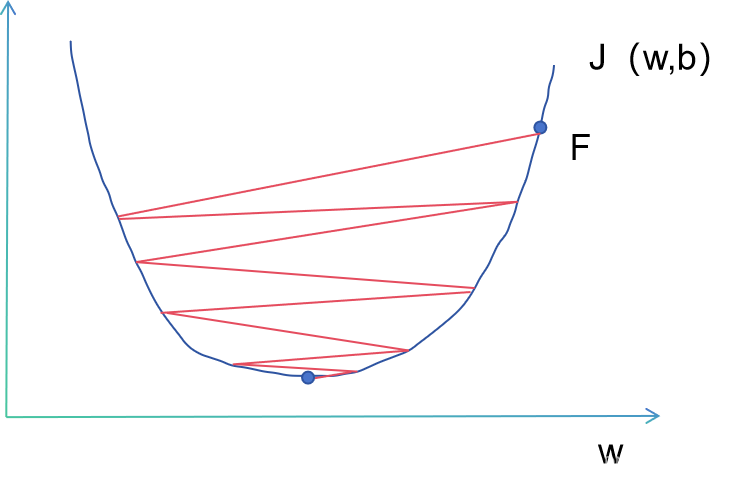
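To make the simplified setting concrete, here is a minimal sketch of gradient descent on a cost that depends only on w, with b fixed to 0. The data points, the squared-error form of the cost, and the names `cost`, `gradient`, and `gradient_descent` are assumptions chosen for illustration; they are not taken from the figures.

```python
# Hypothetical toy data that follows y = 2x exactly (not from the article).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def cost(w):
    """Squared-error cost J(w) for the model y = w * x, with b fixed to 0."""
    m = len(xs)
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

def gradient(w):
    """Derivative dJ/dw of the cost above."""
    m = len(xs)
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / m

def gradient_descent(w0, alpha, steps):
    """Repeat the update w := w - alpha * dJ/dw and record every value of w."""
    w = w0
    history = [w]
    for _ in range(steps):
        w = w - alpha * gradient(w)
        history.append(w)
    return history

history = gradient_descent(w0=0.0, alpha=0.05, steps=50)
print(f"final w = {history[-1]:.4f}, cost = {cost(history[-1]):.6f}")
```

With this data the cost is smallest at w = 2, so the recorded values of w should move toward 2. Changing `alpha` in the call is a quick way to reproduce the cases discussed in this section.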

Let us first look at the case of a small learning rate (starting from point F):

In this case each step is small, so gradient descent moves steadily toward the minimum point and eventually converges, although it may need many iterations to get there.

Next, consider a larger learning rate:

When the learning rate is large but still within a certain limit, gradient descent also converges. The update formula explains why: the learning rate itself stays fixed, but each time the point moves down to a place where the slope is smaller, the step (learning rate times slope) becomes smaller too, so the point keeps descending in ever smaller steps until it converges. Does this always hold? Consider the following situation:

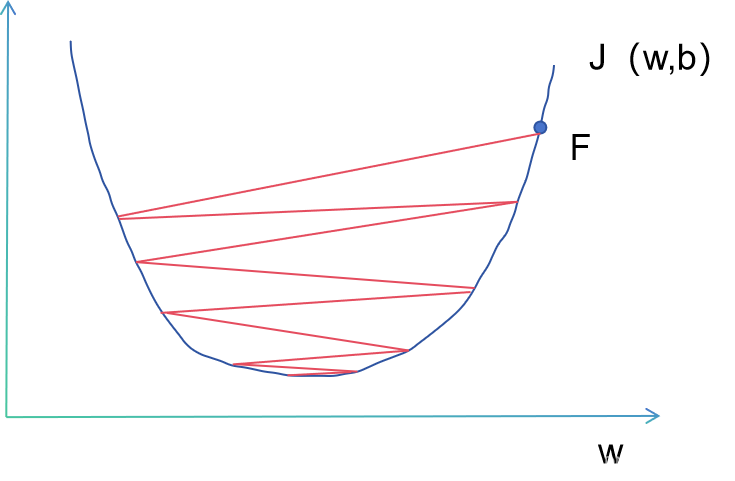

The difference from the previous case is that a step may now jump right past the optimal point, so the iterates bounce from one side of the minimum to the other. If training stops after a limited number of iterations, the value reached may not be the optimum.
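A small worked example may help here. Assume a toy cost that is not taken from the figures, $J(w) = w^2$, whose minimum is at $w = 0$, and write $\alpha$ for the learning rate. The derivative is $2w$, so one gradient descent step is:

$$
w_{t+1} = w_t - \alpha \cdot 2 w_t = (1 - 2\alpha)\, w_t
$$

Under this assumption the behaviour depends only on the factor $1 - 2\alpha$:

- for $0 < \alpha < 0.5$ the factor lies between 0 and 1, so $w$ approaches the minimum from one side;
- for $0.5 < \alpha < 1$ the factor lies between $-1$ and 0, so $w$ jumps over the minimum at every step but still gets closer to it;
- for $\alpha > 1$ the factor is smaller than $-1$, so every jump lands farther from the minimum than the previous one.

The exact thresholds are specific to this toy cost; for other cost functions the limits depend on how steep the curve is.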

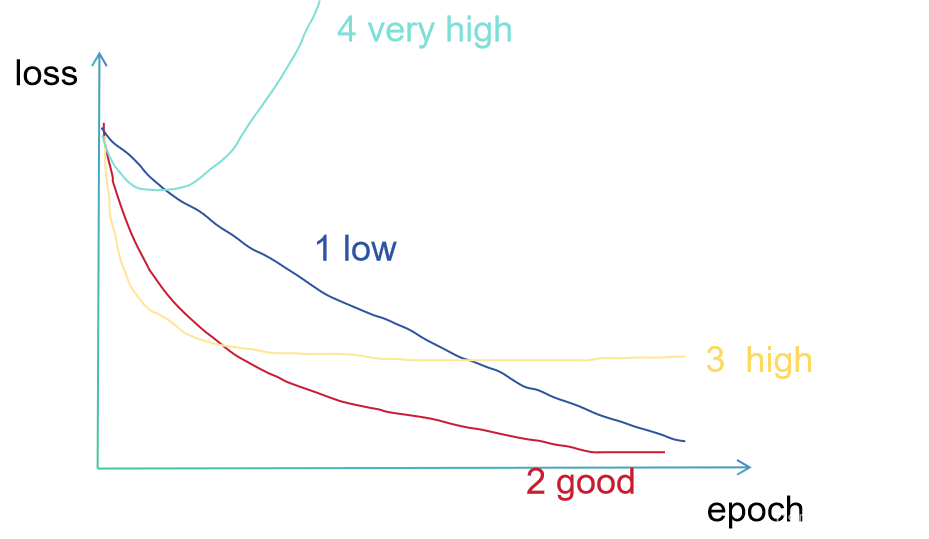

Finally, there is the case of divergence, where the learning rate is so large that each step lands farther from the minimum than the last and the cost keeps growing:

In summary, the possible cases look roughly like this:

In the picture, loss is an indicator that measures the difference between the model's predictions and the actual labels, and an epoch is one complete pass over the training data, which may include multiple parameter updates.
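The loss-versus-epoch behaviour can be reproduced with a small sketch. The toy loss $(w - 3)^2$, the starting point, and the four learning rates below are all assumptions made for illustration, and each epoch here consists of a single full-batch update.

```python
def loss(w):
    """Hypothetical toy loss (w - 3)^2, smallest at w = 3."""
    return (w - 3.0) ** 2

def grad(w):
    """Derivative of the toy loss."""
    return 2.0 * (w - 3.0)

def loss_curve(alpha, epochs=20, w0=0.0):
    """Return the loss before training and after each epoch.

    In this sketch one epoch is exactly one full-batch parameter update.
    """
    w = w0
    curve = [loss(w)]
    for _ in range(epochs):
        w -= alpha * grad(w)
        curve.append(loss(w))
    return curve

# Small, moderate, large-but-convergent, and too-large learning rates.
for alpha in (0.01, 0.4, 0.9, 1.1):
    curve = loss_curve(alpha)
    trend = "decreasing" if curve[-1] < curve[0] else "increasing"
    print(f"alpha={alpha:<5} first loss={curve[0]:.3f} last loss={curve[-1]:.3e} ({trend})")
```

Plotting each `curve` against the epoch index should give curves of the same general shapes as the cases described above, though the specific learning rates at which each shape appears depend on the loss function.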

2. How to choose a suitable learning rate:

In algorithm design, we can adjust the learning rate during training and decide how much to adjust it by observing how well the model fits. After each iteration, evaluate the error function with the current parameter estimates. If the error has decreased compared with the previous iteration, the learning rate can be increased. If the error has increased, restore the parameter values from the previous iteration and reduce the learning rate to 50% of its previous value. This is a method of adaptive learning rate adjustment. Deep learning frameworks such as Caffe and TensorFlow provide simple and direct ways to change the learning rate dynamically.
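The adaptive rule described above can be sketched as follows. The text does not say how much to increase the learning rate after a successful step, so the growth factor of 1.05 is an assumption, as are the toy cost and the function names; only the 50% reduction comes from the description.

```python
def cost(w):
    """Hypothetical toy cost (w - 3)^2, smallest at w = 3."""
    return (w - 3.0) ** 2

def grad(w):
    """Derivative of the toy cost."""
    return 2.0 * (w - 3.0)

def adaptive_gradient_descent(w0, alpha0, steps, grow=1.05, shrink=0.5):
    """Gradient descent that adjusts its learning rate after every iteration.

    grow   -- assumed factor applied when the error decreased
    shrink -- 0.5, i.e. the learning rate is cut to 50% when the error increased
    """
    w, alpha = w0, alpha0
    prev_cost = cost(w)
    for _ in range(steps):
        candidate = w - alpha * grad(w)
        new_cost = cost(candidate)
        if new_cost < prev_cost:
            # Error went down: accept the step and try a larger rate next time.
            w, prev_cost = candidate, new_cost
            alpha *= grow
        else:
            # Error did not go down: keep the previous w and halve the rate.
            alpha *= shrink
    return w, alpha

# Start with a learning rate that is deliberately too large for this cost.
w, alpha = adaptive_gradient_descent(w0=0.0, alpha0=1.6, steps=40)
print(f"w = {w:.6f}, final learning rate = {alpha:.4g}")
```

The starting rate of 1.6 would diverge on this cost if it were kept fixed; with the adjustment rule the first step is rejected, the rate is halved, and the later steps move toward w = 3.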

Commonly used learning rates are 0.00001, 0.0001, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3, and 10.
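One simple way to use such a list is to run a short training for each candidate and compare the final loss. The sketch below does this on an assumed toy loss $(w - 3)^2$; the loss, the step count, and the function name `final_loss` are illustrative choices, and the best value on a real problem will generally be different.

```python
import math

# Candidate learning rates from the list above.
CANDIDATES = [0.00001, 0.0001, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, 3, 10]

def final_loss(alpha, steps=100, w0=0.0):
    """Run gradient descent on the toy loss (w - 3)^2 and return the last loss.

    Returns infinity if the parameter overflows, which signals divergence.
    """
    w = w0
    for _ in range(steps):
        w -= alpha * 2.0 * (w - 3.0)
        if not math.isfinite(w):
            return math.inf
    diff = w - 3.0
    return diff * diff

results = {alpha: final_loss(alpha) for alpha in CANDIDATES}
best = min(results, key=results.get)

for alpha, value in results.items():
    print(f"alpha={alpha:<8} final loss={value:.3e}")
print("lowest final loss at alpha =", best)
```

On this toy loss the smallest rates barely move in 100 steps and the largest ones make the loss grow, which is why it is common to try values spread over several orders of magnitude.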