Installing llama-cpp-python with GPU Support on Windows 11 (non-WSL)

A plain install supports only the CPU. Getting GPU support takes a bit more work.

1. Install the NVIDIA CUDA Toolkit (available at https://developer.nvidia.com/cuda-downloads).

2. Install the following:

- git

- python

- cmake

- Visual Studio Community (make sure you install it with the following workloads):

  - Desktop development with C++

  - development

  - Linux embedded development with C++
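Before moving on, it can help to confirm that the command-line tools from steps 1 and 2 are reachable from the prompt. The following is a minimal sketch, not part of the original procedure: the executable names in `REQUIRED_TOOLS` are assumptions that match a default install with each tool added to PATH, and `find_missing` is a hypothetical helper. Visual Studio is not checked because it does not expose a single executable on PATH.

```python
import shutil

# Executable names are assumptions: they match a default install where each
# tool has been added to PATH. nvcc ships with the CUDA Toolkit from step 1.
REQUIRED_TOOLS = ["git", "python", "cmake", "nvcc"]


def find_missing(tools, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_TOOLS)
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools were found on PATH.")
```

If a tool is reported missing right after installation, opening a new Command Prompt usually picks up the updated PATH.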

3. Clone git repository recursively to get llama.cpp submodule as well

git clone --recursive -j8 https://github.com/abetlen/llama-cpp-python.git
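A clone made without `--recursive` leaves the llama.cpp submodule empty, and the build in step 6 then fails. The sketch below checks for that case. It assumes the submodule lives at `vendor/llama.cpp` inside the repository, which is an assumption about the repository layout rather than something stated above, and `llama_cpp_sources_present` is a hypothetical helper name.

```python
from pathlib import Path


def llama_cpp_sources_present(repo_dir):
    """Return True if the llama.cpp submodule directory exists and is not empty.

    The vendor/llama.cpp location is an assumption about the repository layout.
    """
    submodule = Path(repo_dir) / "vendor" / "llama.cpp"
    return submodule.is_dir() and any(submodule.iterdir())


if __name__ == "__main__":
    if llama_cpp_sources_present("llama-cpp-python"):
        print("llama.cpp submodule looks populated.")
    else:
        print("Submodule is empty; try: git submodule update --init --recursive")
```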

4. Open a Command Prompt and set the following environment variables:

set FORCE_CMAKE=1

set CMAKE_ARGS=-DLLAMA_CUBLAS=ON

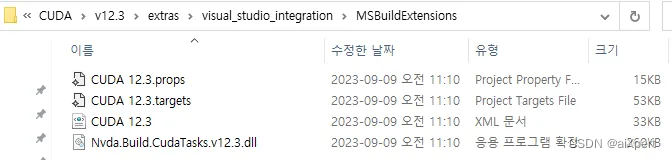

5. Copy the files from CUDA to Visual Studio:

Copy all four files in C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.3\extras\visual_studio_integration\MSBuildExtensions.

Then paste them into:

C:\Program Files\Microsoft Visual Studio\2022\Community\MSBuild\Microsoft\VC\v170\BuildCustomizations
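The copy in step 5 can also be scripted. This is a rough sketch using the two paths given above; the CUDA version (`v12.3`) and the Visual Studio edition (`2022\Community`) will differ on other machines, and `copy_build_extensions` is a hypothetical helper name.

```python
import shutil
from pathlib import Path

# Paths taken from step 5; adjust the CUDA version and the Visual Studio
# edition to match the local install.
CUDA_EXT = Path(r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.3"
                r"\extras\visual_studio_integration\MSBuildExtensions")
VS_CUSTOM = Path(r"C:\Program Files\Microsoft Visual Studio\2022\Community"
                 r"\MSBuild\Microsoft\VC\v170\BuildCustomizations")


def copy_build_extensions(src, dst):
    """Copy every file in `src` into `dst` and return the copied file names."""
    copied = []
    for item in sorted(Path(src).iterdir()):
        if item.is_file():
            shutil.copy2(item, Path(dst) / item.name)
            copied.append(item.name)
    return copied


if __name__ == "__main__":
    # Writing under Program Files usually needs an administrator prompt.
    print(copy_build_extensions(CUDA_EXT, VS_CUSTOM))
```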

6. Compiling and installing

cd llama-cpp-python

python -m pip install -e .
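Variables set with `set` last only for the current Command Prompt session, so a build started from another window will silently fall back to CPU only. As an alternative, steps 4 and 6 can be driven from one Python script that passes the variables straight to pip. This is a sketch under the assumption that the repository was cloned into the current directory; `build_env` and `install_editable` are hypothetical helper names.

```python
import os
import subprocess
import sys


def build_env(base=None):
    """Return a copy of the environment with the two build variables set."""
    env = dict(os.environ if base is None else base)
    env["FORCE_CMAKE"] = "1"
    env["CMAKE_ARGS"] = "-DLLAMA_CUBLAS=ON"
    return env


def install_editable(repo_dir):
    """Run `python -m pip install -e .` inside `repo_dir` with the build variables."""
    subprocess.run(
        [sys.executable, "-m", "pip", "install", "-e", "."],
        cwd=repo_dir,
        env=build_env(),
        check=True,  # raise CalledProcessError if the build fails
    )


if __name__ == "__main__":
    install_editable("llama-cpp-python")
```

Using `sys.executable` keeps the install in the same Python interpreter that runs the script, which matters when several Python versions are installed.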

7. Check the result:

>>> from llama_cpp import Llama

>>> llm = Llama(model_path="llama-2-7b-chat.Q8_0.gguf",n_gpu_layers=-1)

Output:

ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4090, compute capability 6.1, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 291 tensors from llama-2-7b-chat.Q8_0.gguf (version GGUF V2)

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = LLaMA v2

llama_model_loader: - kv 2: llama.context_length u32 = 4096

llama_model_loader: - kv 3: llama.embedding_length u32 = 4096

llama_model_loader: - kv 4: llama.block_count u32 = 32

llama_model_loader: - kv 5: llama.feed_forward_length u32 = 11008

llama_model_loader: - kv 6: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 7: llama.attention.head_count u32 = 32

llama_model_loader: - kv 8: llama.attention.head_count_kv u32 = 32

llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 10: general.file_type u32 = 7

llama_model_loader: - kv 11: tokenizer.ggml.model str = llama

llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,32000] = ["<unk>", "<s>", "</s>", "<0x00>", "<...

llama_model_loader: - kv 13: tokenizer.ggml.scores arr[f32,32000] = [0.000000, 0.000000, 0.000000, 0.0000...

llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,32000] = [2, 3, 3, 6, 6, 6, 6, 6, 6, 6, 6, 6, ...

llama_model_loader: - kv 15: tokenizer.ggml.bos_token_id u32 = 1

llama_model_loader: - kv 16: tokenizer.ggml.eos_token_id u32 = 2

llama_model_loader: - kv 17: tokenizer.ggml.unknown_token_id u32 = 0

llama_model_loader: - kv 18: general.quantization_version u32 = 2

llama_model_loader: - type f32: 65 tensors

llama_model_loader: - type q8_0: 226 tensors

llm_load_vocab: special tokens definition check successful ( 259/32000 ).

llm_load_print_meta: format = GGUF V2

llm_load_print_meta: arch = llama

llm_load_print_meta: vocab type = SPM

llm_load_print_meta: n_vocab = 32000

llm_load_print_meta: n_merges = 0

llm_load_print_meta: n_ctx_train = 4096

llm_load_print_meta: n_embd = 4096

llm_load_print_meta: n_head = 32

llm_load_print_meta: n_head_kv = 32

llm_load_print_meta: n_layer = 32

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 4096

llm_load_print_meta: n_embd_v_gqa = 4096

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 11008

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 4096

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 7B

llm_load_print_meta: model ftype = Q8_0

llm_load_print_meta: model params = 6.74 B

llm_load_print_meta: model size = 6.67 GiB (8.50 BPW)

llm_load_print_meta: general.name = LLaMA v2

llm_load_print_meta: BOS token = 1 '<s>'

llm_load_print_meta: EOS token = 2 '</s>'

llm_load_print_meta: UNK token = 0 '<unk>'

llm_load_print_meta: LF token = 13 '<0x0A>'

The GPU finally shows up in the list, so it is ready to use.
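The line to look for in the output is the one reporting CUDA devices. If the log has been saved to a text file or pasted into a string, a small check like the following can confirm it. This is a sketch only: the pattern is based on the "found 1 CUDA devices" line shown above, other llama.cpp versions may word it differently, and `cuda_device_count` is a hypothetical helper name.

```python
import re

# Pattern based on the "found 1 CUDA devices" line in the log above; other
# llama.cpp versions may word this line differently.
_DEVICE_LINE = re.compile(r"found (\d+) CUDA devices")


def cuda_device_count(log_text):
    """Return the device count reported in `log_text`, or 0 if no such line exists."""
    match = _DEVICE_LINE.search(log_text)
    return int(match.group(1)) if match else 0


if __name__ == "__main__":
    sample = "ggml_init_cublas: found 1 CUDA devices:"
    print(cuda_device_count(sample))
```

A count of 0 suggests the package was built without CUDA support, in which case steps 4 through 6 are worth repeating.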