365天深度学习训练营 第P6周:好莱坞明星识别

- 🍨 本文为🔗365天深度学习训练营 内部限免文章(版权归 K同学啊 所有)

- 🍦 参考文章地址: 🔗第P6周:好莱坞明星识别 | 365天深度学习训练营

- 🍖 作者:K同学啊 | 接辅导、程序定制

文章目录

- 我的环境:

- 一、前期工作

- 1. 设置 GPU

- 2. 导入数据

- 3. 划分数据集

- 二、调用vgg-16模型

- 三、训练模型

- 1. 设置超参数

- 2. 编写训练函数

- 3. 编写测试函数

- 4. 正式训练

- 四、结果可视化

- 1.Loss 与 Accuracy 图

我的环境:

- 语言环境:Python 3.6.8

- 编译器:jupyter notebook

- 深度学习环境:

- torch==0.13.1、cuda==11.3

- torchvision==1.12.1、cuda==11.3

一、前期工作

1. 设置 GPU

import torch

import torch.nn as nn

import torchvision

import torchvision.transforms as transforms

from torchvision import transforms, datasetsif __name__=='__main__':''' 设置GPU '''device = torch.device("cuda" if torch.cuda.is_available() else "cpu")print("Using {} device\n".format(device))

Using cuda device

2. 导入数据

import os, PIL, pathlib

data_dir = 'D:/jupyter notebook/DL-100-days/datasets/48-data/'

data_dir = pathlib.Path(data_dir)

data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[5] for path in data_paths]

print(classeNames)

['Angelina Jolie','Brad Pitt','Denzel Washington','Hugh Jackman','Jennifer Lawrence','Johnny Depp','Kate Winslet','Leonardo DiCaprio','Megan Fox','Natalie Portman','Nicole Kidman','Robert Downey Jr','Sandra Bullock','Scarlett Johansson','Tom Cruise','Tom Hanks','Will Smith']

train_transforms = transforms.Compose([transforms.Resize([224,224]),# resize输入图片transforms.ToTensor(), # 将PIL Image或numpy.ndarray转换成tensortransforms.Normalize(mean = [0.485, 0.456, 0.406],std = [0.229,0.224,0.225]) # 从数据集中随机抽样计算得到

])total_data = datasets.ImageFolder(data_dir,transform=train_transforms)

total_data

Dataset ImageFolderNumber of datapoints: 1800Root location: hlwStandardTransform

Transform: Compose(Resize(size=[224, 224], interpolation=PIL.Image.BILINEAR)ToTensor()Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]))

3. 划分数据集

train_size = int(0.8*len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data,[train_size,test_size])

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

二、调用vgg-16模型

from torchvision.models import vgg16model = vgg16(pretrained = True).to(device)

for param in model.parameters():param.requires_grad = Falsemodel.classifier._modules['6'] = nn.Linear(4096,len(classNames))model.to(device)

# 查看要训练的层

params_to_update = model.parameters()

# params_to_update = []

for name,param in model.named_parameters():if param.requires_grad == True:

# params_to_update.append(param)print("\t",name)

三、训练模型

1. 设置超参数

# 优化器设置

optimizer = torch.optim.Adam(params_to_update, lr=1e-4)#要训练什么参数/

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.92)#学习率每5个epoch衰减成原来的1/10

loss_fn = nn.CrossEntropyLoss()

2. 编写训练函数

# 训练循环

def train(dataloader, model, loss_fn, optimizer):size = len(dataloader.dataset) # 训练集的大小,一共900张图片num_batches = len(dataloader) # 批次数目,29(900/32)train_loss, train_acc = 0, 0 # 初始化训练损失和正确率for X, y in dataloader: # 获取图片及其标签X, y = X.to(device), y.to(device)# 计算预测误差pred = model(X) # 网络输出loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失# 反向传播optimizer.zero_grad() # grad属性归零loss.backward() # 反向传播optimizer.step() # 每一步自动更新# 记录acc与losstrain_acc += (pred.argmax(1) == y).type(torch.float).sum().item()train_loss += loss.item()train_acc /= sizetrain_loss /= num_batchesreturn train_acc, train_loss

3. 编写测试函数

def test (dataloader, model, loss_fn):size = len(dataloader.dataset) # 测试集的大小,一共10000张图片num_batches = len(dataloader) # 批次数目,8(255/32=8,向上取整)test_loss, test_acc = 0, 0# 当不进行训练时,停止梯度更新,节省计算内存消耗with torch.no_grad():for imgs, target in dataloader:imgs, target = imgs.to(device), target.to(device)# 计算losstarget_pred = model(imgs)loss = loss_fn(target_pred, target)test_loss += loss.item()test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()test_acc /= sizetest_loss /= num_batchesreturn test_acc, test_loss

4. 正式训练

epochs = 20

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_acc = 0

filename='checkpoint.pth'for epoch in range(epochs):model.train()epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)scheduler.step()#学习率衰减model.eval()epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)# 保存最优模型if epoch_test_acc > best_acc:best_acc = epoch_train_accstate = {'state_dict': model.state_dict(),#字典里key就是各层的名字,值就是训练好的权重'best_acc': best_acc,'optimizer' : optimizer.state_dict(),}torch.save(state, filename)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}')print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss, epoch_test_acc*100, epoch_test_loss))

print('Done')

print('best_acc:',best_acc)

Epoch: 1, Train_acc:12.2%, Train_loss:2.701, Test_acc:13.9%,Test_loss:2.544

Epoch: 2, Train_acc:20.8%, Train_loss:2.386, Test_acc:20.6%,Test_loss:2.377

Epoch: 3, Train_acc:26.1%, Train_loss:2.228, Test_acc:22.5%,Test_loss:2.274

…

Epoch:19, Train_acc:51.6%, Train_loss:1.528, Test_acc:35.8%,Test_loss:1.864

Epoch:20, Train_acc:53.9%, Train_loss:1.499, Test_acc:35.3%,Test_loss:1.852

Done

best_acc: 0.37430555555555556

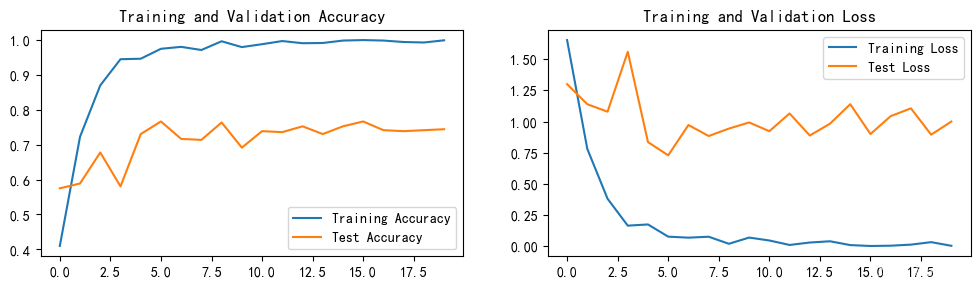

四、结果可视化

1.Loss 与 Accuracy 图

import matplotlib.pyplot as plt

#隐藏警告

import warnings

warnings.filterwarnings("ignore") #忽略警告信息

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号

plt.rcParams['figure.dpi'] = 100 #分辨率epochs_range = range(epochs)plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()