langchain 加载各种格式文件读取方法

参考:https://python.langchain.com/docs/modules/data_connection/document_loaders/

https://github.com/thomas-yanxin/LangChain-ChatGLM-Webui/blob/master/app.py

代码

可以支持pdf、md、doc、txt等格式

from langchain.document_loaders import UnstructuredFileLoader

import re

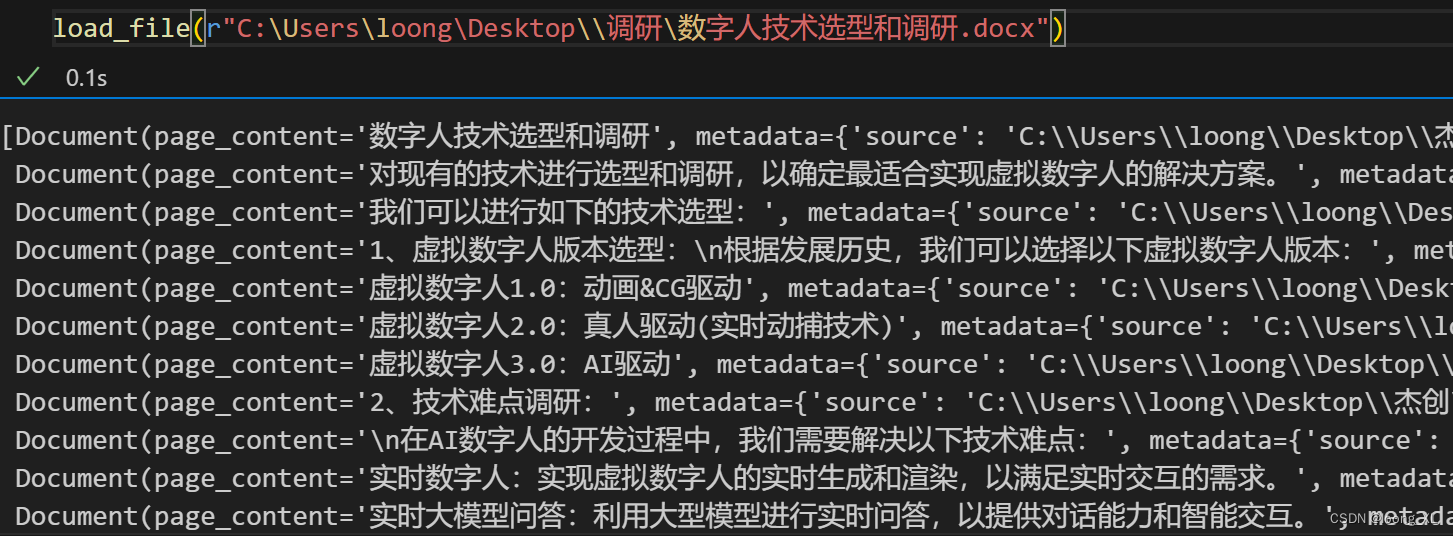

from typing import Listfrom langchain.text_splitter import CharacterTextSplitterclass ChineseTextSplitter(CharacterTextSplitter):def __init__(self, pdf: bool = False, **kwargs):super().__init__(**kwargs)self.pdf = pdfdef split_text(self, text: str) -> List[str]:if self.pdf:text = re.sub(r"\n{3,}", "\n", text)text = re.sub('\s', ' ', text)text = text.replace("\n\n", "")sent_sep_pattern = re.compile('([﹒﹔﹖﹗.。!?]["’”」』]{0,2}|(?=["‘“「『]{1,2}|$))') sent_list = []for ele in sent_sep_pattern.split(text):if sent_sep_pattern.match(ele) and sent_list:sent_list[-1] += eleelif ele:sent_list.append(ele)return sent_listdef load_file(filepath):if filepath.lower().endswith(".md"):loader = UnstructuredFileLoader(filepath, mode="elements")docs = loader.load()elif filepath.lower().endswith(".pdf"):loader = UnstructuredFileLoader(filepath)textsplitter = ChineseTextSplitter(pdf=True)docs = loader.load_and_split(textsplitter)else:loader = UnstructuredFileLoader(filepath, mode="elements")textsplitter = ChineseTextSplitter(pdf=False)docs = loader.load_and_split(text_splitter=textsplitter)return docs

继续把上面切分数据保存到FAISS

参考:https://blog.csdn.net/weixin_42357472/article/details/133778618?spm=1001.2014.3001.5502

from langchain.vectorstores import FAISS

from langchain.embeddings import HuggingFaceEmbeddingsembedding = HuggingFaceEmbeddings(model_name=r"C:\Users\loong\.cache\huggingface\hub\models--shibing624--text2vec-base-chinese\snapshots\2642****1812248") ##HuggingFace离线模型,用加载HuggingFaceEmbeddings##保存向量

docs = load_file(r"C:\Users\loong\D***资料.txt")

vector_store = FAISS.from_documents(docs, embedding)

vector_store.save_local('faiss_index')## query 向量库搜索测试query = "公司地址"

vector_store.similarity_search(query)