Notes on the Large Language Model Survey Paper, 6-15

- Keywords

- Background for LLMs

- Technical Evolution of GPT-series Models

- Research of OpenAI on LLMs can be roughly divided into the following stages

- Early Explorations

- Capacity Leap

- Capacity Enhancement

- The Milestones of Language Models

- Resources

- Pre-training

- Data Collection

- Data Preprocessing

- Quality Filtering

- De-duplication

Keywords

GPT:Generative Pre-Training

Background for LLMs

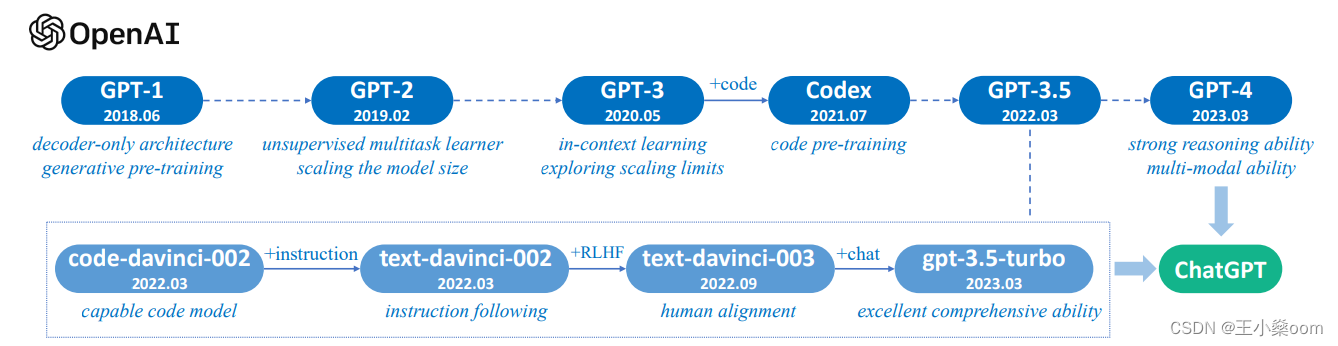

Technical Evolution of GPT-series Models

Two key points behind GPT's success are (I) training decoder-only Transformer language models that can accurately predict the next word and (II) scaling up the size of language models.

Research of OpenAI on LLMs can be roughly divided into the following stages

Early Explorations

Capacity Leap

ICL (in-context learning)

Capacity Enhancement

1. Training on code data

Codex: a GPT model fine-tuned on a large corpus of GitHub code.

2. Alignment with human preference

Reinforcement learning from human feedback (RLHF) algorithm.

Note that the wording "instruction tuning" has seldom been used in OpenAI's papers and documentation; it is substituted by supervised fine-tuning on human demonstrations (i.e., the first step of the RLHF algorithm).

The Milestones of Language Models

ChatGPT (based on GPT-3.5 and GPT-4) and GPT-4 (multimodal)

Resources

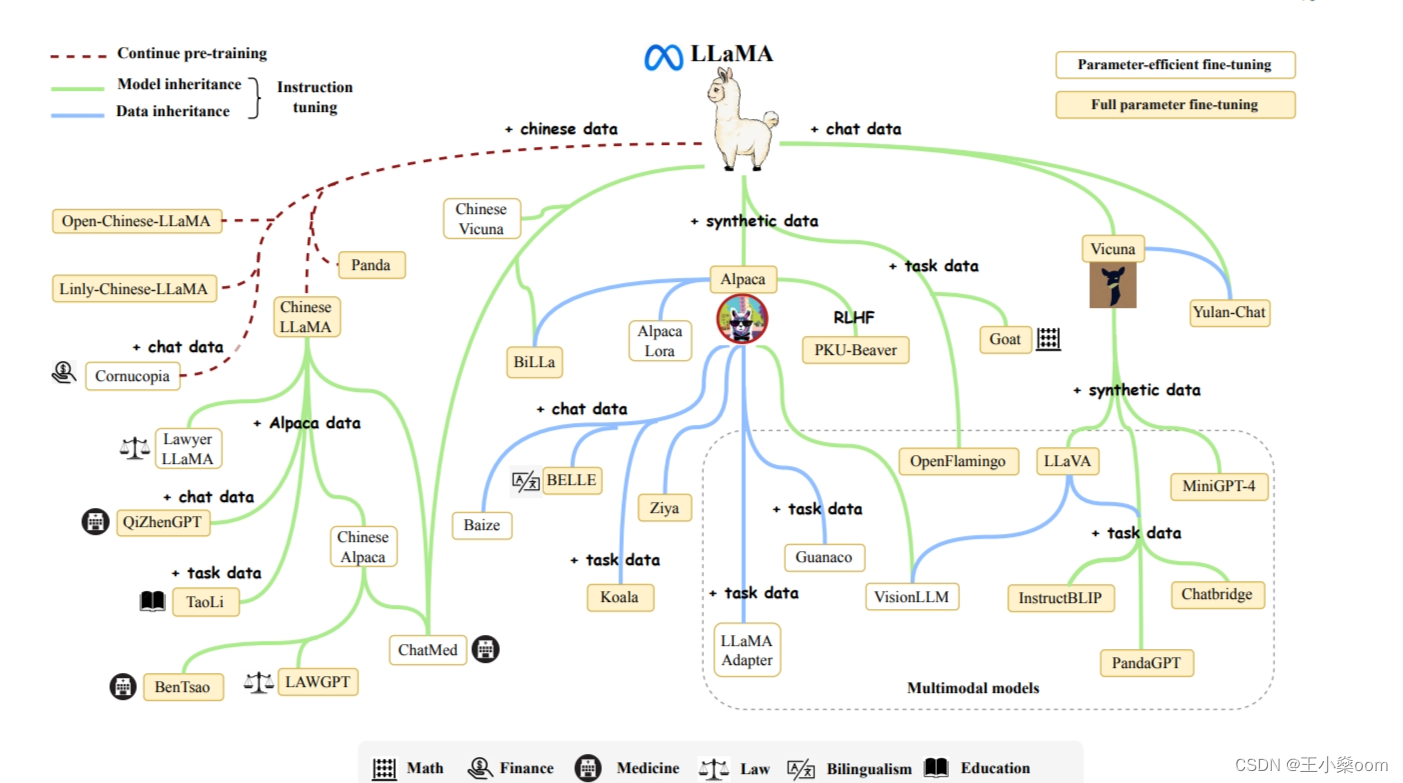

Stanford Alpaca is the first open instruction-following model fine-tuned based on LLaMA (7B).

Alpaca LoRA (a reproduction of Stanford Alpaca using LoRA)

Models, data, and libraries

Pre-training

Data Collection

General Text Data: webpages, books, and conversational text

Specialized Text Data: multilingual text, scientific text, code

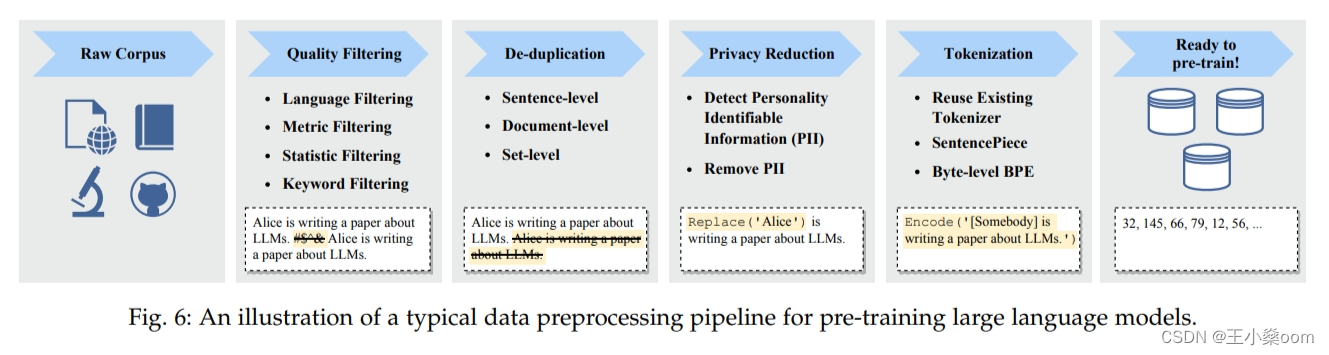

Data Preprocessing

Quality Filtering

- Classifier-based approach: trains a selection classifier on high-quality texts and uses it to identify and filter out low-quality data.

- Heuristic-based approach: eliminates low-quality texts through a set of well-designed rules: language-based filtering, metric-based filtering, statistic-based filtering, and keyword-based filtering.
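
A minimal sketch of rule-based filtering, to make the heuristic approach concrete. The thresholds, the keyword list, and the function names (`symbol_ratio`, `keep_document`) are assumptions chosen only for illustration; they do not come from the survey. Only statistic-based and keyword-based rules are shown, since language-based and metric-based (e.g. perplexity) filtering would need an external model.

```python
# Hypothetical thresholds and keyword list, chosen only for illustration.
MIN_WORDS = 5
MAX_SYMBOL_RATIO = 0.3
BLOCKED_KEYWORDS = {"click here", "lorem ipsum"}


def symbol_ratio(text: str) -> float:
    """Fraction of non-whitespace characters that are neither letters nor digits."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 1.0
    return sum(not c.isalnum() for c in chars) / len(chars)


def keep_document(text: str) -> bool:
    """Return True only if the text passes every rule."""
    if len(text.split()) < MIN_WORDS:
        # Statistic-based rule: the text is too short.
        return False
    if symbol_ratio(text) > MAX_SYMBOL_RATIO:
        # Statistic-based rule: the text is mostly punctuation or symbols.
        return False
    lowered = text.lower()
    if any(keyword in lowered for keyword in BLOCKED_KEYWORDS):
        # Keyword-based rule: the text contains a blocked phrase.
        return False
    return True


docs = [
    "Large language models are trained on text collected from the web.",
    "$$$ !!! ### @@@ %%% ^^^",
    "Click here to win a free prize right now, today only.",
    "Too short.",
]
kept = [d for d in docs if keep_document(d)]
```

In this toy run only the first document survives: the second fails the symbol-ratio rule, the third hits a blocked keyword, and the fourth is too short.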

De-duplication

Existing work has found that duplicate data in a corpus would reduce the diversity of language models, which may cause the training process to become unstable and thus affect the model performance.
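
A rough sketch of document-level de-duplication, assuming exact duplicates are caught by hashing normalized text and near duplicates by word n-gram overlap (Jaccard similarity). The threshold of 0.8, the trigram size, and all function names are assumptions for illustration, not the procedure of any particular LLM.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def word_ngrams(text: str, n: int = 3) -> set:
    """Set of word n-grams of the normalized text."""
    words = normalize(text).split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets; 0.0 if either set is empty."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def deduplicate(docs, threshold=0.8):
    """Keep the first copy of each document; drop exact and near duplicates."""
    seen_hashes = set()
    kept, kept_ngrams = [], []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate after normalization
        grams = word_ngrams(doc)
        if any(jaccard(grams, g) >= threshold for g in kept_ngrams):
            continue  # near duplicate of a document already kept
        seen_hashes.add(digest)
        kept.append(doc)
        kept_ngrams.append(grams)
    return kept


docs = [
    "The quick brown fox jumps over the lazy dog near the river bank",
    "the quick  brown fox jumps over the lazy dog near the river bank",
    "The quick brown fox jumps over the lazy dog near the river bank today",
    "Tokenization splits raw text into tokens",
]
unique_docs = deduplicate(docs)
```

Here the second document is an exact duplicate after normalization and the third shares 11 of 12 trigrams with the first, so only the first and fourth are kept. The pairwise comparison is quadratic in the number of kept documents, so this form only suits small illustrations.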

- Privacy Redaction: remove personally identifiable information (PII).

- Tokenization: segments raw text into sequences of individual tokens, which are subsequently used as the inputs of LLMs. Methods: Byte-Pair Encoding (BPE) tokenization; WordPiece tokenization.
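
To illustrate how BPE builds its vocabulary, here is a minimal sketch of the merge-learning loop: start from characters and repeatedly merge the most frequent adjacent pair. The toy word frequencies and the function names are assumptions for illustration; real tokenizers also handle word boundaries, bytes, and special tokens, which are left out here.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with the single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules from a word-frequency table."""
    # Each word starts as a tuple of single characters.
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        # Ties are broken by first occurrence (dict insertion order).
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


# Toy corpus (hypothetical frequencies).
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=3)
```

With this toy corpus the first two merges join `e` + `s` and then `es` + `t`, so the frequent suffix `est` becomes a single token.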