Shallow copy and deep copy in Python, NumPy, and PyTorch

1. Shallow copy and deep copy in Python

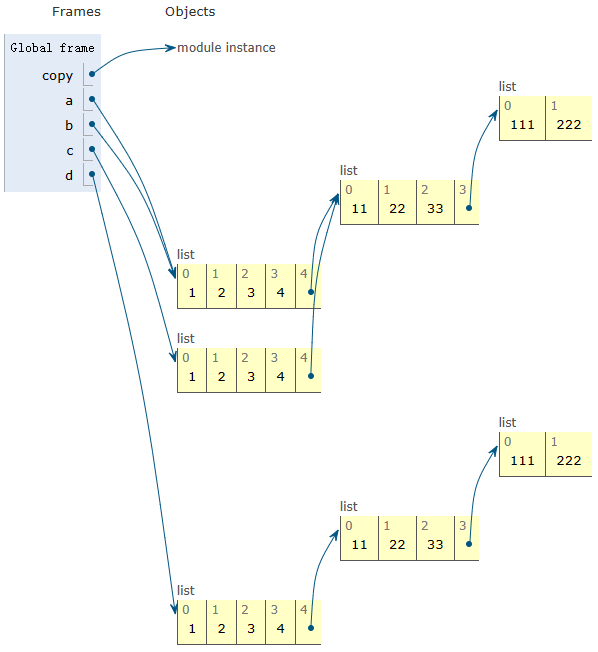

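import copy

a = [1, 2, 3, 4, [11, 22, 33, [111, 222]]]

b = a  # plain assignment: b is a second name for the same list

c = a.copy()  # shallow copy: new outer list, nested lists still shared

d = copy.deepcopy(a)  # deep copy: every nested list is copied too

print('before modify\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')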

| Variable (before modify) | Value |
| --- | --- |
| a | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| b = a | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| c = a.copy() | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| d = copy.deepcopy(a) | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |

Note: the diagrams come from Python Tutor code visualizer: Visualize code in Python, JavaScript, C, C++, and Java.

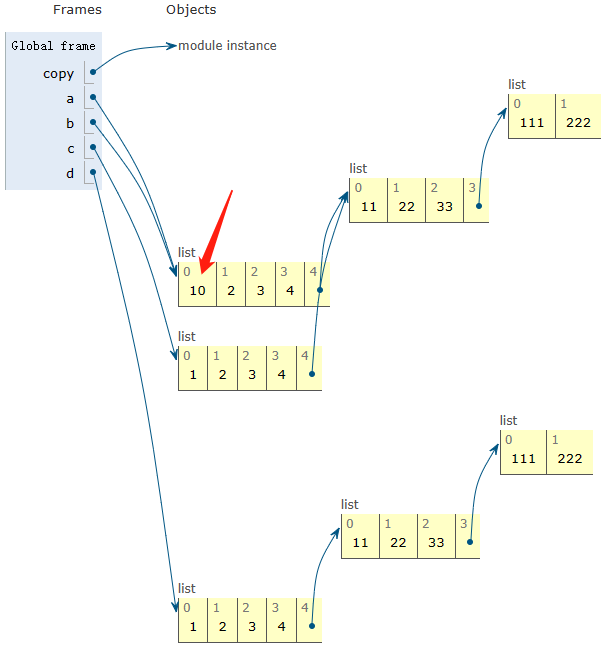

a[0] = 10

print('after a[0] = 10\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

| Variable (after a[0] = 10) | Value |
| --- | --- |
| a | [10, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| b = a | [10, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| c = a.copy() | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |
| d = copy.deepcopy(a) | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |

Only b follows the change, because b and a are the same object; c and d each have their own outer list.

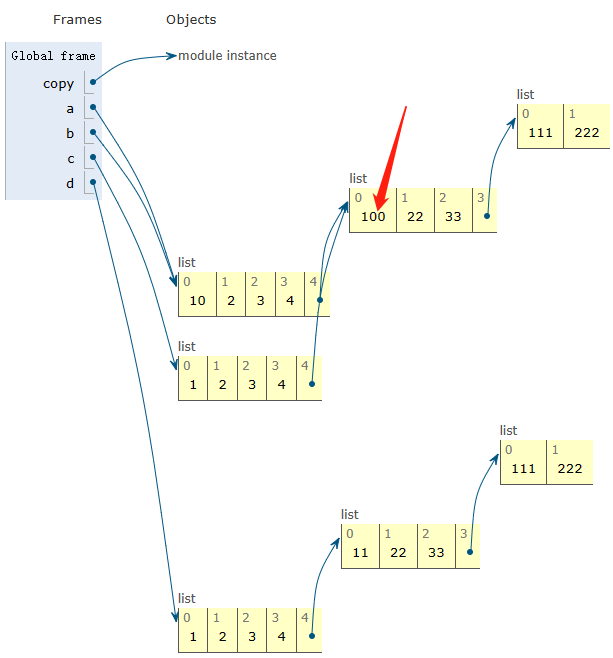

a[4][0] = 100

print('after a[4][0] = 100\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

| Variable (after a[4][0] = 100) | Value |
| --- | --- |
| a | [10, 2, 3, 4, [100, 22, 33, [111, 222]]] |
| b = a | [10, 2, 3, 4, [100, 22, 33, [111, 222]]] |
| c = a.copy() | [1, 2, 3, 4, [100, 22, 33, [111, 222]]] |
| d = copy.deepcopy(a) | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |

The shallow copy c shares the nested list with a, so the change shows up in c as well; the deep copy d is unaffected.

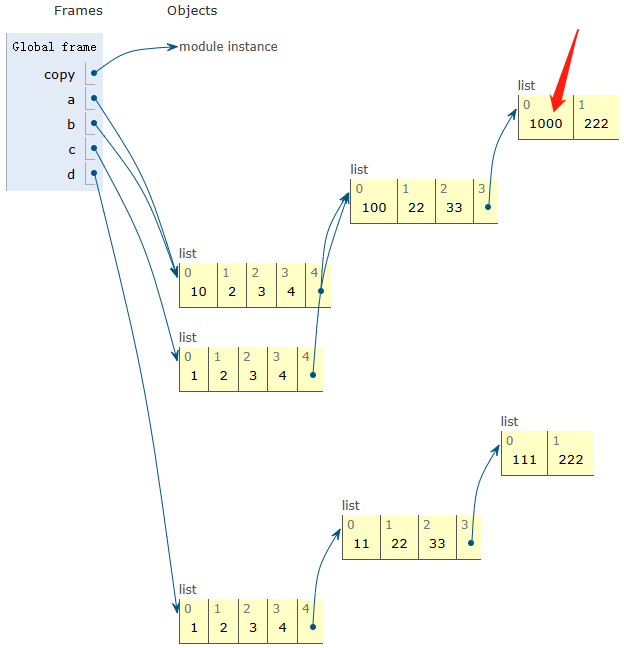

a[4][3][0] = 1000

print('after a[4][3][0] = 1000\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

| Variable (after a[4][3][0] = 1000) | Value |
| --- | --- |
| a | [10, 2, 3, 4, [100, 22, 33, [1000, 222]]] |
| b = a | [10, 2, 3, 4, [100, 22, 33, [1000, 222]]] |
| c = a.copy() | [1, 2, 3, 4, [100, 22, 33, [1000, 222]]] |
| d = copy.deepcopy(a) | [1, 2, 3, 4, [11, 22, 33, [111, 222]]] |

The same holds at any nesting depth: c sees the change, d does not.
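The behaviour above can also be checked without modifying anything, by asking whether two names refer to the same object. The following is a minimal sketch using the `is` operator. The helper variable names (`same_object`, `inner_shared`, and so on) are made up for illustration and are not part of the original code.

```python
import copy

a = [1, 2, 3, 4, [11, 22, 33, [111, 222]]]
b = a                 # plain assignment
c = a.copy()          # shallow copy
d = copy.deepcopy(a)  # deep copy

# Assignment: both names point at one list object.
same_object = b is a

# Shallow copy: the outer list is new, the nested list is the same object.
outer_is_new = c is not a
inner_shared = c[4] is a[4]

# Deep copy: nested lists at every level are new objects.
deep_independent = (d[4] is not a[4]) and (d[4][3] is not a[4][3])

print(same_object, outer_is_new, inner_shared, deep_independent)
# True True True True
```

Identity checks like these explain the tables: any object that is shared (`is` returns True) reflects in-place changes made through either name.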

2. Shallow copy and deep copy in NumPy

import copy

import numpy as np

a1 = np.random.randn(2, 3)

b1 = a1  # plain assignment: same array object

c1 = a1.copy()  # new array with its own data buffer

d1 = copy.deepcopy(a1)  # also an independent array

print('before modify\r\n a1=\r\n', a1, '\r\n','b1 = a1=\r\n', b1, '\r\n','c1 = a1.copy()=\r\n', c1, '\r\n','d1 = copy.deepcopy(a1)=\r\n', d1, '\r\n')

a1[0] = 10  # overwrite the whole first row in place

print('after a1[0] = 10\r\n a1=\r\n', a1, '\r\n','b1 = a1=\r\n', b1, '\r\n','c1 = a1.copy()=\r\n', c1, '\r\n','d1 = copy.deepcopy(a1)=\r\n', d1, '\r\n')

before modify

a1=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]

b1 = a1=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]

c1 = a1.copy()=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]

d1 = copy.deepcopy(a1)=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]

after a1[0] = 10

a1=

[[10. 10. 10. ]

[ 0.14232255 2.93331428 0.88511785]]

b1 = a1=

[[10. 10. 10. ]

[ 0.14232255 2.93331428 0.88511785]]

c1 = a1.copy()=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]

d1 = copy.deepcopy(a1)=

[[ 1.48618757 -0.90409826 2.05529475]

[ 0.14232255 2.93331428 0.88511785]]
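For numeric arrays, both `a1.copy()` and `copy.deepcopy(a1)` give an independent buffer, as the output above shows. A rough sketch of how to confirm this with `np.shares_memory` follows. It also covers two cases the original example does not: views (`a1.view()` and basic indexing) and object arrays, where `.copy()` duplicates only the references. The variable names `v1`, `r1` and `obj` are assumptions added for illustration.

```python
import copy
import numpy as np

a1 = np.arange(6, dtype=float).reshape(2, 3)
b1 = a1                  # plain assignment: same array object
v1 = a1.view()           # view: new array object, same data buffer
r1 = a1[0]               # basic indexing also returns a view
c1 = a1.copy()           # independent data buffer
d1 = copy.deepcopy(a1)   # independent data buffer

same_object = b1 is a1
view_shares = np.shares_memory(a1, v1)
row_shares = np.shares_memory(a1, r1)
copy_shares = np.shares_memory(a1, c1)
deepcopy_shares = np.shares_memory(a1, d1)
print(same_object, view_shares, row_shares, copy_shares, deepcopy_shares)
# True True True False False

# Object arrays store references, so .copy() duplicates only the references.
obj = np.empty(2, dtype=object)
obj[0] = [1, 2]
obj[1] = [3]
obj_copy_shares_list = obj.copy()[0] is obj[0]
obj_deepcopy_shares_list = copy.deepcopy(obj)[0] is obj[0]
print(obj_copy_shares_list, obj_deepcopy_shares_list)
# True False
```

In other words, the shallow/deep distinction only matters in NumPy when the array holds Python objects; for plain numeric dtypes the practical question is "view or copy".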

3. Shallow copy and deep copy in PyTorch

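import torch

a2 = torch.randn(2, 3)

b2 = torch.Tensor(a2)  # legacy constructor: shares memory with a2 in the output below

bb2 = torch.tensor(a2)  # copies the data; PyTorch warns and recommends a2.clone().detach()

c2 = a2.detach()  # shares memory with a2, detached from the autograd graph

cc2 = a2.clone()  # copies the data, stays in the autograd graph

ccc2 = a2.clone().detach()  # copies the data and detaches from the autograd graph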

print('before modify\r\n a2=\r\n', a2, '\r\n','b2 = torch.Tensor(a2)=\r\n', b2, '\r\n','bb2 = torch.tensor(a2)=\r\n', bb2, '\r\n','c2 = a2.detach()=\r\n', c2, '\r\n','cc2 = a2.clone()=\r\n', cc2, '\r\n','ccc2 = a2.clone().detach()=\r\n', ccc2)

a2[0] = 0

print('after modify\r\n a2=\r\n', a2, '\r\n','b2 = torch.Tensor(a2)=\r\n', b2, '\r\n','bb2 = torch.tensor(a2)=\r\n', bb2, '\r\n','c2 = a2.detach()=\r\n', c2, '\r\n','cc2 = a2.clone()=\r\n', cc2, '\r\n','ccc2 = a2.clone().detach()=\r\n', ccc2)

before modify

a2=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

b2 = torch.Tensor(a2)=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

bb2 = torch.tensor(a2)=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

c2 = a2.detach()=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

cc2 = a2.clone()=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

ccc2 = a2.clone().detach()=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

after modify

a2=

tensor([[ 0.0000, 0.0000, 0.0000],

[ 0.8979, -0.4158, 1.1338]])

b2 = torch.Tensor(a2)=

tensor([[ 0.0000, 0.0000, 0.0000],

[ 0.8979, -0.4158, 1.1338]])

bb2 = torch.tensor(a2)=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

c2 = a2.detach()=

tensor([[ 0.0000, 0.0000, 0.0000],

[ 0.8979, -0.4158, 1.1338]])

cc2 = a2.clone()=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])

ccc2 = a2.clone().detach()=

tensor([[-0.6472, 1.3437, -0.3386],

[ 0.8979, -0.4158, 1.1338]])
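The output above shows that `detach()` follows changes to `a2` while `clone()` does not. A minimal sketch of how to check this directly is given below: it compares `data_ptr()` values to test for shared memory and reads `requires_grad` to show the autograd side of each call. Using `requires_grad=True` and a fixed seed are assumptions added here for illustration; the original example uses a plain `torch.randn(2, 3)`.

```python
import torch

torch.manual_seed(0)
a2 = torch.randn(2, 3, requires_grad=True)

c2 = a2.detach()            # same memory, no autograd history
cc2 = a2.clone()            # new memory, still tracked by autograd
ccc2 = a2.clone().detach()  # new memory, no autograd history

detach_shares = c2.data_ptr() == a2.data_ptr()
clone_shares = cc2.data_ptr() == a2.data_ptr()
print(detach_shares, clone_shares)
# True False

print(c2.requires_grad, cc2.requires_grad, ccc2.requires_grad)
# False True False

# Writing through the detached tensor changes a2 as well, not the clones.
c2[0] = 0
a2_row_zeroed = bool((a2[0] == 0).all())
clone_row_zeroed = bool((ccc2[0] == 0).all())
print(a2_row_zeroed, clone_row_zeroed)
# True False
```

When an independent copy with no gradient tracking is needed, `a2.clone().detach()` is the form PyTorch itself recommends in the warning raised by `torch.tensor(a2)`.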

Complete code

import numpy as np

import copy

import torch

a = [1, 2, 3, 4, [11, 22, 33, [111, 222]]]

b = a

c = a.copy()

d = copy.deepcopy(a)

print('before modify\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

a[0] = 10

print('after a[0] = 10\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

a[4][0] = 100

print('after a[4][0] = 100\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

a[4][3][0] = 1000

print('after a[4][3][0] = 1000\r\n a=\r\n', a, '\r\n','b = a=\r\n', b, '\r\n','c = a.copy()=\r\n', c, '\r\n','d = copy.deepcopy(a)\r\n', d, '\r\n')

a1 = np.random.randn(2, 3)

b1 = a1

c1 = a1.copy()

d1 = copy.deepcopy(a1)

print('before modify\r\n a1=\r\n', a1, '\r\n','b1 = a1=\r\n', b1, '\r\n','c1 = a1.copy()=\r\n', c1, '\r\n','d1 = copy.deepcopy(a1)=\r\n', d1, '\r\n')

a1[0] = 10

print('after a1[0] = 10\r\n a1=\r\n', a1, '\r\n','b1 = a1=\r\n', b1, '\r\n','c1 = a1.copy()=\r\n', c1, '\r\n','d1 = copy.deepcopy(a1)=\r\n', d1, '\r\n')

a2 = torch.randn(2, 3)

b2 = torch.Tensor(a2)

bb2 = torch.tensor(a2)

c2 = a2.detach()

cc2 = a2.clone()

ccc2 = a2.clone().detach()

print('before modify\r\n a2=\r\n', a2, '\r\n','b2 = torch.Tensor(a2)=\r\n', b2, '\r\n','bb2 = torch.tensor(a2)=\r\n', bb2, '\r\n','c2 = a2.detach()=\r\n', c2, '\r\n','cc2 = a2.clone()=\r\n', cc2, '\r\n','ccc2 = a2.clone().detach()=\r\n', ccc2)

a2[0] = 0

print('after a2[0] = 0\r\n a2=\r\n', a2, '\r\n','b2 = torch.Tensor(a2)=\r\n', b2, '\r\n','bb2 = torch.tensor(a2)=\r\n', bb2, '\r\n','c2 = a2.detach()=\r\n', c2, '\r\n','cc2 = a2.clone()=\r\n', cc2, '\r\n','ccc2 = a2.clone().detach()=\r\n', ccc2)