火车头采集器AI伪原创【php源码】

大家好,本文将围绕python作业提交什么文件展开说明,python123怎么提交作业是一个很多人都想弄明白的事情,想搞清楚python期末作业程序需要先了解以下几个事情。

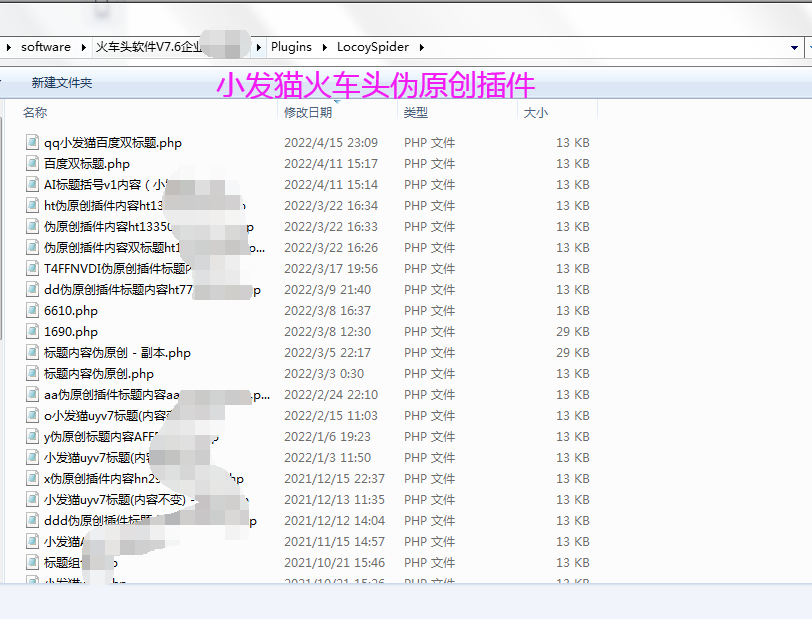

火车头采集ai伪原创插件截图:

I have a Python project whose folder has the following structure:

    main_directory/
        lib/
            lib.py
        run/
            .py

.py is

    from pyspark.sql import SparkSession

    from lib.lib import add_two

    spark = SparkSession \
        .builder \
        .master('yarn') \
        .appName('') \
        .getOrCreate()

    print(add_two(1, 2))

and lib.py is

    def add_two(x, y):
        return x + y

I want to launch the script as a Dataproc job on GCP. I have searched online, but I have not fully understood how to do it. I am trying to launch the script with

    gcloud dataproc jobs submit pyspark --cluster=$CLUSTER_NAME --region=$REGION \
        run/.py

But I receive the following error message:

    from lib.lib import add_two
    ModuleNotFoundError: No module named 'lib.lib'

Could you help me understand how to launch the job on Dataproc? The only way I have found is to drop the package prefix from the import, changing .py to:

    from lib import add_two

and then launch the job as

    gcloud dataproc jobs submit pyspark --cluster=$CLUSTER_NAME --region=$REGION \
        --files /lib/lib.py \
        /run/.py

However, I would like to avoid the tedious process of listing the files manually every time.

Following @Igor's suggestion to pack the files into a zip archive, I have found that

    zip -j --update -r libpack.zip /projectfolder/* && spark-submit --py-files libpack.zip /projectfolder/run/.py

works. However, because of -j, this places every file at the root of libpack.zip, so it would break if subfolders contained files with the same name.

Any suggestions?

Solution

To zip the dependencies:

    cd base-path-to-python-modules
    zip -qr deps.zip ./* -x .py
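The same packaging step can be sketched in Python with the standard-library zipfile module. Each file keeps its path relative to the project root, so lib/lib.py stays lib/lib.py and same-named files in different subfolders do not collide. This is only an illustration: the function name zip_python_tree, the throwaway project built in a temporary directory, and the empty lib/__init__.py are assumptions that do not appear in the original post. The __init__.py is added so that lib is a regular package; whether a package without it imports cleanly from a zip archive is not something this sketch checks.

```python
import sys
import tempfile
import zipfile
from pathlib import Path


def zip_python_tree(src_dir, zip_path):
    """Zip every .py file under src_dir, keeping paths relative to src_dir."""
    src = Path(src_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(src.rglob("*.py")):
            # arcname keeps "lib/lib.py" instead of flattening it to "lib.py".
            zf.write(path, arcname=path.relative_to(src).as_posix())
    return Path(zip_path)


if __name__ == "__main__":
    # Hypothetical throwaway project that mirrors the layout in the question.
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "main_directory"
        (project / "lib").mkdir(parents=True)
        (project / "lib" / "__init__.py").write_text("")  # assumption, see above
        (project / "lib" / "lib.py").write_text(
            "def add_two(x, y):\n    return x + y\n"
        )

        deps = zip_python_tree(project, Path(tmp) / "deps.zip")
        with zipfile.ZipFile(deps) as zf:
            print(zf.namelist())

        # Put the archive on sys.path and import the package from inside it.
        sys.path.insert(0, str(deps))
        try:
            from lib.lib import add_two
            print(add_two(1, 2))
        finally:
            sys.path.remove(str(deps))
```

Putting the archive on sys.path is, broadly, what makes the absolute import from lib.lib resolve once the archive is shipped with the job, which is why the directory layout inside the zip has to match the package layout.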

Copy deps.zip to HDFS or Google Cloud Storage (gs://), then pass its URI when submitting the job, as shown below.

Submit a Python project (PySpark) using Dataproc's Python client library:

    from google.cloud import dataproc_v1
    from google.cloud.dataproc_v1.gapic.transports import (
        job_controller_grpc_transport)

    region =
    cluster_name =
    project_id =

    job_transport = (
        job_controller_grpc_transport.JobControllerGrpcTransport(
            address='{}-dataproc.googleapis.com:443'.format(region)))
    dataproc_job_client = dataproc_v1.JobControllerClient(job_transport)

    job_file =

    # command-line arguments for the main job file
    args = ['args1', 'arg2']

    # required only if the main Python job file imports from other modules;
    # each entry can be a .py, .zip, or .egg file
    additional_python_files = ['hdfs://path/to/deps.zip', 'gs://path/to/moredeps.zip']

    job_details = {
        'placement': {
            'cluster_name': cluster_name
        },
        'pyspark_job': {
            'main_python_file_uri': job_file,
            'args': args,
            'python_file_uris': additional_python_files
        }
    }

    res = dataproc_job_client.submit_job(project_id=project_id,
                                         region=region,
                                         job=job_details)
    job_id = res.reference.job_id

    print(f'Submitted dataproc job id: {job_id}')
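The job_details dictionary above can also be built by a small helper, which keeps the field names in one place and rejects dependency files that are not .py, .zip, or .egg. This is a minimal sketch: the helper name build_pyspark_job and the gs://example-bucket URIs are hypothetical, and the dictionary it returns still has to be passed to submit_job as in the code above. The transport import above matches older releases of google-cloud-dataproc; as far as I know, newer releases construct the client differently, so check the version you have installed.

```python
ALLOWED_SUFFIXES = (".py", ".zip", ".egg")


def build_pyspark_job(cluster_name, main_python_file_uri,
                      args=(), python_file_uris=()):
    """Return a job dict with the same shape as job_details above."""
    for uri in python_file_uris:
        # Dependency files must be .py, .zip, or .egg, per the comment above.
        if not uri.endswith(ALLOWED_SUFFIXES):
            raise ValueError(f"unsupported dependency file: {uri}")

    pyspark_job = {"main_python_file_uri": main_python_file_uri}
    if args:
        pyspark_job["args"] = list(args)
    if python_file_uris:
        pyspark_job["python_file_uris"] = list(python_file_uris)

    return {
        "placement": {"cluster_name": cluster_name},
        "pyspark_job": pyspark_job,
    }


if __name__ == "__main__":
    # Hypothetical cluster name and bucket paths, for illustration only.
    job = build_pyspark_job(
        cluster_name="example-cluster",
        main_python_file_uri="gs://example-bucket/run/main.py",
        args=["args1", "arg2"],
        python_file_uris=["gs://example-bucket/deps.zip"],
    )
    print(job)
```

Validating the suffixes before submission surfaces a mistyped dependency path locally instead of as a failed job on the cluster.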