yolov8-pos/yolov11-pos openvino C++部署

一、前言

本篇接上一篇 《yolov8-pos/yolov11-pos 训练》,在训练得到模型并转换为onnx格式之后,需要将其进行模型部署,如果有英伟达GPU的话,可以使用 tensorrt-alpha , 但是这里由于硬件平台为工控机,windows系统,考虑到工控机无GPU,则需使用openvino 进行加速,openvino 是英特尔开发的针对CPU的加速模型推理的库,在Python\C++\C#,均有相应接口。本篇主要使用 openvino,C++端进行模型推理加速。

二、openvino库配置

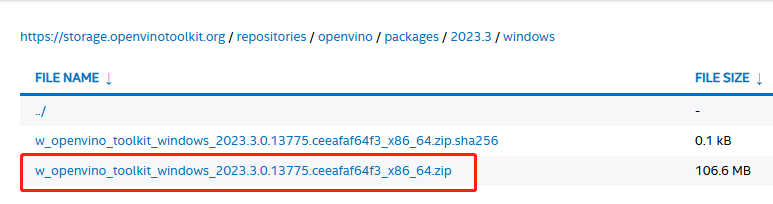

使用谷歌浏览器打开 openvino 库网址 ,下载

w_openvino_toolkit_windows_2023.3.0.13775.ceeafaf64f3_x86_64.zip

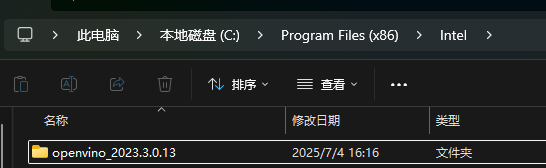

解压该zip, 改成个短些的名字,openvino_2023.3.0.13 , 复制到 C盘C:\Program Files (x86)\Intel 没有Intel 就自己创建

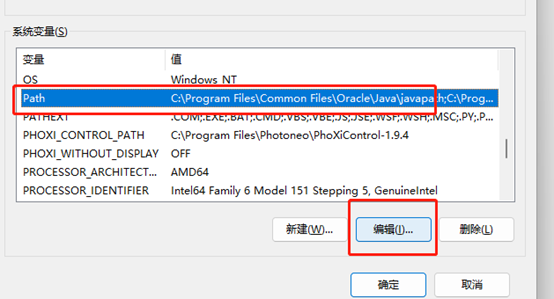

编辑系统环境变量

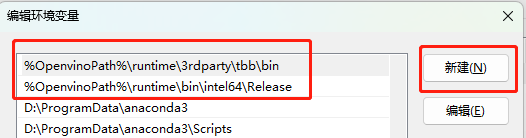

新建个 OpenvinoPath 名 和对应的 值 C:\Program Files (x86)\Intel\openvino_2023.3.0.13。然后再编辑Path变量,这里配置的是Release版的,速度比Debug快很多

%OpenvinoPath%\runtime\bin\intel64\Release

%OpenvinoPath%\runtime\3rdparty\tbb\bin

重启电脑一下

(2)opencv配置

省略,版本4点几的就行,我用的是4.8.0 ,可以参看,需要配置 Release版的

在vs2019上配置opencv,百分百成功_vs2019 配置opencv-CSDN博客

三、openvino VS2019 配置

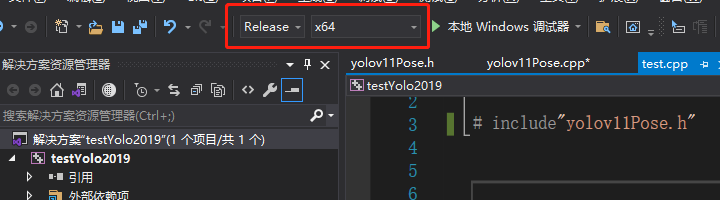

我用的是用VS2019,最好别低于2017,新建个空C++工程 testYolo2019 Release X64

项目-》属性 ,或者按Alt+Enter

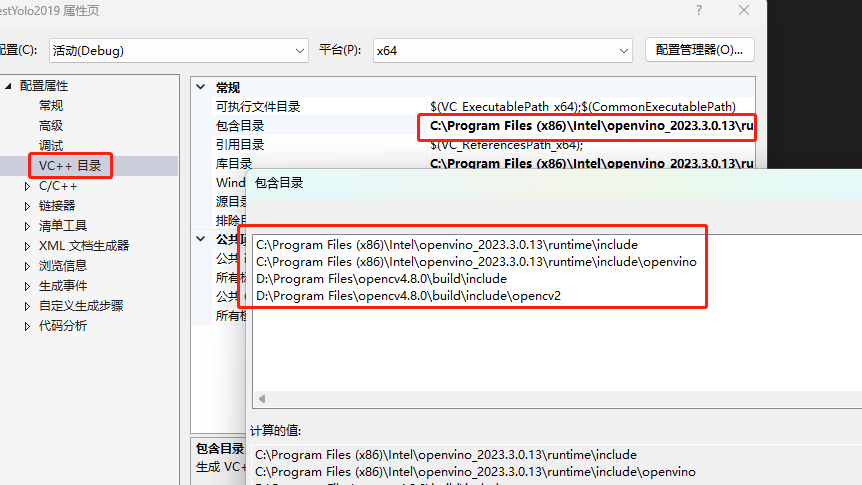

属性-》VC++目录-》包含目录,添加openvino库和opencv库的头文件的路径

C:\Program Files (x86)\Intel\openvino_2023.3.0.13\runtime\include

C:\Program Files (x86)\Intel\openvino_2023.3.0.13\runtime\include\openvino

下面Opencv的,看自己的路径

D:\Program Files\opencv4.8.0\build\include

D:\Program Files\opencv4.8.0\build\include\opencv2

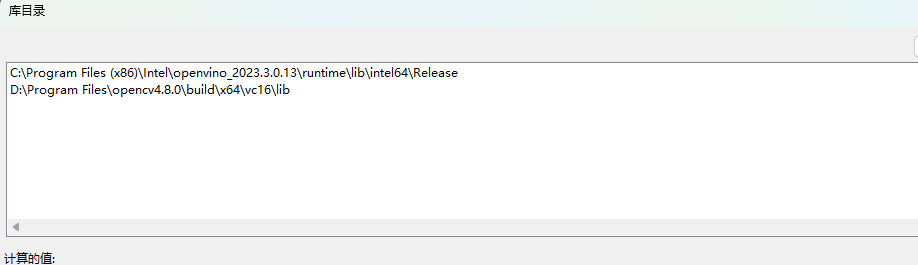

属性-》VC++目录-》库目录,添加openvino库和opencv库的lib文件的路径

C:\Program Files (x86)\Intel\openvino_2023.3.0.13\runtime\lib\intel64\Release

下面Opencv的,看自己的路径

D:\Program Files\opencv4.8.0\build\x64\vc16\lib

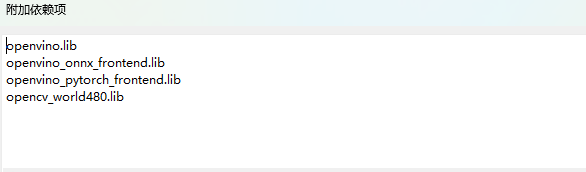

连接器->输入-》附加依赖项

openvino.lib

openvino_onnx_frontend.lib

openvino_pytorch_frontend.lib

opencv_world480.lib (这个需要看自己的opencv的版本)

四、代码

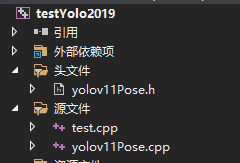

在工程里添加头文件 yolov11Pose.h 源文件 yolov11Pose.cpp test.cpp

//yolov11Pose.h#pragma once

#include<string>

#include <iostream>

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include<openvino/openvino.hpp>

#include <fstream>

#include <vector>

#include <random>

#include <algorithm>struct Config {float ripe_thre;float stem_thre;//float confThreshold;//float nmsThreshold;//float scoreThreshold;//int inpWidth;//int inpHeight;//std::string onnx_path;

};struct Resize

{cv::Mat resized_image;int dw;int dh;

};struct stby

{float score; // 分数cv::Point2i pt_1; // 第一个点,草莓头cv::Point2i pt_2; // 第二个点,草莓尖cv::Rect box; // 矩形框

};struct Detection {int class_id;float confidence;cv::Point2i pt_1;cv::Point2i pt_2;cv::Rect box;};class YOLOV8 {

public:YOLOV8( );~YOLOV8();void detImg(cv::Mat& frame, bool is_show=false);private:float ripe_thre;float stem_thre;float confThreshold;float nmsThreshold;float scoreThreshold;size_t cls_num; //类别数int inpWidth;int inpHeight;float rx; // the width ratio of original image and resized imagefloat ry; // the height ratio of original image and resized imagestd::string onnx_path;Resize resize;ov::Tensor input_tensor;ov::InferRequest infer_request;ov::CompiledModel compiled_model;void initialmodel();void preprocess_img(cv::Mat& frame);void postprocess_img(cv::Mat& frame, float* detections, ov::Shape& output_shape , bool is_show);void letterbox(const cv::Mat& source, cv::Mat& result);};//yolov11Pose.cpp#include"yolov11Pose.h"

#include<iostream>

#include<string>

#include<time.h>using namespace cv;

using namespace std;

using namespace dnn;bool isInVectorInt(int j, const std::vector<int>& vec) {// 使用 std::find 来查找 j 是否在 vec 中return std::find(vec.begin(), vec.end(), j) != vec.end();

}cv::Mat letterbox2(const cv::Mat& img, int target_size, int color = 114) {int width = img.cols;int height = img.rows;float r = float(target_size) / std::max(width, height); // 缩放比例float new_width = width * r;float new_height = height * r;// 计算边框大小int dw = (target_size - new_width) / 2;int dh = (target_size - new_height) / 2;cv::Mat new_img;cv::resize(img, new_img, cv::Size(new_width, new_height)); // 调整图像大小// 将新图像填充到目标尺寸cv::Mat result(target_size, target_size, img.type(), cv::Scalar(color, color, color));new_img.copyTo(result(cv::Rect(dw, dh, new_width, new_height))); // 放置到中心位置return result;

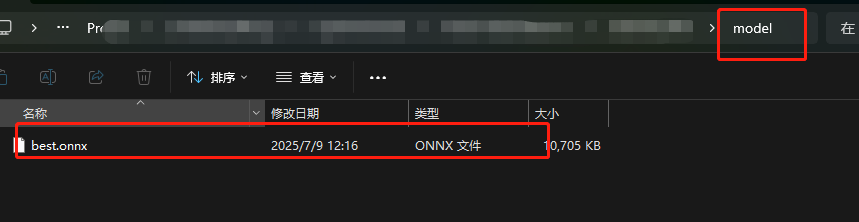

}const vector<string> coconame = { "ripe","stem","raw","motorcycle","airplane","bus","train","truck","boat","traffic light","fire hydrant","stop sign","parking meter","bench","bird","cat","dog","horse","sheep","cow","elephant","bear","zebra","giraffe","backpack","umbrella","handbag","tie","suitcase","frisbee","skis","snowboard","sports ball","kite","baseball bat","baseball glove","skateboard","surfboard","tennis racket","bottle","wine glass","cup","fork","knife","spoon","bowl","banana","apple","sandwich","orange","broccoli","carrot","hot dog","pizza","donut","cake","chair","couch","potted plant","bed","dining table","toilet","tv","laptop","mouse","remote","keyboard","cell phone","microwave","oven","toaster","sink","refrigerator","book","clock","vase","scissors","teddy bear","hair drier","toothbrush" };YOLOV8::YOLOV8( ) {this->confThreshold = 0.3; // config.confThreshold; , 设置低一些this->nmsThreshold = 0.5; // config.nmsThreshold;this->scoreThreshold = 0.5; // config.scoreThreshold;this->cls_num = 2; this->inpWidth = 640; // config.inpWidth;this->inpHeight = 640; // config.inpHeight;this->onnx_path = "model/best.onnx"; //config.onnx_path;this->initialmodel();}

YOLOV8::~YOLOV8() {}

void YOLOV8::detImg(Mat& frame, bool is_show) {/*熟果的框和梗的框 的vector长度和顺序不对应 , 需要自己做对应*/preprocess_img(frame);infer_request.infer();const ov::Tensor& output_tensor = infer_request.get_output_tensor();ov::Shape output_shape = output_tensor.get_shape();float* detections = output_tensor.data<float>();this->postprocess_img(frame, detections, output_shape , is_show);}void YOLOV8::initialmodel() {ov::Core core;std::shared_ptr<ov::Model> model = core.read_model(this->onnx_path);// Preprocessing setup for the modelov::preprocess::PrePostProcessor ppp = ov::preprocess::PrePostProcessor(model);ppp.input().tensor().set_element_type(ov::element::u8).set_layout("NHWC").set_color_format(ov::preprocess::ColorFormat::BGR);ppp.input().preprocess().convert_element_type(ov::element::f32).convert_color(ov::preprocess::ColorFormat::RGB).scale({ 255, 255, 255 });ppp.input().model().set_layout("NCHW");ppp.output().tensor().set_element_type(ov::element::f32);model = ppp.build(); // Build the preprocessed modelthis->compiled_model = core.compile_model(model, "CPU");this->infer_request = compiled_model.create_infer_request();

}void YOLOV8::letterbox(const cv::Mat& source, cv::Mat& result)

{int col = source.cols;int row = source.rows;int _max = MAX(col, row);result = Mat::zeros(_max, _max, CV_8UC3);source.copyTo(result(Rect(0, 0, col, row)));

}void YOLOV8::preprocess_img(Mat& frame) {try {/* float width = frame.cols;float height = frame.rows;cv::Size new_shape = cv::Size(inpWidth, inpHeight);float r = float(new_shape.width / max(width, height));int new_unpadW = int(round(width * r));int new_unpadH = int(round(height * r));cv::resize(frame, resize.resized_image, cv::Size(new_unpadW, new_unpadH), 0, 0 , cv::INTER_AREA); resize.dw = new_shape.width - new_unpadW;resize.dh = new_shape.height - new_unpadH;cv::Scalar color = cv::Scalar(110, 110, 110);cv::copyMakeBorder(resize.resized_image, resize.resized_image, 0, resize.dh, 0, resize.dw, cv::BORDER_CONSTANT, color);this->rx = (float)frame.cols / (float)(resize.resized_image.cols - resize.dw);this->ry = (float)frame.rows / (float)(resize.resized_image.rows - resize.dh);*/float width = frame.cols;float height = frame.rows;cv::Size new_shape = cv::Size(inpWidth, inpHeight);float r = float(new_shape.width / max(width, height));int new_unpadW = int(round(width * r));int new_unpadH = int(round(height * r));resize.dw = new_shape.width - new_unpadW;resize.dh = new_shape.height - new_unpadH;resize.resized_image = letterbox2(frame, 640 );this->rx = (float)frame.cols / (float)(resize.resized_image.cols - resize.dw );this->ry = (float)frame.rows / (float)(resize.resized_image.rows - resize.dh );//cv::imshow("resized", resize.resized_image);float* input_data = (float*)resize.resized_image.data;input_tensor = ov::Tensor(compiled_model.input().get_element_type(), compiled_model.input().get_shape(), input_data);infer_request.set_input_tensor(input_tensor); }catch (const std::exception& e) {std::cerr << "exception: " << e.what() << std::endl;}catch (...) {std::cerr << "unknown exception" << std::endl;}}void YOLOV8::postprocess_img(Mat& frame, float* detections, ov::Shape& output_shape ,bool is_show ) {std::vector<cv::Rect> boxes;vector<int> class_ids;vector<float> confidences;vector<Point2i> pt_1_vec;vector<Point2i> pt_2_vec;int out_rows = output_shape[1];int out_cols = output_shape[2];const cv::Mat det_output(out_rows, out_cols, CV_32F, (float*)detections);for (int i = 0; i < det_output.cols; ++i) {//const cv::Mat classes_scores = det_output.col(i).rowRange(4, 84); const cv::Mat classes_scores = det_output.col(i).rowRange(4, this->cls_num+4 ); // 这样才是正确排布 11 , 前4个是框 第5个开始才是类别cv::Point class_id_point;double score;cv::minMaxLoc(classes_scores, nullptr, &score, nullptr, &class_id_point);if (score > this->confThreshold ) {const float cx = det_output.at<float>(0, i);const float cy = det_output.at<float>(1, i);const float ow = det_output.at<float>(2, i);const float oh = det_output.at<float>(3, i);const float ax = det_output.at<float>(4 + this->cls_num, i); // 前4个是框,第5个开始是类别,类别完了才是 x , y , 可见idconst float ay = det_output.at<float>(5 + this->cls_num, i);const float bx = det_output.at<float>(7 + this->cls_num, i);const float by = det_output.at<float>(8 + this->cls_num, i);cv::Point2i pt_a;pt_a.x = static_cast<int>(ax);pt_a.y = static_cast<int>(ay);cv::Point2i pt_b;pt_b.x = static_cast<int>(bx);pt_b.y = static_cast<int>(by);cv::Rect box;box.x = static_cast<int>((cx - 0.5 * ow));box.y = static_cast<int>((cy - 0.5 * oh));box.width = static_cast<int>(ow);box.height = static_cast<int>(oh);boxes.push_back(box);class_ids.push_back(class_id_point.y);confidences.push_back(score);pt_1_vec.push_back(pt_a);pt_2_vec.push_back(pt_b);}}std::vector<int> nms_result;cv::dnn::NMSBoxes(boxes, confidences, this->scoreThreshold, this->nmsThreshold, nms_result);std::vector<Detection> output;for (int i = 0; i < nms_result.size(); i++){Detection result;int idx = nms_result[i];result.class_id = class_ids[idx];result.confidence = confidences[idx];result.box = boxes[idx];result.pt_1 = pt_1_vec[idx];result.pt_2 = pt_2_vec[idx];output.push_back(result);}cout << "output_size:" << output.size() << endl;for (int i = 0; i < output.size(); i++){auto detection = output[i];auto box = detection.box;auto classId = detection.class_id;auto confidence = detection.confidence;auto pt_1 = detection.pt_1;auto pt_2 = detection.pt_2;pt_1.x = this->rx * ( pt_1.x - resize.dw / 2) ;pt_1.y = this->ry * ( pt_1.y - resize.dh / 2);pt_2.x = this->rx * (pt_2.x - resize.dw / 2);pt_2.y = this->ry * (pt_2.y - resize.dh / 2);box.x = this->rx *( box.x - resize.dw / 2);box.y = this->ry * (box.y - resize.dh / 2);box.width = this->rx * box.width;box.height = this->ry * box.height;if (is_show) {float xmax = box.x + box.width;float ymax = box.y + box.height;// detection boxstd::random_device rd;std::mt19937 gen(rd());std::uniform_int_distribution<int> dis(100, 255);std::vector<cv::Scalar> color_vec = {cv::Scalar(255, 0, 0), // 蓝色cv::Scalar(0, 255, 0), // 绿色cv::Scalar(0, 0, 255), // 红色cv::Scalar(255, 255, 0), // 黄色cv::Scalar(0, 255, 255) // 青色};cv::Scalar color = color_vec[classId];cv::rectangle(frame, cv::Point(box.x, box.y), cv::Point(xmax, ymax), color, 6);// Detection box textstd::string classString = coconame[classId] + ' ' + std::to_string(confidence).substr(0, 4);cv::Size textSize = cv::getTextSize(classString, cv::FONT_HERSHEY_DUPLEX, 1, 2, 0);cv::Rect textBox(box.x, box.y - 80, textSize.width + 320, textSize.height + 40);cv::rectangle(frame, textBox, color, cv::FILLED);cv::putText(frame, classString, cv::Point(box.x + 5, box.y - 10), cv::FONT_HERSHEY_DUPLEX, 2.5, cv::Scalar(0, 0, 0), 8, 0);int radius = 14;if (classId == 0) { //草莓才要特征点cv::circle(frame, pt_1, radius, cv::Scalar(255, 255, 0), 4);cv::circle(frame, pt_2, radius, cv::Scalar(0, 255, 255), 4);}}} if ( is_show ){cv::namedWindow("image", 2);cv::imshow("image", frame);cv::waitKey(1);}}// test.cpp

#include <iostream># include"yolov11Pose.h"int main(int argc, char* argv[]) {YOLOV8 yolomodel ; // 初始化模型int src_w = 800;int src_h = 600;cv::VideoCapture cap = cv::VideoCapture(0, cv::CAP_ANY);cap.set(cv::CAP_PROP_FRAME_WIDTH, src_w);cap.set(cv::CAP_PROP_FRAME_HEIGHT, src_h);cap.set(cv::CAP_PROP_FOURCC, cv::VideoWriter::fourcc('M', 'J', 'P', 'G'));// 检查摄像头是否成功打开if (!cap.isOpened()) {std::cerr << "Error: Could not open the camera!" << std::endl;return -1;}// 用于存储摄像头帧的变量cv::Mat frame;while (true) {cap >> frame; // 从摄像头读取一帧// 检查帧是否有效if (frame.empty()) {std::cerr << "Error: Could not capture frame!" << std::endl;break;}clock_t start, end;start = clock();yolomodel.detImg(frame ,true); // 最后参数是是否可视化显示图片end = clock();std::cout << "FPS = " << 1. / (double(end - start) / CLOCKS_PER_SEC) << std::endl;}cv::destroyAllWindows();return 0;}

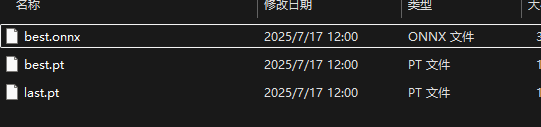

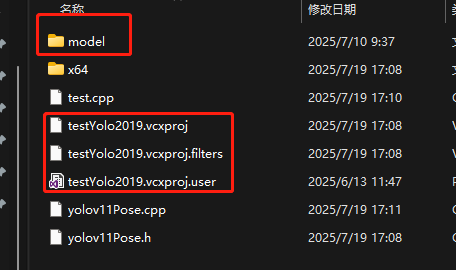

添加完代码后,需要把上一篇训练并转换好的onnx文件拷贝过来

可查看上一篇 《yolov8-pos/yolov11-pos 训练》

在工程目录,vcxproj 文件同目录,创建 model文件夹,把 上面的 best.onnx拷贝至该model文件夹

五、编译运行

回到VS2019 ,编译运行

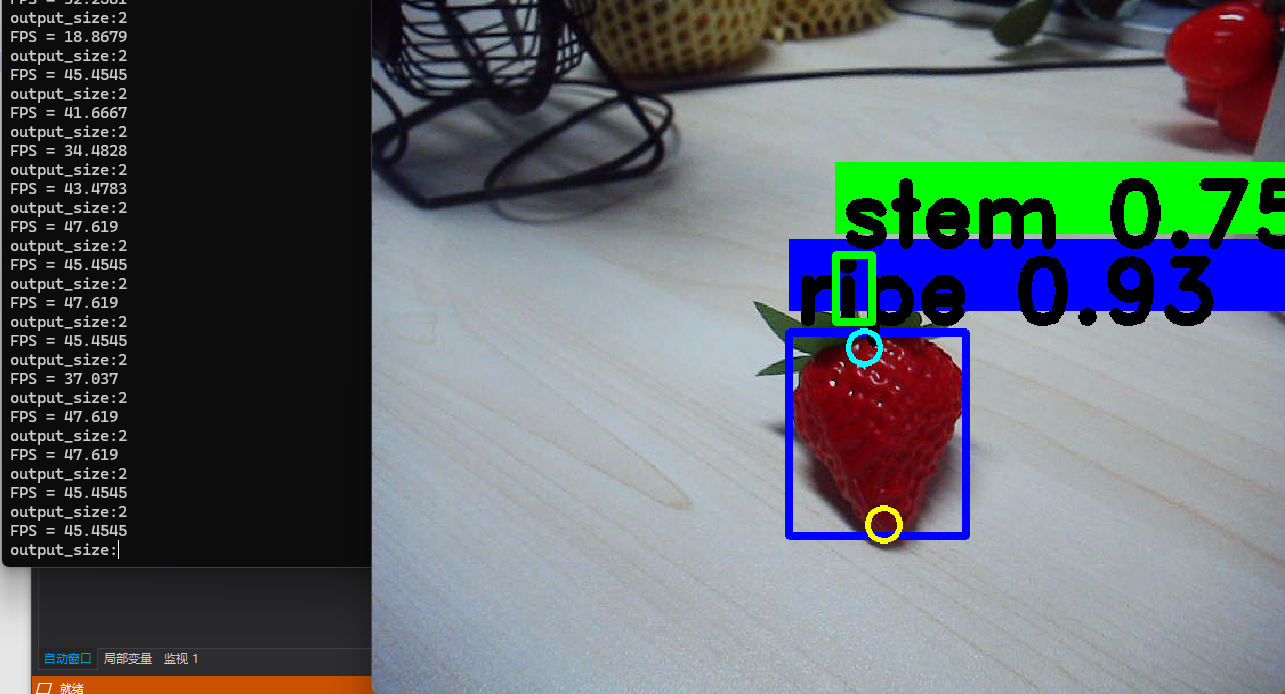

可把摄像头检测到的草莓边框和2关键点、果柄边框给显示出来,我的CPU是 12400K , FPS还挺高,有40+,不过是n模型运行快,换成s模型,速度则会降至20帧左右

~~![]() 请多点赞支持,您的点赞是我写文章的动力 ~~

请多点赞支持,您的点赞是我写文章的动力 ~~