Spring AI Series: AI Model API

Original post: knowledge base. Comments and discussion are welcome.

AI Model API

Portable Model API across AI providers for Chat, Text to Image, Audio Transcription, Text to Speech, and Embedding models. Both synchronous and streaming API options are supported. Dropping down to access model-specific features is also supported.

AI models from OpenAI, Microsoft, Amazon, Google, Amazon Bedrock, Hugging Face, and more are supported.

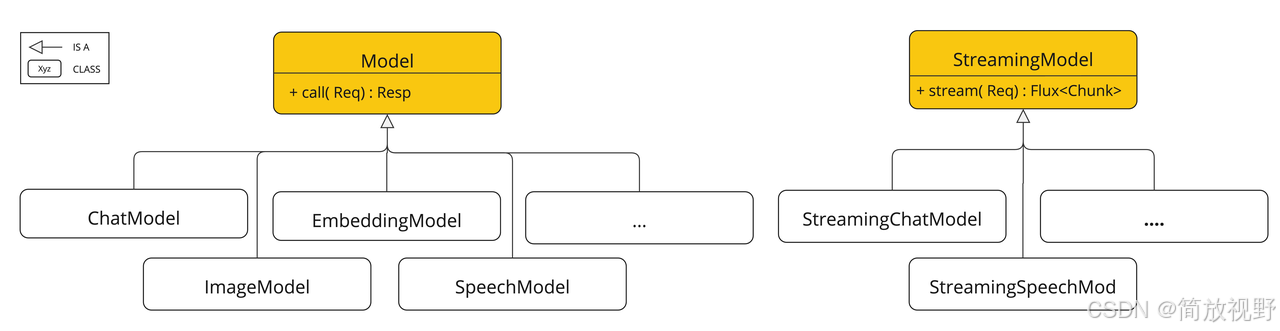

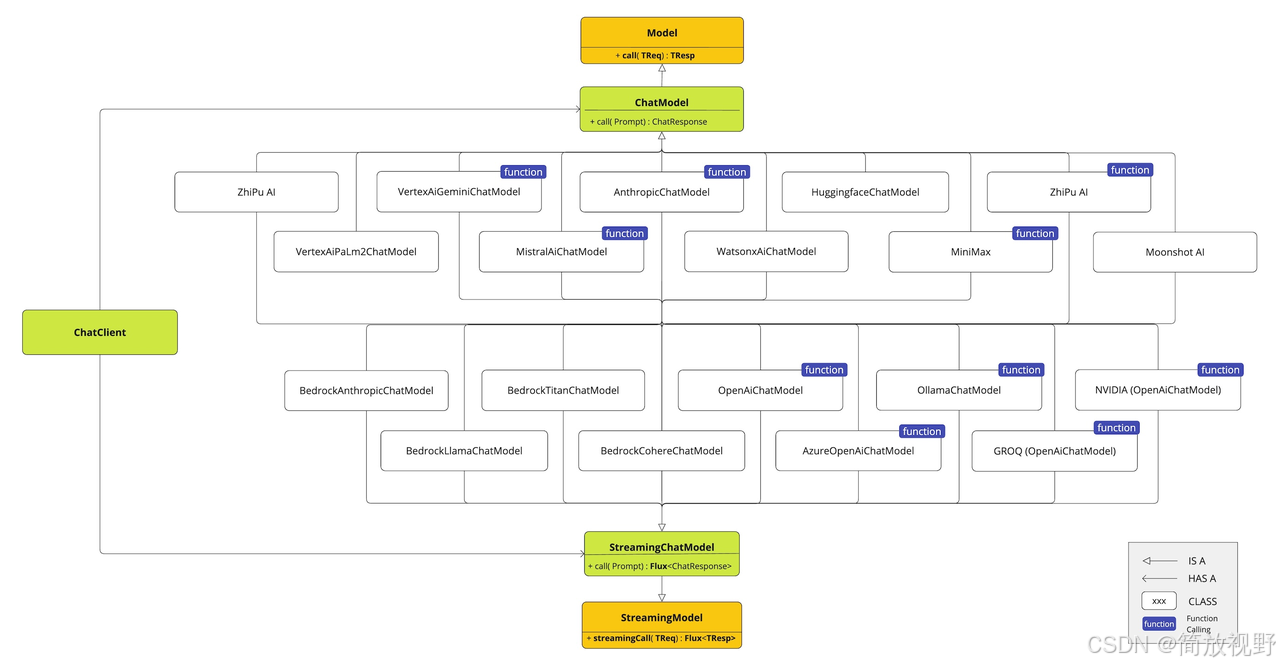

The AI model API is organized in three layers:

- Domain abstraction: Model, StreamingModel

- Capability abstraction: ChatModel, EmbeddingModel

- Provider implementation: OpenAiChatModel, VertexAiGeminiChatModel, AnthropicChatModel
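
The three layers can be sketched with simplified stand-in types. This is only an illustration that uses the JDK alone: `SimpleModel`, `SimpleChatModel`, `EchoChatModel`, and `LayersSketch` are hypothetical names, and their signatures are deliberately smaller than the real Spring AI ones.

```java
// Layer 1, domain abstraction: a generic "request in, response out" contract.
interface SimpleModel<TReq, TRes> {
    TRes call(TReq request);
}

// Layer 2, capability abstraction: fixes the generic types for one capability (chat).
interface SimpleChatModel extends SimpleModel<String, String> {
}

// Layer 3, provider implementation: a hypothetical provider that echoes its input.
class EchoChatModel implements SimpleChatModel {
    @Override
    public String call(String request) {
        return "echo: " + request;
    }
}

class LayersSketch {
    // Application code depends on the capability layer only, so providers can be swapped.
    static String ask(SimpleChatModel model, String question) {
        return model.call(question);
    }

    public static void main(String[] args) {
        System.out.println(ask(new EchoChatModel(), "hello"));
    }
}
```

Because `ask` accepts the capability interface, replacing `EchoChatModel` with another implementation would not require changing the calling code.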

AI Model Domain Abstractions

Model

The API for invoking an AI model.

    /**
     * The Model interface provides a generic API for invoking AI models. It is designed to
     * handle the interaction with various types of AI models by abstracting the process of
     * sending requests and receiving responses. The interface uses Java generics to
     * accommodate different types of requests and responses, enhancing flexibility and
     * adaptability across different AI model implementations.
     * <p></p>
     * API for invoking AI models.
     *
     * @param <TReq> the generic type of the request to the AI model
     * @param <TRes> the generic type of the response from the AI model
     * @author Mark Pollack
     * @since 0.8.0
     */
    public interface Model<TReq extends ModelRequest<?>, TRes extends ModelResponse<?>> {

        /**
         * Executes a method call to the AI model.
         * @param request the request object to be sent to the AI model
         * @return the response from the AI model
         */
        TRes call(TReq request);

    }

StreamingModel

The API for invoking an AI model with a streaming response.

    /**
     * The StreamingModel interface provides a generic API for invoking AI models with a
     * streaming response. It abstracts the process of sending requests and receiving
     * streaming responses. The interface uses Java generics to accommodate different types of
     * requests and responses, enhancing flexibility and adaptability across different AI
     * model implementations.
     * <p></p>
     * API for invoking AI models with a streaming response.
     *
     * @param <TReq> the generic type of the request to the AI model
     * @param <TResChunk> the generic type of a single item in the streaming response from the
     * AI model
     * @author Christian Tzolov
     * @since 0.8.0
     */
    public interface StreamingModel<TReq extends ModelRequest<?>, TResChunk extends ModelResponse<?>> {

        /**
         * Executes a method call to the AI model.
         * @param request the request object to be sent to the AI model
         * @return the streaming response from the AI model
         */
        Flux<TResChunk> stream(TReq request);

    }
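
The difference between a single response and a stream of chunks can be illustrated without Reactor. The sketch below is an assumption-laden simplification: it substitutes the JDK's pull-based `java.util.stream.Stream` for the reactive `Flux`, and `SimpleStreamingModel`, `WordStreamingModel`, and `StreamingSketch` are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical stand-in for StreamingModel; Stream<T> replaces Reactor's Flux<T>.
interface SimpleStreamingModel<TReq, TResChunk> {
    Stream<TResChunk> stream(TReq request);
}

// Hypothetical provider that emits the request back one word at a time.
class WordStreamingModel implements SimpleStreamingModel<String, String> {
    @Override
    public Stream<String> stream(String request) {
        return Arrays.stream(request.split(" "));
    }
}

class StreamingSketch {
    // Collects every chunk; a real client would usually render chunks as they arrive.
    static List<String> collectChunks(SimpleStreamingModel<String, String> model, String request) {
        return model.stream(request).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(collectChunks(new WordStreamingModel(), "a b c"));
    }
}
```

Unlike this sketch, a `Flux` is asynchronous and pushes chunks to subscribers, so the real interface suits non-blocking, token-by-token output.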

ModelRequest

The request passed to an AI model.

    /**
     * Interface representing a request to an AI model. This interface encapsulates the
     * necessary information required to interact with an AI model, including instructions or
     * inputs (of generic type T) and additional model options. It provides a standardized way
     * to send requests to AI models, ensuring that all necessary details are included and can
     * be easily managed.
     * <p></p>
     * The request passed to an AI model.
     *
     * @param <T> the type of instructions or input required by the AI model
     * @author Mark Pollack
     * @since 0.8.0
     */
    public interface ModelRequest<T> {

        /**
         * Retrieves the instructions or input required by the AI model.
         * @return the instructions or input required by the AI model
         */
        T getInstructions(); // required input

        /**
         * Retrieves the customizable options for AI model interactions.
         * @return the customizable options for AI model interactions
         */
        ModelOptions getOptions();

    }

ModelResponse

The response returned by an AI model.

    /**
     * Interface representing the response received from an AI model. This interface provides
     * methods to access the main result or a list of results generated by the AI model, along
     * with the response metadata. It serves as a standardized way to encapsulate and manage
     * the output from AI models, ensuring easy retrieval and processing of the generated
     * information.
     * <p></p>
     * The response returned by an AI model.
     *
     * @param <T> the type of the result(s) provided by the AI model
     * @author Mark Pollack
     * @since 0.8.0
     */
    public interface ModelResponse<T extends ModelResult<?>> {

        /**
         * Retrieves the result of the AI model.
         * @return the result generated by the AI model
         */
        T getResult();

        /**
         * Retrieves the list of generated outputs by the AI model.
         * @return the list of generated outputs
         */
        List<T> getResults();

        /**
         * Retrieves the response metadata associated with the AI model's response.
         * @return the response metadata
         */
        ResponseMetadata getMetadata();

    }

ModelResult

A single output result of an AI model.

    /**
     * This interface provides methods to access the main output of the AI model and the
     * metadata associated with this result. It is designed to offer a standardized and
     * comprehensive way to handle and interpret the outputs generated by AI models, catering
     * to diverse AI applications and use cases.
     * <p></p>
     * A single output result of an AI model.
     *
     * @param <T> the type of the output generated by the AI model
     * @author Mark Pollack
     * @since 0.8.0
     */
    public interface ModelResult<T> {

        /**
         * Retrieves the output generated by the AI model.
         * @return the output generated by the AI model
         */
        T getOutput();

        /**
         * Retrieves the metadata associated with the result of an AI model.
         * @return the metadata associated with the result
         */
        ResultMetadata getMetadata();

    }
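
Reading a value out of a response means unwrapping two levels: response to result, then result to output. The following sketch shows that chain with hypothetical JDK-only mirrors (`SimpleResult`, `SimpleResponse`, `TextResult`, `TextResponse`, `ResponseSketch`). Treating the first list element as the main result is an assumption made for this sketch, not something the interfaces above specify.

```java
import java.util.List;

// Hypothetical, simplified mirrors of ModelResult and ModelResponse (metadata omitted).
interface SimpleResult<T> {
    T getOutput();
}

interface SimpleResponse<T extends SimpleResult<?>> {
    List<T> getResults();

    // Assumption of this sketch: the "main" result is the first one, or null if empty.
    default T getResult() {
        List<T> results = getResults();
        return results.isEmpty() ? null : results.get(0);
    }
}

class TextResult implements SimpleResult<String> {
    private final String text;

    TextResult(String text) {
        this.text = text;
    }

    @Override
    public String getOutput() {
        return text;
    }
}

class TextResponse implements SimpleResponse<TextResult> {
    private final List<TextResult> results;

    TextResponse(List<TextResult> results) {
        this.results = results;
    }

    @Override
    public List<TextResult> getResults() {
        return results;
    }
}

class ResponseSketch {
    // Unwraps response -> result -> output, falling back to "" when there is no result.
    static String firstOutput(SimpleResponse<TextResult> response) {
        TextResult result = response.getResult();
        return (result != null) ? result.getOutput() : "";
    }

    public static void main(String[] args) {
        TextResponse response = new TextResponse(List.of(new TextResult("hi"), new TextResult("there")));
        System.out.println(firstOutput(response));
    }
}
```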

AI Model Capability Abstractions

ChatModel

The chat (conversational) model.

    public interface ChatModel extends Model<Prompt, ChatResponse>, StreamingChatModel {

        default String call(String message) {
            Prompt prompt = new Prompt(new UserMessage(message));
            Generation generation = call(prompt).getResult();
            return (generation != null) ? generation.getOutput().getText() : "";
        }

        default String call(Message... messages) {
            Prompt prompt = new Prompt(Arrays.asList(messages));
            Generation generation = call(prompt).getResult();
            return (generation != null) ? generation.getOutput().getText() : "";
        }

        @Override
        ChatResponse call(Prompt prompt);

        default ChatOptions getDefaultOptions() {
            return ChatOptions.builder().build();
        }

        default Flux<ChatResponse> stream(Prompt prompt) {
            throw new UnsupportedOperationException("streaming is not supported");
        }

    }
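
The pattern in `ChatModel` is one abstract method plus default convenience overloads that delegate to it and map a missing result to an empty string. A minimal JDK-only sketch of that pattern follows. `SimplePrompt`, `MiniChatModel`, and `ChatSketch` are hypothetical stand-ins, and a plain `String` takes the place of `ChatResponse`.

```java
import java.util.Locale;

// Hypothetical stand-in for Prompt.
class SimplePrompt {
    private final String text;

    SimplePrompt(String text) {
        this.text = text;
    }

    String getText() {
        return text;
    }
}

// Hypothetical stand-in for ChatModel; a String reply replaces ChatResponse.
interface MiniChatModel {
    // The only method a provider must implement.
    String call(SimplePrompt prompt);

    // Convenience overload: wraps the text in a prompt and maps a missing reply to "".
    default String call(String message) {
        String reply = call(new SimplePrompt(message));
        return (reply != null) ? reply : "";
    }
}

class ChatSketch {
    // A lambda is enough because MiniChatModel has a single abstract method.
    static String upper(String message) {
        MiniChatModel model = prompt -> prompt.getText().toUpperCase(Locale.ROOT);
        return model.call(message);
    }

    // A provider that returns nothing still yields "" through the convenience overload.
    static String silent(String message) {
        MiniChatModel model = prompt -> null;
        return model.call(message);
    }

    public static void main(String[] args) {
        System.out.println(upper("hello"));
        System.out.println(silent("hello").isEmpty());
    }
}
```

Keeping the null handling in the default method means callers of the `String` overload never need their own null check.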

StreamingChatModel

The streaming-response API for chat models.

    @FunctionalInterface
    public interface StreamingChatModel extends StreamingModel<Prompt, ChatResponse> {

        default Flux<String> stream(String message) {
            Prompt prompt = new Prompt(message);
            return stream(prompt).map(response -> (response.getResult() == null
                    || response.getResult().getOutput() == null
                    || response.getResult().getOutput().getText() == null) ? ""
                            : response.getResult().getOutput().getText());
        }

        default Flux<String> stream(Message... messages) {
            Prompt prompt = new Prompt(Arrays.asList(messages));
            return stream(prompt).map(response -> (response.getResult() == null
                    || response.getResult().getOutput() == null
                    || response.getResult().getOutput().getText() == null) ? ""
                            : response.getResult().getOutput().getText());
        }

        @Override
        Flux<ChatResponse> stream(Prompt prompt);

    }

EmbeddingModel

The embedding model.

    /**
     * EmbeddingModel is a generic interface for embedding models.
     * <p></p>
     * The embedding model.
     *
     * @author Mark Pollack
     * @author Christian Tzolov
     * @author Josh Long
     * @author Soby Chacko
     * @author Jihoon Kim
     * @since 1.0.0
     *
     */
    public interface EmbeddingModel extends Model<EmbeddingRequest, EmbeddingResponse> {

        @Override
        EmbeddingResponse call(EmbeddingRequest request);

        /**
         * Embeds the given text into a vector.
         * @param text the text to embed.
         * @return the embedded vector.
         */
        default float[] embed(String text) {
            Assert.notNull(text, "Text must not be null");
            List<float[]> response = this.embed(List.of(text));
            return response.iterator().next();
        }

        /**
         * Embeds the given document's content into a vector.
         * @param document the document to embed.
         * @return the embedded vector.
         */
        float[] embed(Document document);

        /**
         * Embeds a batch of texts into vectors.
         * @param texts list of texts to embed.
         * @return list of embedded vectors.
         */
        default List<float[]> embed(List<String> texts) {
            Assert.notNull(texts, "Texts must not be null");
            return this.call(new EmbeddingRequest(texts, EmbeddingOptionsBuilder.builder().build()))
                .getResults()
                .stream()
                .map(Embedding::getOutput)
                .toList();
        }

        /**
         * Embeds a batch of {@link Document}s into vectors based on a
         * {@link BatchingStrategy}.
         * @param documents list of {@link Document}s.
         * @param options {@link EmbeddingOptions}.
         * @param batchingStrategy {@link BatchingStrategy}.
         * @return a list of float[] that represents the vectors for the incoming
         * {@link Document}s. The returned list is expected to be in the same order of the
         * {@link Document} list.
         */
        default List<float[]> embed(List<Document> documents, EmbeddingOptions options,
                BatchingStrategy batchingStrategy) {
            Assert.notNull(documents, "Documents must not be null");
            List<float[]> embeddings = new ArrayList<>(documents.size());
            List<List<Document>> batch = batchingStrategy.batch(documents);
            for (List<Document> subBatch : batch) {
                List<String> texts = subBatch.stream().map(Document::getText).toList();
                EmbeddingRequest request = new EmbeddingRequest(texts, options);
                EmbeddingResponse response = this.call(request);
                for (int i = 0; i < subBatch.size(); i++) {
                    embeddings.add(response.getResults().get(i).getOutput());
                }
            }
            Assert.isTrue(embeddings.size() == documents.size(),
                    "Embeddings must have the same number as that of the documents");
            return embeddings;
        }

        /**
         * Embeds a batch of texts into vectors and returns the {@link EmbeddingResponse}.
         * @param texts list of texts to embed.
         * @return the embedding response.
         */
        default EmbeddingResponse embedForResponse(List<String> texts) {
            Assert.notNull(texts, "Texts must not be null");
            return this.call(new EmbeddingRequest(texts, EmbeddingOptionsBuilder.builder().build()));
        }

        /**
         * Get the number of dimensions of the embedded vectors. Note that by default, this
         * method will call the remote Embedding endpoint to get the dimensions of the
         * embedded vectors. If the dimensions are known ahead of time, it is recommended to
         * override this method.
         * @return the number of dimensions of the embedded vectors.
         */
        default int dimensions() {
            return embed("Test String").length;
        }

    }
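
The Javadoc for `dimensions()` recommends overriding it when the vector size is known, because the default probes the model with a test string. The sketch below illustrates that trade-off with a toy, JDK-only model. `MiniEmbeddingModel`, `ToyEmbeddingModel`, `FixedDimToyEmbeddingModel`, and `EmbeddingSketch` are hypothetical, and the (length, vowel count) "embedding" exists only to make model calls countable.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for EmbeddingModel; only the batch method is abstract.
interface MiniEmbeddingModel {
    List<float[]> embed(List<String> texts);

    default float[] embed(String text) {
        return embed(List.of(text)).get(0);
    }

    // Mirrors the default shown above: probes the model once to learn the vector size.
    default int dimensions() {
        return embed("Test String").length;
    }
}

// Toy model: a 2-dimensional "embedding" of (length, vowel count) that counts its calls.
class ToyEmbeddingModel implements MiniEmbeddingModel {
    int calls = 0;

    @Override
    public List<float[]> embed(List<String> texts) {
        calls++;
        List<float[]> vectors = new ArrayList<>();
        for (String text : texts) {
            long vowels = text.chars().filter(c -> "aeiou".indexOf(c) >= 0).count();
            vectors.add(new float[] { text.length(), vowels });
        }
        return vectors;
    }
}

// Same model with the dimension hard-coded, so dimensions() costs no model call.
class FixedDimToyEmbeddingModel extends ToyEmbeddingModel {
    @Override
    public int dimensions() {
        return 2;
    }
}

class EmbeddingSketch {
    // Returns how many model calls a single dimensions() lookup triggered.
    static int callsForDimensions(ToyEmbeddingModel model) {
        model.dimensions();
        return model.calls;
    }

    public static void main(String[] args) {
        System.out.println(callsForDimensions(new ToyEmbeddingModel()));
        System.out.println(callsForDimensions(new FixedDimToyEmbeddingModel()));
    }
}
```

With a remote provider, each probe would be a network round trip, which is why a hard-coded override can be worthwhile when the dimension is fixed.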