Java Crawler in Practice: Scraping Toutiao, NetEase, Sohu, and Ifeng News with Jsoup + HtmlUnit

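0x0 Background

I have been learning web scraping recently. After looking at several mainstream crawler frameworks, I decided to practice with two of the most basic libraries:

Jsoup & HtmlUnit

Jsoup fetches static pages and parses their markup. Most importantly, it lets you pick out the elements you want with a jQuery-like selector syntax, for example: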

    // 1. Fetch the HTML of the page at url
    Document html = Jsoup.connect(url).get();
    // 2. Use jsoup to select the news <a> tags
    Elements newsATags = html.select("div#headLineDefault")
            .select("ul.FNewMTopLis")
            .select("li")
            .select("a");

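However, some pages (Toutiao, for example) are not static: after the home page loads, the news items are fetched via AJAX and rendered into the page by JavaScript. For pages like this we use HtmlUnit to simulate a browser visiting the URL, let the scripts run, and then read back the resulting HTML as a string. The code looks like this: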

    WebClient webClient = new WebClient(BrowserVersion.CHROME);
    webClient.getOptions().setJavaScriptEnabled(true);   // run the page's scripts
    webClient.getOptions().setCssEnabled(false);         // CSS is not needed for scraping
    webClient.getOptions().setActiveXNative(false);
    webClient.getOptions().setThrowExceptionOnScriptError(false);
    webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);
    webClient.getOptions().setTimeout(10000);            // connection timeout in ms
    try {
        HtmlPage htmlPage = webClient.getPage(url);
        // Give background JavaScript (the AJAX calls) up to 10 s to finish
        webClient.waitForBackgroundJavaScript(10000);
        String htmlString = htmlPage.asXml();
        return Jsoup.parse(htmlString);
    } finally {
        webClient.close();
    }

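0x1 Sohu, Ifeng, and NetEase crawlers

All three sites serve static pages, so the code is nearly identical: inspect the page markup, find the relevant elements, and extract the content you want.

The crawler works in four basic steps:

(1) Fetch the home page

(2) Use jsoup to select the news <a> tags

(3) Extract the basic information from each <a> tag and wrap it in a News object

(4) Visit each news URL and extract the article content, image, and so on

1. The crawler interface

A single interface with one abstract method, pullNews, which pulls the news, and one default method, which fetches a page (such as the news home page):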

    // HtmlUnit 2.x package names
    import com.gargoylesoftware.htmlunit.BrowserVersion;
    import com.gargoylesoftware.htmlunit.WebClient;
    import com.gargoylesoftware.htmlunit.html.HtmlPage;
    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;

    public interface NewsPuller {

        void pullNews();

        /**
         * @param url         URL of the page to fetch, e.g. the news home page
         * @param useHtmlUnit whether to render the page with HtmlUnit
         */
        default Document getHtmlFromUrl(String url, boolean useHtmlUnit) throws Exception {
            if (!useHtmlUnit) {
                return Jsoup.connect(url)
                        // Send a browser-like User-Agent (this string identifies as IE 9)
                        .userAgent("Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)")
                        .get();
            }
            WebClient webClient = new WebClient(BrowserVersion.CHROME);
            webClient.getOptions().setJavaScriptEnabled(true);
            webClient.getOptions().setCssEnabled(false);
            webClient.getOptions().setActiveXNative(false);
            webClient.getOptions().setThrowExceptionOnScriptError(false);
            webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);
            webClient.getOptions().setTimeout(10000);
            try {
                HtmlPage htmlPage = webClient.getPage(url);
                // Wait up to 10 s for AJAX-driven rendering to finish
                webClient.waitForBackgroundJavaScript(10000);
                return Jsoup.parse(htmlPage.asXml());
            } finally {
                webClient.close();
            }
        }
    }
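One practical consequence of putting the fetch logic in a default method is that an implementation can override it, for example to feed canned input in a unit test instead of hitting the network. The sketch below illustrates only that pattern and has no dependencies: `PagePuller`, `fetch`, and `StubPuller` are hypothetical names, and a plain `String` stands in for Jsoup's `Document`.

```java
import java.util.ArrayList;
import java.util.List;

final class DefaultMethodDemo {

    // Hypothetical stand-in for NewsPuller: one abstract method plus a default helper.
    interface PagePuller {
        List<String> pullTitles();

        // Shared default; a real implementation would perform network I/O here.
        default String fetch(String url) {
            throw new UnsupportedOperationException("no network in this sketch: " + url);
        }
    }

    // A stub that overrides the default method so no network access is needed.
    static final class StubPuller implements PagePuller {
        @Override
        public String fetch(String url) {
            return "title-a\ntitle-b\ntitle-a";
        }

        @Override
        public List<String> pullTitles() {
            List<String> titles = new ArrayList<>();
            for (String line : fetch("http://example.invalid/").split("\n")) {
                if (!titles.contains(line)) {
                    titles.add(line); // keep the first occurrence only
                }
            }
            return titles;
        }
    }

    public static void main(String[] args) {
        System.out.println(new StubPuller().pullTitles());
    }
}
```

The same idea would apply to the real interface: a test subclass could override `getHtmlFromUrl` and return `Jsoup.parse(...)` of a saved page.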

2. Sohu crawler

    import java.util.Date;
    import java.util.HashSet;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Component;
    // News, NewsService, and NewsUtils are project classes (see the repository)

    @Component("sohuNewsPuller")
    public class SohuNewsPuller implements NewsPuller {

        private static final Logger logger = LoggerFactory.getLogger(SohuNewsPuller.class);

        @Value("${news.sohu.url}")
        private String url;

        @Autowired
        private NewsService newsService;

        @Override
        public void pullNews() {
            logger.info("Start pulling Sohu news");
            // 1. Fetch the home page
            Document html;
            try {
                html = getHtmlFromUrl(url, false);
            } catch (Exception e) {
                logger.error("Failed to fetch the Sohu home page: {}", url, e);
                return;
            }
            // 2. Select the news <a> tags with jsoup
            Elements newsATags = html.select("div.focus-news")
                    .select("div.list16")
                    .select("li")
                    .select("a");
            // 3. Extract basic information from each <a> tag into a News object
            HashSet<News> newsSet = new HashSet<>();
            for (Element a : newsATags) {
                String href = a.attr("href");
                String title = a.attr("title");
                News n = new News();
                n.setSource("搜狐"); // "Sohu"
                n.setUrl(href);
                n.setTitle(title);
                n.setCreateDate(new Date());
                newsSet.add(n);
            }
            // 4. Visit each news URL and extract the article content
            newsSet.forEach(news -> {
                logger.info("Extracting Sohu article: {}", news.getUrl());
                try {
                    Document newsHtml = getHtmlFromUrl(news.getUrl(), false);
                    Element newsContent = newsHtml.select("div#article-container")
                            .select("div.main")
                            .select("div.text")
                            .first();
                    String title = newsContent.select("div.text-title").select("h1").text();
                    String content = newsContent.select("article.article").first().toString();
                    String image = NewsUtils.getImageFromContent(content);
                    news.setTitle(title);
                    news.setContent(content);
                    news.setImage(image);
                    newsService.saveNews(news);
                    logger.info("Extracted Sohu article \"{}\"", news.getTitle());
                } catch (Exception e) {
                    // Also reached when the page layout differs and a selector finds nothing
                    logger.error("Failed to extract article: {}", news.getUrl(), e);
                }
            });
        }
    }
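Collecting `News` objects in a `HashSet` removes duplicate links only if `News` defines `equals` and `hashCode`. The `News` class is not shown in this post, so the following is a sketch under the assumption that two items count as the same story when their URLs match. `NewsItem` is a hypothetical stand-in for the real class.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

final class NewsDedupDemo {

    // Hypothetical stand-in for the post's News class; identity is the URL only.
    static final class NewsItem {
        final String url;
        final String title;

        NewsItem(String url, String title) {
            this.url = url;
            this.title = title;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof NewsItem)) return false;
            return Objects.equals(url, ((NewsItem) o).url);
        }

        @Override
        public int hashCode() {
            return Objects.hashCode(url);
        }
    }

    // Adds every item to a HashSet and returns how many distinct URLs remain.
    static int countDistinct(NewsItem... items) {
        Set<NewsItem> set = new HashSet<>();
        for (NewsItem item : items) {
            set.add(item);
        }
        return set.size();
    }

    public static void main(String[] args) {
        int distinct = countDistinct(
                new NewsItem("http://example.invalid/a", "headline link"),
                new NewsItem("http://example.invalid/a", "same story, image link"),
                new NewsItem("http://example.invalid/b", "another story"));
        System.out.println(distinct);
    }
}
```

Without such an override, `HashSet` falls back to object identity and every `<a>` tag would produce its own entry, even when several tags point at the same article.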

3. Ifeng News crawler

    import java.util.Date;
    import java.util.HashSet;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Component;
    // News, NewsService, and NewsUtils are project classes (see the repository)

    @Component("ifengNewsPuller")
    public class IfengNewsPuller implements NewsPuller {

        private static final Logger logger = LoggerFactory.getLogger(IfengNewsPuller.class);

        @Value("${news.ifeng.url}")
        private String url;

        @Autowired
        private NewsService newsService;

        @Override
        public void pullNews() {
            logger.info("Start pulling Ifeng news");
            // 1. Fetch the home page
            Document html;
            try {
                html = getHtmlFromUrl(url, false);
            } catch (Exception e) {
                logger.error("Failed to fetch the Ifeng home page: {}", url, e);
                return;
            }
            // 2. Select the news <a> tags with jsoup
            Elements newsATags = html.select("div#headLineDefault")
                    .select("ul.FNewMTopLis")
                    .select("li")
                    .select("a");
            // 3. Extract basic information from each <a> tag into a News object
            HashSet<News> newsSet = new HashSet<>();
            for (Element a : newsATags) {
                String href = a.attr("href");
                String title = a.text();
                News n = new News();
                n.setSource("凤凰"); // "Ifeng"
                n.setUrl(href);
                n.setTitle(title);
                n.setCreateDate(new Date());
                newsSet.add(n);
            }
            // 4. Visit each news URL (in parallel) and extract the article content
            newsSet.parallelStream().forEach(news -> {
                logger.info("Extracting Ifeng article \"{}\": {}", news.getTitle(), news.getUrl());
                try {
                    Document newsHtml = getHtmlFromUrl(news.getUrl(), false);
                    // Article pages use one of two containers
                    Elements contentElement = newsHtml.select("div#main_content");
                    if (contentElement.isEmpty()) {
                        contentElement = newsHtml.select("div#yc_con_txt");
                    }
                    if (contentElement.isEmpty()) {
                        return; // unknown layout, skip this article
                    }
                    String content = contentElement.toString();
                    String image = NewsUtils.getImageFromContent(content);
                    news.setContent(content);
                    news.setImage(image);
                    newsService.saveNews(news);
                    logger.info("Extracted Ifeng article \"{}\"", news.getTitle());
                } catch (Exception e) {
                    logger.error("Failed to extract Ifeng article: {}", news.getUrl(), e);
                }
            });
            logger.info("Finished pulling Ifeng news");
        }
    }
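Every crawler calls `NewsUtils.getImageFromContent(content)`, but that helper is not shown in the post. Judging by its name and usage it returns an image URL taken from the article HTML. The following is only a guess at a minimal version, written with a regular expression so that it has no dependencies; `firstImage` is a hypothetical name, and the real helper may behave differently (for instance by using jsoup to select the first `img` element).

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class FirstImageDemo {

    // Matches the quoted src attribute of an <img> tag (single or double quotes).
    private static final Pattern IMG_SRC = Pattern.compile(
            "<img\\b[^>]*?\\ssrc\\s*=\\s*([\"'])(.*?)\\1",
            Pattern.CASE_INSENSITIVE);

    // Returns the src of the first <img> in the fragment, or null if there is none.
    static String firstImage(String html) {
        if (html == null) {
            return null;
        }
        Matcher m = IMG_SRC.matcher(html);
        return m.find() ? m.group(2) : null;
    }

    public static void main(String[] args) {
        String html = "<p>text</p><img class=\"x\" src=\"http://example.invalid/1.jpg\">"
                + "<img src='http://example.invalid/2.jpg'>";
        System.out.println(firstImage(html));
        System.out.println(firstImage("<p>no image here</p>"));
    }
}
```

A regex is adequate for a quick sketch but brittle against unusual markup; since the project already depends on jsoup, parsing the fragment and selecting `img` would likely be the more robust choice.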

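4. NetEase crawler

    import java.util.Date;
    import java.util.HashSet;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Component;
    // News, NewsService, and NewsUtils are project classes (see the repository)

    @Component("netEasyNewsPuller")
    public class NetEasyNewsPuller implements NewsPuller {

        private static final Logger logger = LoggerFactory.getLogger(NetEasyNewsPuller.class);

        @Value("${news.neteasy.url}")
        private String url;

        @Autowired
        private NewsService newsService;

        @Override
        public void pullNews() {
            logger.info("Start pulling NetEase hot news");
            // 1. Fetch the home page
            Document html;
            try {
                html = getHtmlFromUrl(url, false);
            } catch (Exception e) {
                logger.error("Failed to fetch the NetEase news home page: {}", url, e);
                return;
            }
            // 2. Select the target tags with jsoup
            Element tab = html.select("div#whole")
                    .next("div.area-half.left")
                    .select("div.tabContents")
                    .first();
            if (tab == null) {
                // first() returns null when nothing matched; avoid a NullPointerException
                logger.error("NetEase news list not found: {}", url);
                return;
            }
            Elements newsA = tab.select("tbody > tr")
                    .select("a[href~=^http://news.163.com.*]");
            // 3. Extract information from each tag into a News object
            HashSet<News> newsSet = new HashSet<>();
            newsA.forEach(a -> {
                String href = a.attr("href");
                News n = new News();
                n.setSource("网易"); // "NetEase"
                n.setUrl(href);
                n.setCreateDate(new Date());
                newsSet.add(n);
            });
            // 4. Visit each URL and extract the article content
            newsSet.forEach(news -> {
                logger.info("Extracting article: {}", news.getUrl());
                try {
                    Document newsHtml = getHtmlFromUrl(news.getUrl(), false);
                    Elements newsContent = newsHtml.select("div#endText");
                    Elements titleP = newsContent.select("p.otitle");
                    String title = titleP.text();
                    // Drop the 5-character prefix and 1-character suffix around the title;
                    // a shorter string throws and is handled by the catch below
                    title = title.substring(5, title.length() - 1);
                    String image = NewsUtils.getImageFromContent(newsContent.toString());
                    news.setTitle(title);
                    news.setContent(newsContent.toString());
                    news.setImage(image);
                    newsService.saveNews(news);
                    logger.info("Extracted NetEase article \"{}\"", news.getTitle());
                } catch (Exception e) {
                    logger.error("Failed to extract article: {}", news.getUrl(), e);
                }
            });
            logger.info("Finished pulling NetEase news");
        }
    }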
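The call `title.substring(5, title.length() - 1)` assumes the text of `p.otitle` always has a five-character prefix and a one-character suffix around the real title; presumably the element reads something like `(原标题:…)`, though the post does not say so. When the element is missing or shorter, `substring` throws and the article is skipped. A more defensive variant, sketched here with a hypothetical helper `stripWrapper` and ASCII sample data, strips the wrapper only when it is actually present:

```java
final class OriginalTitleDemo {

    // Removes prefix and suffix when both are present; otherwise returns the trimmed input.
    static String stripWrapper(String raw, String prefix, String suffix) {
        if (raw == null) {
            return "";
        }
        String t = raw.trim();
        boolean wrapped = t.length() >= prefix.length() + suffix.length()
                && t.startsWith(prefix)
                && t.endsWith(suffix);
        if (!wrapped) {
            return t;
        }
        return t.substring(prefix.length(), t.length() - suffix.length()).trim();
    }

    public static void main(String[] args) {
        // ASCII placeholders; for NetEase the prefix and suffix would be the page's own markers
        System.out.println(stripWrapper("(orig: Sample headline)", "(orig: ", ")"));
        System.out.println(stripWrapper("", "(orig: ", ")"));
        System.out.println(stripWrapper("Plain headline", "(orig: ", ")"));
    }
}
```

With this approach an article whose page lacks the wrapped title would still be saved, with an empty or unmodified title, instead of being dropped by the exception handler.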

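0x2 Toutiao crawler

Because the news on the Toutiao page is fetched via AJAX and loaded afterwards, it has to be scraped with HtmlUnit.

The main code is as follows: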

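    import java.util.Date;
    import java.util.HashMap;
    import java.util.Map;
    import java.util.regex.Matcher;
    import java.util.regex.Pattern;
    import org.apache.commons.lang3.StringUtils; // assumption: Apache Commons Lang 3
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Component;
    // News and NewsService are project classes (see the repository)

    @Component("toutiaoNewsPuller")
    public class ToutiaoNewsPuller implements NewsPuller {

        private static final Logger logger = LoggerFactory.getLogger(ToutiaoNewsPuller.class);
        private static final String TOUTIAO_URL = "https://www.toutiao.com";
        // The article body is embedded in an inline script as articleInfo.content
        private static final Pattern ARTICLE_CONTENT = Pattern.compile(
                "articleInfo: \\{\\s*[\\n\\r]*\\s*title: '.*',\\s*[\\n\\r]*\\s*content: '(.*)',");

        @Autowired
        private NewsService newsService;

        @Value("${news.toutiao.url}")
        private String url;

        @Override
        public void pullNews() {
            logger.info("Start pulling Toutiao hot news");
            // 1. Load the HTML from the URL (rendered with HtmlUnit)
            Document html;
            try {
                html = getHtmlFromUrl(url, true);
            } catch (Exception e) {
                logger.error("Failed to fetch the Toutiao home page", e);
                return;
            }
            // 2. Parse the HTML into news information and load it into POJOs
            Map<String, News> newsMap = new HashMap<>();
            for (Element a : html.select("a[href~=/group/.*]:not(.comment)")) {
                logger.info("<a> tag:\n{}", a);
                String href = TOUTIAO_URL + a.attr("href");
                String title = StringUtils.isNotBlank(a.select("p").text())
                        ? a.select("p").text() : a.text();
                String image = a.select("img").attr("src");
                News news = newsMap.get(href);
                if (news == null) {
                    News n = new News();
                    n.setSource("今日头条"); // "Toutiao"
                    n.setUrl(href);
                    n.setCreateDate(new Date());
                    n.setImage(image);
                    n.setTitle(title);
                    newsMap.put(href, n);
                } else if (a.hasClass("img-wrap")) {
                    // Same article linked again: the image link carries the picture
                    news.setImage(image);
                } else if (a.hasClass("title")) {
                    // ... and the title link carries the headline
                    news.setTitle(title);
                }
            }
            logger.info("Finished pulling Toutiao headlines");
            logger.info("Start pulling article content...");
            newsMap.values().parallelStream().forEach(news -> {
                logger.info("===================={}====================", news.getTitle());
                Document contentHtml;
                try {
                    contentHtml = getHtmlFromUrl(news.getUrl(), true);
                } catch (Exception e) {
                    logger.error("Failed to fetch the content of \"{}\"", news.getTitle(), e);
                    return;
                }
                Elements scripts = contentHtml.getElementsByTag("script");
                scripts.forEach(script -> {
                    Matcher matcher = ARTICLE_CONTENT.matcher(script.toString());
                    if (matcher.find()) {
                        // The content is HTML-escaped inside the script; unescape it.
                        // The entity strings were lost in the published listing and are
                        // reconstructed here; "&#x3D;" in particular is an assumption.
                        String content = matcher.group(1)
                                .replace("&lt;", "<")
                                .replace("&gt;", ">")
                                .replace("&quot;", "\"")
                                .replace("&#x3D;", "=");
                        logger.info("content: {}", content);
                        news.setContent(content);
                    }
                });
            });
            // Save only the articles whose content was actually extracted
            newsMap.values().stream()
                    .filter(news -> StringUtils.isNotBlank(news.getContent())
                            && !news.getContent().equals("null"))
                    .forEach(newsService::saveNews);
            logger.info("Finished pulling Toutiao article content");
        }
    }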
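The content extraction above is easier to follow in isolation. The sketch below runs the same regular expression against a made-up inline script and then unescapes the captured text. The sample script, the `extractContent` name, and the `&#x3D;` entity are assumptions for illustration; the actual page markup may differ and may have changed since this was written.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ArticleInfoDemo {

    // Same expression as in the crawler: capture the single-quoted value of content.
    private static final Pattern ARTICLE_CONTENT = Pattern.compile(
            "articleInfo: \\{\\s*[\\n\\r]*\\s*title: '.*',\\s*[\\n\\r]*\\s*content: '(.*)',");

    // Returns the unescaped article HTML, or empty if the script has no articleInfo block.
    static Optional<String> extractContent(String scriptText) {
        Matcher m = ARTICLE_CONTENT.matcher(scriptText);
        if (!m.find()) {
            return Optional.empty();
        }
        String html = m.group(1)
                .replace("&lt;", "<")
                .replace("&gt;", ">")
                .replace("&quot;", "\"")
                .replace("&#x3D;", "=");
        return Optional.of(html);
    }

    public static void main(String[] args) {
        // Made-up sample in the shape the regex expects
        String script = "articleInfo: {\n"
                + "  title: 'Sample',\n"
                + "  content: '&lt;p class&#x3D;&quot;a&quot;&gt;Hi&lt;/p&gt;',\n"
                + "  groupId: '1'\n"
                + "}";
        System.out.println(extractContent(script).orElse("(no match)"));
        System.out.println(extractContent("var x = 1;").orElse("(no match)"));
    }
}
```

Because `.` does not match line breaks by default, each `.*` stays on one line, so the expression depends on `title` and `content` appearing on consecutive lines in exactly this order. Any change to the page's script layout would make it stop matching, in which case the crawler silently saves nothing for that article.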

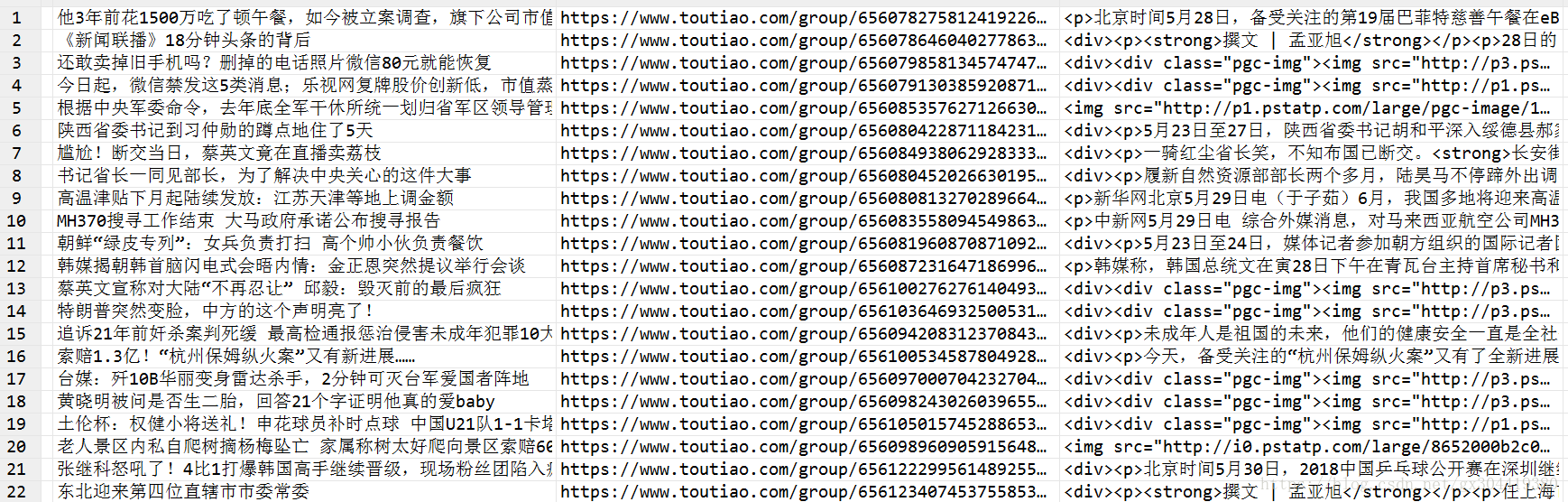

拉取结果:

不好意思各位,好久没上csdn,现在吧github地址发一下:https://github.com/gx304419380/NewsCrawler