Why I stopped using GAN (ECCV 2020)

GAN vs Normalizing Flow

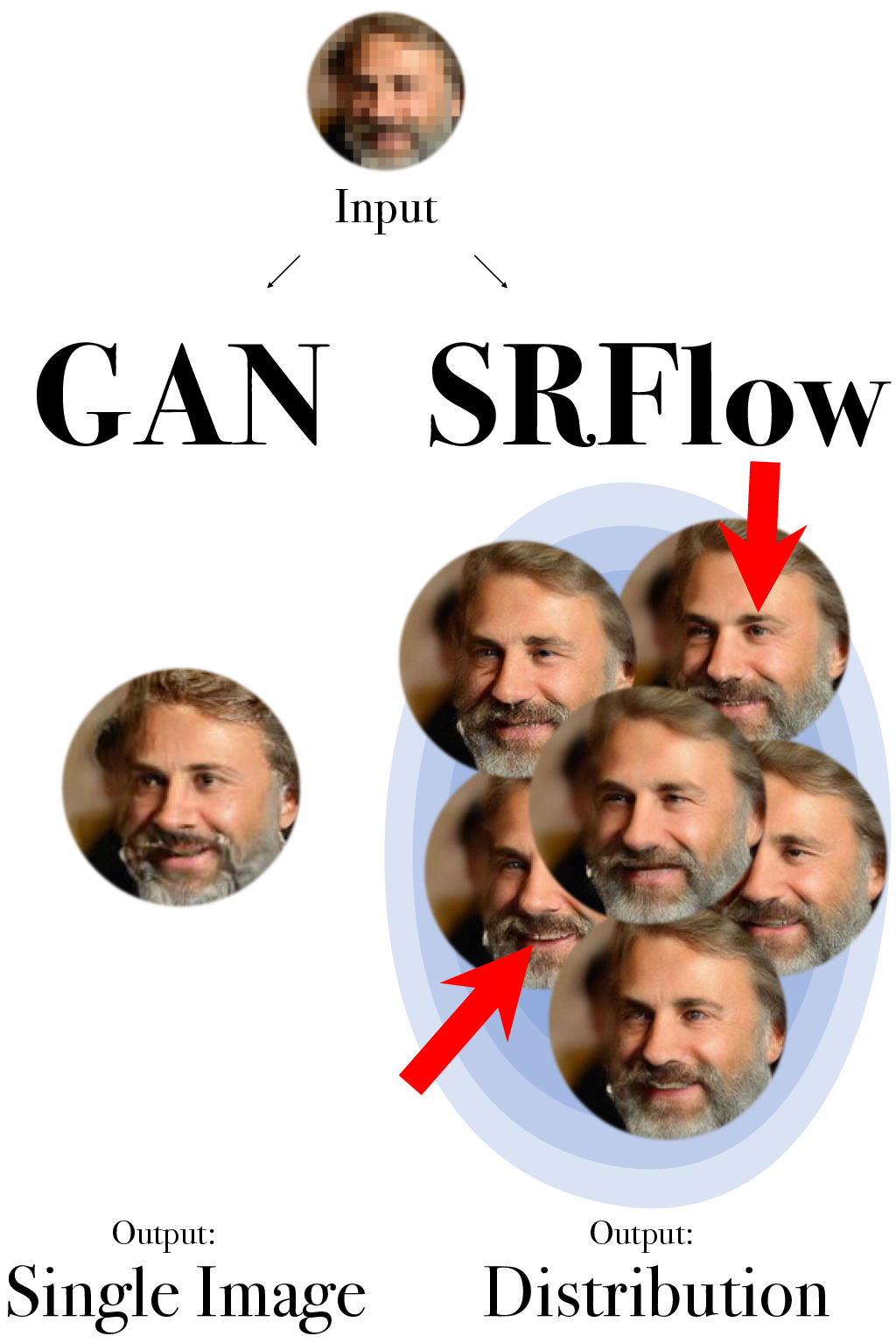

The benefits of Normalizing Flow. In this article, we show how we outperformed GANs with Normalizing Flow, using super-resolution as the application. We describe SRFlow, a super-resolution method that outperforms state-of-the-art GAN approaches. We explain it in detail in our ECCV 2020 paper.

Intuition for Conditional Normalizing Flow. We train a Normalizing Flow model to transform an image into a Gaussian latent space. During inference, we sample a random Gaussian vector to generate an image. This works because the mapping is bijective: running it in reverse turns any Gaussian vector into an image. SRFlow extends this method to use an image as conditional input.
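
To make the bijective mapping concrete, here is a minimal numpy sketch of a one-layer conditional flow. It makes two simplifying assumptions. First, the flow is a single elementwise affine transform whose scale and shift come from the conditioning input through a hypothetical `cond_params` function. Second, the condition has the same size as the image. SRFlow itself stacks many deeper conditional layers, so this illustrates the principle only and is not its architecture.

```python
import numpy as np

# Hypothetical conditioning function (illustration only): maps the conditioning
# input c to a per-element log-scale and shift.
def cond_params(c):
    log_s = 0.5 * np.tanh(c)   # bounded log-scale keeps the map well conditioned
    t = c                      # shift
    return log_s, t

def forward(x, c):
    """Image x -> latent z (the direction used during training)."""
    log_s, t = cond_params(c)
    return (x - t) * np.exp(-log_s)

def inverse(z, c):
    """Latent z -> image x (the direction used during inference)."""
    log_s, t = cond_params(c)
    return z * np.exp(log_s) + t

rng = np.random.default_rng(0)
c = rng.normal(size=16)   # stand-in for the conditioning (low-resolution) input
x = rng.normal(size=16)   # stand-in for a high-resolution image

# Bijectivity: mapping to the latent space and back recovers x up to float error.
x_rec = inverse(forward(x, c), c)
print(np.allclose(x, x_rec))  # True

# Inference: sample a Gaussian vector and map it to an image.
z = rng.normal(size=16)
sample = inverse(z, c)
```

Because every layer of a flow is invertible by construction, the same network serves both directions. No separate generator is needed.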

ECCV 2020 Spotlight [Paper]

Code will follow [GitHub]

Advantages of Conditional Normalizing Flow over GAN approaches

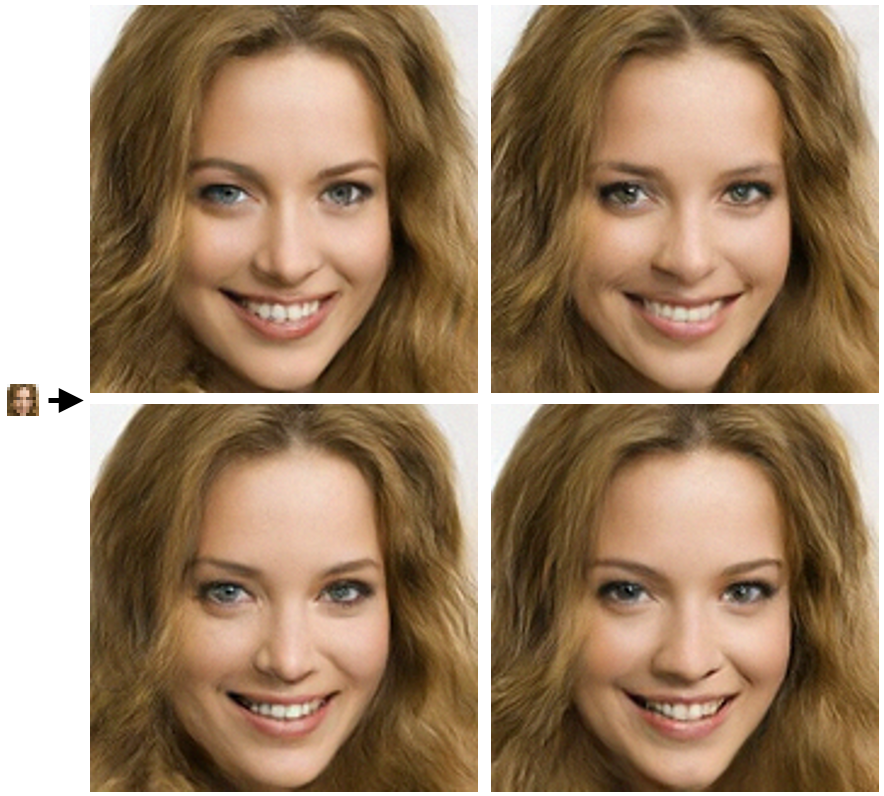

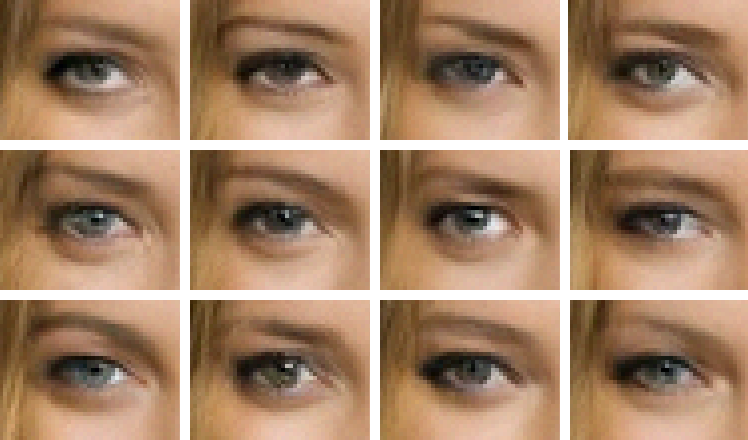

Sampling: SRFlow outputs many different images for a single input.

Stable Training: SRFlow has far fewer hyperparameters than GAN approaches, and we did not encounter any training stability issues.

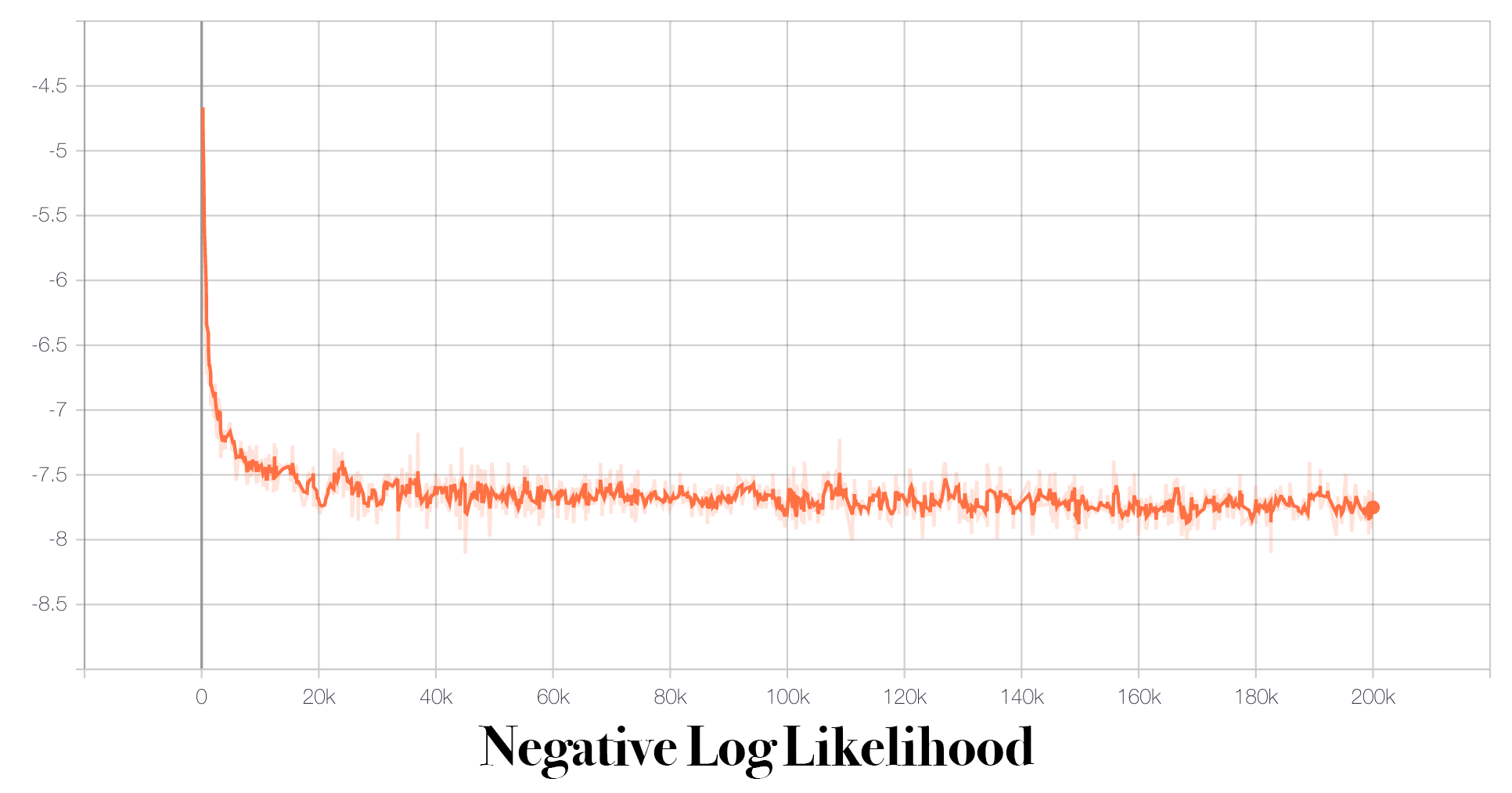

Convergence: While GANs cannot converge, conditional Normalizing Flows converge monotonically and stably.

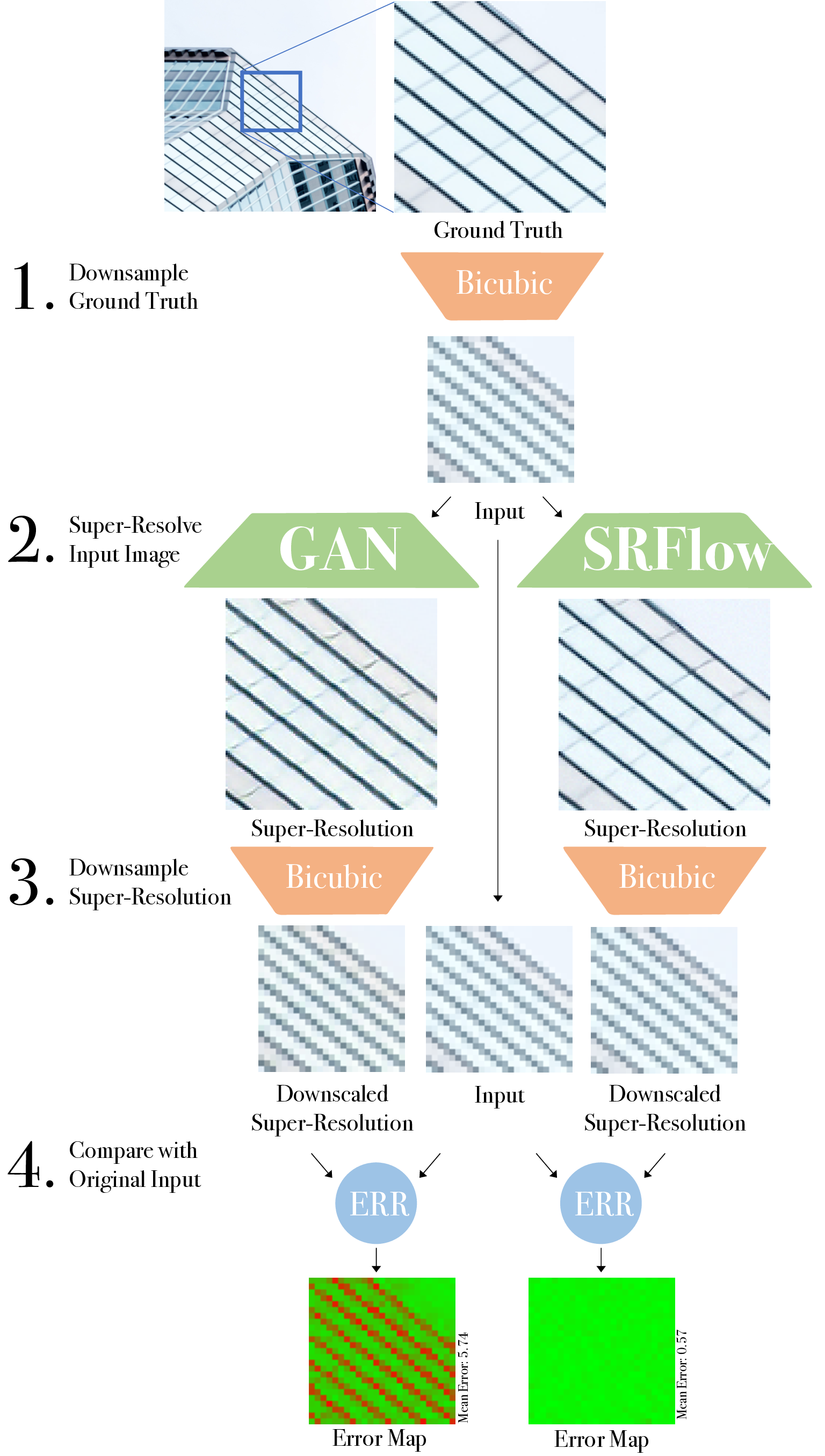

Higher Consistency: When the super-resolved image is downsampled, the result almost exactly matches the input.

A stochastic alternative to Conditional GANs

Designing conditional GANs that produce highly stochastic output, and thereby capture the full entropy of the conditional distributions they model, is an important question left open by the present work.

— Isola, Zhu, Zhou, Efros [CVPR 2017, pix2pix]

Since [the GAN generator] G is conditioned on the input frames X, there is variability in the input of the generator even in the absence of noise, so noise is not a necessity anymore. We trained the network with and without adding noise and did not observe any difference.

— Mathieu, Couprie, LeCun [ICLR 2016]

Conditional GANs ignore the random input. GANs were originally designed to generate diverse outputs: changing the input noise vector changes the output to another realistic image. However, for image-to-image tasks, the groups of Efros and LeCun found that the generator largely ignores the random vector, as quoted above. As a result, most GAN-based image-to-image mappings are deterministic.

Strategies for stochastic Conditional GANs. To make a conditional GAN for super-resolution stochastic, Bahat and Michaeli added a control signal to ESRGAN and discarded the reconstruction loss. Similarly, Menon et al. consider stochastic SR in the GAN setting to address the ill-posed nature of the problem.

How to generate a stochastic output using Normalizing Flow. During training, the Normalizing Flow learns to transform high-resolution images into a Gaussian distribution. The discriminator loss of GANs often causes mode collapse, but we did not observe this for image-conditional Normalizing Flow. SRFlow is therefore trained with a single loss and intrinsically produces stochastic output: each sampled Gaussian vector yields a different super-resolved image.
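
A minimal sketch of drawing several outputs for one input is shown below. It assumes a toy elementwise affine flow, which is hypothetical and not SRFlow's network. It also assumes a sampling temperature `tau` that scales the standard deviation of the Gaussian latent. Temperature is a common knob in flow sampling, but its use and value here are assumptions. Setting `tau` to 0 collapses all samples to a single deterministic output.

```python
import numpy as np

def inverse(z, c):
    # Hypothetical toy conditional affine flow: latent z -> image x.
    log_s = 0.5 * np.tanh(c)
    return z * np.exp(log_s) + c

def sample_outputs(c, n, tau=0.8, seed=0):
    """Draw n different outputs for a single conditioning input c.

    tau scales the latent standard deviation; tau=0 always returns the image
    decoded from the latent mean (z = 0).
    """
    rng = np.random.default_rng(seed)
    z = tau * rng.normal(size=(n,) + c.shape)
    return inverse(z, c)  # broadcasting applies the flow to each of the n latents

c = np.linspace(-1.0, 1.0, 8)   # stand-in for one low-resolution input
outs = sample_outputs(c, n=4)
print(outs.shape)  # (4, 8)
```

Diversity here comes directly from the latent. A conditional GAN generator, by contrast, can learn to ignore its noise input.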

Replace conditional GANs with a more stable method

Conditional GANs need careful hyperparameter tuning. In many works that use conditional GANs, the loss is a weighted sum of multiple losses. Zhu et al. developed CycleGAN and carefully tuned the weights of eight different losses. In addition, they had to balance the strength of the generator and the discriminator to keep training stable.

Why Normalizing Flow is intrinsically stable. A Normalizing Flow has only a single network and a single loss. It therefore has far fewer hyperparameters and is easier to train. This is especially practical for researchers who develop new models, because it makes comparing different architectures much easier.

No need for multiple losses in Normalizing Flows

A minimax loss is very unstable to train. In GAN training, the generator tries to produce fake images that the discriminator cannot tell apart from real ones. The discriminator, in turn, tries to determine whether an image comes from the generator or from the training set. These two conflicting objectives cause the learned parameters to drift continuously.
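
This drift can be reproduced on the smallest possible minimax problem. The sketch below uses a standard toy example, not a GAN: min over x, max over y of f(x, y) = x * y. The problem is optimized with simultaneous gradient descent on x and ascent on y. Its only equilibrium is (0, 0), yet each update multiplies the squared distance to that point by (1 + lr**2), so the parameters spiral outward instead of settling.

```python
import numpy as np

def simultaneous_gda(x, y, lr=0.1, steps=100):
    """Gradient descent on x and gradient ascent on y for f(x, y) = x * y.

    Returns the distance to the equilibrium (0, 0) after every step.
    """
    radii = [np.hypot(x, y)]
    for _ in range(steps):
        gx, gy = y, x                      # df/dx = y, df/dy = x
        x, y = x - lr * gx, y + lr * gy    # both players update at once
        radii.append(np.hypot(x, y))
    return np.array(radii)

radii = simultaneous_gda(1.0, 1.0)
print(radii[0], radii[-1])  # the distance grows at every step
```

A single-objective maximum-likelihood loss has no second player pulling in the opposite direction, so this kind of rotation cannot arise.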

Normalizing Flow is trained with a single loss. Normalizing Flow and its image-conditional version are simply trained using maximum likelihood. This is possible because the input images are transformed into a Gaussian latent space. There, we compute the likelihood of the obtained Gaussian vector and correct it by the log-determinant of the transformation's Jacobian (the change-of-variables formula). Using an off-the-shelf Adam optimizer, this loss converges stably and steadily.
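
Written out, the loss is the negative of log p(x | c) = log N(f(x; c); 0, I) + log |det ∂f(x; c)/∂x|, where f maps the image x to the latent given the condition c. The sketch below computes this negative log-likelihood for a hypothetical one-layer elementwise affine flow, which is not SRFlow's architecture. In practice, this scalar is what would be minimized with an optimizer such as Adam. The final lines check it against the closed-form Gaussian density that this toy flow implies.

```python
import numpy as np

LOG_2PI = np.log(2.0 * np.pi)

def cond_params(c):
    # Hypothetical conditioning: per-element log-scale and shift from input c.
    return 0.5 * np.tanh(c), c

def nll(x, c):
    """Negative log-likelihood of x given c under the toy affine flow.

    z = (x - t) * exp(-log_s), so log|det dz/dx| = -sum(log_s).
    """
    log_s, t = cond_params(c)
    z = (x - t) * np.exp(-log_s)
    log_pz = -0.5 * np.sum(z ** 2 + LOG_2PI)  # standard Gaussian log-density of z
    log_det = -np.sum(log_s)                  # change-of-variables correction
    return -(log_pz + log_det)

# Sanity check: this toy flow models x | c as N(t, exp(log_s)**2) elementwise,
# so its NLL must equal the closed-form Gaussian NLL.
rng = np.random.default_rng(1)
x, c = rng.normal(size=5), rng.normal(size=5)
log_s, t = cond_params(c)
sigma = np.exp(log_s)
closed_form = np.sum(0.5 * ((x - t) / sigma) ** 2 + 0.5 * LOG_2PI + np.log(sigma))
print(np.isclose(nll(x, c), closed_form))  # True
```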

Towards more evidence-based models

Without further intervention, conditional GANs are not consistent with their input. For super-resolution, an important question is whether the super-resolved image is consistent with the low-resolution input. If it is not, it is questionable whether the method actually performs super-resolution or merely hallucinates image content.

Why Normalizing Flow's output is consistent with the input. GANs have an unsupervised loss that encourages image hallucination, whereas conditional Normalizing Flow has no such incentive. Its only task is to model the distribution of high-resolution images conditioned on an input image. As shown in our SRFlow paper, this yields almost perfect consistency with the input image.
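
One way to quantify this consistency is to downsample the super-resolved output and compute the PSNR against the low-resolution input. The sketch below assumes a box (average-pooling) downsampling kernel and images in [0, 1]. Both are assumptions; an actual evaluation may use a different kernel, such as bicubic. The `lr_consistency` helper is hypothetical.

```python
import numpy as np

def box_downsample(img, factor):
    """Average-pool an (H, W) image by an integer factor (assumed kernel)."""
    h, w = img.shape
    assert h % factor == 0 and w % factor == 0
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def psnr(a, b, peak=1.0):
    mse = np.mean((a - b) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

def lr_consistency(sr, lr, factor):
    """PSNR between the downsampled super-resolution and the original input."""
    return psnr(box_downsample(sr, factor), lr)

rng = np.random.default_rng(0)
lr = rng.uniform(size=(8, 8))
sr = np.kron(lr, np.ones((4, 4)))          # nearest-neighbour upsampling by 4
# Added detail whose mean is zero inside every 4x4 block keeps the output consistent.
detail = rng.normal(scale=0.05, size=sr.shape)
detail -= np.kron(box_downsample(detail, 4), np.ones((4, 4)))
print(lr_consistency(sr + detail, lr, 4))  # very high: only float error remains
```

An output can thus contain plenty of new high-frequency detail and still be perfectly consistent, as long as that detail averages out to the input at low resolution.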

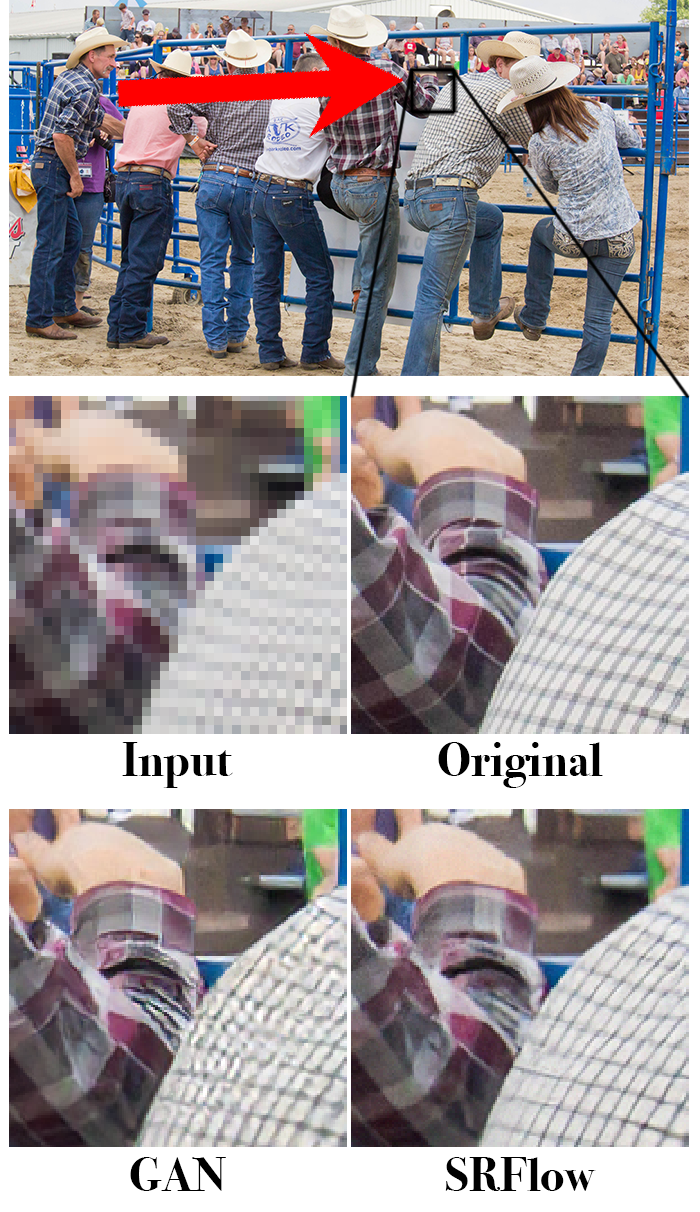

Further visual comparison

More visuals and details in our ECCV 2020 paper

Use SRFlow in your next project

Our SRFlow ECCV 2020 paper reveals:

How to train Conditional Normalizing Flow

We designed an architecture that achieves state-of-the-art super-resolution quality.

How to train Normalizing Flow on a single GPU

We based our network on GLOW, which uses up to 40 GPUs to train for image generation. SRFlow needs only a single GPU to train for conditional image generation.

How to use Normalizing Flow for image manipulation

How to exploit the latent space of Normalizing Flow for controlled image manipulations

See many visual results

Compare GAN vs Normalizing Flow yourself. We've included many visual results in our paper.

ECCV 2020 Spotlight [Paper]

Code will follow [GitHub]

This is our first blog post ever. Did you get what you expected? Please click on ‘responses’ below and tell us what you think!

Source: https://medium.com/@CVZurich/why-i-stopped-using-gan-eccv2020-d2b20dcfe1d
